MEVconomics.wtf Part 2

Second part of MEVconomics.wtf, the 7-hour conference devoted to MEV. As part 2, a focus on Layer 2s seems appropriate

Video Overview

- What protocol economics look like in general

- Changes that have happened to both the Ethereum protocol and its applications

- Some of the issues in the future

Protocol economics

Political economic questions (1:00)

An example question about the behavior of the chain : if the chain is suddenly attacked, does the application run into problems, and does the attack affect any of the incentives ?

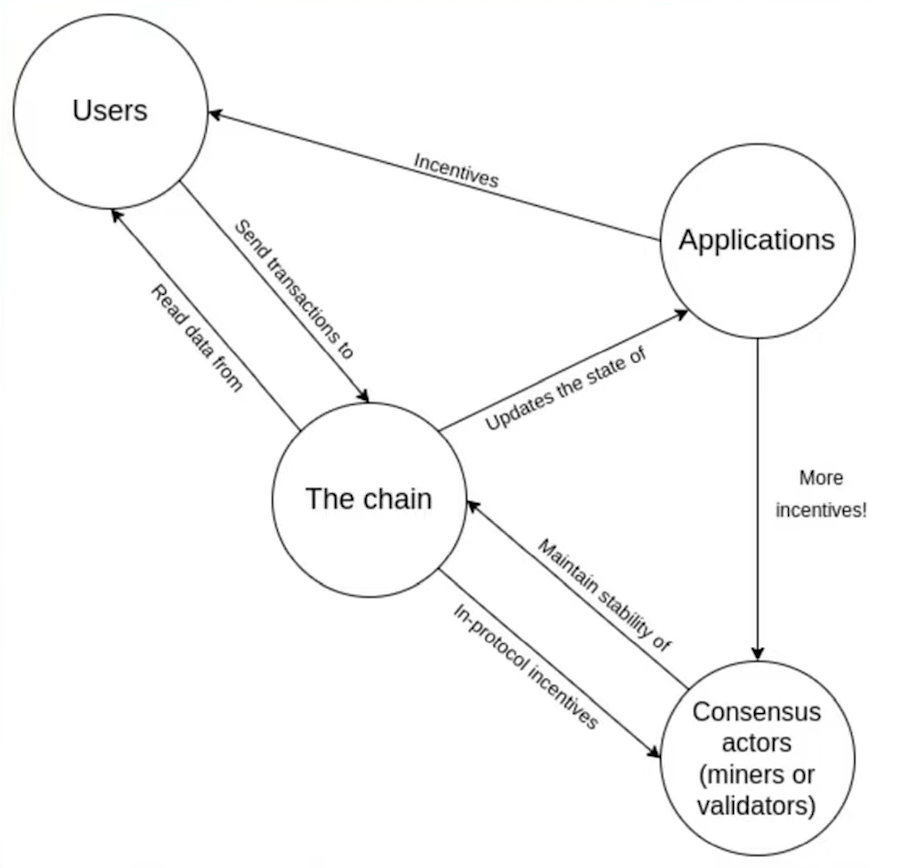

How the chain works

- Users send transactions to the chain and read data from the chain

- Applications are the reason why users send transactions, and they give incentives to users

- Consensus actors maintain chain stability. Mining rewards, validating rewards, penalties, and other rewards defined in-protocol give them an incentive to stick to their roles

Ultimately, MEV is about extra-protocol incentives that arise mostly accidentally, and how they end up affecting some of the incentives to run the chain.

The risks to watch for (3:00)

The main risk concerns validator incentives. We must ensure that validators follow the protocol rather than misbehave or attempt attacks on the chain. The battle between user incentives (transaction fees) and potential bribes to censor the chain is a concern.

Aside from that, economies of scale can lead to centralization. If consensus actors constantly face complex and shifting strategies, especially ones that require proprietary information, large pools end up dominating.

Incentives to have low latency imply geographic concentration and reliance on cloud computing. Both of these contribute to jurisdiction risks, which affect the credible neutrality of a blockchain, as we've seen over the past year (with OFAC-compliant blocks)

Changes that happened

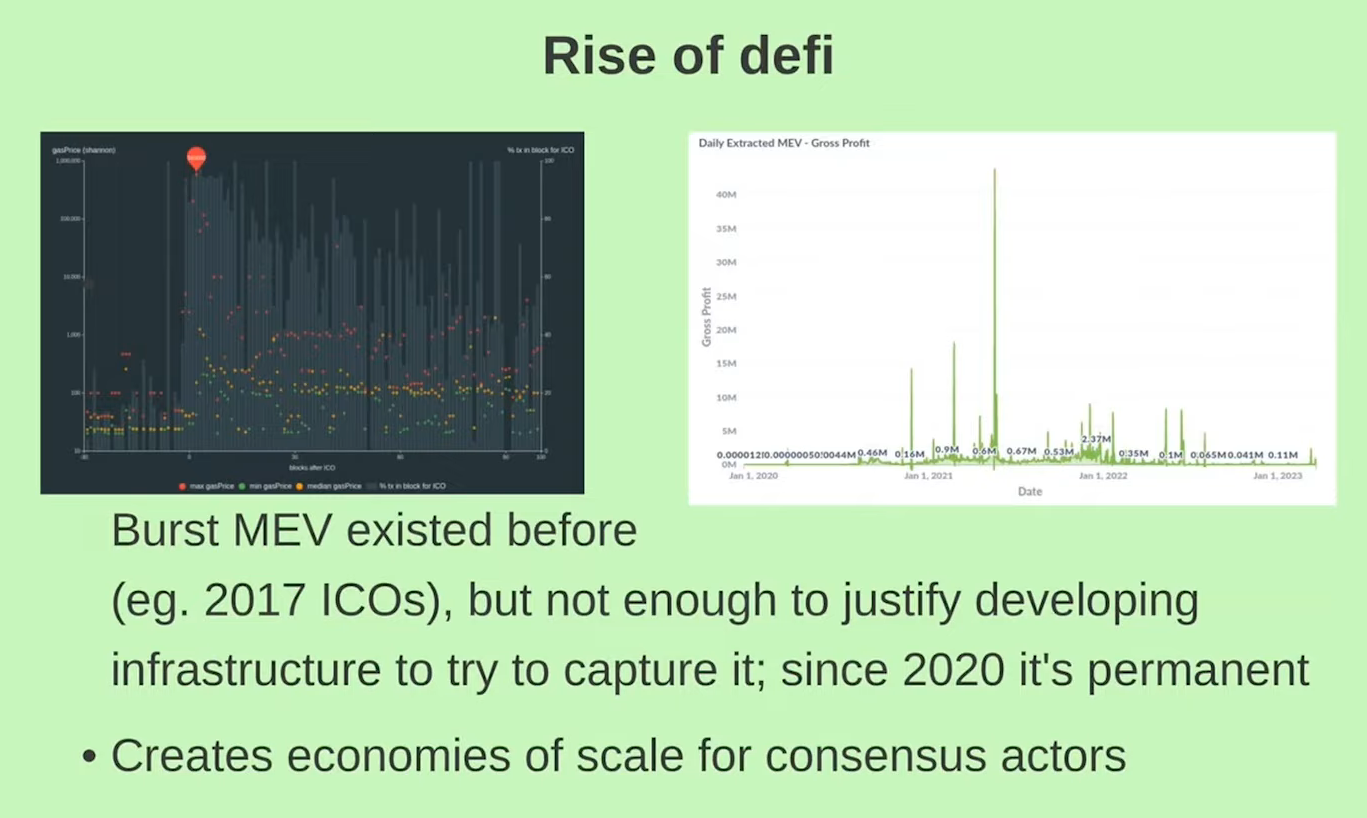

Rise of DeFi (6:45)

Opportunities for miners to profit from manipulating blocks have existed since 2017 with the famous ICOs, but there wasn't enough value to justify infrastructure costs to capture it.

Since 2020 and DeFi Summer, infrastructure costs to capture MEV became justified, as there are MEV opportunities in every block and bigger spikes than 2017.

This created the necessity for things like Proposer-Builder Separation (PBS) to try splitting up the economies of scale, so that the remaining roles would not rely so heavily on economies of scale.

Historical changes (8:45)

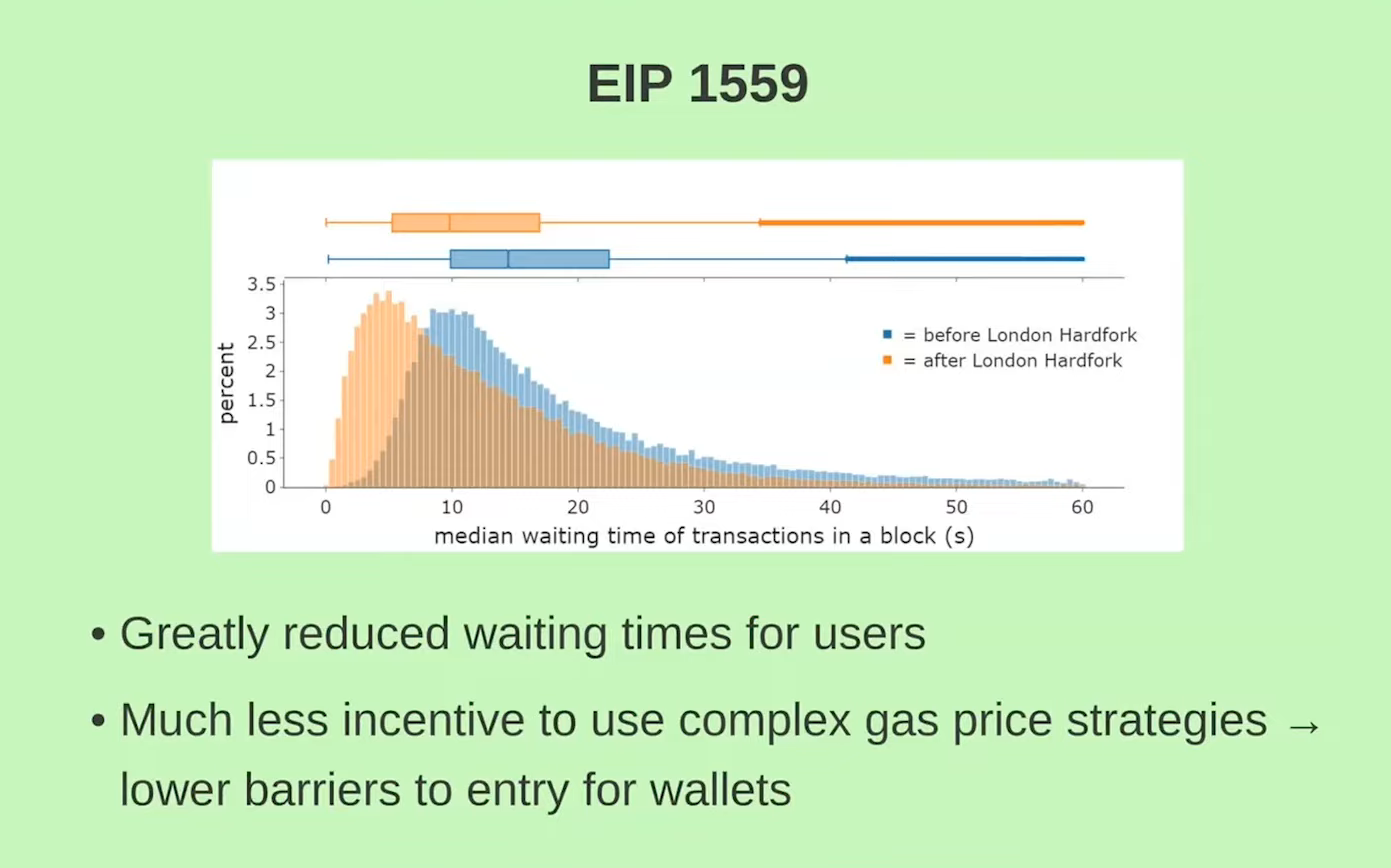

EIP-1559 has brought significant improvements to the user experience. As for the Merge, it's not yet clear whether it has been beneficial or detrimental to Ethereum's protocol economics, as it changed so many things at once

The long-term effects of the merge are still uncertain but will likely have a lasting impact on Ethereum

Upcoming changes

Withdrawals (11:30)

Withdrawals will be enabled in the next hard fork, which may reduce the total amount staked. On the other hand, people will become more confident about staking, since they can get their money back

Vitalik thinks the main practical consequence of this is going to be a change to the total amount of staking and possibly also a change to the composition of stakers

Single Secret Leader Elections (12:30)

Single secret leader elections ensure that the proposer's identity is only known to themselves before the block is produced, reducing the risk of denial-of-service attacks against proposals.

Proposers can voluntarily reveal their identity and prove that they will be the proposer.

Research question : are there unintended effects ? In particular, in combination with PBS, would proposers have incentives to pre-reveal their identity to anyone ?

Single slot finality (13:15)

Single slot finality means blocks are finalized immediately, instead of after two epochs (64 slots)

It may increase reorg security and make Ethereum more bridge-friendly, except for special cases like inactivity leak.

But as we'll get into with layer 2 pre confirmations, single slot finality will not be de facto single slot for a lot of users and Vitalik thinks that's going to be an interesting nuance.
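As a rough sanity check on the figures above, the following sketch compares today's finality delay with single slot finality. It uses the Ethereum mainnet parameters (12-second slots, 32 slots per epoch); the function name is purely illustrative, and the calculation ignores where within an epoch a block lands.

```python
# Ethereum mainnet consensus parameters.
SECONDS_PER_SLOT = 12
SLOTS_PER_EPOCH = 32


def finality_delay_seconds(slots_to_finality: int) -> int:
    """Time to finality, given how many slots finalization takes."""
    return slots_to_finality * SECONDS_PER_SLOT


# Today: roughly two epochs, i.e. 64 slots.
current_delay = finality_delay_seconds(2 * SLOTS_PER_EPOCH)

# Single slot finality: one slot.
single_slot_delay = finality_delay_seconds(1)
```

Under these assumptions, `current_delay` is 768 seconds (12.8 minutes), against 12 seconds for a single slot. A pre-confirmation that arrives in well under a second is still much faster than either, which is why the two tiers remain distinct for users.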

Layer 2 and protocol economics

The impact on layer 1 economics depends on how layer 2 solutions are implemented :

- Sequencing : with "Based Rollups", layer 1 does the sequencing for the rollup (more info in Justin Drake's post), while "L2-controlled" means the Layer 2 has its own sequencer

- Percentage of Layer 1 DeFi moving to Layer 2 : no impact if no Layer 1 DeFi moves, significant impact if all of it does

- Layer 2 pre-confirmations, protocol economics, and interaction effects are also important considerations.

Who can submit a batch ? (15:15)

Based rollups involve batches of transactions committed to layer 1. There are different approaches to sequencing transactions in rollups.

For example, the "Total anarchy" approach allows anyone to submit a batch at any time. PBS reduces costs and makes the total anarchy approach viable, but it has important drawbacks, such as wasted gas.
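To illustrate where the wasted gas comes from, here is a minimal sketch of a "total anarchy" rollup settling one layer 1 block. The `Submission` type, the `settle_block` function and the gas figures are hypothetical; the only rule modeled is that the first valid submission for the expected batch wins, while every other submission still pays for its gas.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Submission:
    submitter: str
    batch_id: int   # which L2 batch this submission tries to commit
    gas_used: int   # gas paid on L1, whether or not the batch is accepted


def settle_block(
    submissions: List[Submission], next_batch_id: int
) -> Tuple[Optional[Submission], int]:
    """Process submissions in L1 block order.

    Only the first submission for the expected batch is accepted.
    Duplicates and stale batches are rejected, and their gas is wasted.
    """
    accepted = None
    wasted_gas = 0
    for sub in submissions:
        if accepted is None and sub.batch_id == next_batch_id:
            accepted = sub
        else:
            wasted_gas += sub.gas_used
    return accepted, wasted_gas
```

With three submitters racing for the same batch, two of them pay gas for nothing. A builder under PBS can simply include one submission per block, which is how PBS reduces this cost.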

Other approaches include centralized sequencers, DPoS voting, and random selection. So there are a lot of options that are not based... The question is : do rollups want to be based or not ?

Could Layer 2s absorb "complex" Layer 1 MEV ? (17:00)

No if :

- Rollups are based. The PBS system just does sequencing for Layer 2, so Layer 2 MEV becomes Layer 1 MEV.

- A large part of L1 DeFi stays on L1

- Rapid L1 transaction inclusion helps L2 arbitrage in other ways.

Otherwise...Maybe ?

Fraud and censorship (17:45)

If you can censor a chain for a week AND it doesn't socially reorg you, then you can steal from optimistic rollups (Optimism 👀)

That said, much shorter-term censorship attacks on DeFi also exist, and they allow huge MEV attacks on all kinds of DeFi projects. We talk about Layer 2s here, but this is a problem on Layer 1 too
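The week-long attack on optimistic rollups can be stated as a simple condition on the fraud-proof challenge window. The sketch below assumes a one-week window, as in the example above; the function and parameter names are hypothetical, and social recovery (a reorg by the community) is deliberately left out.

```python
CHALLENGE_WINDOW_SECONDS = 7 * 24 * 60 * 60  # assumed one-week window


def invalid_root_finalizes(
    censored_from: int,
    censored_until: int,
    posted_at: int,
    window: int = CHALLENGE_WINDOW_SECONDS,
) -> bool:
    """Return True if an invalid state root posted at `posted_at` is never challenged.

    That happens only when fraud proofs are censored for the entire
    challenge window, from the moment the root is posted to the deadline.
    """
    deadline = posted_at + window
    return censored_from <= posted_at and censored_until >= deadline
```

Censoring for even one second less than the window leaves room for a single fraud proof to land, which is why the attack needs sustained censorship and no social reorg.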

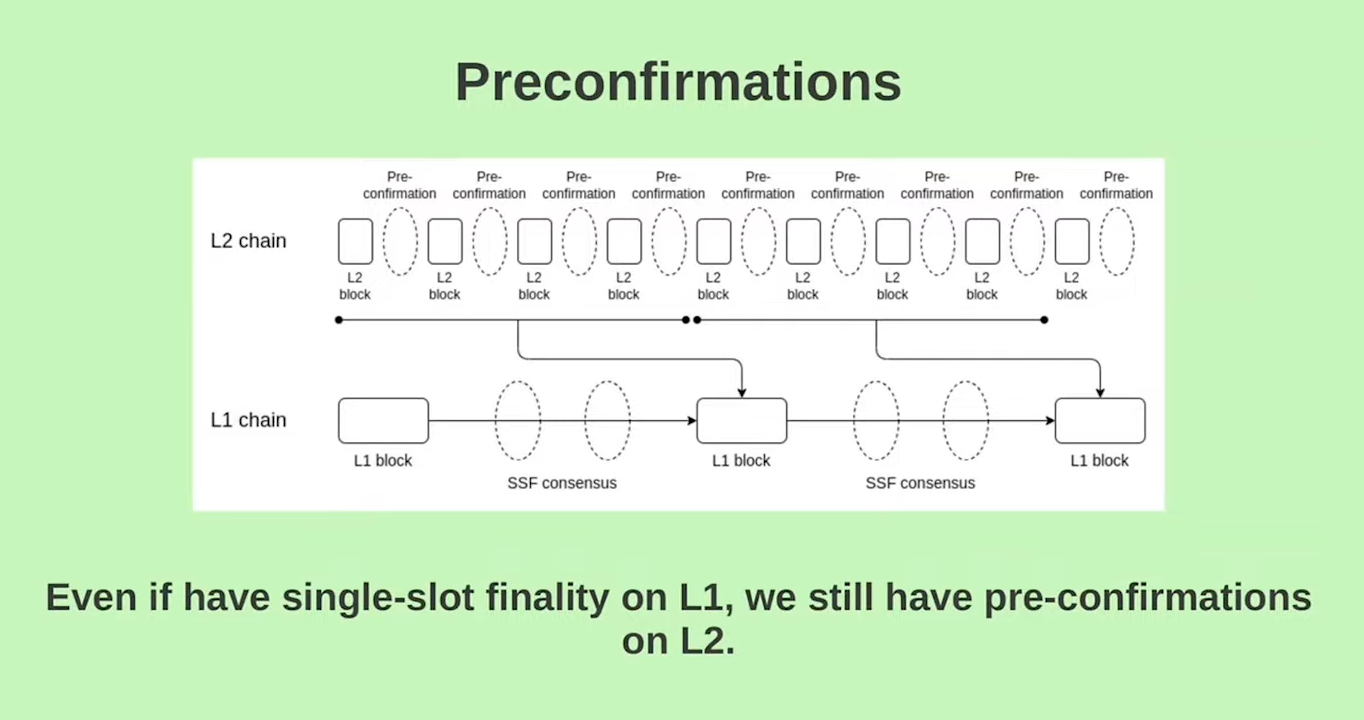

Preconfirmations (18:30)

Users generally prefer confirmations faster than the standard 12 seconds. Many rollup protocols are considering pre-confirmations to address this issue.

The idea is to have an off-chain pre-confirmation protocol that confirms layer 2 blocks before submitting them to layer one. This process involves verifying a compact proof of the pre-confirmation in a layer 1 block.

With this approach, users experience a three-step confirmation process :

- Acceptance of transaction

- Pre-confirmation of layer 2 block

- Final confirmation on layer 1
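The three steps above can be sketched as an ordered status that only moves forward. The enum and helper names are hypothetical, chosen for illustration only.

```python
from enum import IntEnum


class Confirmation(IntEnum):
    ACCEPTED = 1       # the transaction was accepted
    PRECONFIRMED = 2   # the layer 2 block containing it was pre-confirmed
    FINAL_ON_L1 = 3    # the batch was confirmed on layer 1


def advance(status: Confirmation) -> Confirmation:
    """Move to the next confirmation level; the final level is absorbing."""
    if status is Confirmation.FINAL_ON_L1:
        return status
    return Confirmation(status + 1)


def satisfies(status: Confirmation, required: Confirmation) -> bool:
    """An application picks the level it needs; higher levels also satisfy it."""
    return status >= required
```

A wallet might show a payment as done at `PRECONFIRMED`, while a bridge would presumably wait for `FINAL_ON_L1`.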

Single-Slot Finality at layer 1 becomes more feasible when most users rely on pre-confirmations in layer 2. However, there will always be a distinction between the confirmation speeds

Free confirmation (19:45)

Having two tiers of confirmation is likely inevitable due to user expectations regarding transaction confirmations over time

Pre-confirmations in layer 2 are incompatible with based rollups, because the submission protocol must respect the pre-confirmation mechanism. If a set of layer 2 blocks does not adhere to pre-confirmations, it will be rejected.
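A minimal sketch of the rejection rule, assuming pre-confirmations are represented as an ordered list of layer 2 block hashes. This representation and the function name are illustrative assumptions; a real protocol would verify a compact proof rather than compare lists.

```python
from typing import List


def batch_respects_preconfirmations(
    preconfirmed: List[str], batch: List[str]
) -> bool:
    """Accept a batch only if it starts with the pre-confirmed blocks, in order.

    Anything after the pre-confirmed prefix is new, not yet pre-confirmed data.
    """
    return batch[: len(preconfirmed)] == preconfirmed
```

This is the constraint that "anyone can submit anything" sequencing cannot offer on its own: the submission protocol has to know about, and enforce, what was promised off-chain.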

Conclusion (21:00)

Decentralizing Sequencers...

...Wait, it's all PBS, always has been

Every rollup has a decentralization roadmap. However, the way sequencing works on layer 2 combines the equivalents of a layer 1 proposer and a layer 1 block builder in the same role

This creates a variety of problems unless we start to address it by separating these two roles on layer 2, just like we did on layer 1 with Proposer-Builder Separation

Even with PBS on L2, we face challenges like privacy, Cross-domain MEV and Latency that require decentralizing the builder role.

Decentralizing rollup sequencers

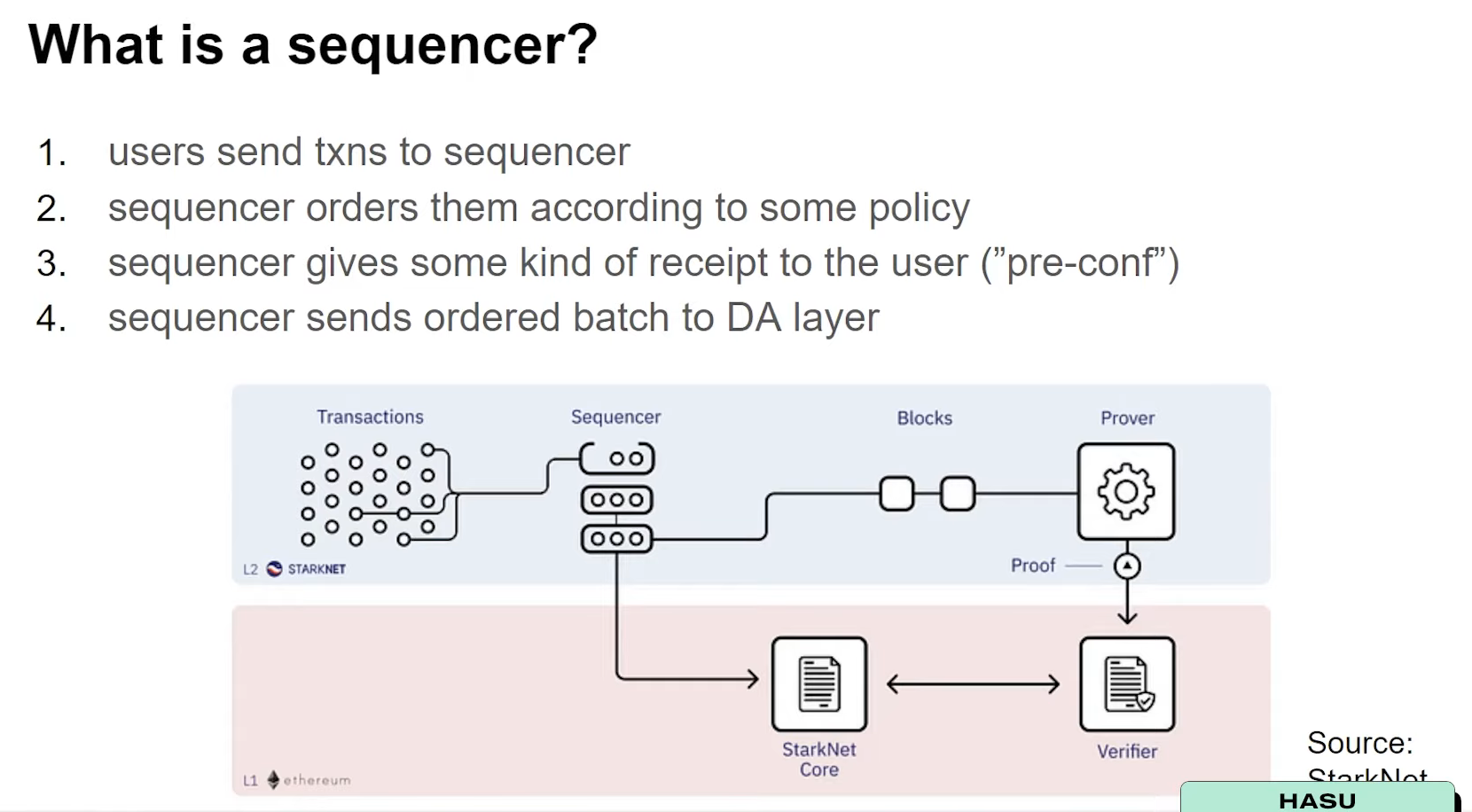

What is a sequencer ? (1:30)

There are basically 4 steps involved in a sequencer, which work in pretty much every Layer 2 :

Depending on the Layer 2, there might be some small differences. For example, in Starknet, the sequencer is also the prover so they have even more responsibility, but all of these can be stripped out over time

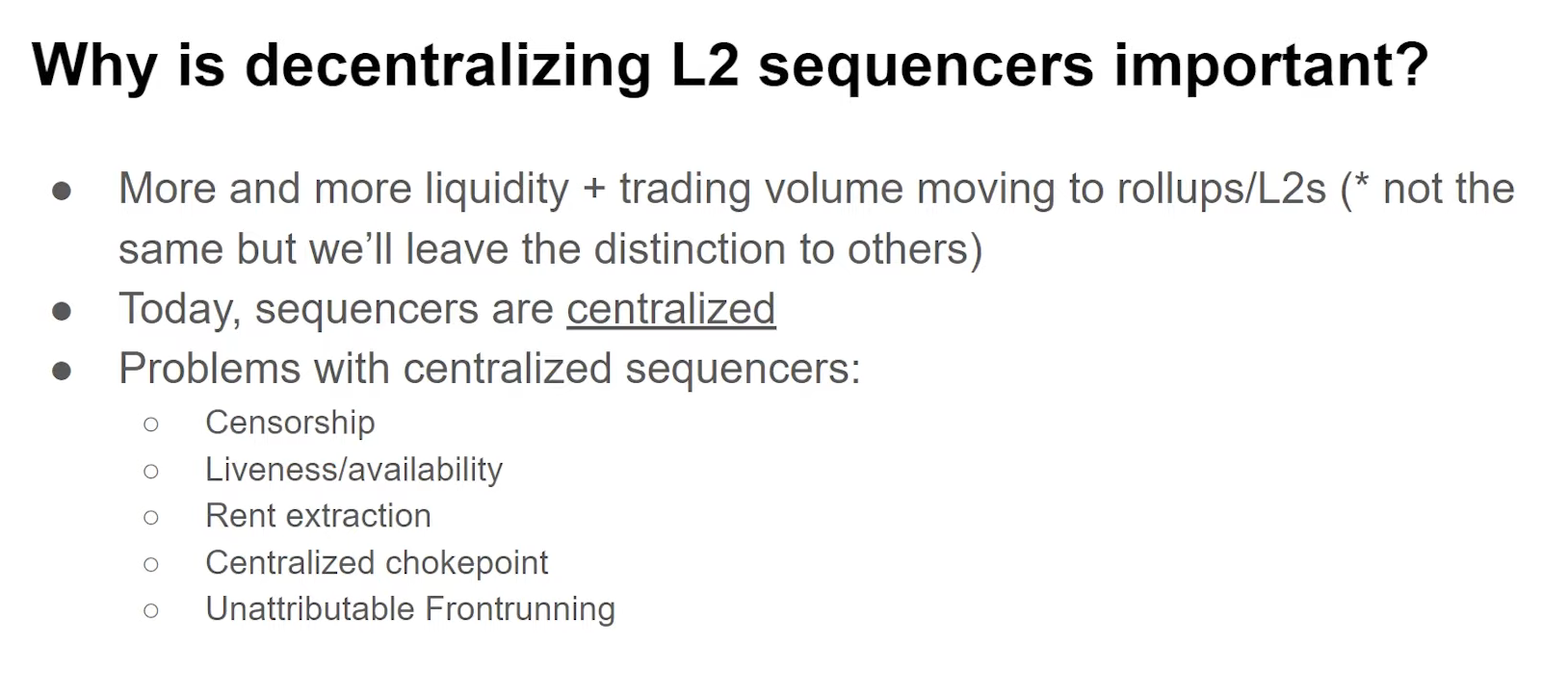

Why is decentralizing L2 sequencers important ? (2:30)

We haven't even started to imagine the end state of Ethereum scaling. Currently, rollup providers aren't just focused on building their own rollups. They have all pivoted to a kind of Rollups-as-a-Service.

Therefore, Hasu thinks it's possible to see a future where spinning up a blockchain will look like spinning up a smart contract today, with numerous roll-ups functioning as independent blockchains. The problem is, all of these sequencers are centralized.

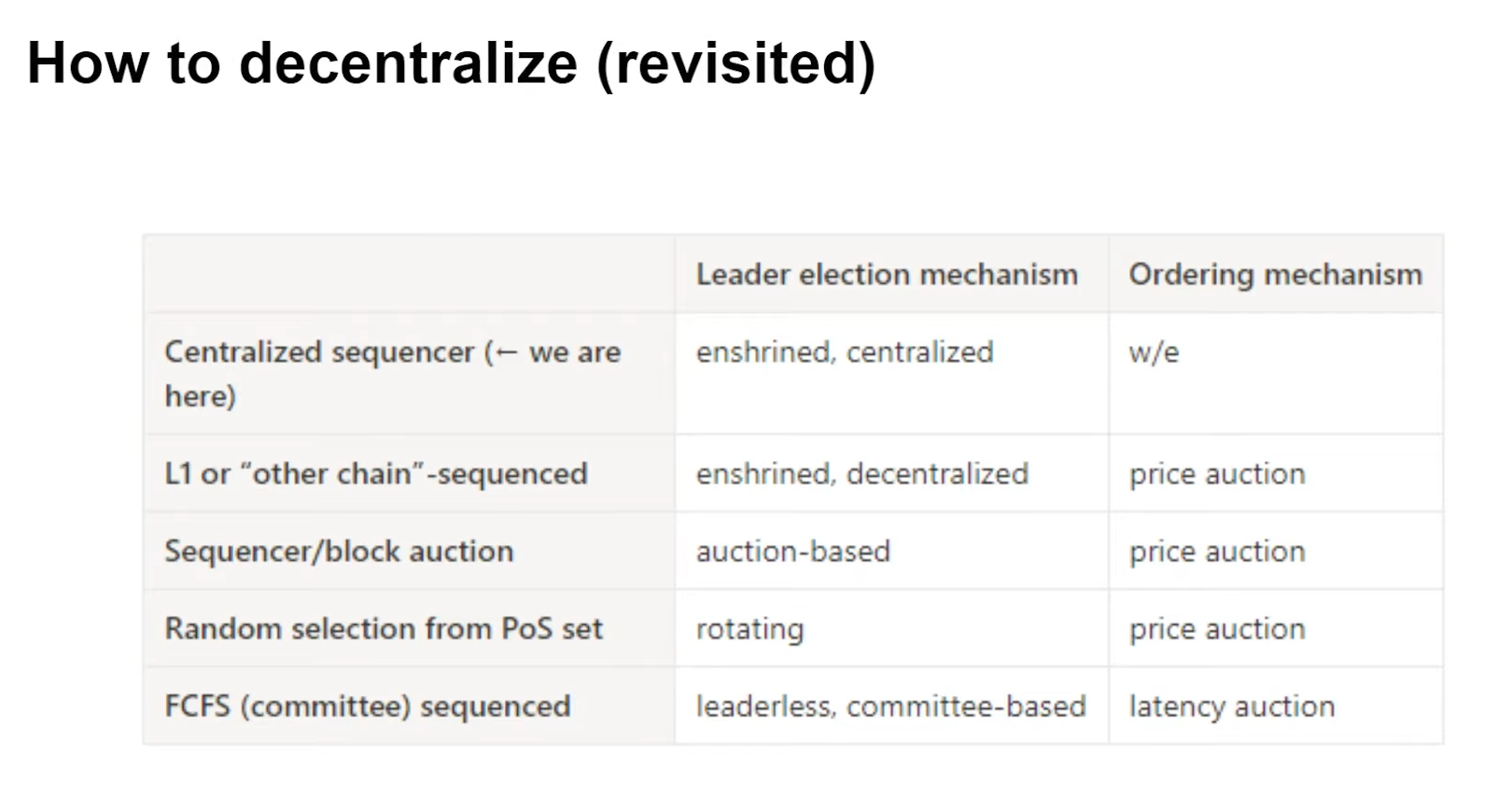

How to decentralize ? (4:15)

- The first proposal is "Based Rollups" (sequenced by L1 or another chain), as Vitalik mentioned before

- Shared sequencing, where one blockchain sequences multiple others (Espresso style)

- Sequencer/block auction, where the right to propose the next block or next series of blocks is auctioned off every now and then (Optimism style)

- Random selection from Proof-of-Stake set, where you have basically a consensus mechanism on top of your rollup and a proposer is selected randomly (Stargate style)

- Various committee based solutions, the most common is "first-come, first-served"

Sequencer = L1 Proposer + L1 Builder

If we look more closely, we can see that there are at least 2 components to the sequencer :

- Leader election mechanism (Proposing)

- Ordering mechanism (building)
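The decomposition can be made concrete by treating the two components as independent, swappable functions. This is only a sketch: the function names and the transaction fields (`id`, `fee`, `arrival`) are hypothetical, and the two election rules are simplified stand-ins for the auction and Proof-of-Stake proposals.

```python
import random
from typing import Callable, Dict, List


def elect_by_stake(stakes: Dict[str, int], rng: random.Random) -> str:
    """Leader election (proposing): pick a proposer with probability proportional to stake."""
    names = list(stakes)
    return rng.choices(names, weights=[stakes[n] for n in names], k=1)[0]


def elect_by_auction(bids: Dict[str, int]) -> str:
    """Leader election (proposing): the highest bidder wins the right to propose."""
    return max(bids, key=bids.get)


def order_fcfs(txs: List[dict]) -> List[dict]:
    """Ordering (building): first come, first served."""
    return sorted(txs, key=lambda tx: tx["arrival"])


def order_by_fee(txs: List[dict]) -> List[dict]:
    """Ordering (building): highest fee first."""
    return sorted(txs, key=lambda tx: tx["fee"], reverse=True)


def sequence(leader: str, txs: List[dict], order: Callable) -> dict:
    """A sequencer is a leader election result plus an ordering mechanism."""
    return {"proposer": leader, "txs": [tx["id"] for tx in order(txs)]}
```

Any election rule can be combined with any ordering rule, which is the point of treating them separately: decentralizing the first says nothing about who controls the second.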

How to decentralize revisited (6:00)

The proposals put forward actually have different leader election mechanisms and different ordering mechanisms. But we also see that current proposals focus mainly on leader election rather than on ordering.

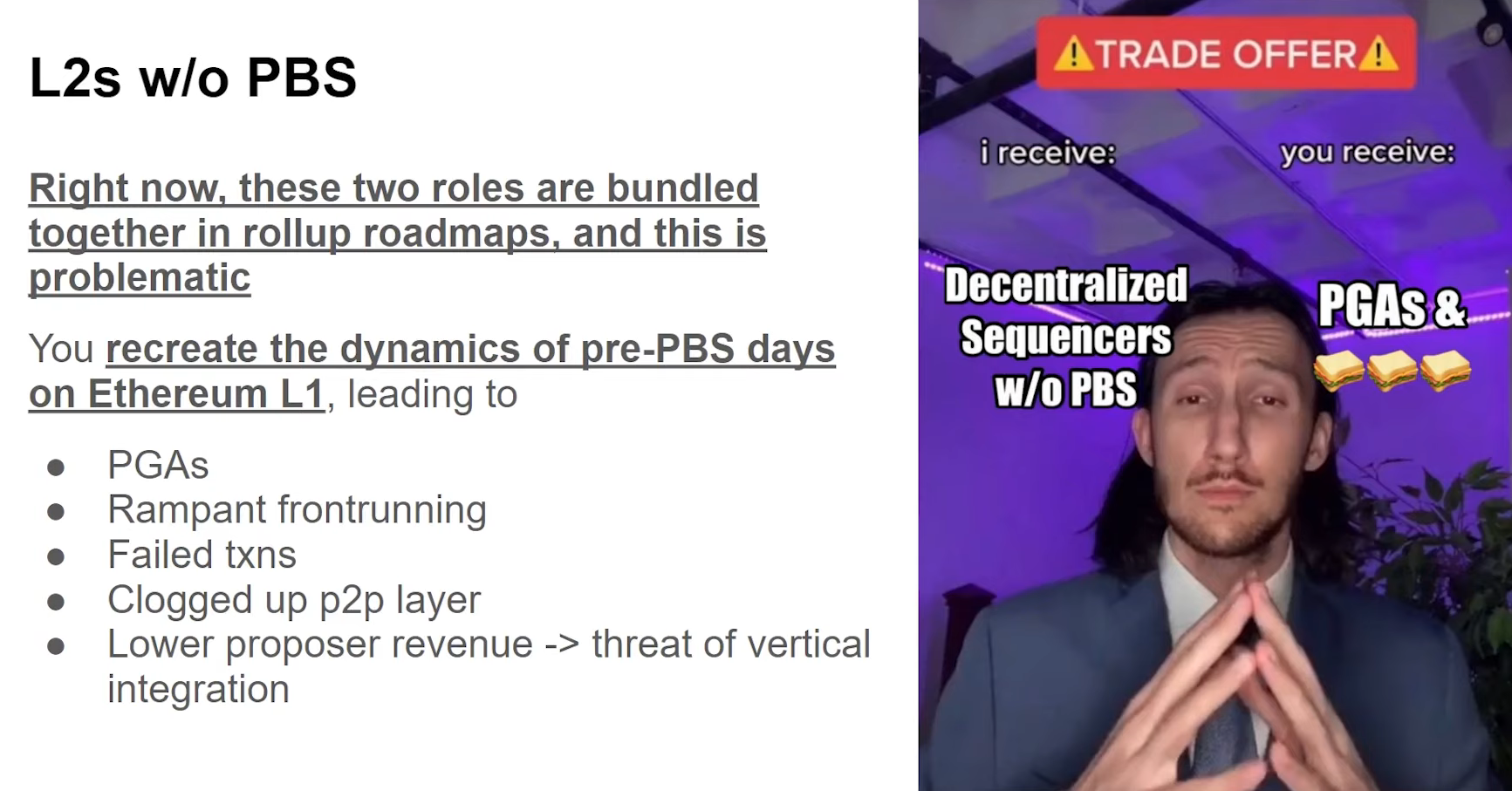

This is a problem, because if we build Layer 2s without PBS, we recreate on these rollups the dynamics of Ethereum layer 1 in its pre-PBS days.

Put another way, all these problems that we talked about two years ago on Ethereum are now back on rollups, and worse than before because MEV is a big deal today.

Hasu thinks it's more accurate to talk about proposer as a service because that's what it really is. We need to start separating proposer and builder roles on layer 2, just like we did on layer 1

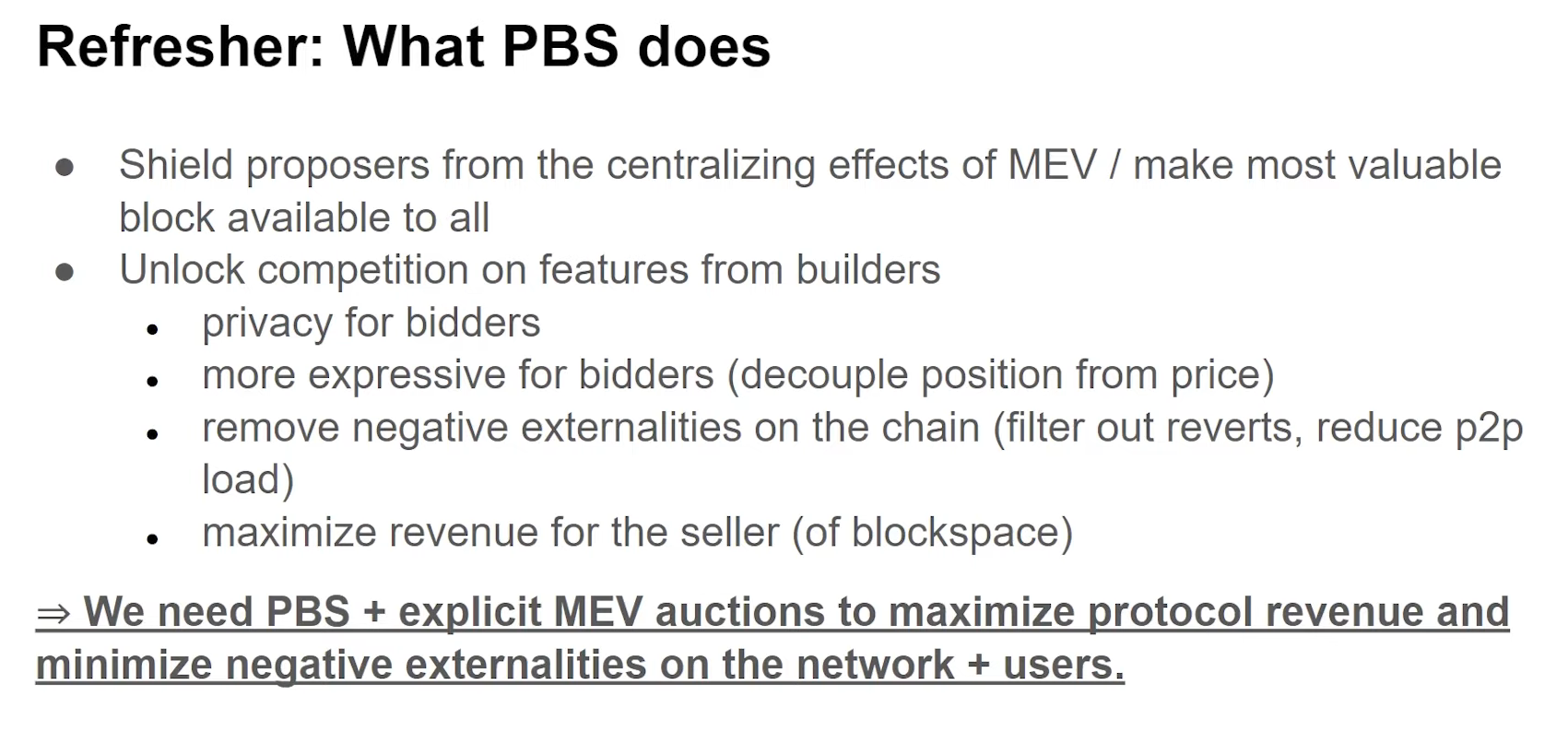

What PBS does (8:00)

Before PBS, we were only able to express basically these two dimensions through a single unit, which was the gas price.

With PBS, whether it's a small solo validator or it's a big staking pool like Lido or Coinbase, they all make the same from MEV and that's a huge achievement

PBS on Layer 2 faces novel challenges

Privacy (9:45)

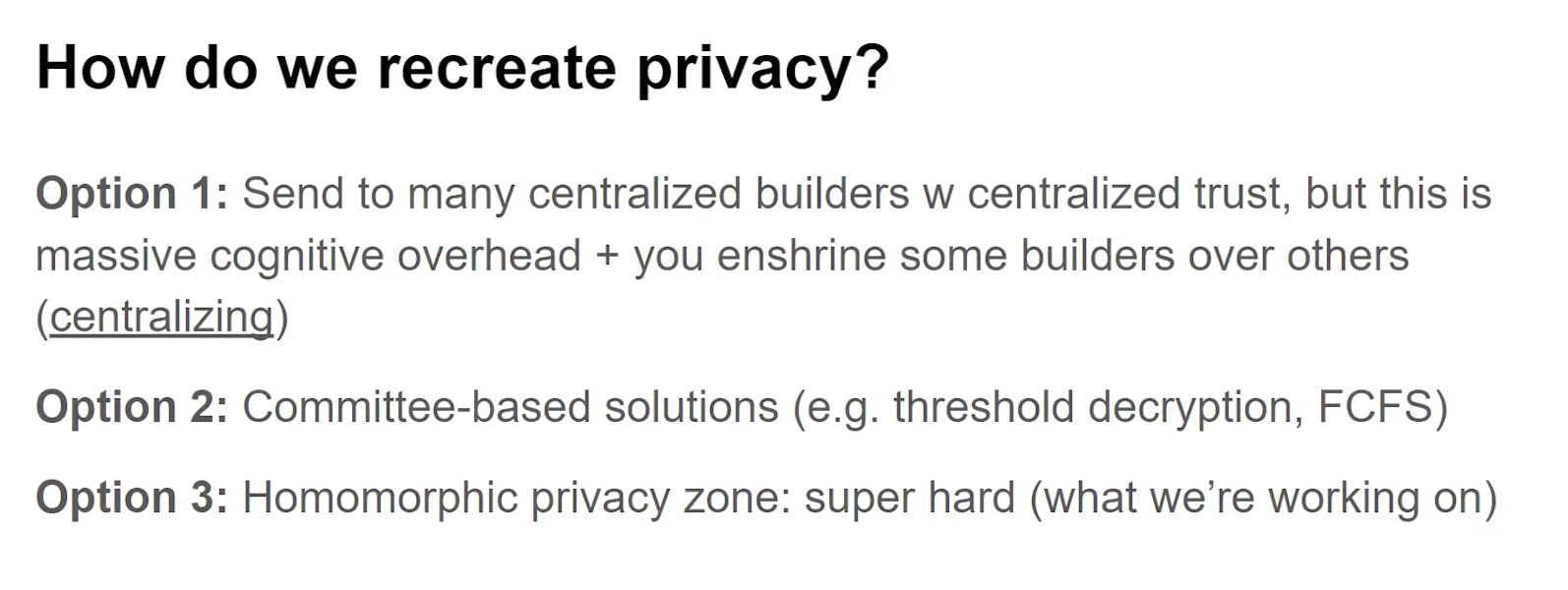

Privacy is probably the biggest challenge when decentralizing the sequencer. Centralized sequencers make privacy feel easy, but decentralizing it is "hard af". We have a very hard choice : do we remove privacy or decentralize it ?

Both paths are very hard and it's not clear that either of them is necessarily better or easier than the other.

- At least these centralized block builders are competing with each other in a sort of competitive market, but you need to decide which builder you trust, and which builder you don't trust

- Threshold encryption or first come first serve models can be used but may still be centralized.

- Sharing the same type of privacy among different parties can provide decentralized privacy.

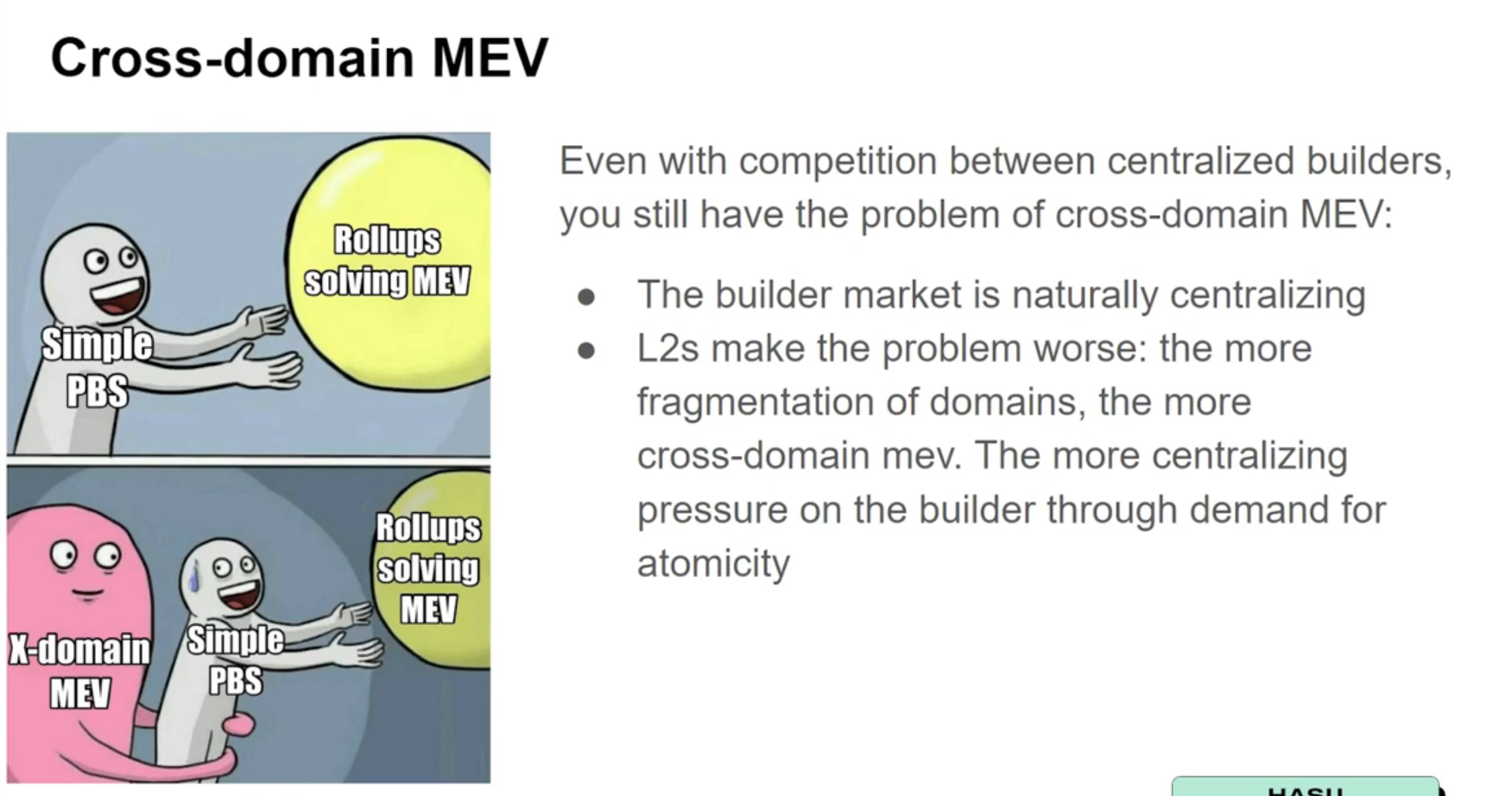

Cross-domain MEV (13:00)

The builder market is already naturally very centralizing due to economies of scale. But the mere existence of Layer 2s makes the problem worse because of liquidity fragmentation.

Latency (13:45)

It turned out that users liked faster confirmations so much that rollups had to switch to a centralized sequencer with faster pre-confirmations. This demand for low latency is a major obstacle to decentralization.

We need our systems to be insensitive to latency. If we lower the block time too much, we discourage participation from anyone who is not able to co-locate in the same geographical area.

PBS is essential but not enough

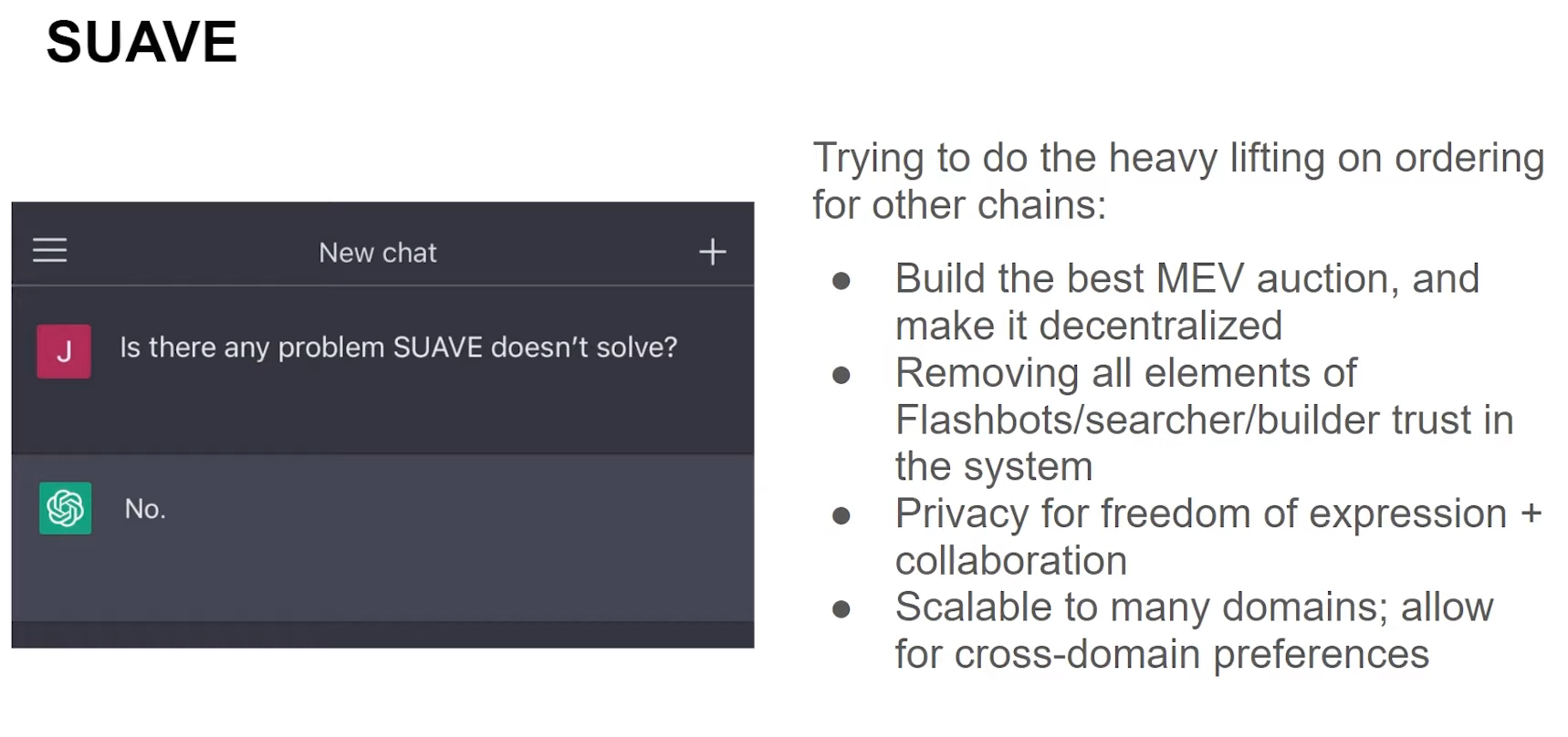

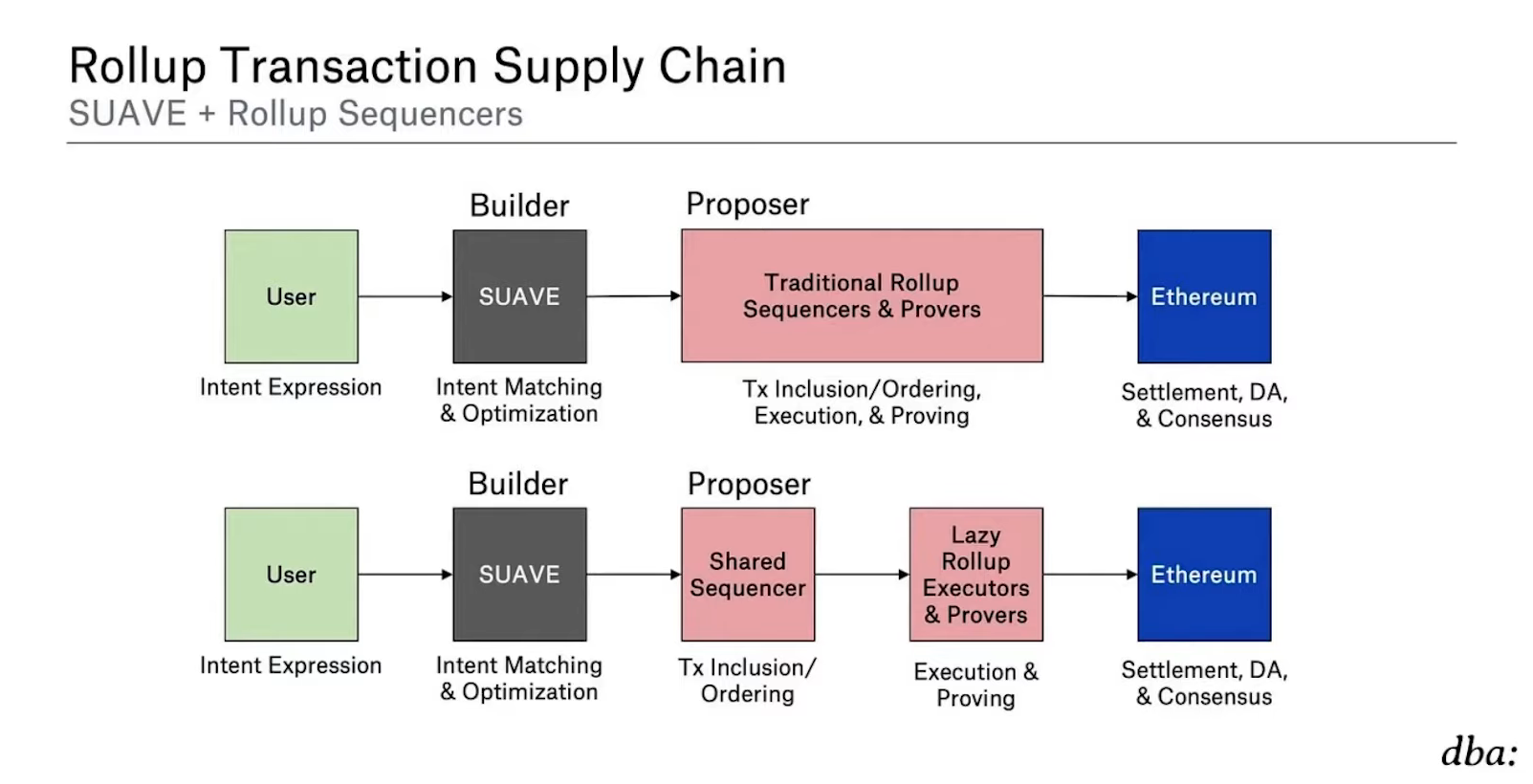

SUAVE (14:30)

We also need to look into decentralizing the builder role itself, and Hasu introduced SUAVE as a proposal aimed at doing exactly that.

In the rollup endgame, we're going to need the decentralized sequencer, going to need the decentralized builder, and we're going to need the private mempool, otherwise it's not going to work out

Conclusion (16:30)

Remember : Sequencer = L1 Proposer + L1 Builder. Separation is great, but we must not ignore the builder part, as we need to decentralize both.

MEVconomics in L2

A closer look at the Sequencer's role

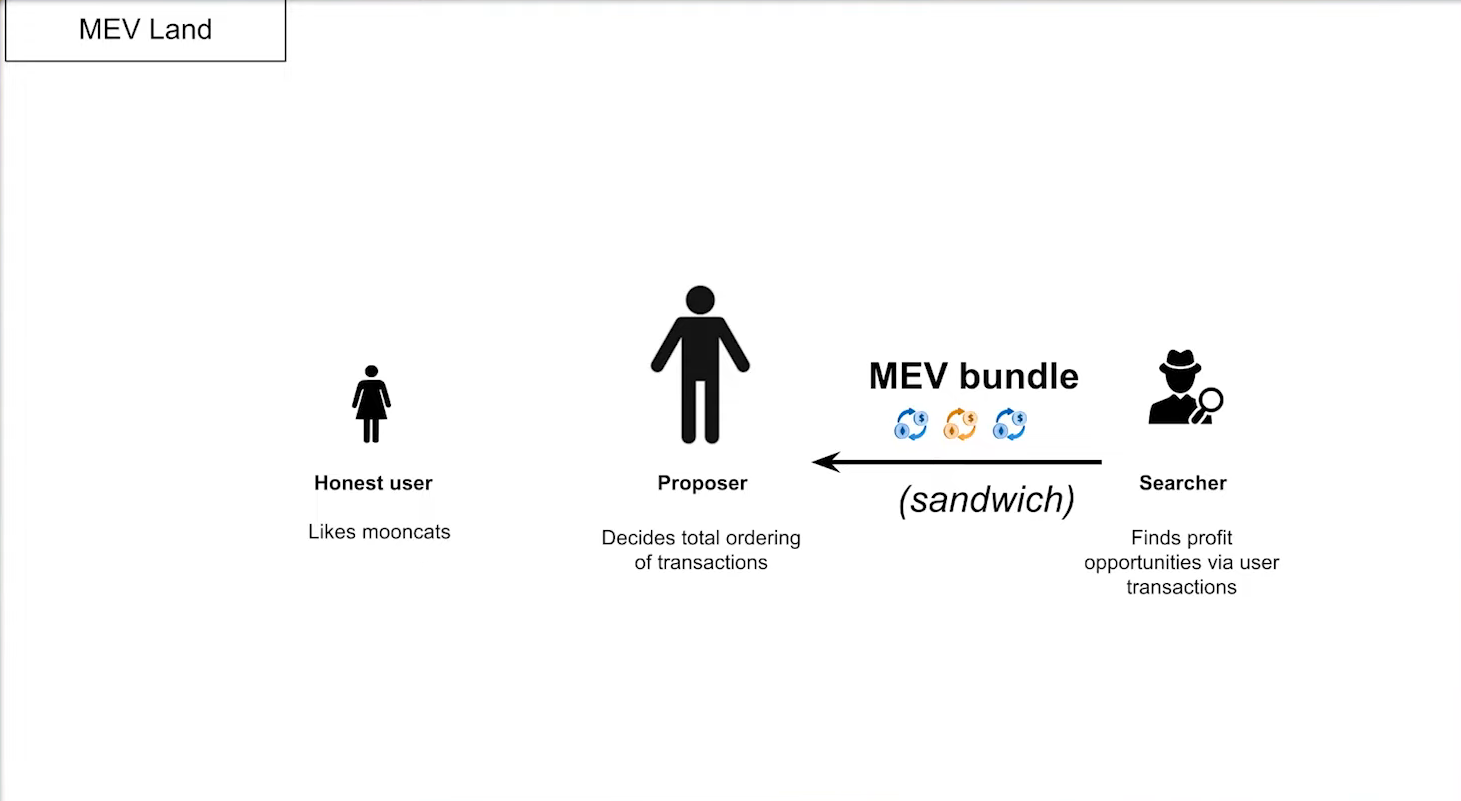

The MEV Land

MEV Land population (0:45)

Three agents are involved :

- Honest user : All the users want is to buy/sell their tokens.

- Proposer : Orders pending transactions and decides their final ordering in the next block

- Searcher : The MEV Bots that look for MEV opportunities, bundle them up and pay proposers to include them.

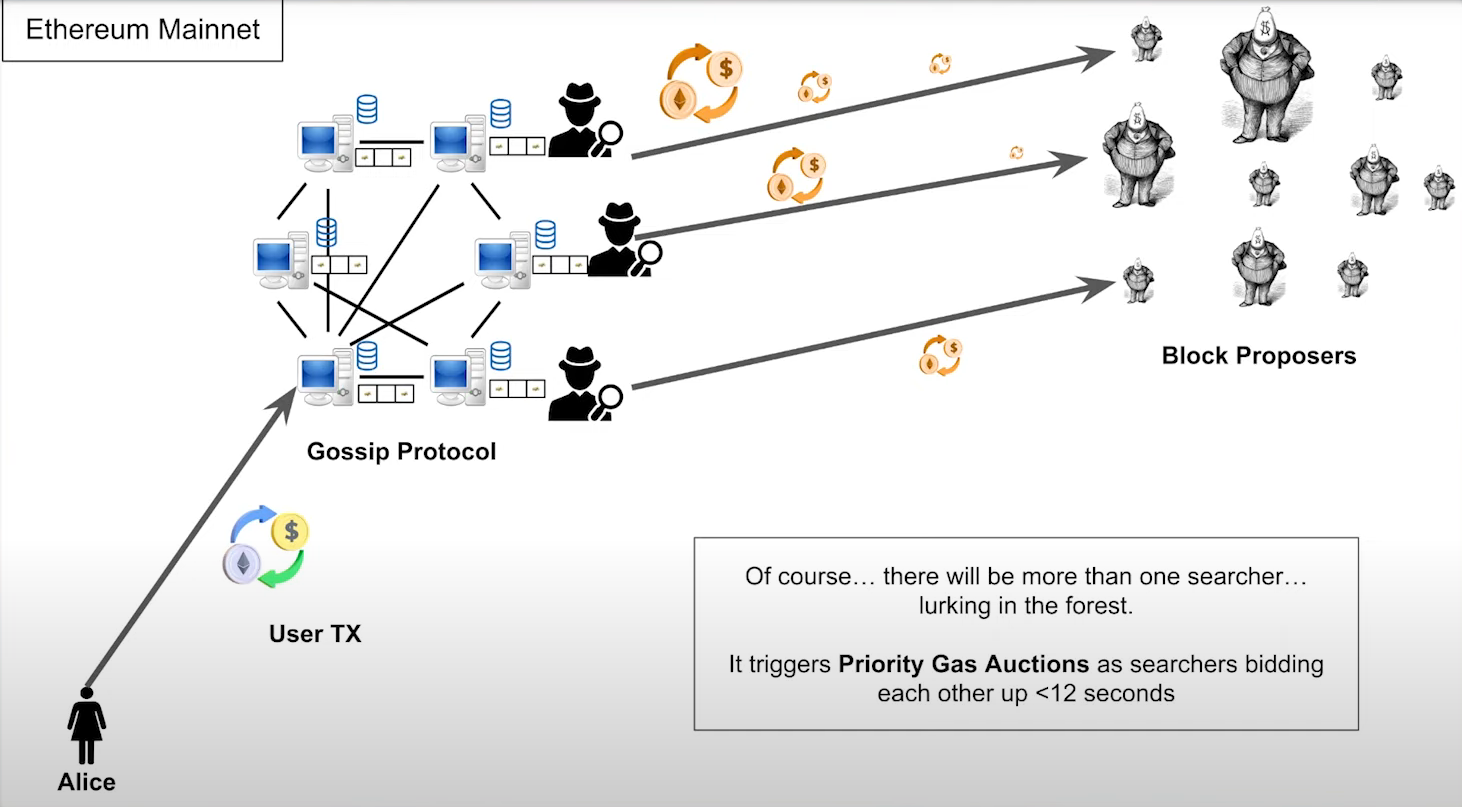

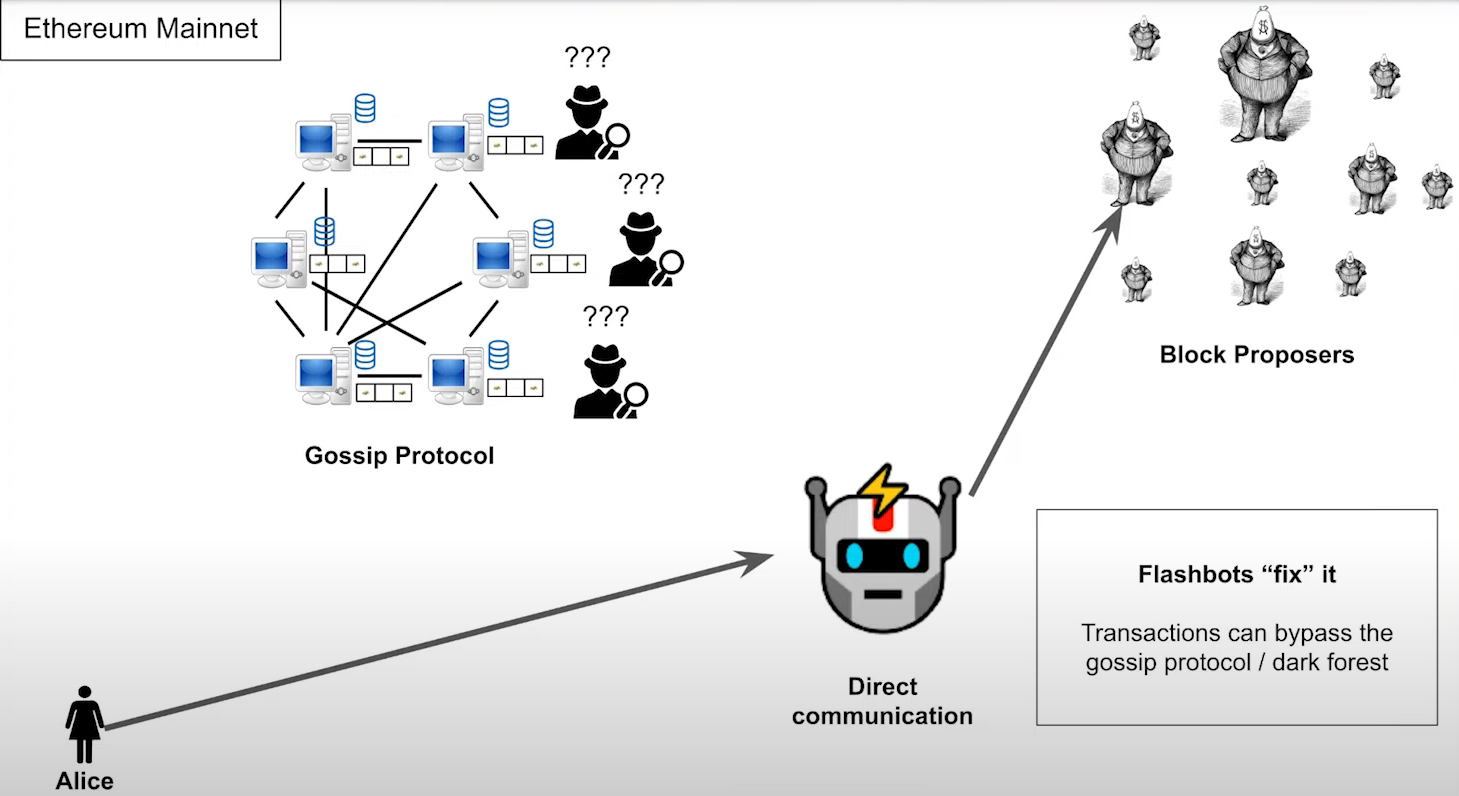

Life Cycle of a transaction before flashbots (1:15)

How does a user's transaction reach the proposer over the peer-to-peer network ?

- Alice sends a transaction to a peer

- Within 1-2 seconds, every peer in the network gets a copy of this transaction, including the proposers

- They'll take this transaction and hopefully include it in their block.

Problem : this is a dark forest. Anyone could be on the network, including a searcher, who could listen for the user's transaction, inspect it, find an MEV opportunity and then front-run the user to steal the profit. This leads to "Priority Gas Auctions", where searchers bid each other up.

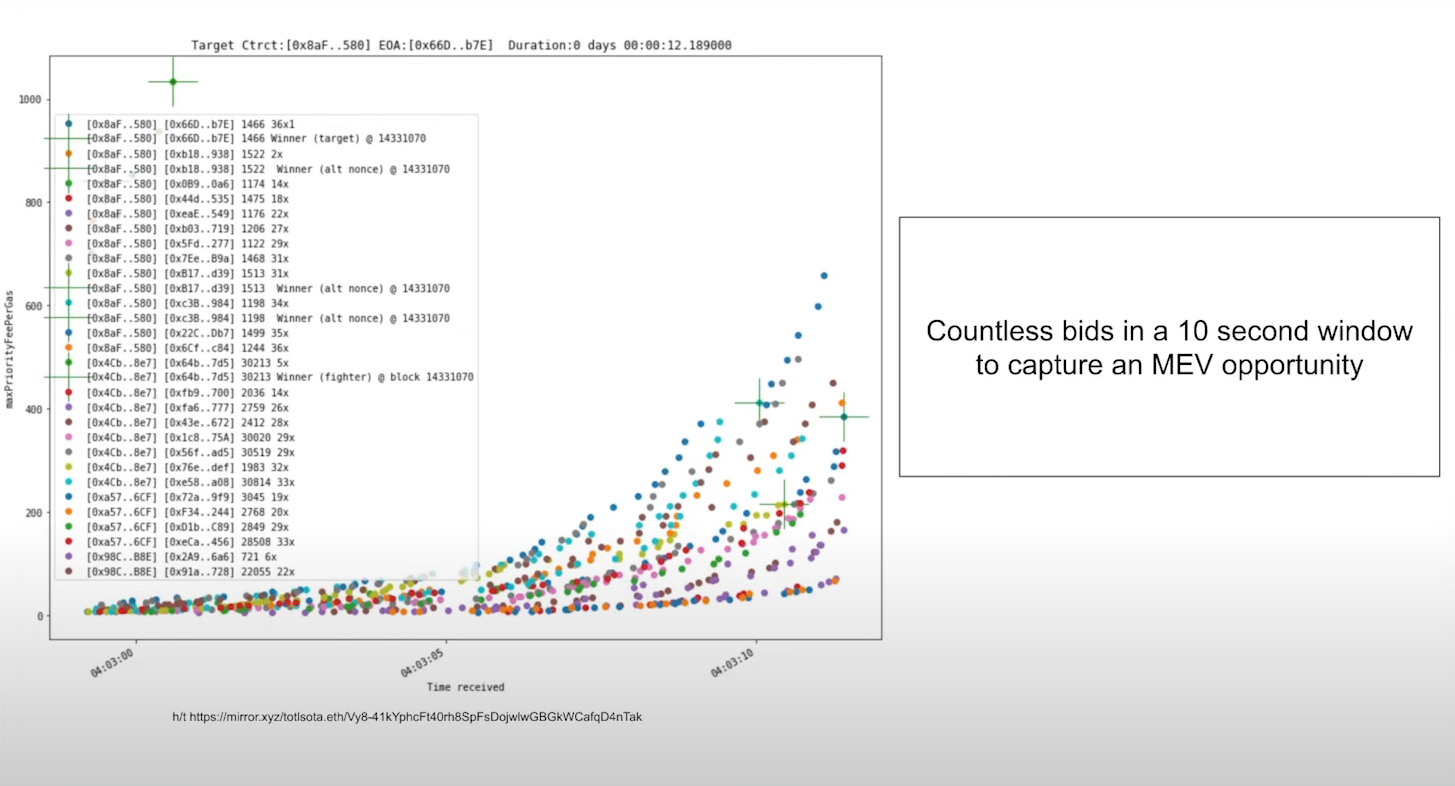

We can see in the graph that hundreds of transactions are sent within a ten-second period. There are 2 issues with this :

- Priority Gas Auctions are wasteful, as there are lots of failed transactions

- MEV is unrestricted. We're taking the user's transaction, throwing it to the wolves and just hoping it gets to the other side
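The wastefulness of a Priority Gas Auction can be seen in a toy model where searchers keep outbidding each other until the next bid would exceed the value of the opportunity. The 10% minimum bump and the function name are assumptions made for illustration, not measured parameters.

```python
from typing import Optional, Tuple


def priority_gas_auction(
    opportunity_value: float, opening_bid: float, bump: float = 0.10
) -> Tuple[Optional[float], int]:
    """Simulate escalating bids for a single MEV opportunity.

    Each new bid must beat the previous one by `bump`. Bidding stops once a
    bid would cost more than the opportunity is worth.
    Returns (winning_bid, number_of_outbid_transactions).
    """
    bids = []
    bid = opening_bid
    while bid <= opportunity_value:
        bids.append(bid)
        bid *= 1 + bump
    if not bids:
        return None, 0
    # Only the last bid captures the opportunity; all earlier ones were outbid.
    return bids[-1], len(bids) - 1
```

For an opportunity worth 100 with an opening bid of 1, this model produces 48 outbid transactions before a single winner emerges, and the winning bid eats almost the entire profit.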

Life Cycle of a transaction after flashbots (3:30)

Flashbots came as a solution to address unrestricted MEV by allowing users to send transactions directly to Flashbots, which gave them directly to the block proposer instead of going through the peer-to-peer network.

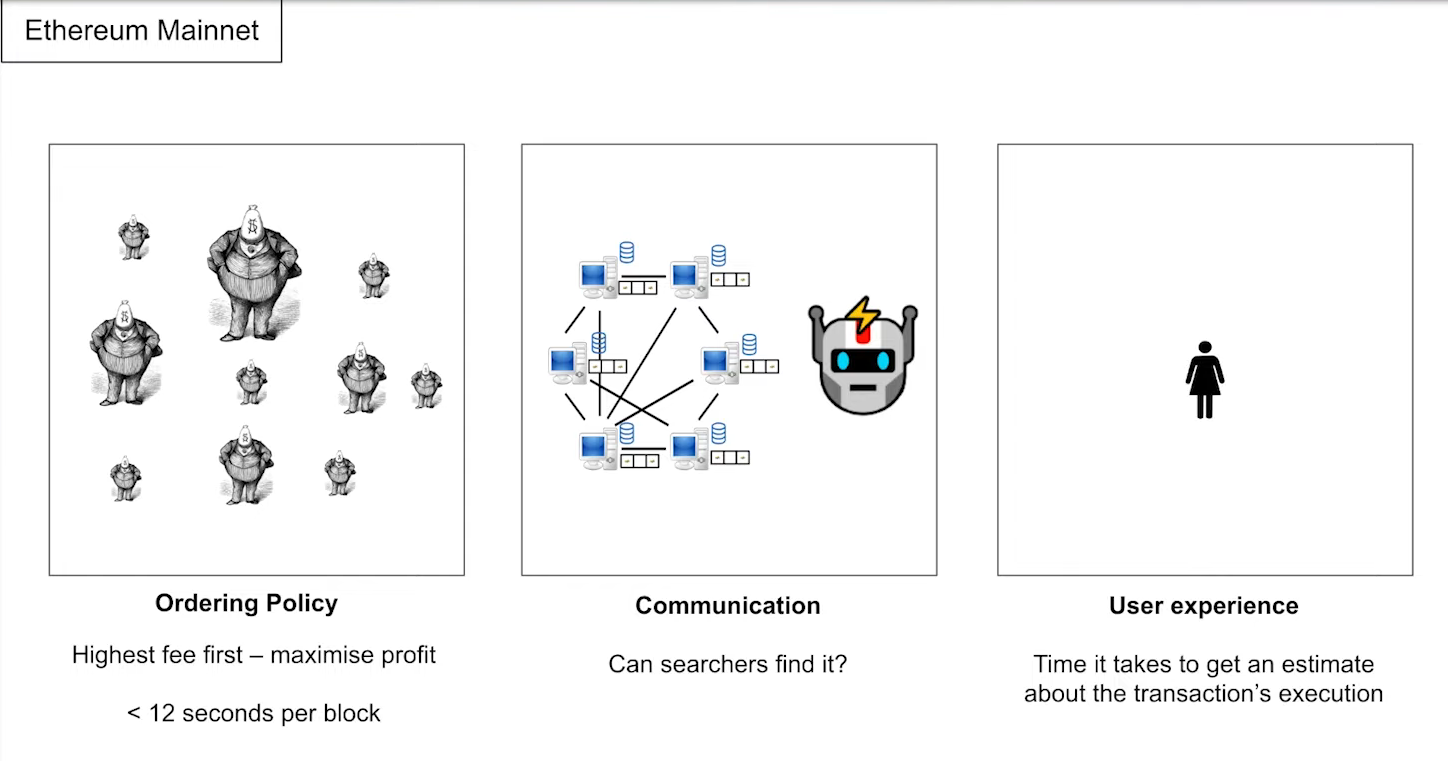

What can we extract from this scenario ? (4:00)

- The ordering policy : we're picking transactions based on the fee, highest fee first, and there are around 12 seconds to do this.

- Communication : How does the block proposer learn about the transaction and how do the searchers find it as well ?

- User Experience : How long does it take for a user to be informed that their transaction is confirmed, and how it was executed ? (at the end of the day, MEV exists thanks to users)

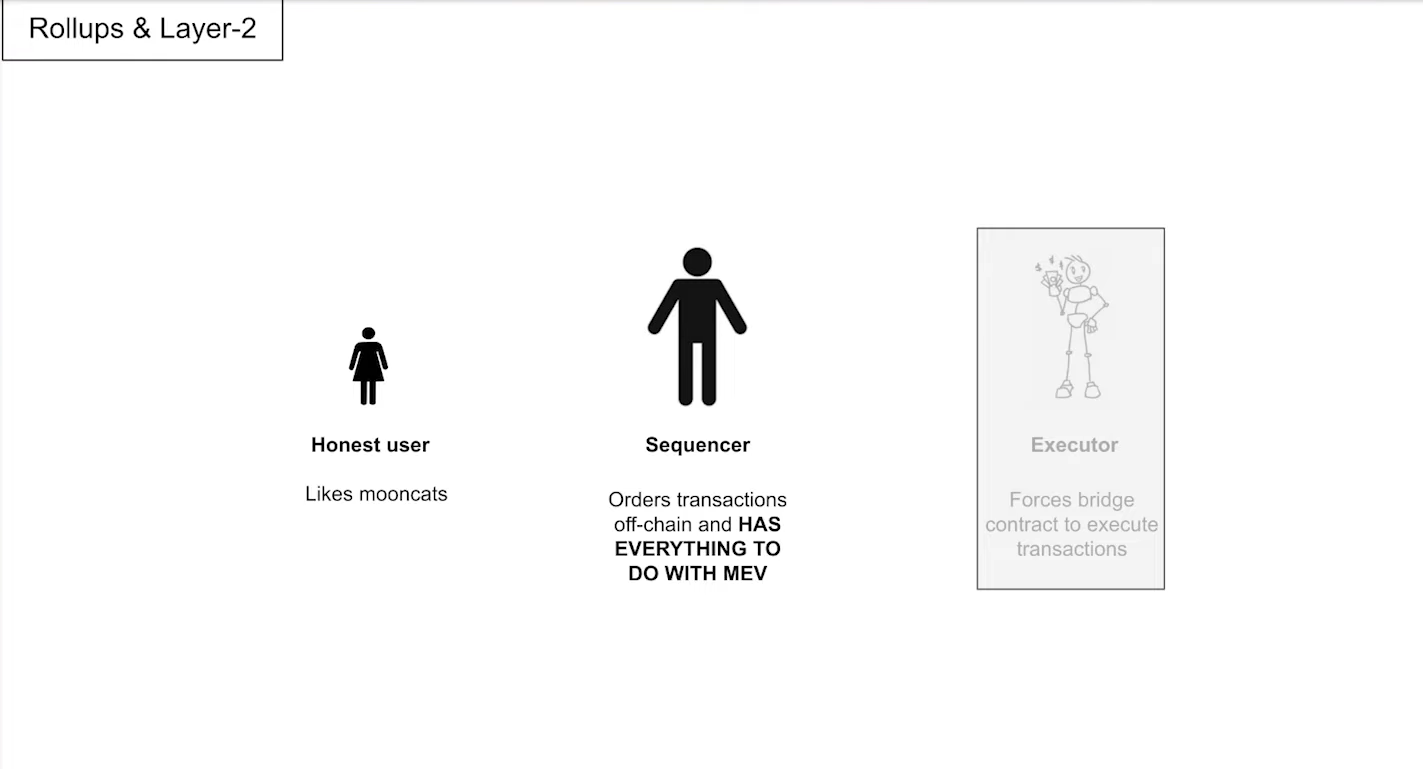

The Layer 2 MEV Land (4:30)

There are three actors in rollup land as well :

- Honest user : Initiates transactions.

- Sequencer : Orders pending transactions for inclusion in a rollup block.

- Executor : Executes transactions.

The sequencer has everything to do with MEV, as it decides the list of transactions and their ordering. The lifecycle is also pretty similar

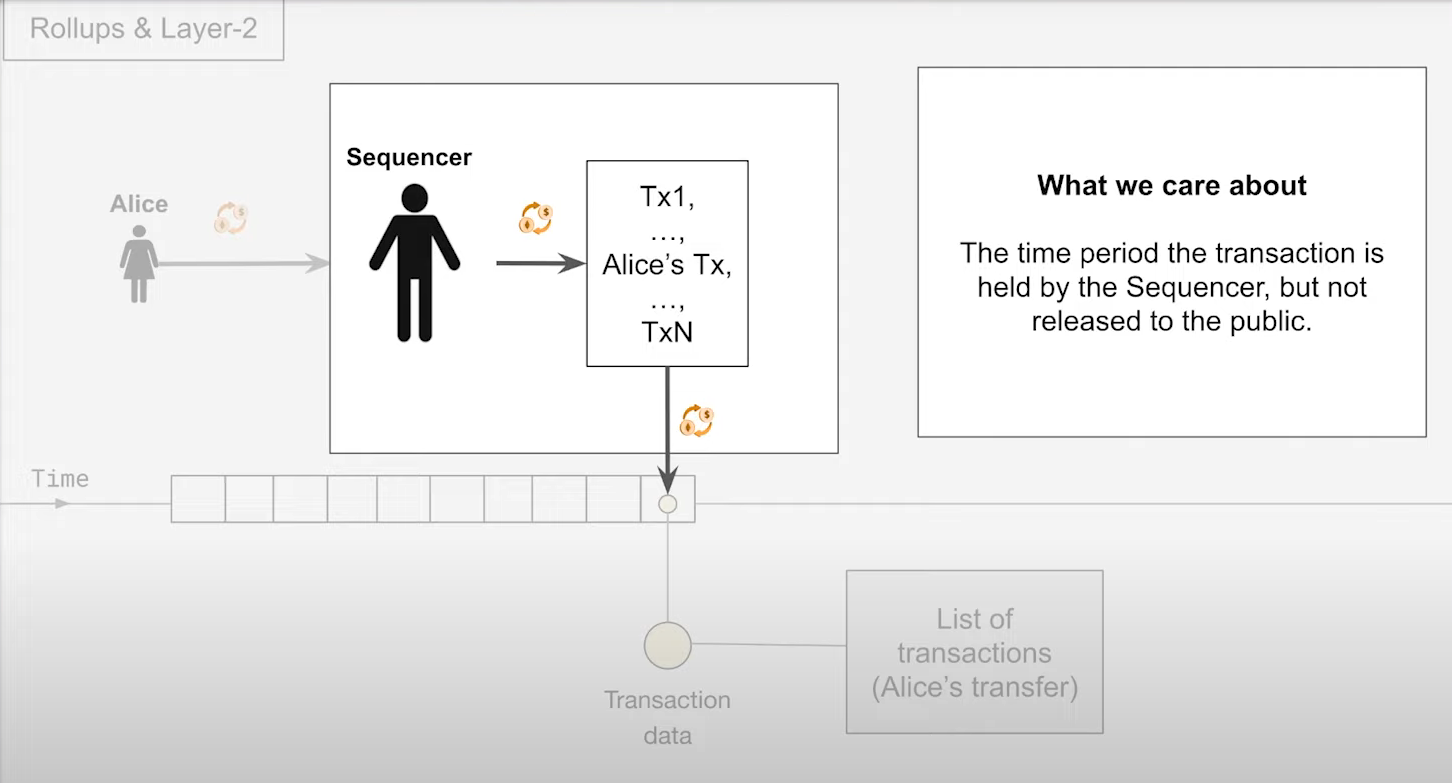

Lifecycle of a transaction in a Sequencer (5:15)

- The Sequencer has a list of pending transactions

- Ordering the transactions according to the ordering Policy

- Post them onto Ethereum

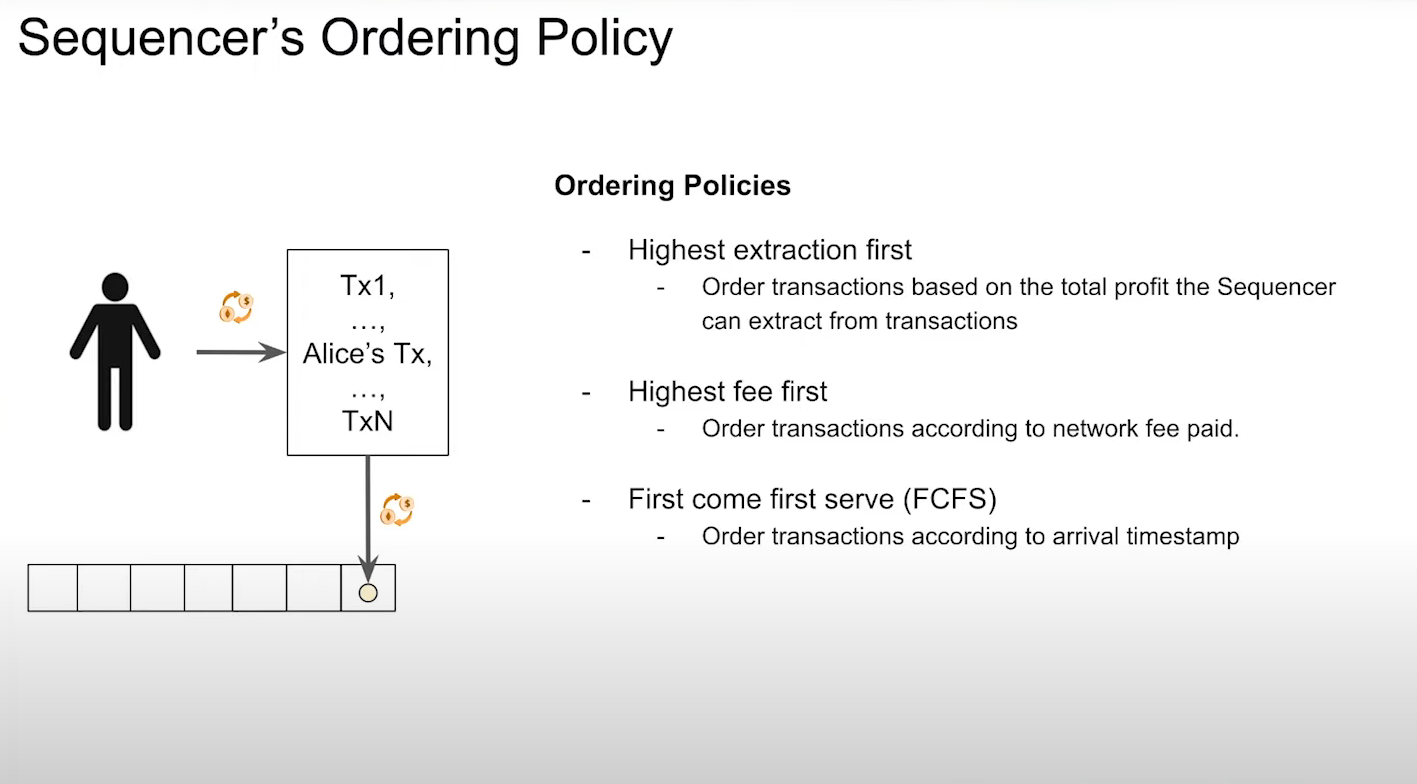

Sequencer ordering policies

Let's assume Alice can communicate directly with the sequencer. When does Alice get a response from the sequencer, and what type of response does she get ?

That depends on how the sequencer decides the order of these transactions, so what we care about is the sequencer's ordering policy.

Highest Extraction first (6:15)

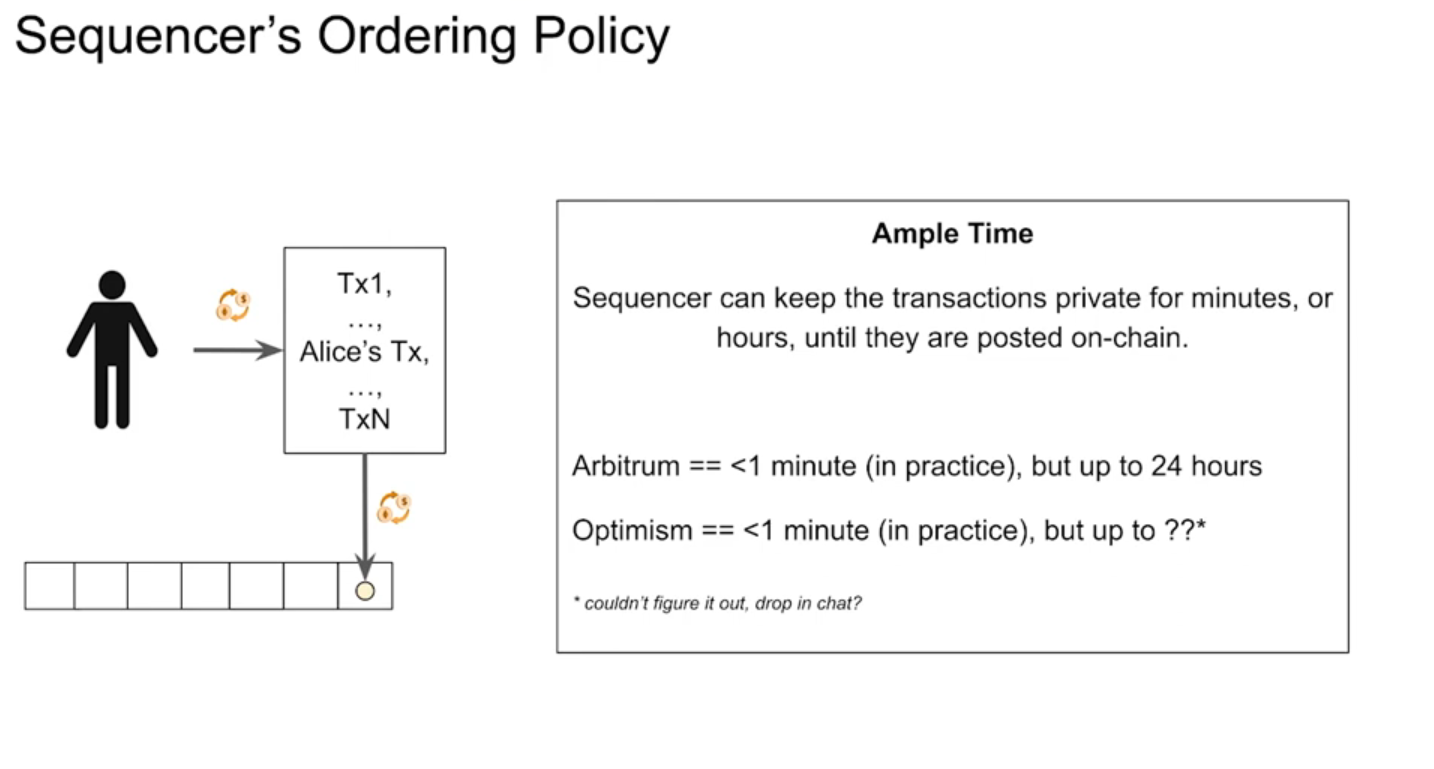

When you talk about sequencers and MEV on layer 2, this is the first ordering policy that everyone brings up.

A user can be offered "free transactions" when the MEV rewards are sufficient. But if that lets the sequencer spend two to three hours extracting value, well, that sucks for the user

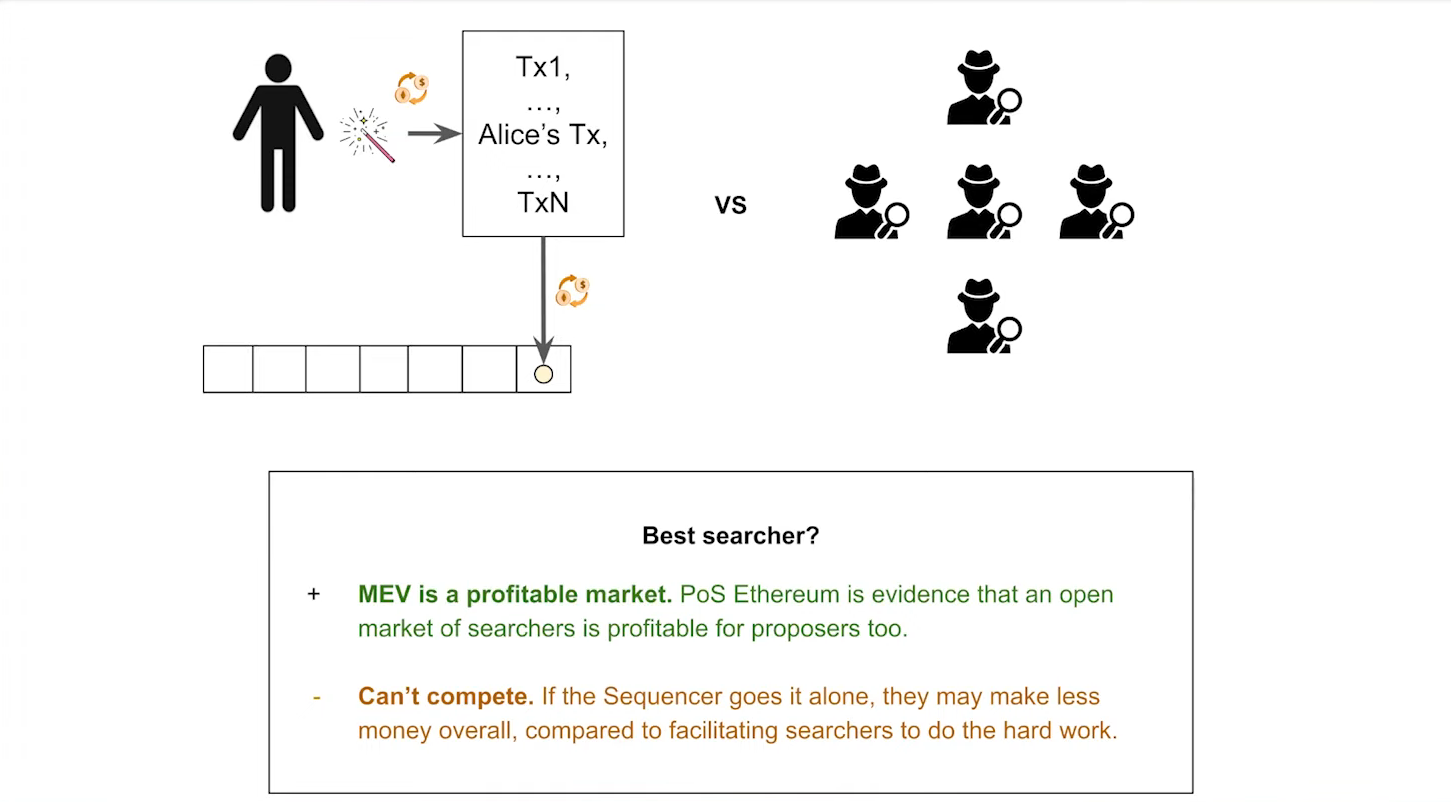

According to Patrick, we don't have to solve the fair ordering problem for now. There's a very good chance that a sequencer can make more money by having an open market of searchers do the hard work as opposed to trying to extract the MEV themselves.

Highest fee first (9:15)

This policy is similar to Ethereum's current system :

- Sequencers receive pending transaction lists from searchers.

- Searchers extract MEV and send bundles to the sequencer with payment.

- Sequencer orders transactions based on the payments received

The user experience is the same as with Highest Extraction First : users could still have free transactions, because the transaction fee is actually the MEV that's extracted. But again, this could come with a long delay.
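A minimal sketch of Highest Fee First over searcher bundles follows. The `Bundle` type, its fields, and the conflict rule (skip a bundle that reuses a transaction already included by a higher-paying bundle) are assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Bundle:
    searcher: str
    txs: List[str]   # ordered transactions, including the user's transaction
    payment: int     # what the searcher pays the sequencer for inclusion


def order_highest_fee_first(bundles: List[Bundle]) -> List[str]:
    """Build a block by taking bundles in descending order of payment.

    A bundle is skipped if any of its transactions is already in the block,
    so each user transaction is captured by at most one searcher.
    """
    block: List[str] = []
    included = set()
    for bundle in sorted(bundles, key=lambda b: b.payment, reverse=True):
        if included.isdisjoint(bundle.txs):
            block.extend(bundle.txs)
            included.update(bundle.txs)
    return block
```

The sequencer does no MEV extraction of its own here: it only ranks payments, leaving the hard work to the open market of searchers.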

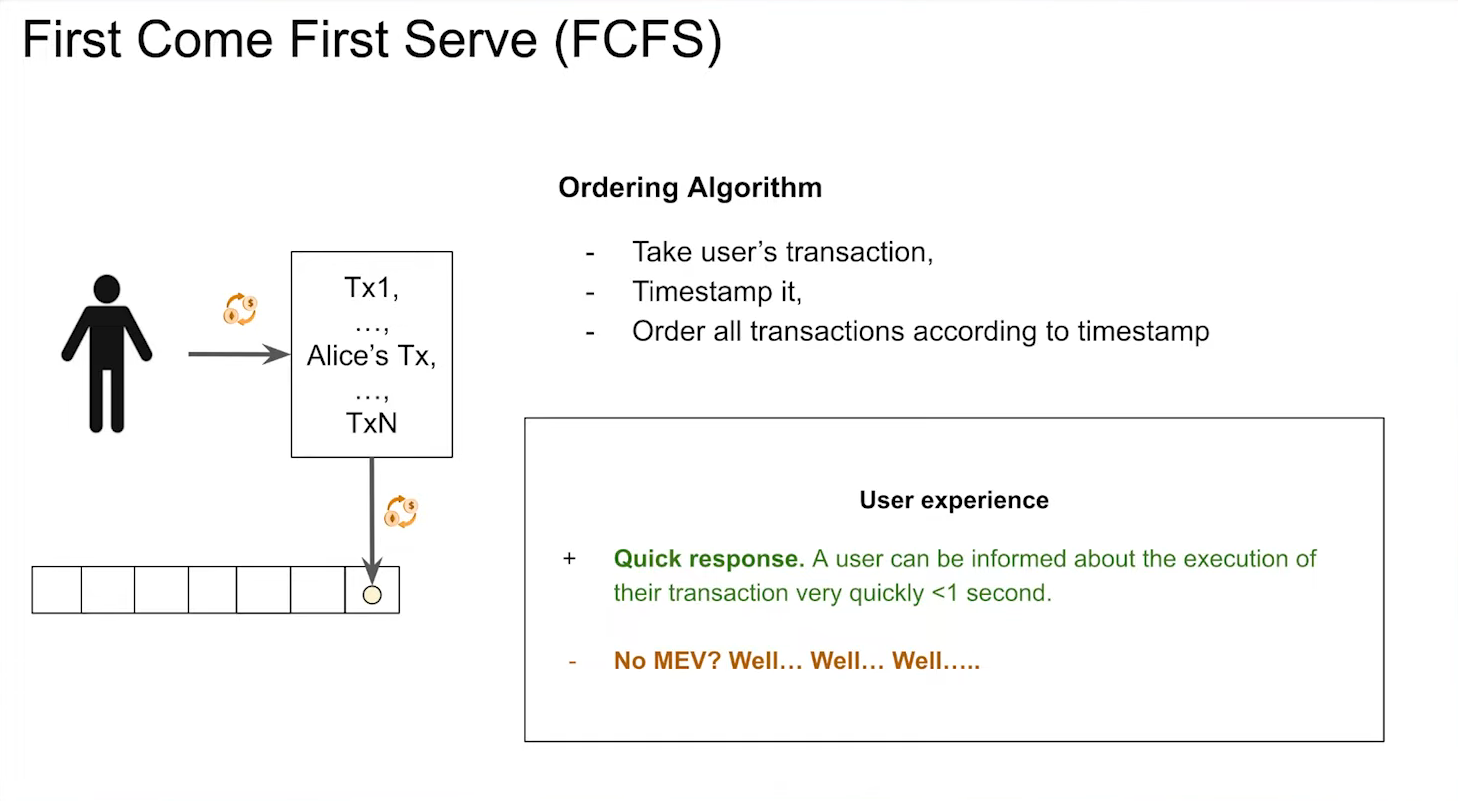

First Come First Serve "FCFS" (10:30)

FCFS seeks to prioritize user experience :

- User will send their transaction to the sequencer

- The sequencer will timestamp the transaction

- Transaction is ordered according to the timestamp

In a way, it's like transacting on Coinbase : we send our transaction to the service provider and they return a response to say it's confirmed.
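The FCFS steps can be sketched as a small class. The `FcfsSequencer` name, the receipt format, and the explicit `now` argument (passed in so the example is deterministic) are illustrative assumptions.

```python
import itertools
from typing import List


class FcfsSequencer:
    """Timestamps transactions on arrival and orders them by that timestamp."""

    def __init__(self) -> None:
        self._arrival_counter = itertools.count()
        self._received = []

    def receive(self, tx: str, now: float) -> dict:
        """Timestamp the transaction and immediately return a confirmation."""
        # The counter breaks ties between transactions with equal timestamps.
        self._received.append((now, next(self._arrival_counter), tx))
        return {"tx": tx, "status": "confirmed", "timestamp": now}

    def block(self) -> List[str]:
        """Return transactions ordered by timestamp, then by arrival order."""
        return [tx for _, _, tx in sorted(self._received)]
```

The user gets a response as soon as `receive` returns, with no auction in between, which is where the fast confirmation comes from.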

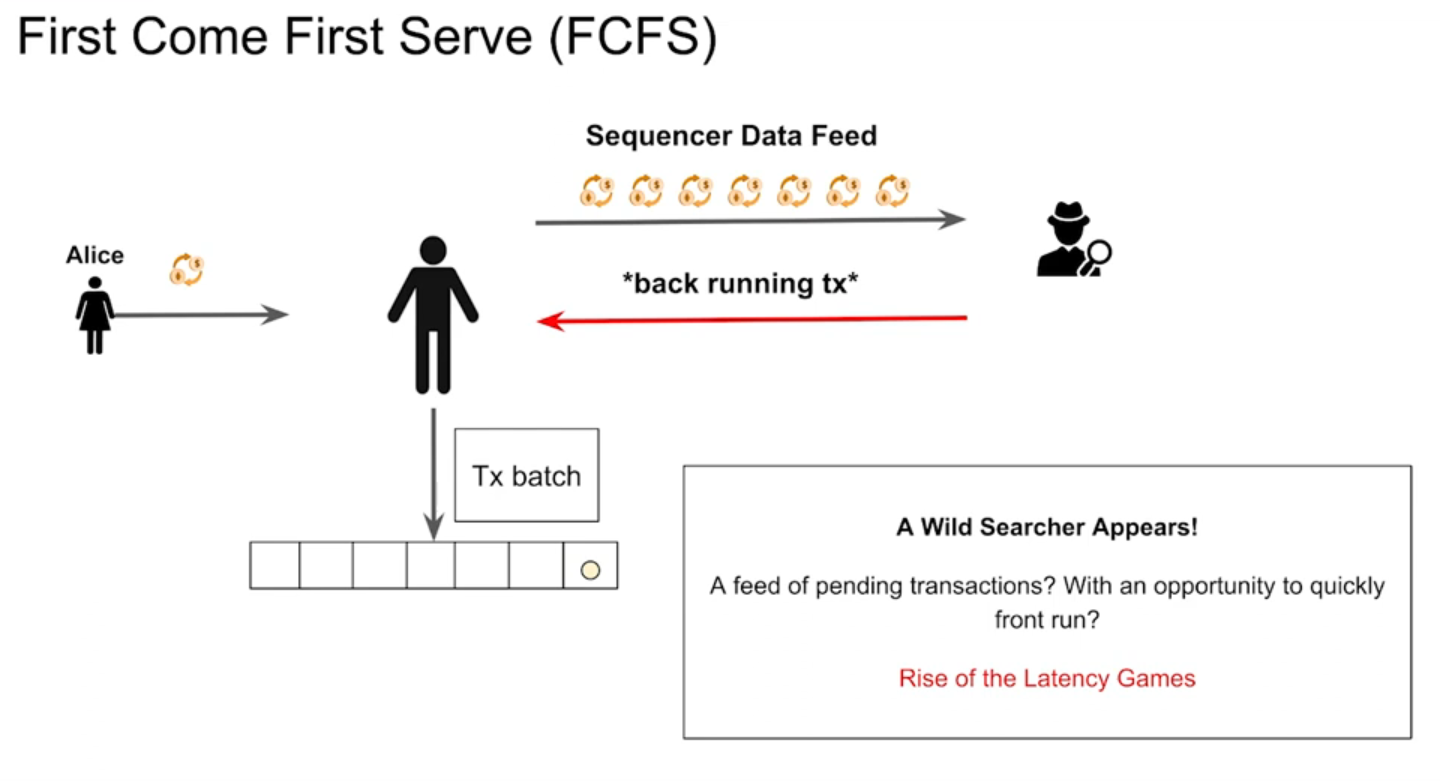

However, it's delusional to think there's no MEV out of this :

When a user submits their transaction, the sequencer creates a little block within 250 milliseconds and then publishes it on a feed.

Now, what happens when you have a data feed that's releasing data about transactions in real time ? Searchers lurk in the bushes

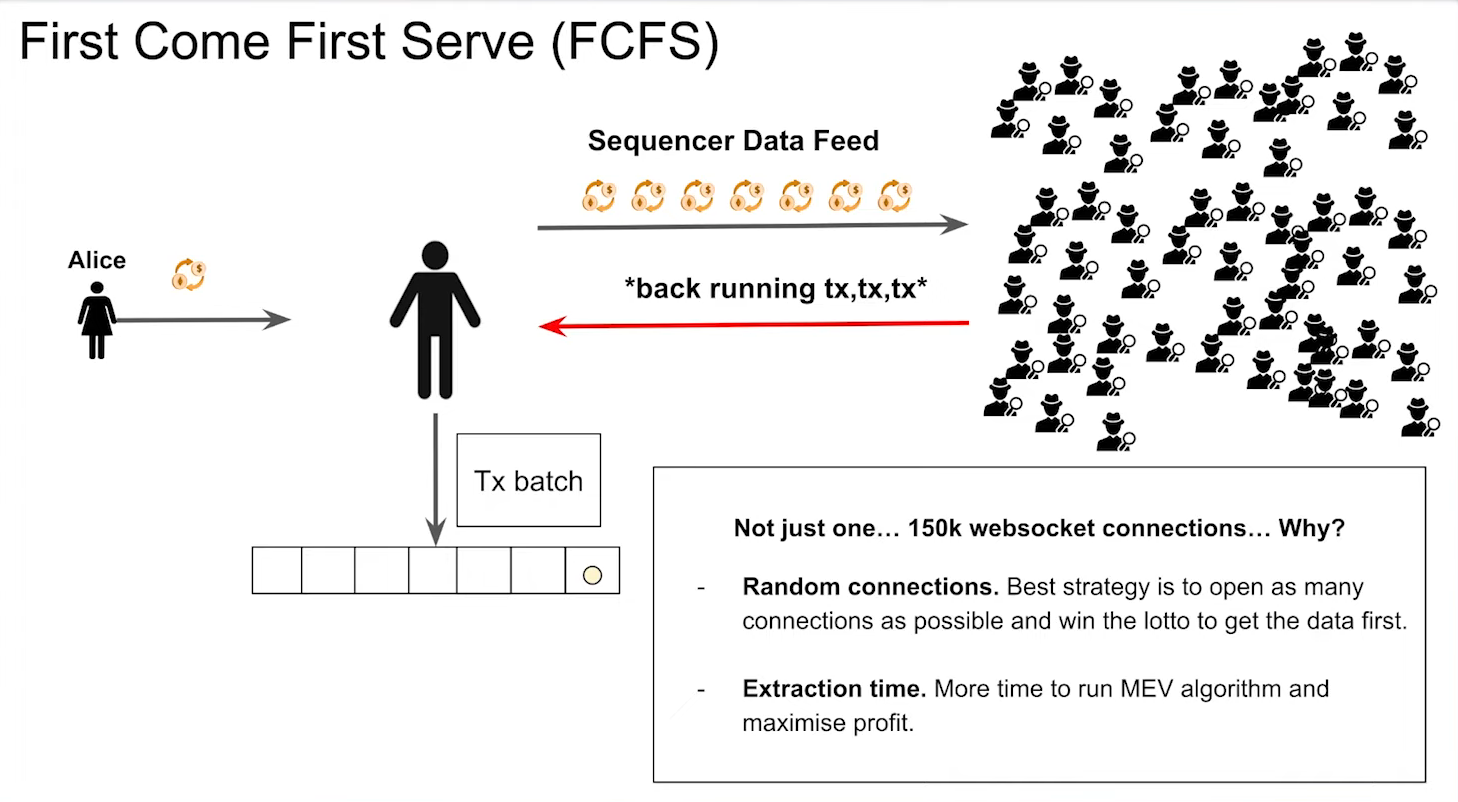

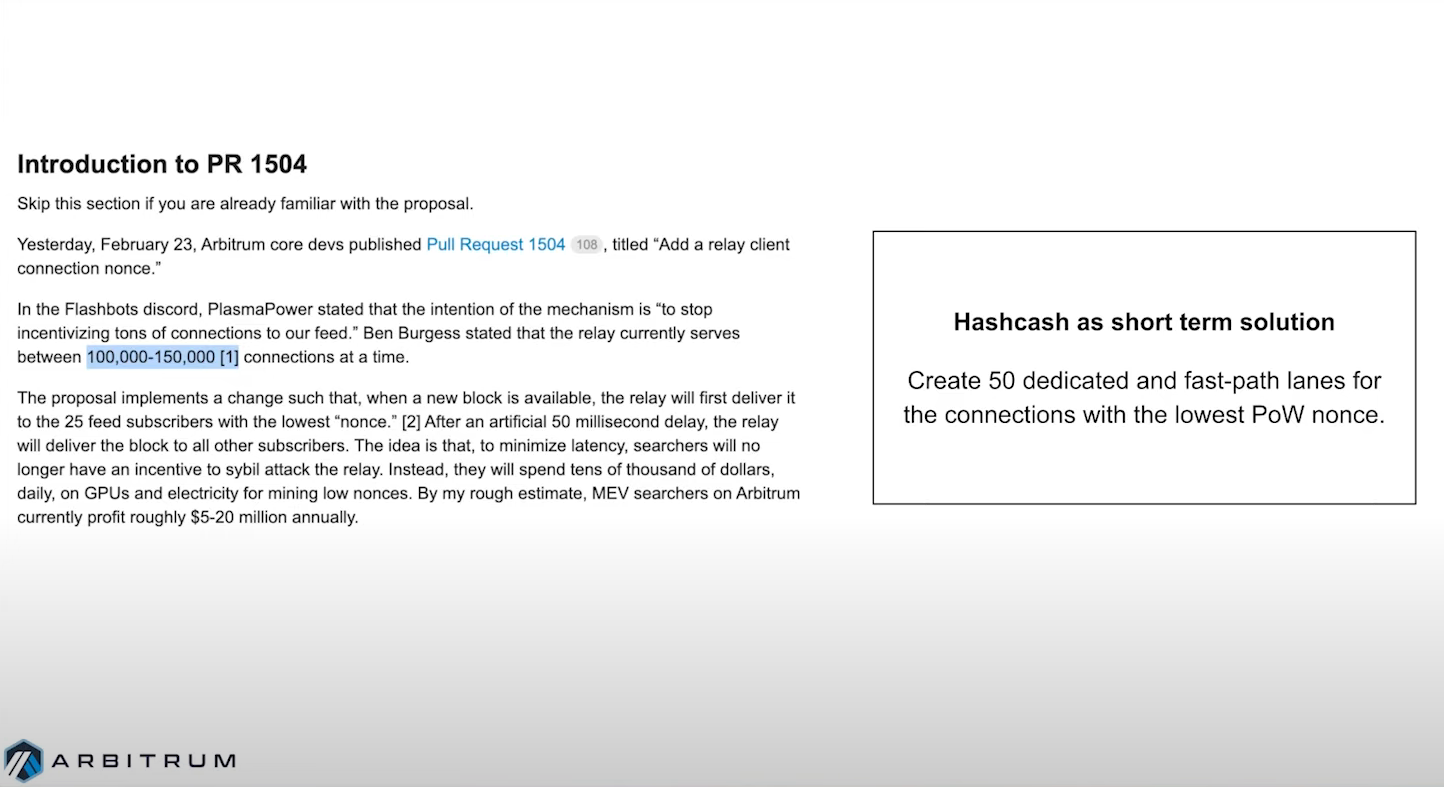

Whoever gets that transaction data first is the one who wins the MEV opportunity. This leads to latency games, because you don't end up with one searcher, you end up with 150,000 WebSocket connections (as Arbitrum experienced). One mitigation is "hashcash", but it is only a short-term fix.
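The idea behind hashcash is to make each connection cost some computation, so opening thousands of them stops being free. Below is a hashcash-style proof-of-work sketch using SHA-256; the challenge string format and the difficulty are assumptions, and this is not a description of how any particular sequencer implements it.

```python
import hashlib
from itertools import count


def _meets_target(challenge: str, nonce: int, difficulty_bits: int) -> bool:
    """Check that SHA-256(challenge:nonce) has `difficulty_bits` leading zero bits."""
    digest = hashlib.sha256(f"{challenge}:{nonce}".encode()).digest()
    return int.from_bytes(digest, "big") < (1 << (256 - difficulty_bits))


def solve(challenge: str, difficulty_bits: int) -> int:
    """Client side: brute-force a nonce. Cost grows as 2**difficulty_bits."""
    for nonce in count():
        if _meets_target(challenge, nonce, difficulty_bits):
            return nonce


def verify(challenge: str, nonce: int, difficulty_bits: int) -> bool:
    """Server side: a single hash is enough to check the client's work."""
    return _meets_target(challenge, nonce, difficulty_bits)
```

A client would call `solve` on a challenge issued by the feed, and the feed would call `verify` before accepting the connection. The asymmetry (expensive to solve, cheap to verify) is what rate-limits connections, though well-funded searchers can still buy more compute, which fits the description of this as a short-term measure.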

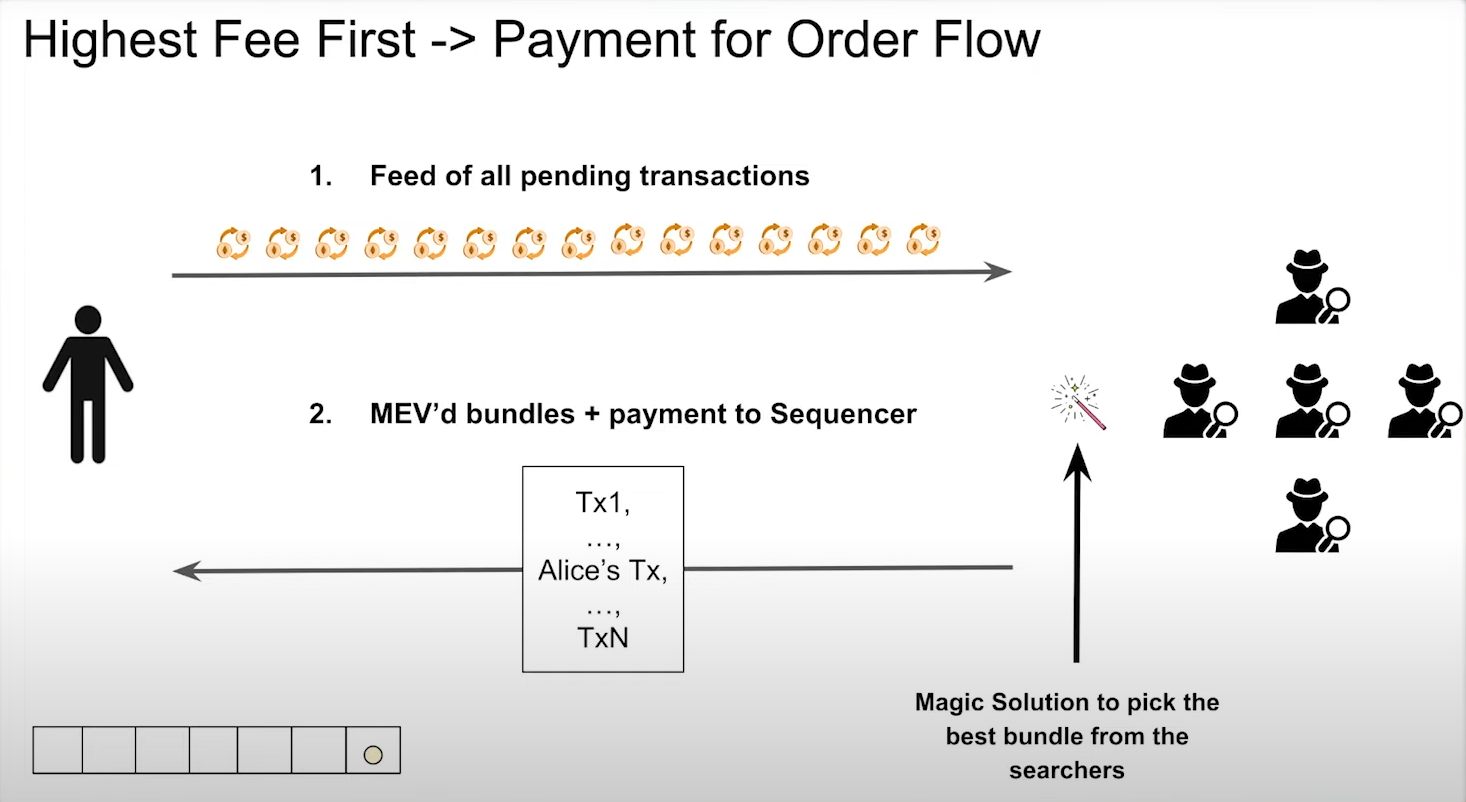

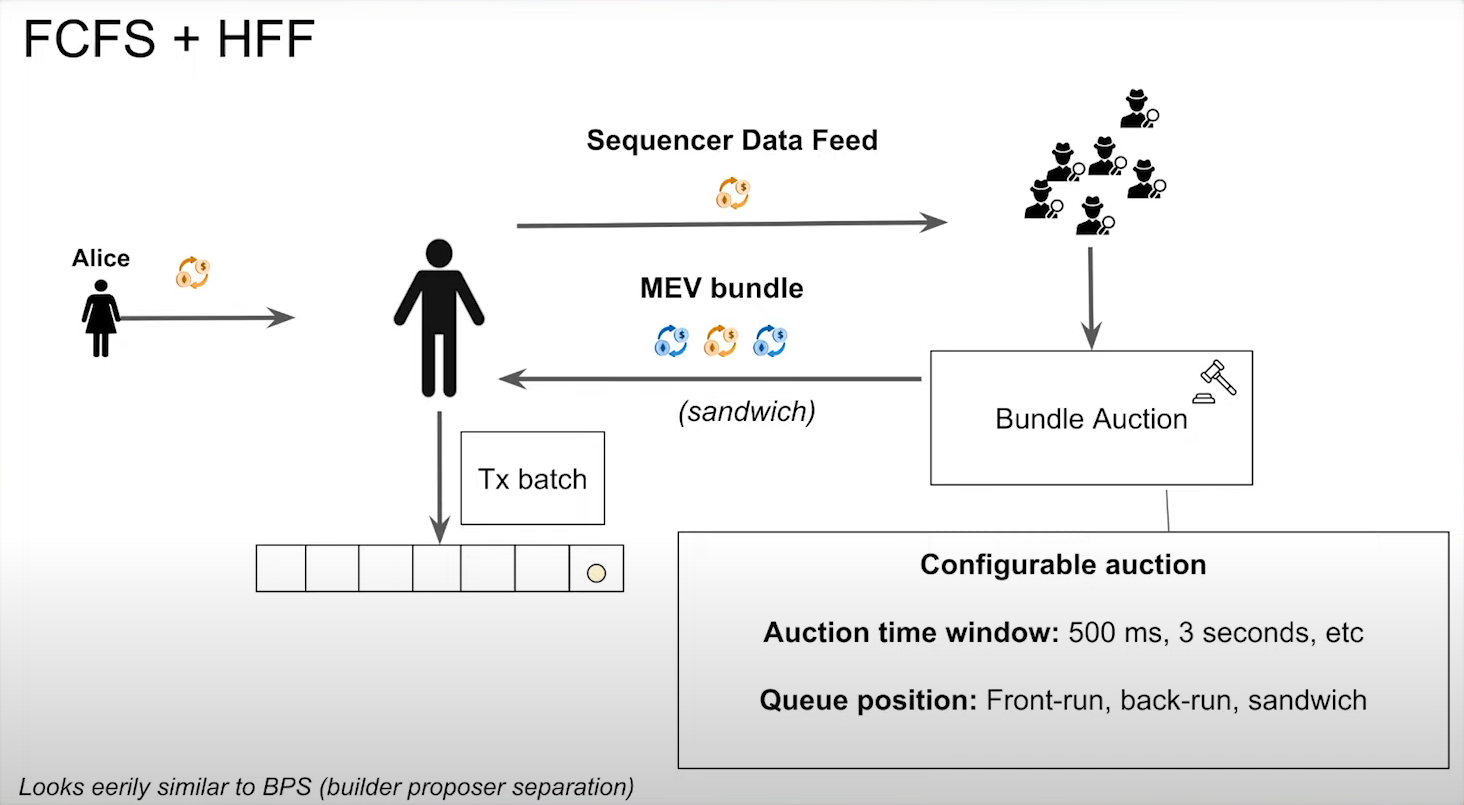

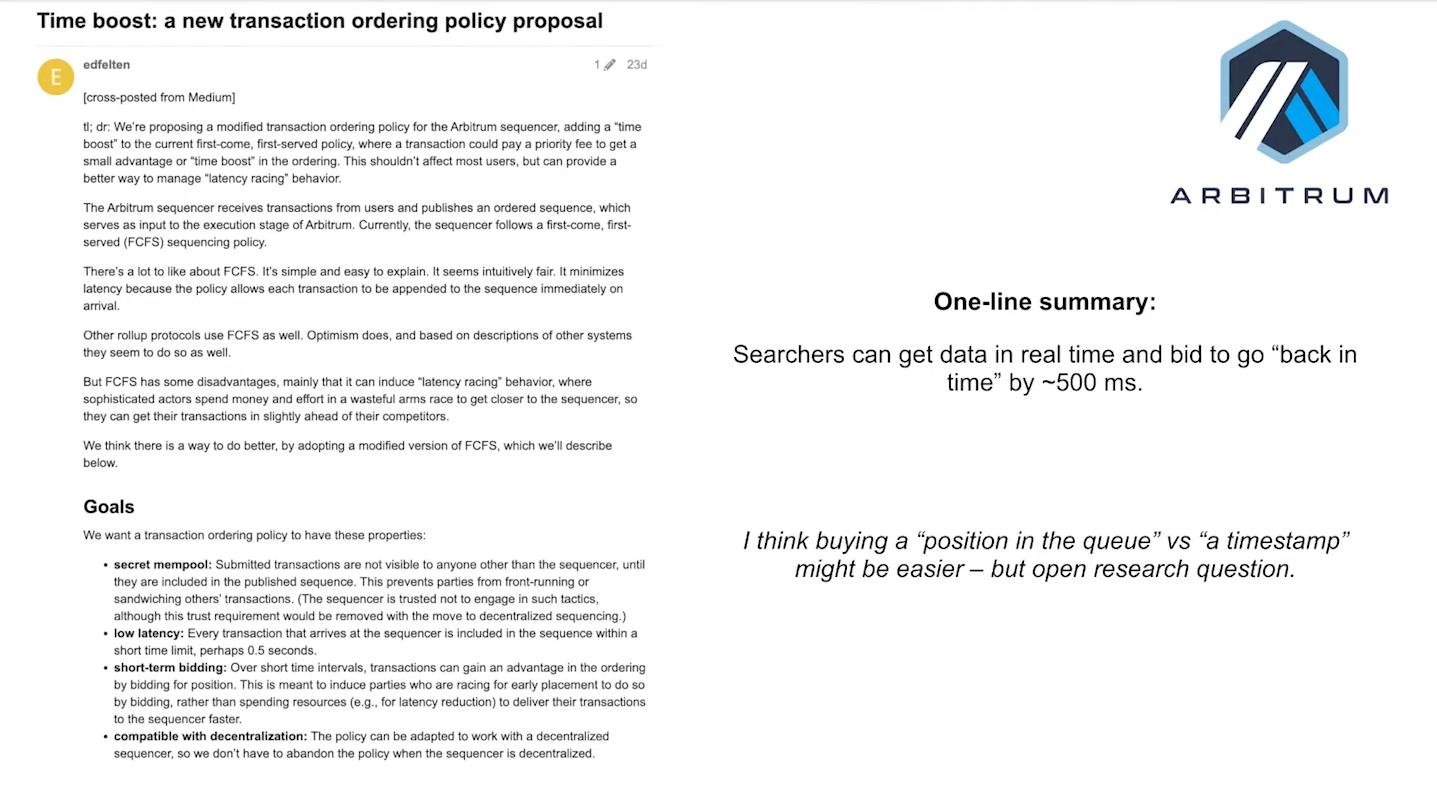

FCFS + HFF (13:00)

A more interesting solution according to Patrick is combining First Come First Serve and Highest Fee First :

- Users' transactions are collected by sequencers who pass them onto searchers in small bundles.

- Searchers participate in an auction : each one looks for value to extract from the bundle it received, then submits its own bundle, with a payment, to the sequencer.

- The sequencer selects the bundle that offers the highest payment and confirms it.
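One round of this combined policy can be sketched as follows. Everything here is an illustrative assumption rather than a specified design: the function name, the representation of searchers as callables that return `(payment, ordered_txs)`, the cutoff window, and the rule that a bid must include every user transaction from the mini-bundle.

```python
from typing import Callable, Dict, List, Optional, Tuple

Bid = Tuple[int, List[str]]  # (payment to the sequencer, proposed ordering)


def fcfs_hff_round(
    arrivals: List[Tuple[float, str]],
    cutoff: float,
    searchers: Dict[str, Callable[[List[str]], Bid]],
) -> Tuple[Optional[str], List[str]]:
    """Run one FCFS + HFF round.

    1. FCFS: collect user transactions that arrived up to `cutoff`, in arrival order.
    2. Pass this mini-bundle to every searcher and collect their bids.
    3. HFF: confirm the highest-paying bid that still includes every user transaction.
    """
    mini_bundle = [tx for ts, tx in sorted(arrivals) if ts <= cutoff]
    valid: Dict[str, Bid] = {}
    for name, strategy in searchers.items():
        payment, txs = strategy(list(mini_bundle))
        if all(tx in txs for tx in mini_bundle):
            valid[name] = (payment, txs)
    if not valid:
        # No acceptable bid: fall back to plain FCFS ordering.
        return None, mini_bundle
    winner = max(valid, key=lambda name: valid[name][0])
    return winner, valid[winner][1]
```

Because each round only covers a short window, users still get a quick confirmation, while the auction replaces the latency race with a price competition.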

Benefits :

- Fast confirmations for users' transactions (500 milliseconds)

- Searchers have an open market for participating in auctions, allowing them to find profitable extractions within smaller bundles.

- Sequencers can decide which types of extraction are allowed or not (front-running, back-running, sandwiching, or all of the above).

Everything said about FCFS + HFF should be taken with caution, because it's still an open problem today