MEVconomics.wtf: a 7-hour conference summarized in 64 minutes

MEVconomics.wtf is a 7-hour conference that offers an impressive amount of relevant information about MEV. By reading this summary, you can get the essentials while saving a lot of time.

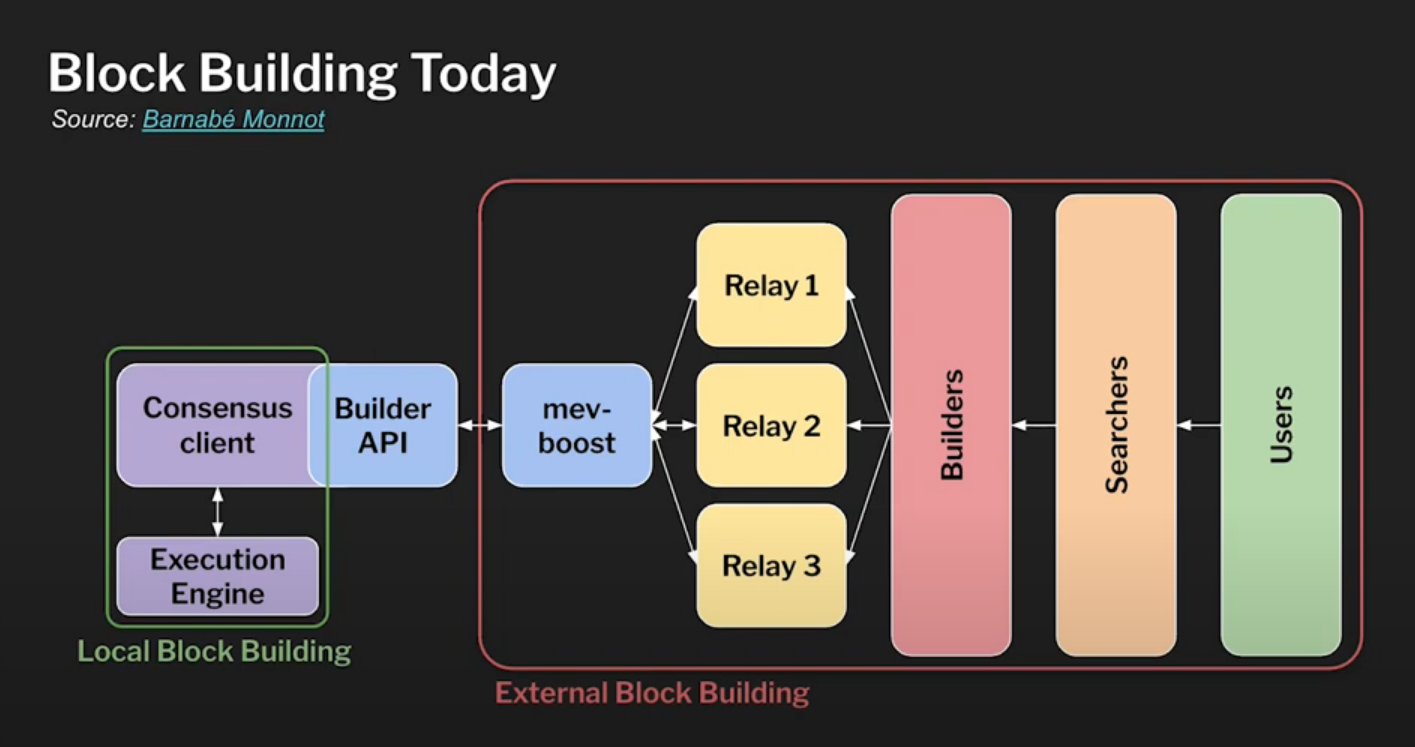

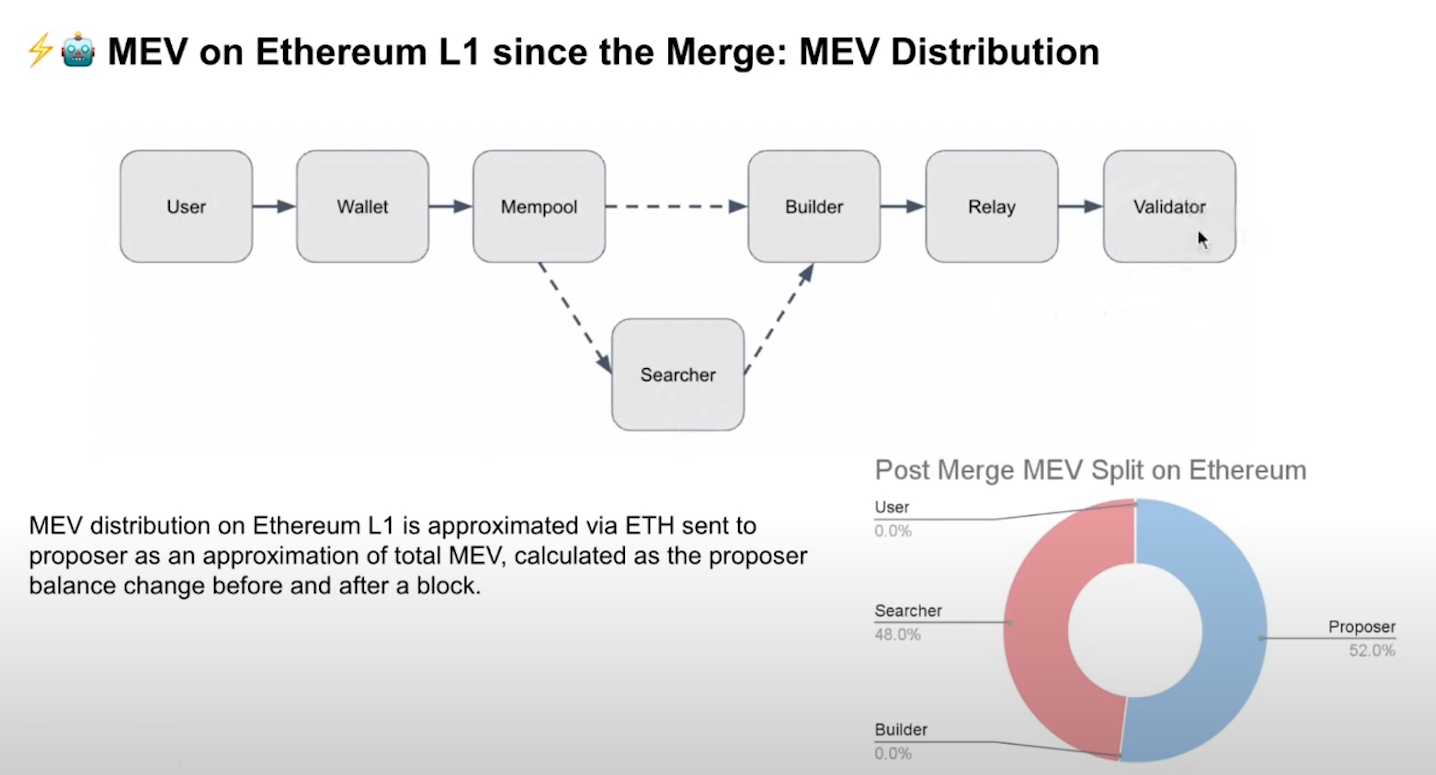

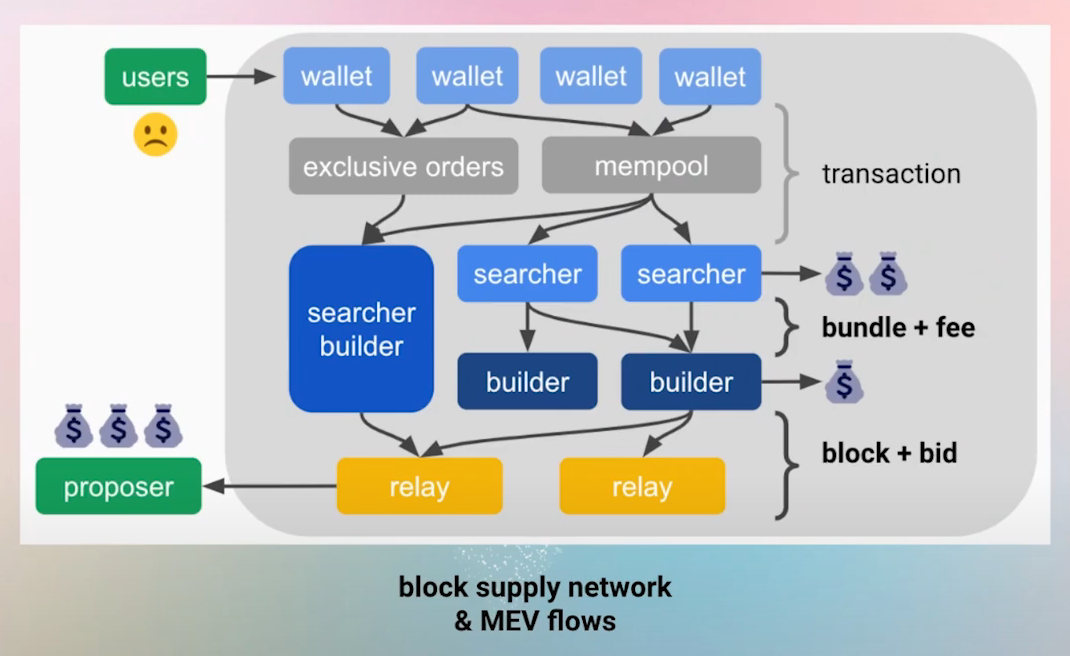

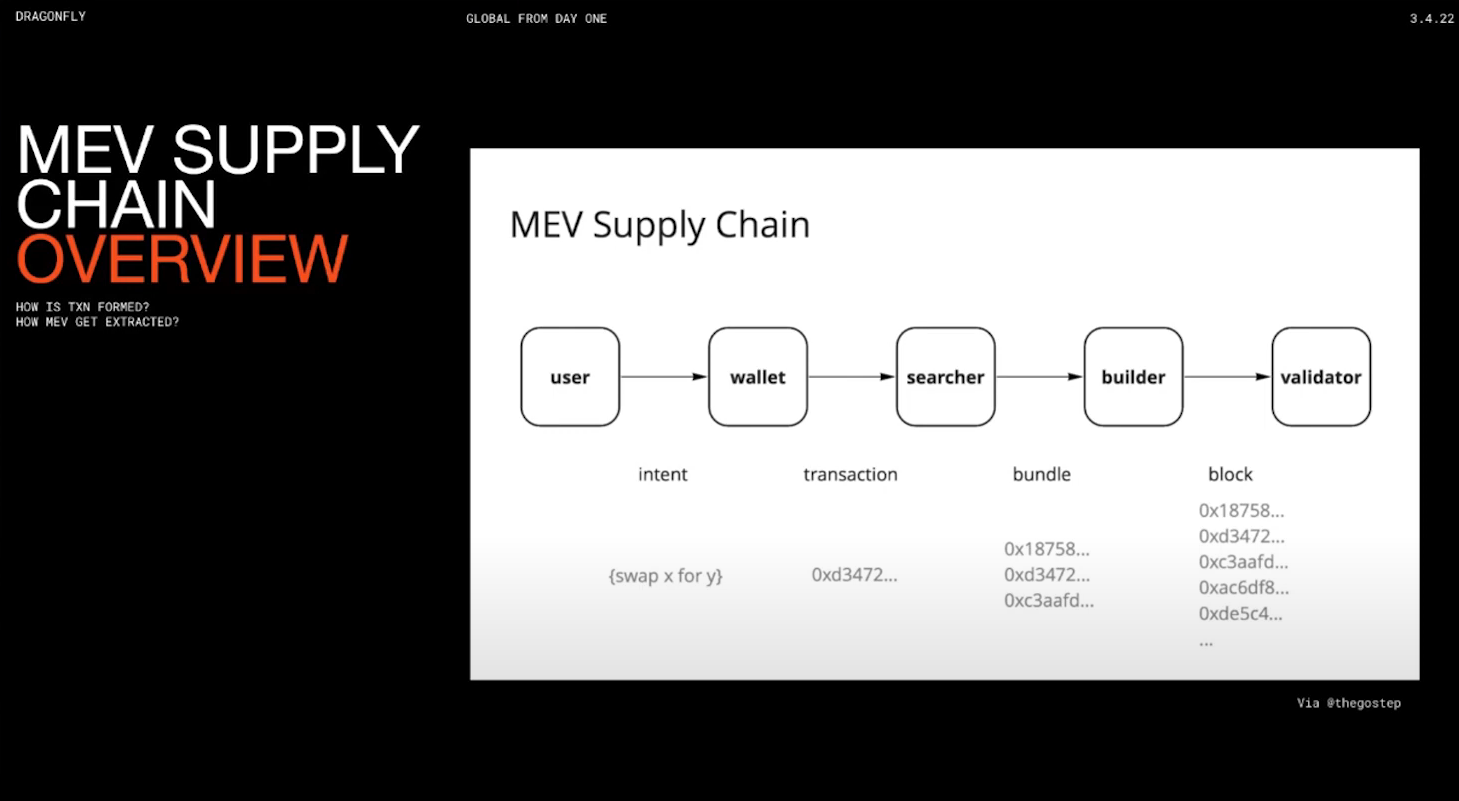

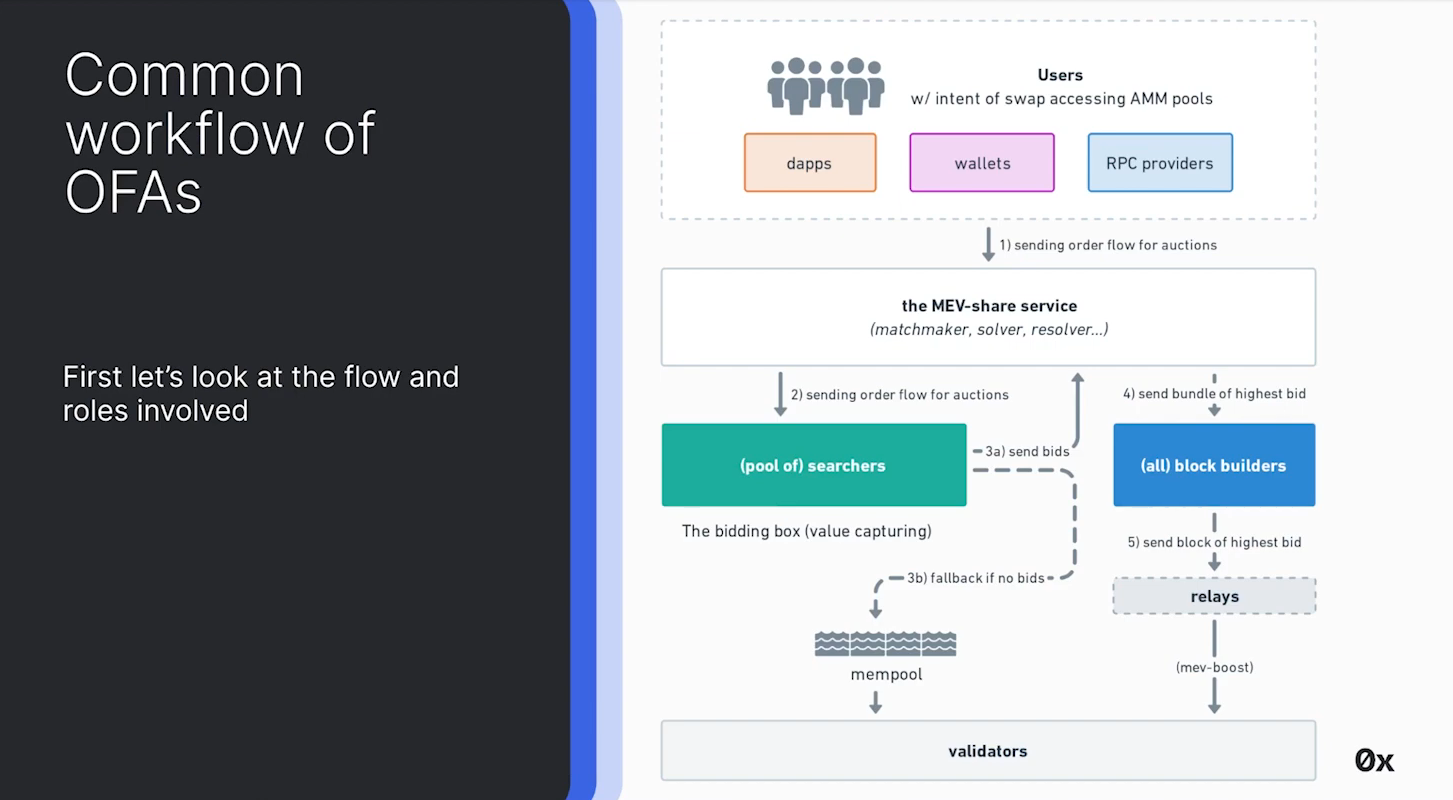

Block building today (1:00)

- Users send transactions

- Searchers bundle transactions and send them to builders

- Builders create blocks and submit them, through relays, to validators acting as proposers

The separation between proposers and builders (aka PBS) gives validators permissionless access to MEV without requiring them to be sophisticated actors themselves.
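The proposer's side of this flow is deliberately simple, and that simplicity is what makes PBS accessible to unsophisticated validators. Below is a minimal Python sketch. It assumes an MEV-Boost-style setup in which relays have already collected builder bids and the proposer simply takes the highest one. `BuilderBid` and `select_winning_bid` are hypothetical names and are not part of any client's API.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class BuilderBid:
    """A block offer relayed to the proposer (hypothetical structure)."""
    builder: str
    block_hash: str
    value_wei: int  # payment promised to the proposer for this block


def select_winning_bid(bids: list[BuilderBid]) -> Optional[BuilderBid]:
    """Proposer side of PBS: take the highest-paying block.

    The proposer needs no MEV expertise; it only compares bid values.
    Returns None when no bid arrived, in which case a real validator would
    fall back to building a block locally.
    """
    if not bids:
        return None
    return max(bids, key=lambda bid: bid.value_wei)


bids = [
    BuilderBid("builder_a", "0xaa", 50_000_000_000_000_000),  # 0.05 ETH
    BuilderBid("builder_b", "0xbb", 80_000_000_000_000_000),  # 0.08 ETH
]
winner = select_winning_bid(bids)
print(winner.builder)  # builder_b
```

All the MEV-specific work (finding opportunities, ordering transactions) stays with searchers and builders. The proposer's decision reduces to a `max`.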

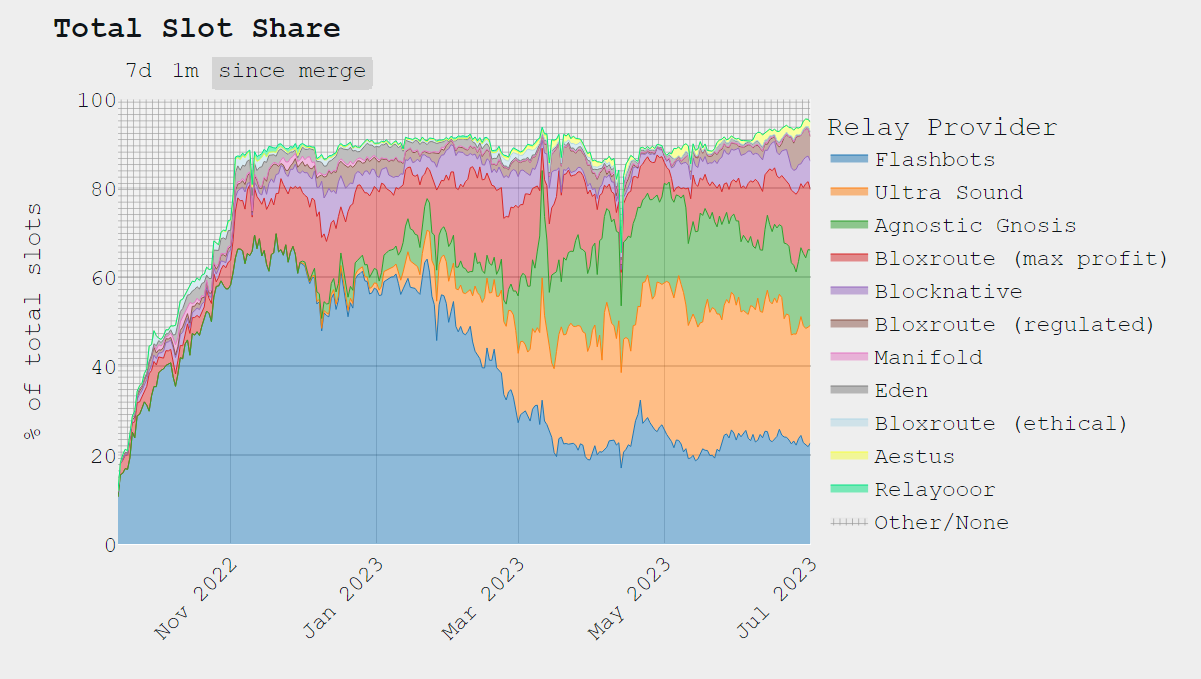

Trends since the merge (2:00)

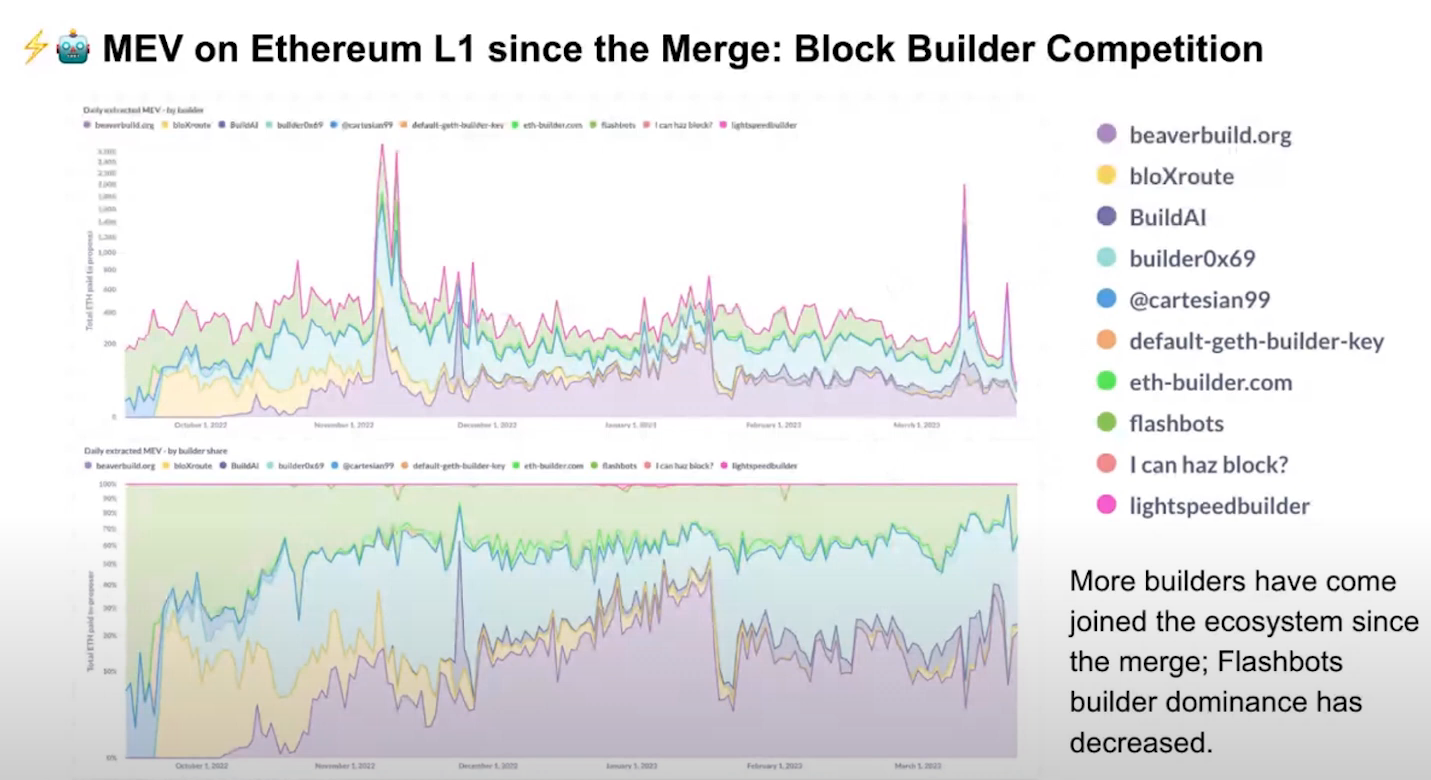

Since the merge, the builder market has become more diversified, with many participants taking market share (thanks in part to Flashbots open-sourcing their builder).

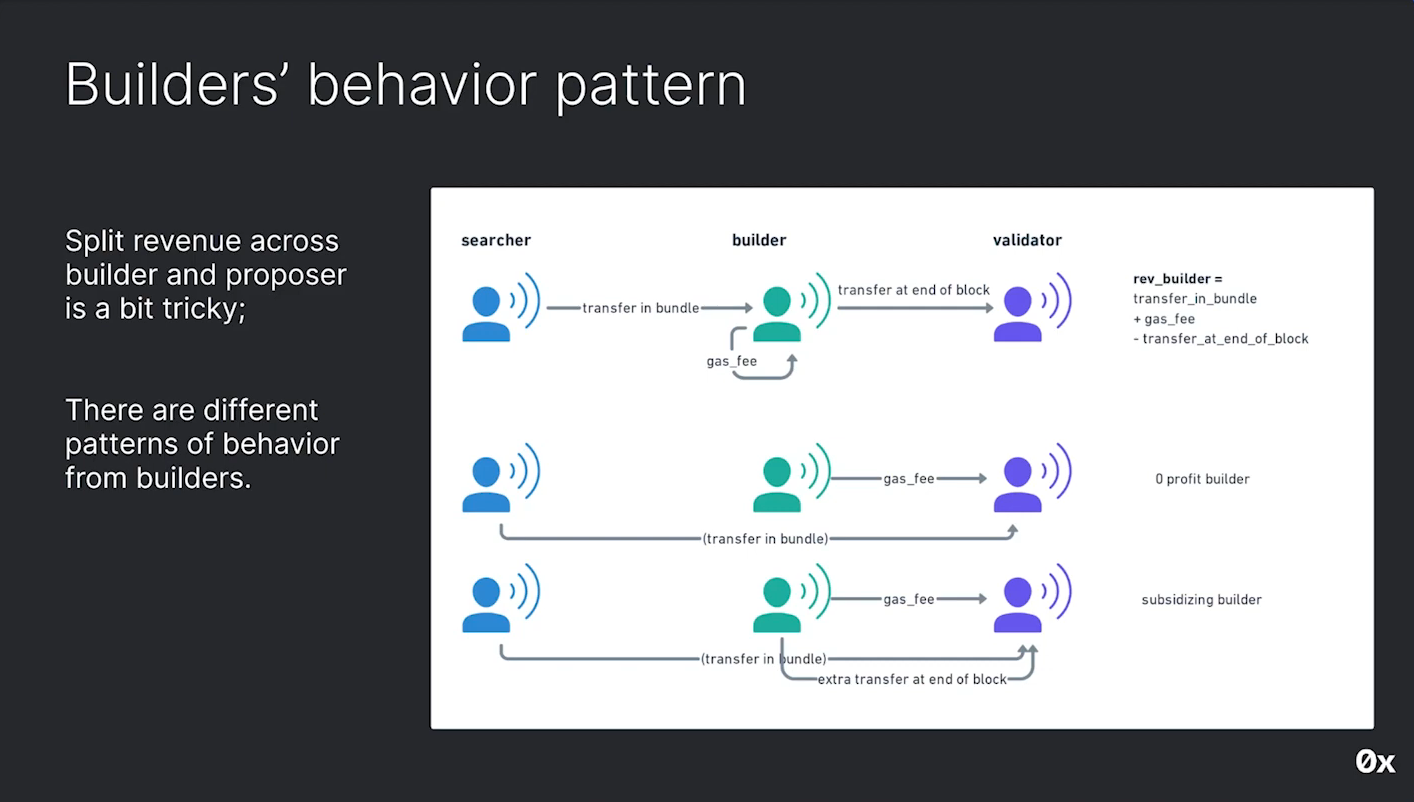

Some builders turn a profit, while others operate on a flat model, passing all the value through to proposers. Builders also have the potential to offer additional features beyond their core role.

Centralizing Tendencies (3:00)

While there is currently a good amount of decentralization in the builder market, we need to decentralize further:

- Better privacy tech is needed to coordinate

- Less trust required

- Return more value to users

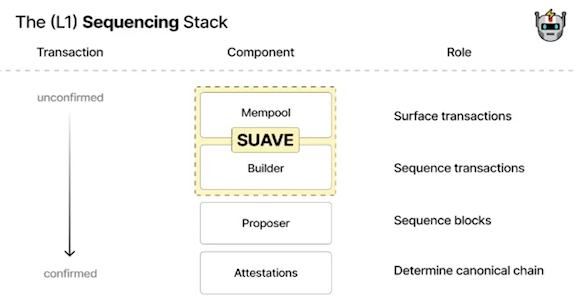

Efforts are being made to build a fully decentralized block building network, with multiple participants contributing to building a single block. That is exactly what SUAVE aims to do.

Relay Market (4:00)

Relays act as the pipes between builders and validators, transmitting blocks.

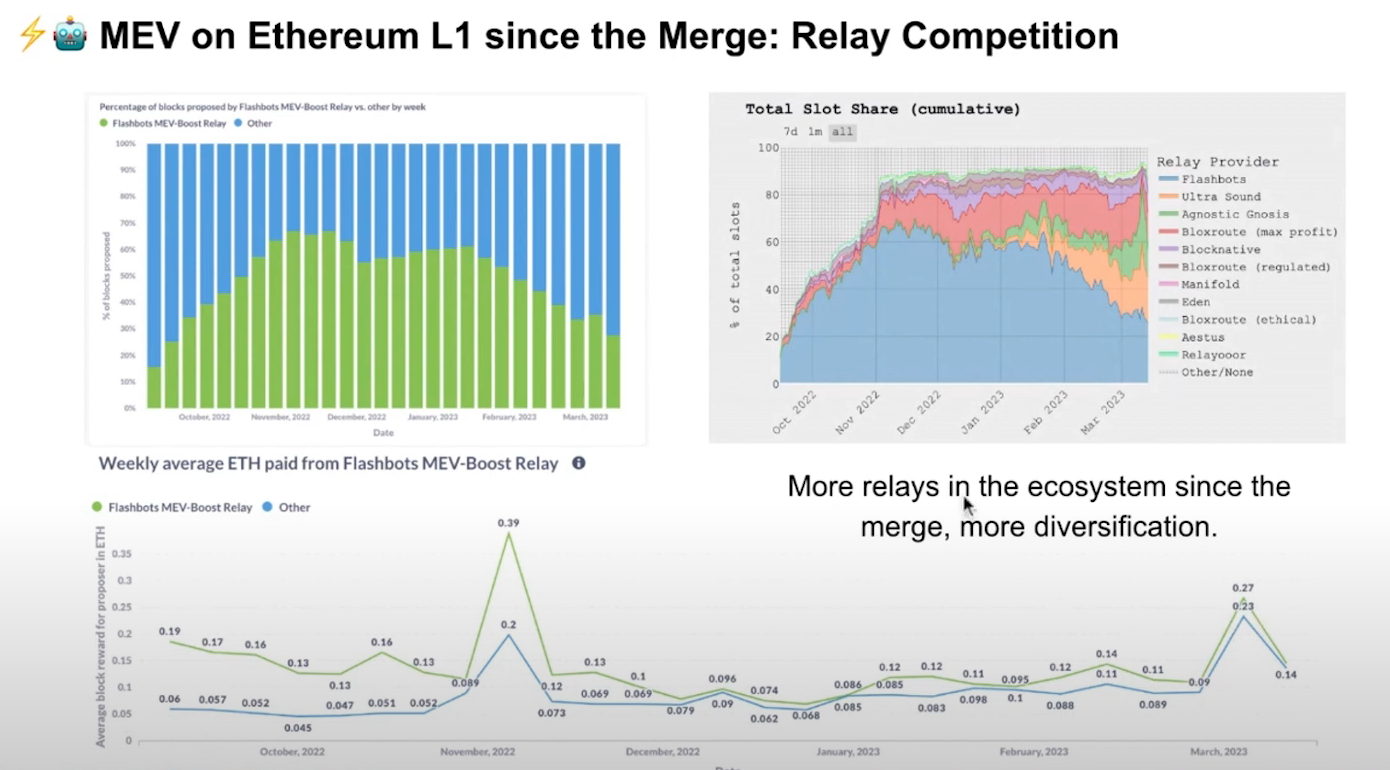

Initially, the relay market was centralized, with Flashbots being by far the most widely used relay. It has diversified in recent months, and the Ultrasound relay has become bigger than Flashbots.

Ultrasound Relay introduced a new concept called "optimistic relaying", which reduces latency by letting the relay forward blocks to proposers without validating them first. The trade-off is that blocks are no longer fully checked up front. Instead, they are collateralized by builders.
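One rough way to picture the trade-off is a relay that only skips validation when the bid is covered by builder collateral. The Python sketch below is simplified under that assumption. The class, the method names and the "bid must not exceed collateral" rule are illustrative, and Ultrasound's actual rules may differ.

```python
from dataclasses import dataclass, field


@dataclass
class OptimisticRelay:
    """Hypothetical optimistic relay: it skips block simulation for bids that
    are covered by the builder's posted collateral."""
    collateral_wei: dict[str, int] = field(default_factory=dict)

    def can_skip_simulation(self, builder: str, bid_value_wei: int) -> bool:
        # Optimistic path: forward the block immediately (saving latency) only
        # if the builder's collateral could repay the proposer in full.
        return bid_value_wei <= self.collateral_wei.get(builder, 0)

    def refund_for_invalid_block(self, builder: str, bid_value_wei: int) -> int:
        # If a forwarded block turns out to be invalid, the proposer is made
        # whole out of the builder's collateral.
        available = self.collateral_wei.get(builder, 0)
        refund = min(bid_value_wei, available)
        self.collateral_wei[builder] = available - refund
        return refund


relay = OptimisticRelay(collateral_wei={"builder_a": 10**18})  # 1 ETH posted
print(relay.can_skip_simulation("builder_a", 5 * 10**17))  # True: 0.5 ETH is covered
print(relay.can_skip_simulation("builder_a", 2 * 10**18))  # False: needs full validation
print(relay.refund_for_invalid_block("builder_a", 5 * 10**17))  # 500000000000000000
```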

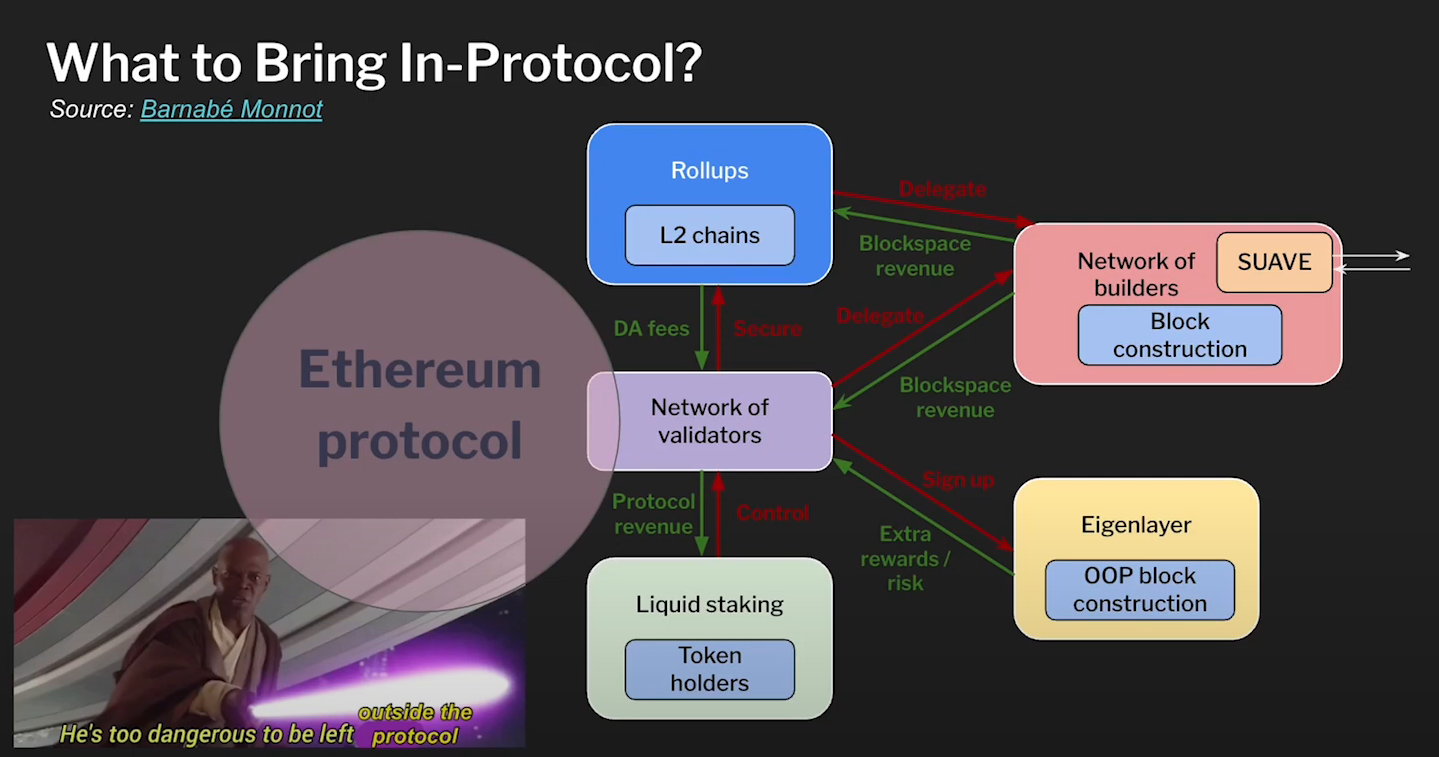

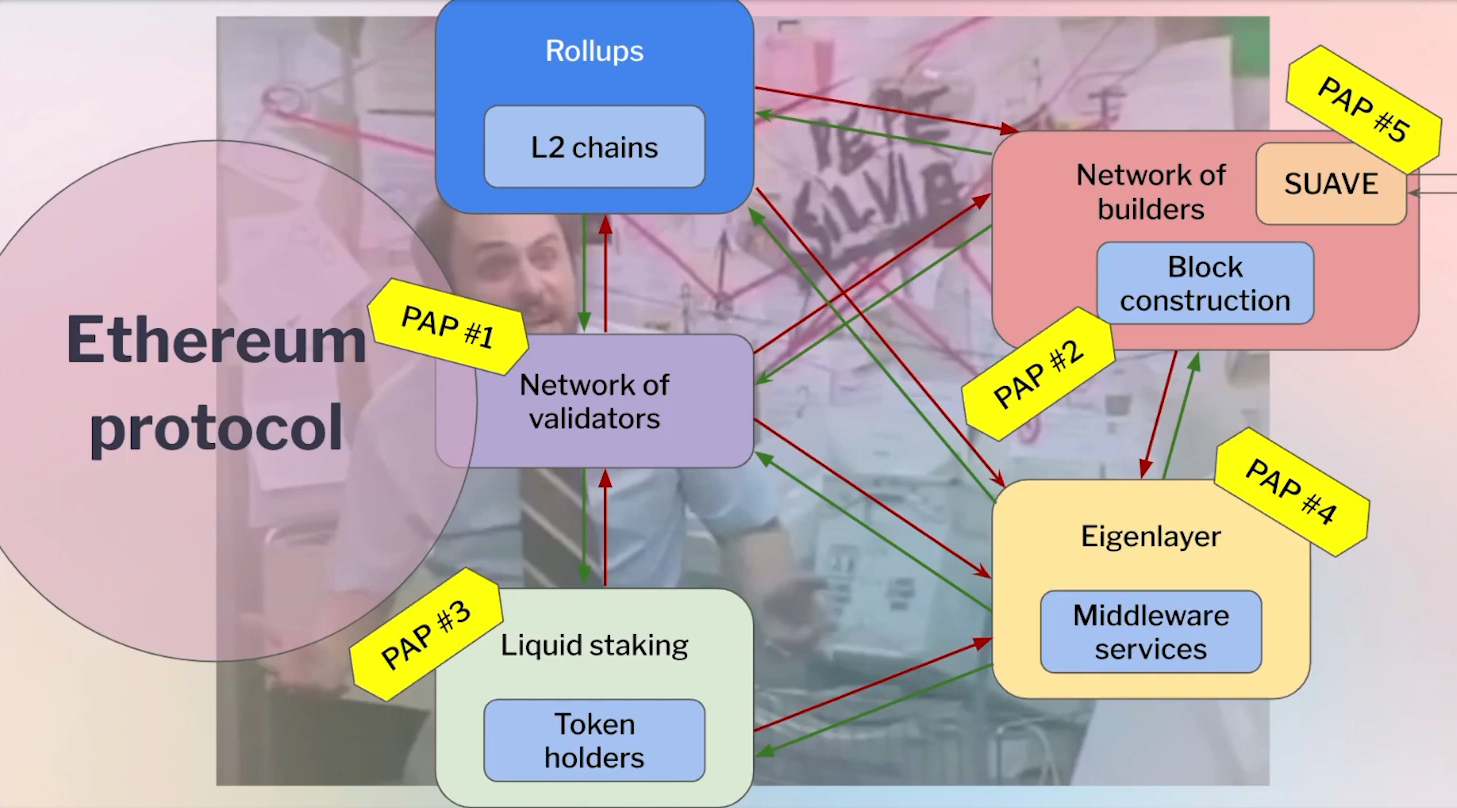

What to bring in-protocol? (6:30)

The Ethereum protocol is currently simple, and most actors operate outside its core. A perfect example of that is Proposer-Builder Separation (PBS), which is not enshrined in the protocol. Relays are necessary because the protocol itself has no built-in market structure or allocation mechanism for PBS.

However, mechanisms like Protocol-Enforced Proposer Commitments (PEPC, "pepsi") and restaking could make the protocol more flexible.

Making the Protocol More Flexible (8:00)

Proposer-Builder Separation (PBS) is two things: a market structure and an allocation mechanism.

Making the protocol aware of external commitments is being explored as an alternative to enshrining one specific proposer-builder separation model. This is what PEPC aims to do. It relates closely to restaking, as proposers can potentially opt into external commitments outside of the protocol.

This raises a big question: what exactly is it the Ethereum protocol's job to guarantee?

Markets overview

Validators Market (9:30)

A handful of operators control a large share of the stake. While economic security is high, we still have to decentralize the actual stake behind it all.

For example, Lido built the Lido Staking Router, which lets anyone develop on-ramps for new node operators, ranging from solo stakers to DAOs and Distributed Validator Technology (DVT).

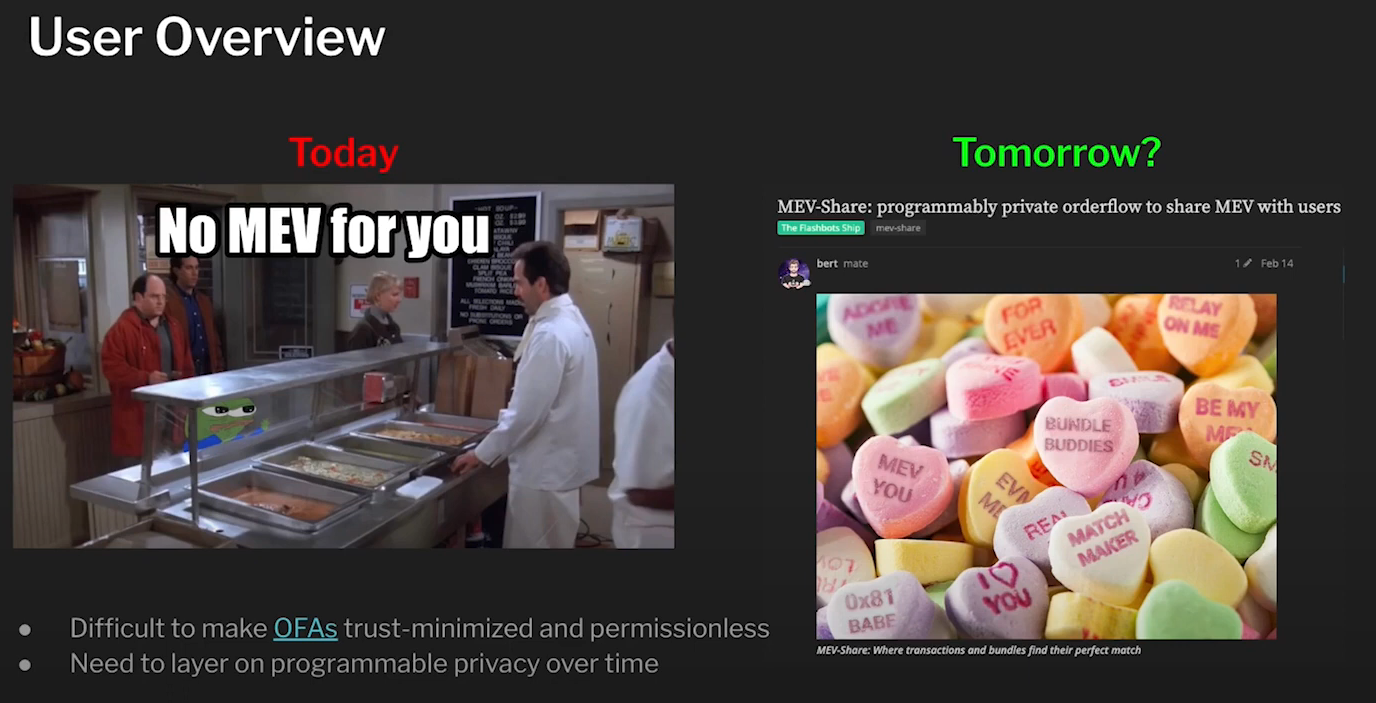

Value for users (10:30)

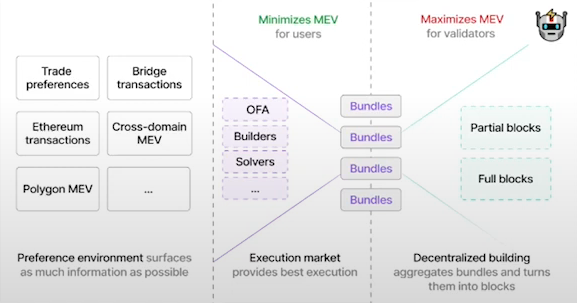

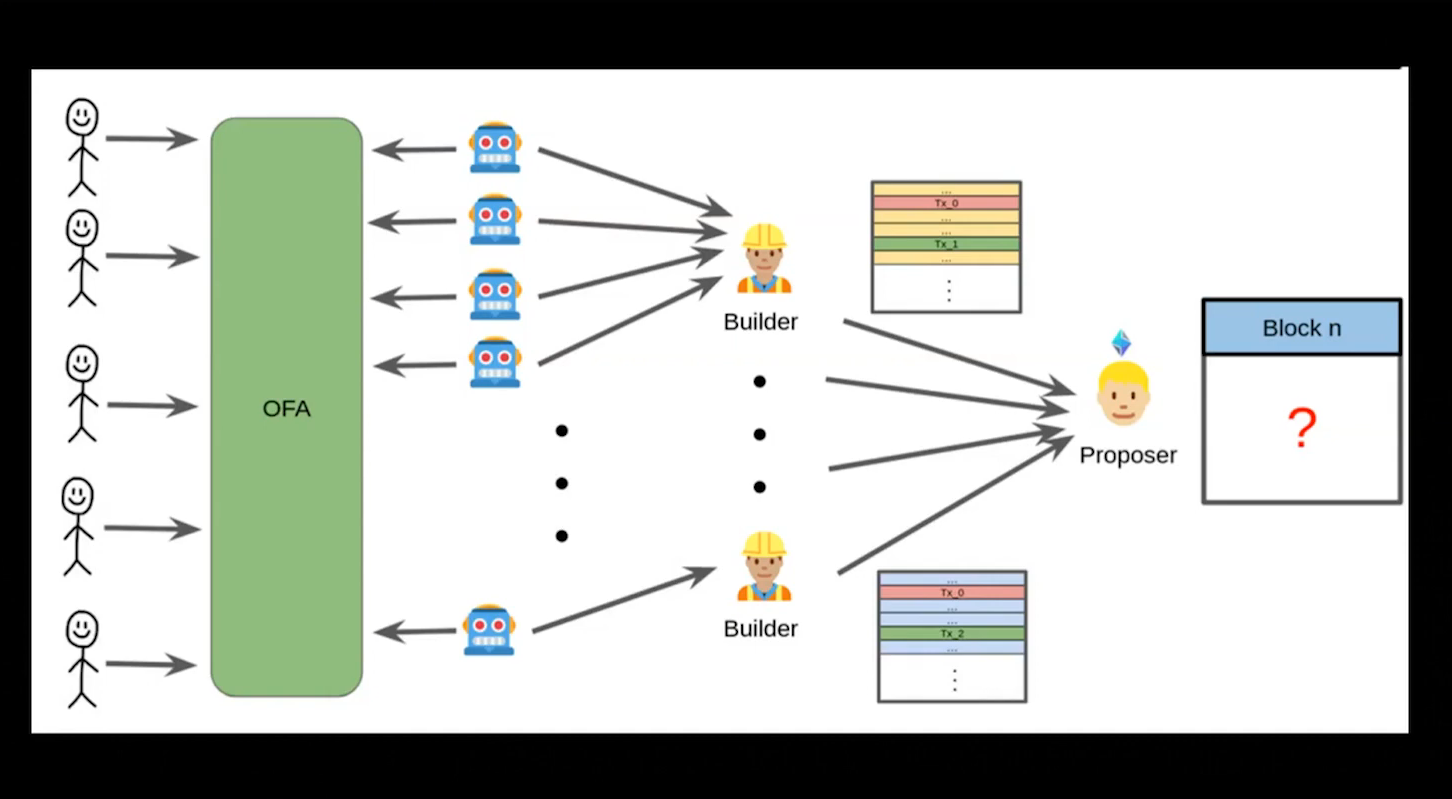

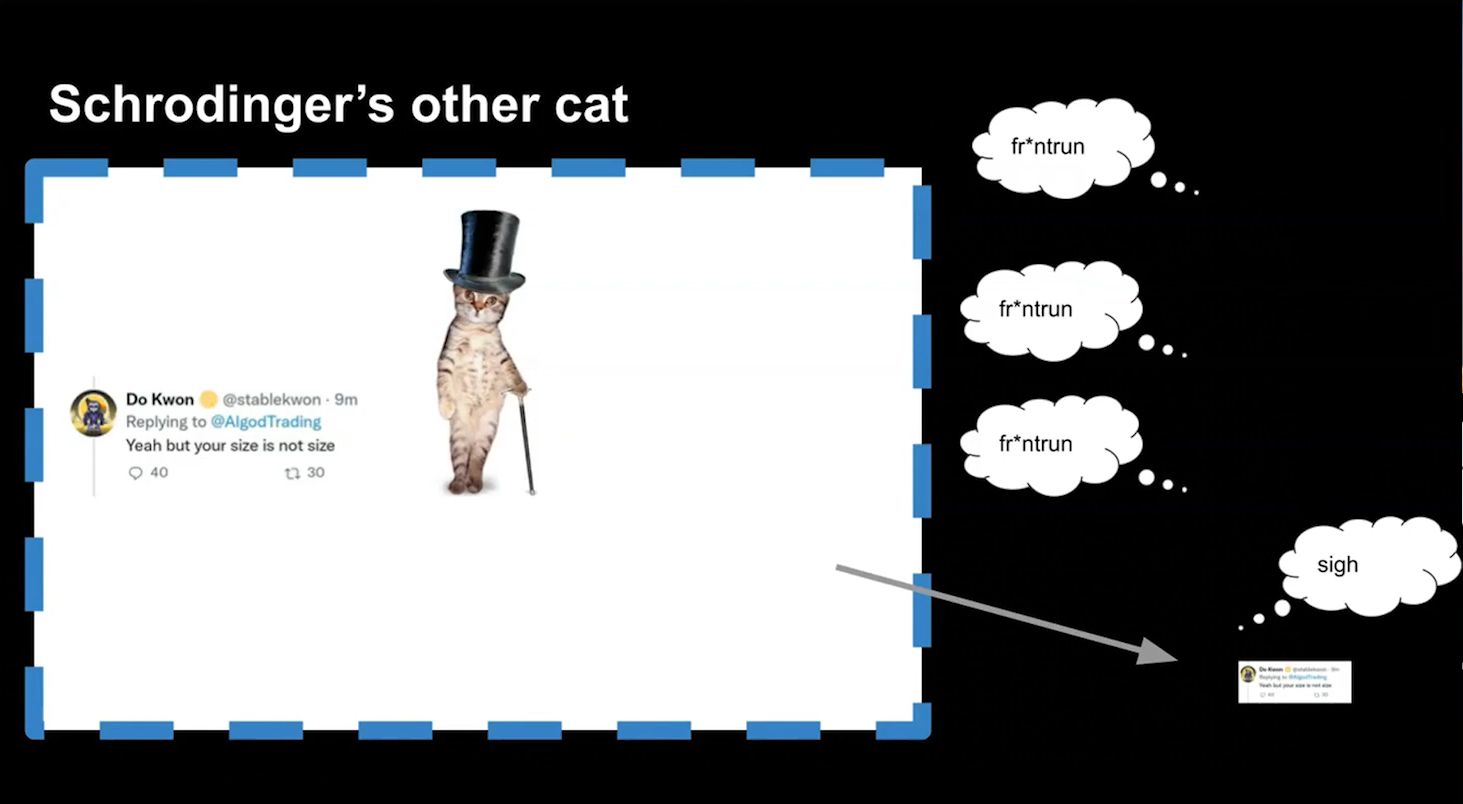

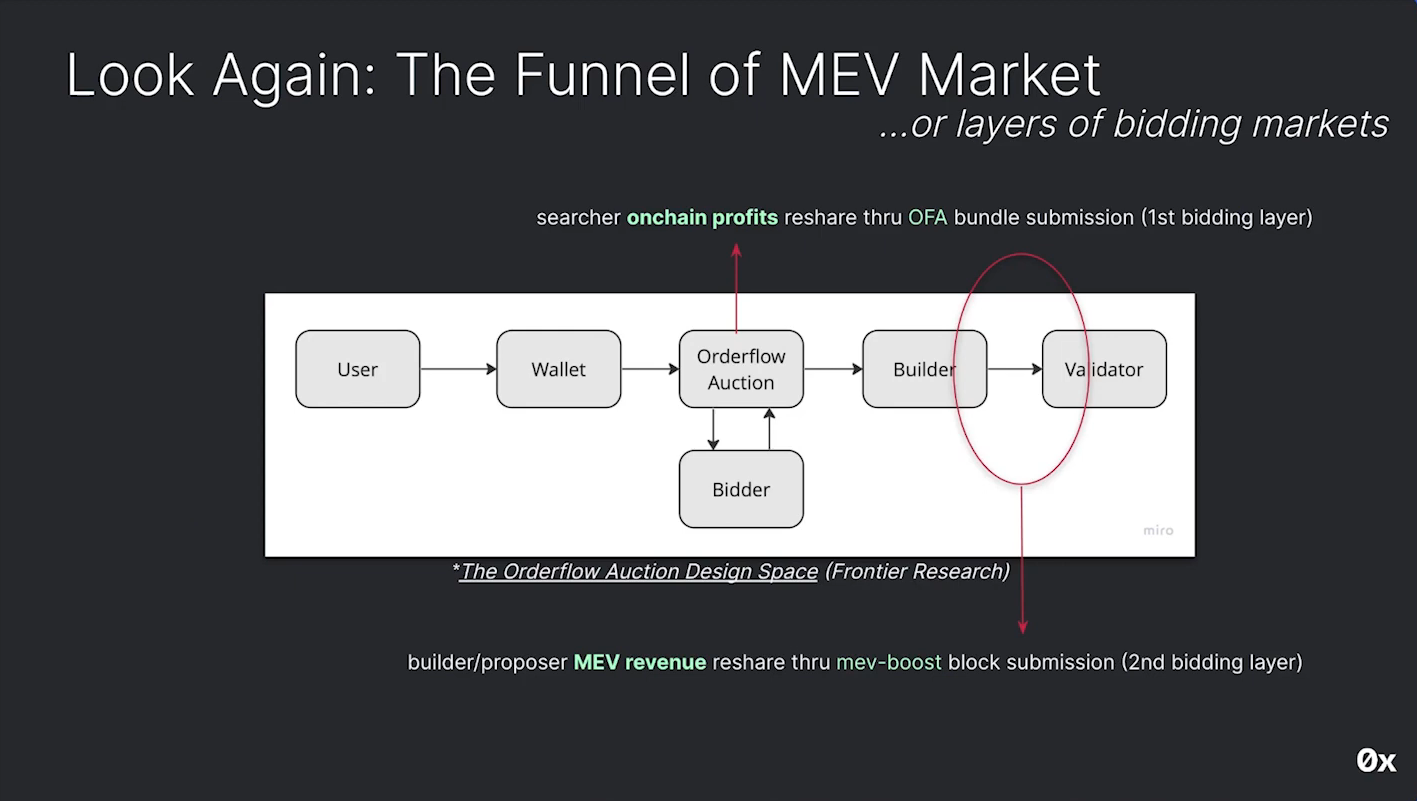

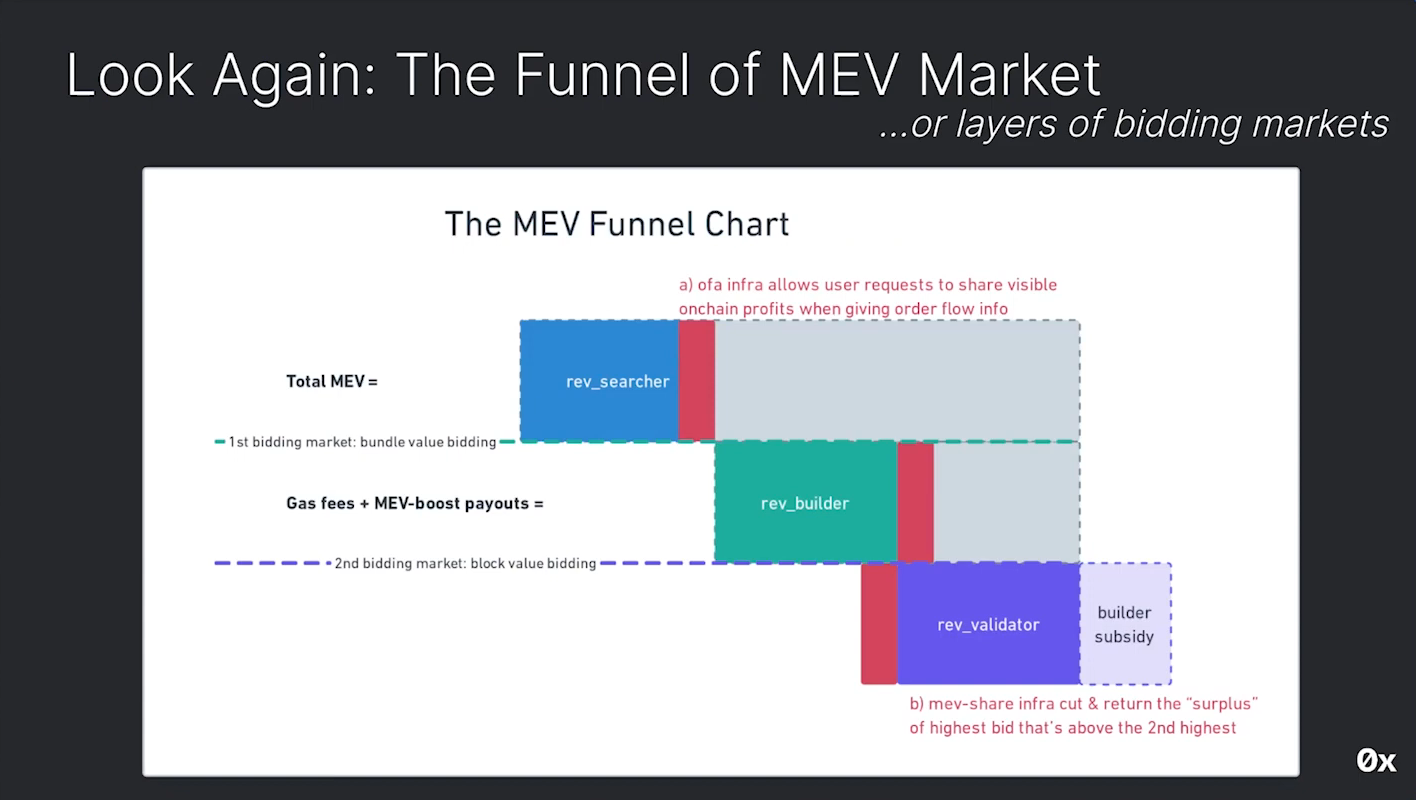

There is currently no competitive process in which parties bid to pay users back the actual value their orders create. Creating one is the core idea behind Order Flow Auctions (OFAs).

In an order flow auction, searchers compete by bidding for the right to execute users' trades, and higher bids return more value to the user. The challenge lies in implementing order flow auctions in a trustless way.
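The auction itself can be sketched in a few lines of Python. The sketch assumes a sealed-bid, highest-bid-wins format and a fixed user rebate of 90% (`user_share_bps`). Both the format and the split are hypothetical parameters, and production OFAs differ on each. Note that the sketch does nothing about the trust problem: whoever runs this function sees every bid.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class SearcherBid:
    searcher: str
    amount_wei: int  # what the searcher offers for the right to execute the order


def run_order_flow_auction(
    bids: list[SearcherBid], user_share_bps: int = 9_000
) -> tuple[Optional[str], int]:
    """Sealed-bid OFA sketch: the highest bidder wins execution rights and a
    fixed share of its bid (in basis points) is rebated to the user."""
    if not bids:
        return None, 0  # nobody bid: the order is simply executed as-is
    best = max(bids, key=lambda bid: bid.amount_wei)
    rebate_wei = best.amount_wei * user_share_bps // 10_000
    return best.searcher, rebate_wei


bids = [SearcherBid("s1", 100), SearcherBid("s2", 300), SearcherBid("s3", 200)]
print(run_order_flow_auction(bids))  # ('s2', 270)
```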

Wallets overview (11:45)

Wallets need to monetize their service. They aggregate user order flow and send it through the supply chain, so they have significant power over where orders are executed. But if wallets strike private deals with builders, isn't that just TradFi again?

A more desirable approach is to create a permissionless system that avoids centralizing forces, especially through OFAs.

Application layer overview (12:30)

In general-purpose environments, a significant portion of the value generated by applications like Uniswap goes to Ethereum validators, searchers, builders, etc. Applications therefore aim to internalize MEV to capture more of this value for themselves:

- Osmosis implements a module (ProtoRev) in which its validators automatically perform arbitrages and capture the revenue for the application

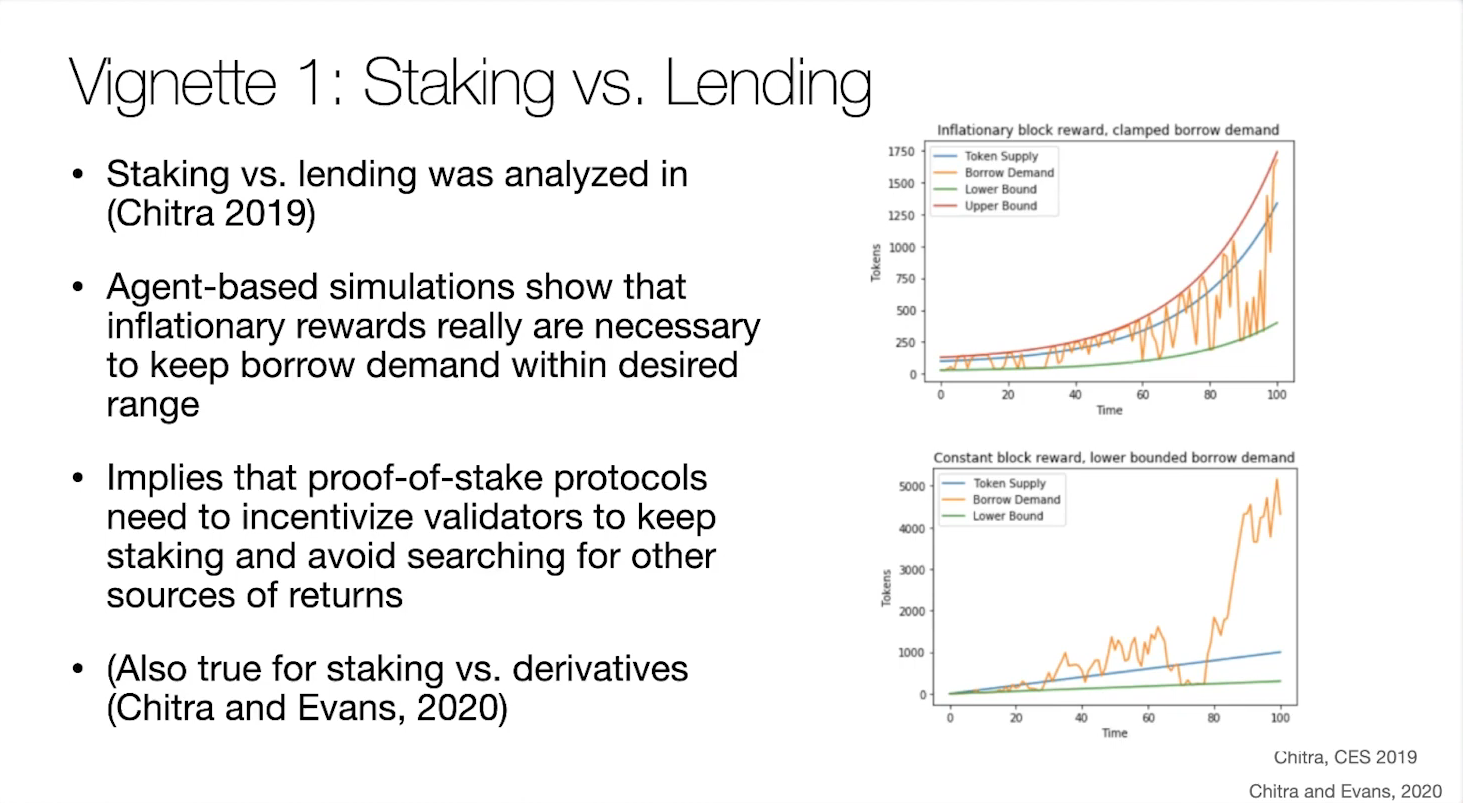

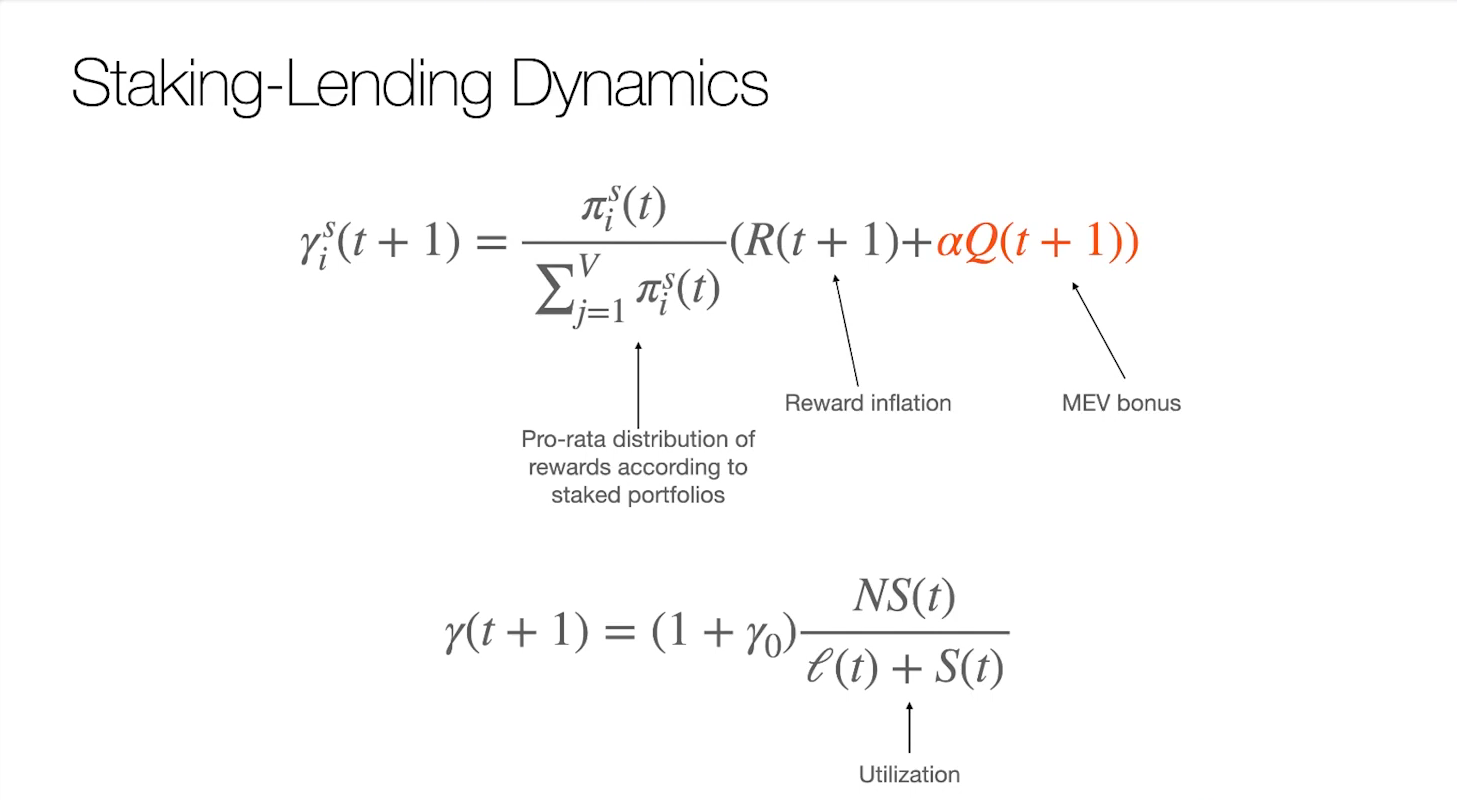

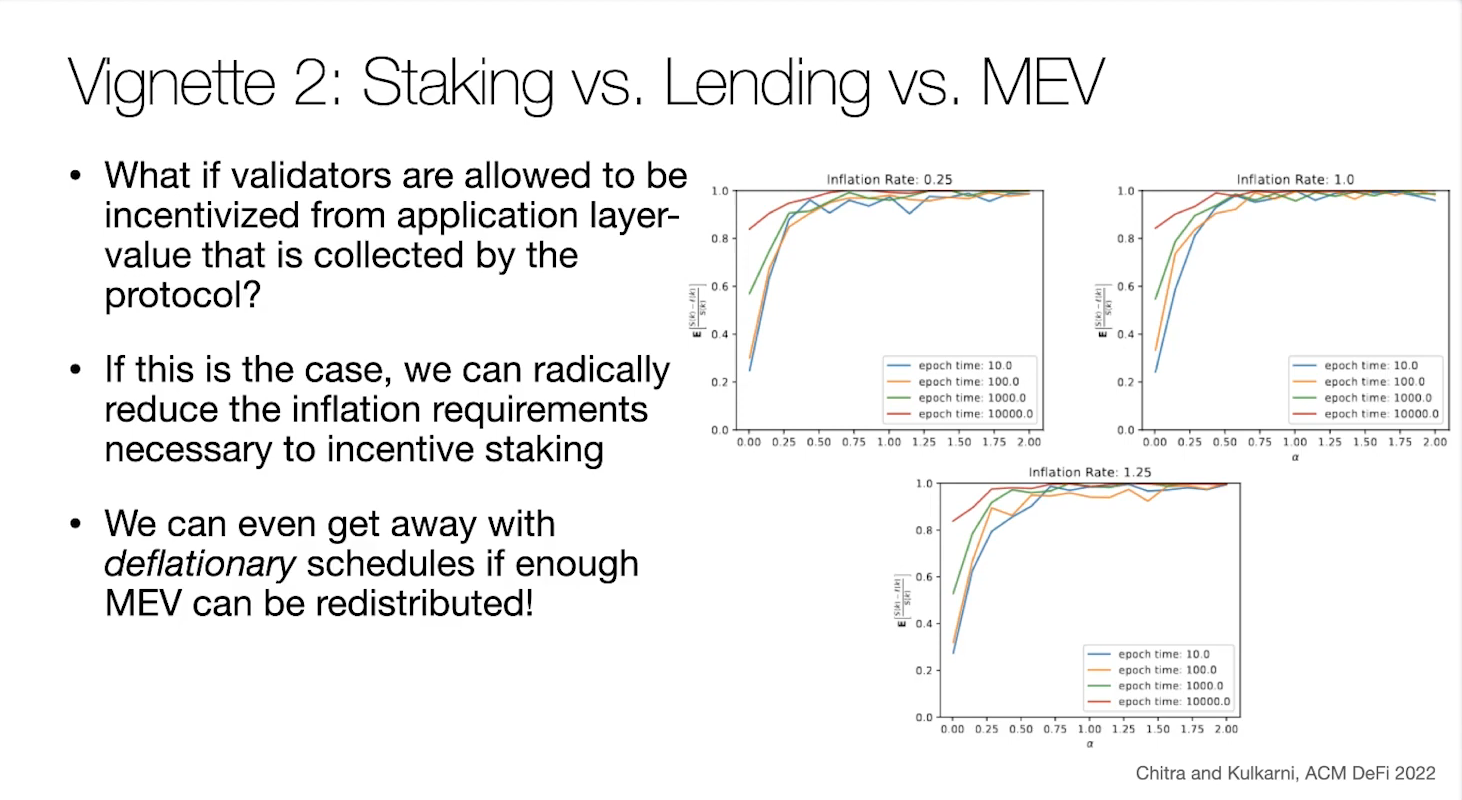

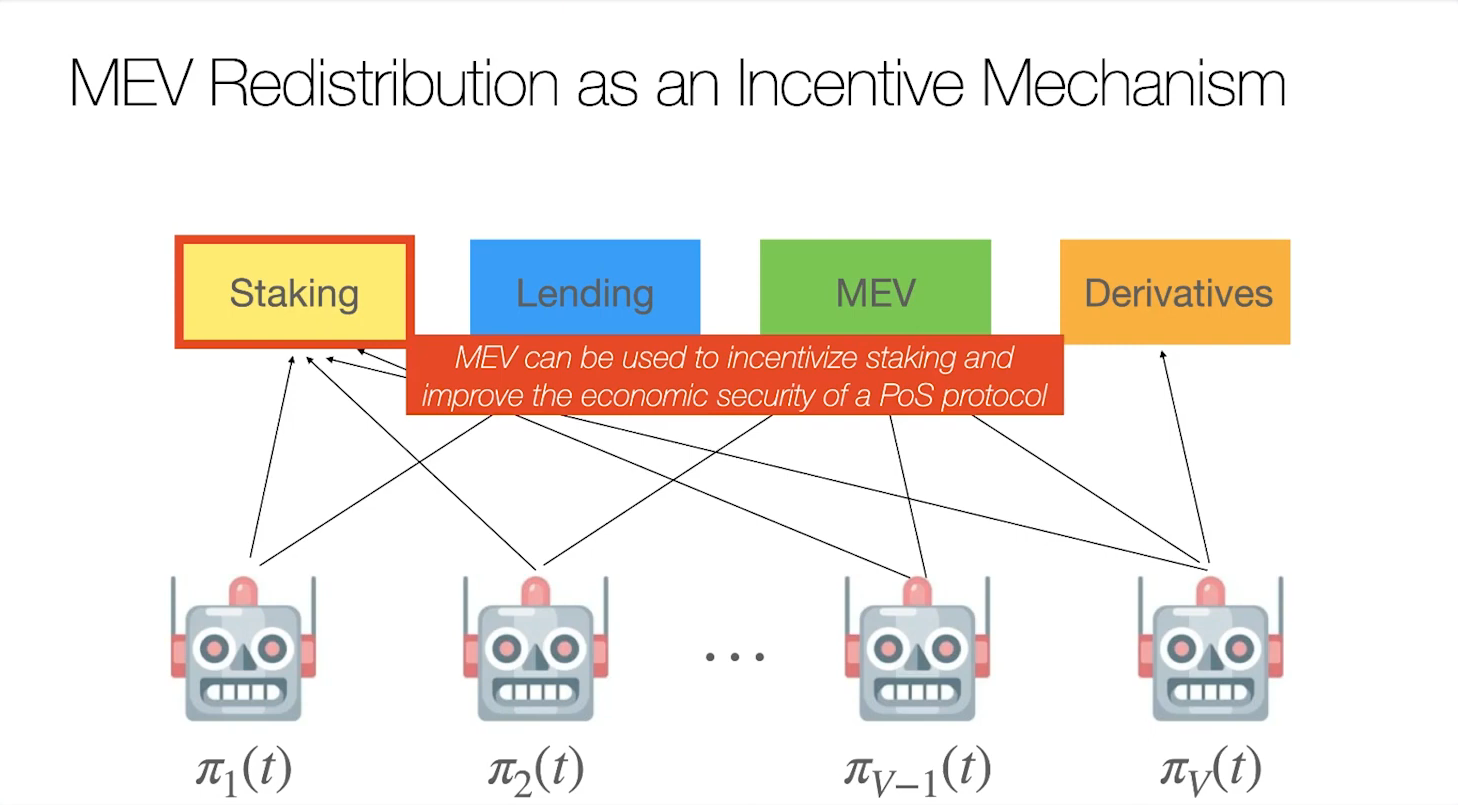

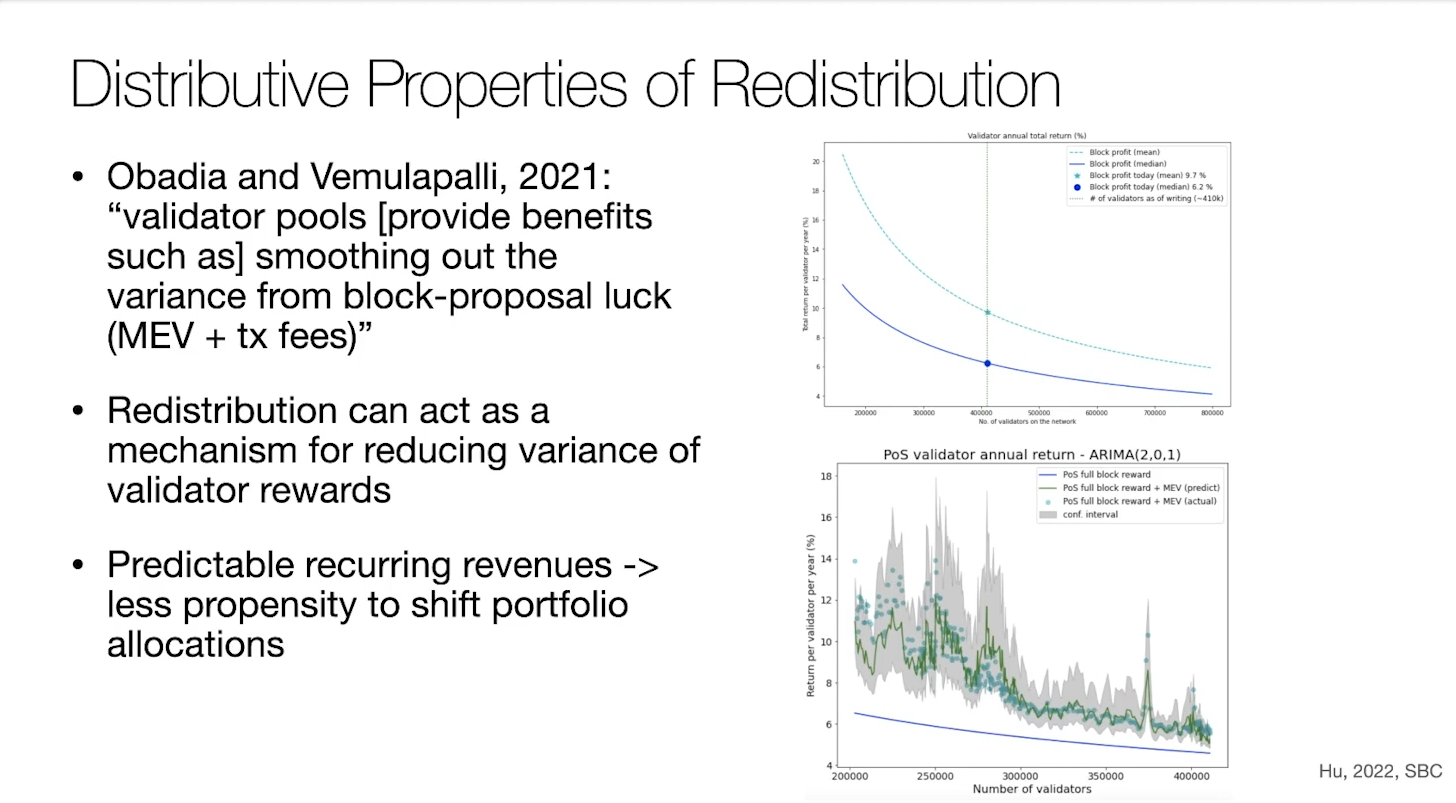

- Another solution is to improve Proof-of-Stake economic security itself with MEV redistribution

Layer 2 overview (13:15)

MEV is already a problem with centralized sequencers, and it gets worse with decentralization, which introduces more problems and open questions. For example, first-come, first-served ordering leads to latency games, and no fair game can be built on that.

Several shared sequencing designs are under development:

- Based rollups, as proposed by Justin Drake, let layer 1 itself sequence multiple rollups.

- Other projects like Espresso and Metro offer shared sequencing layers for multiple rollups.

Shared sequencers provide exciting benefits but raise complex questions regarding economics and trust in these systems.

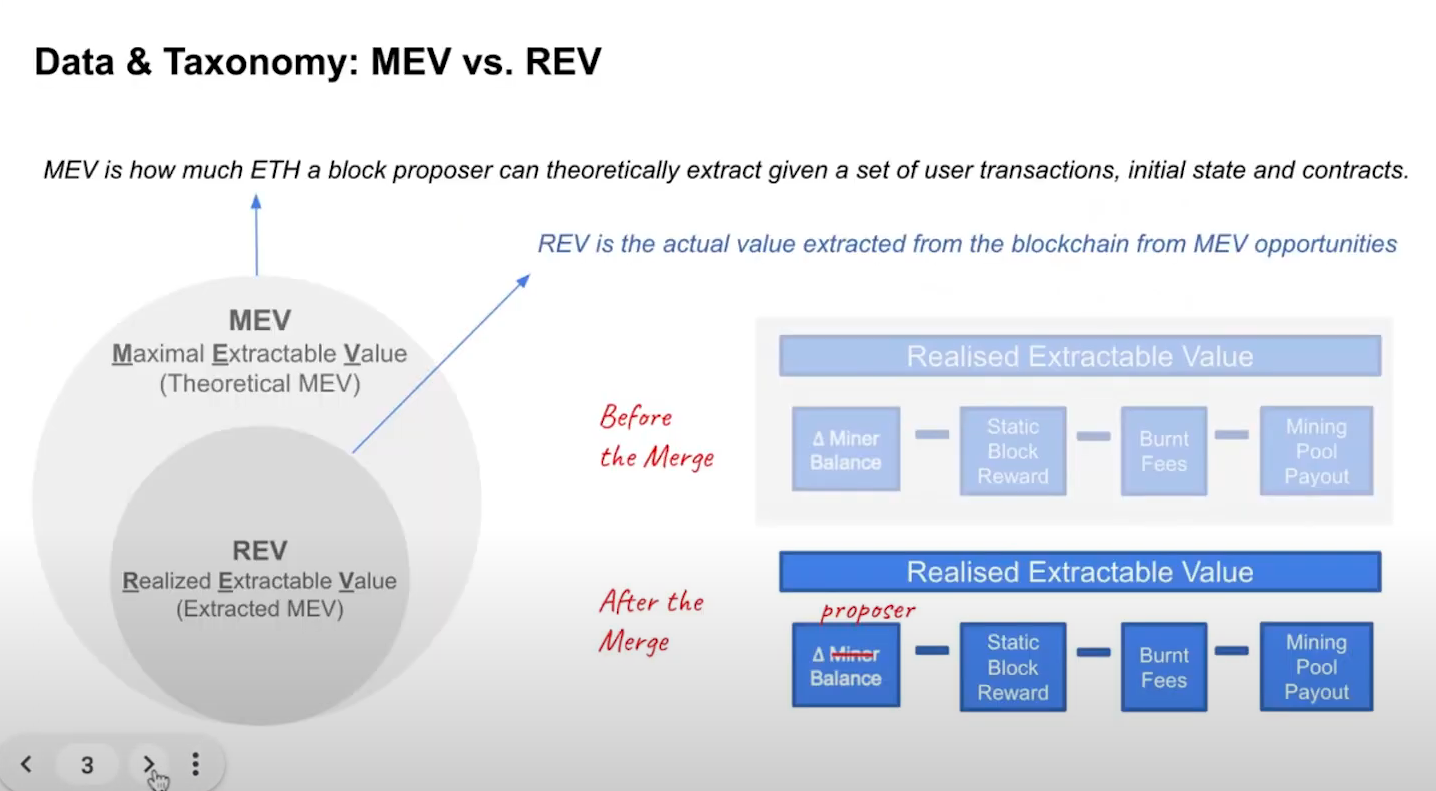

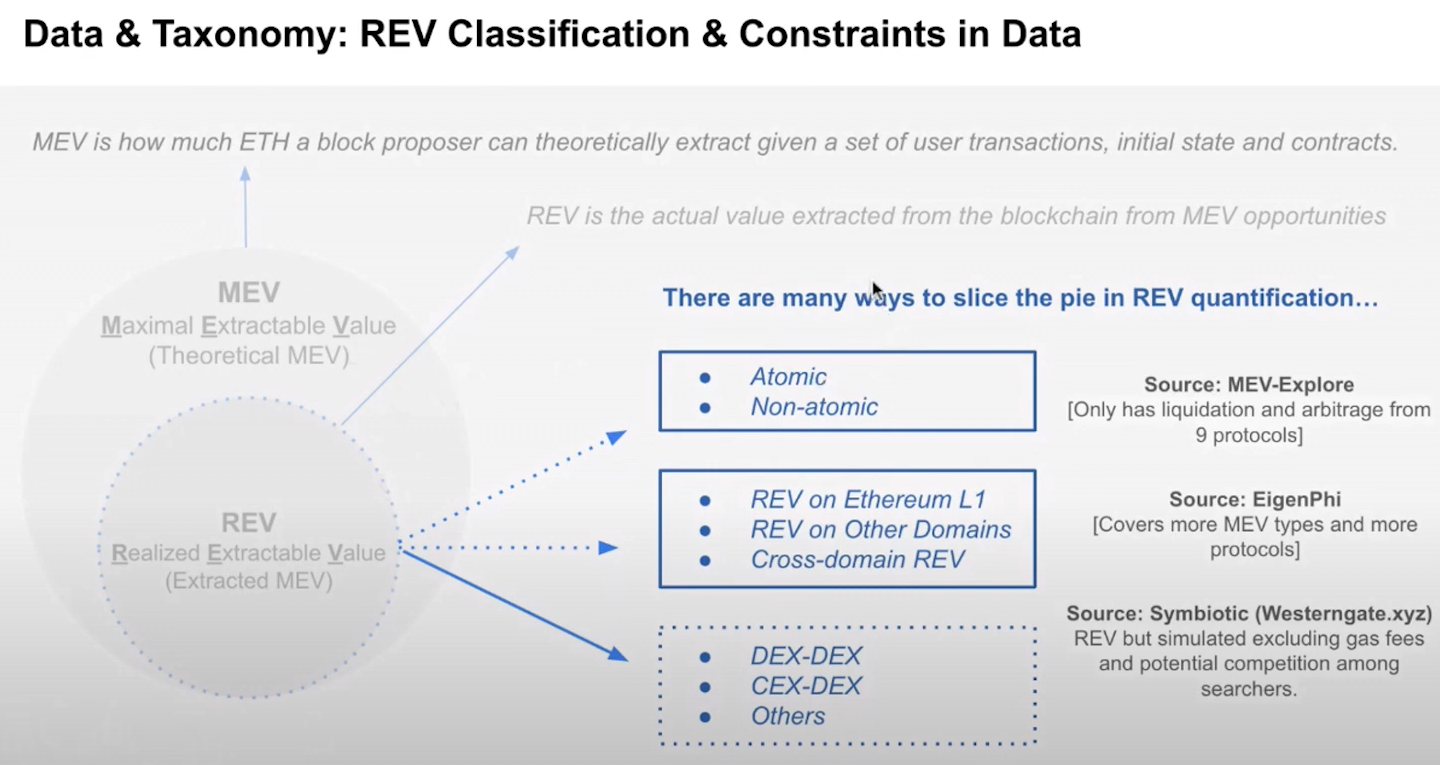

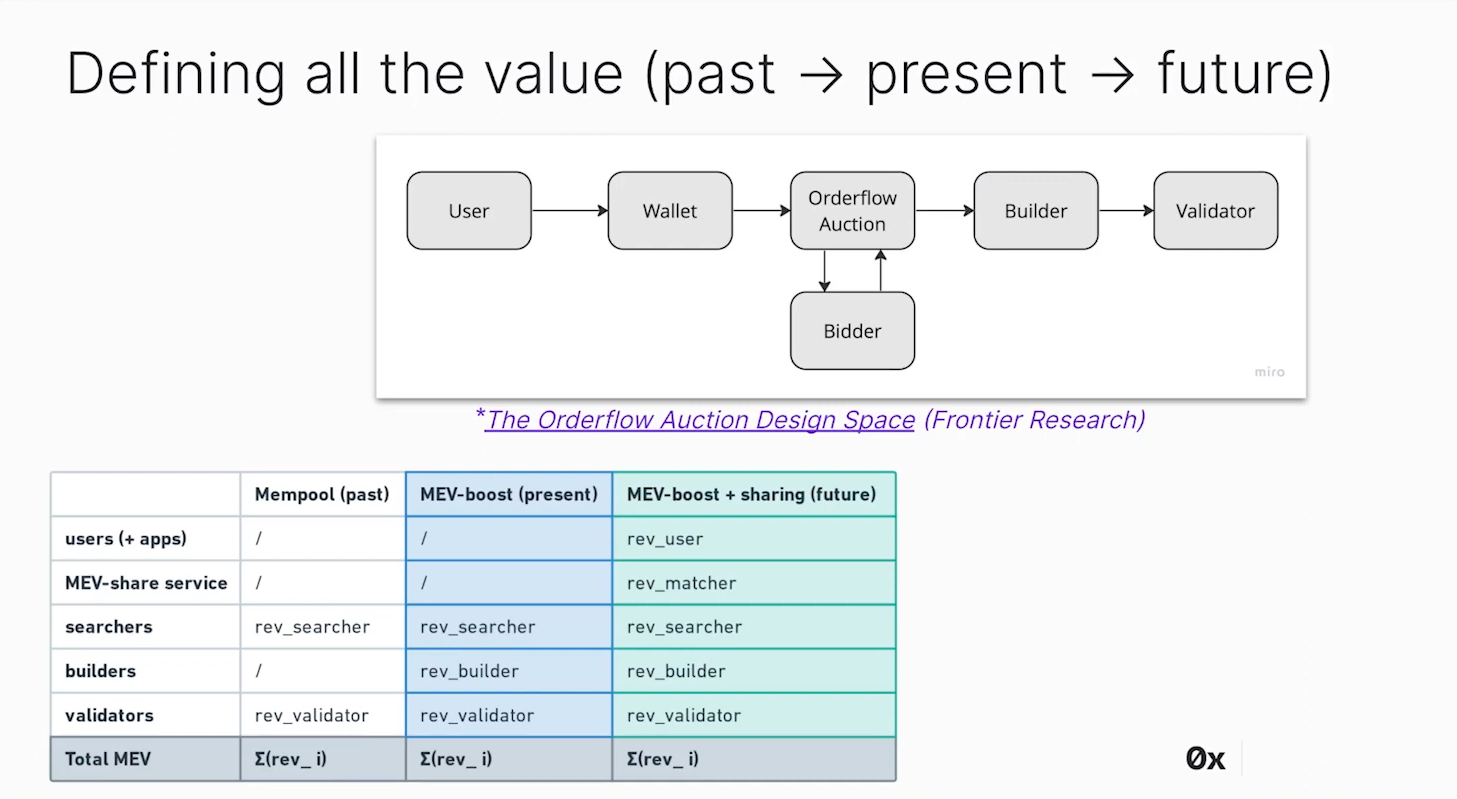

Taxonomy (0:45)

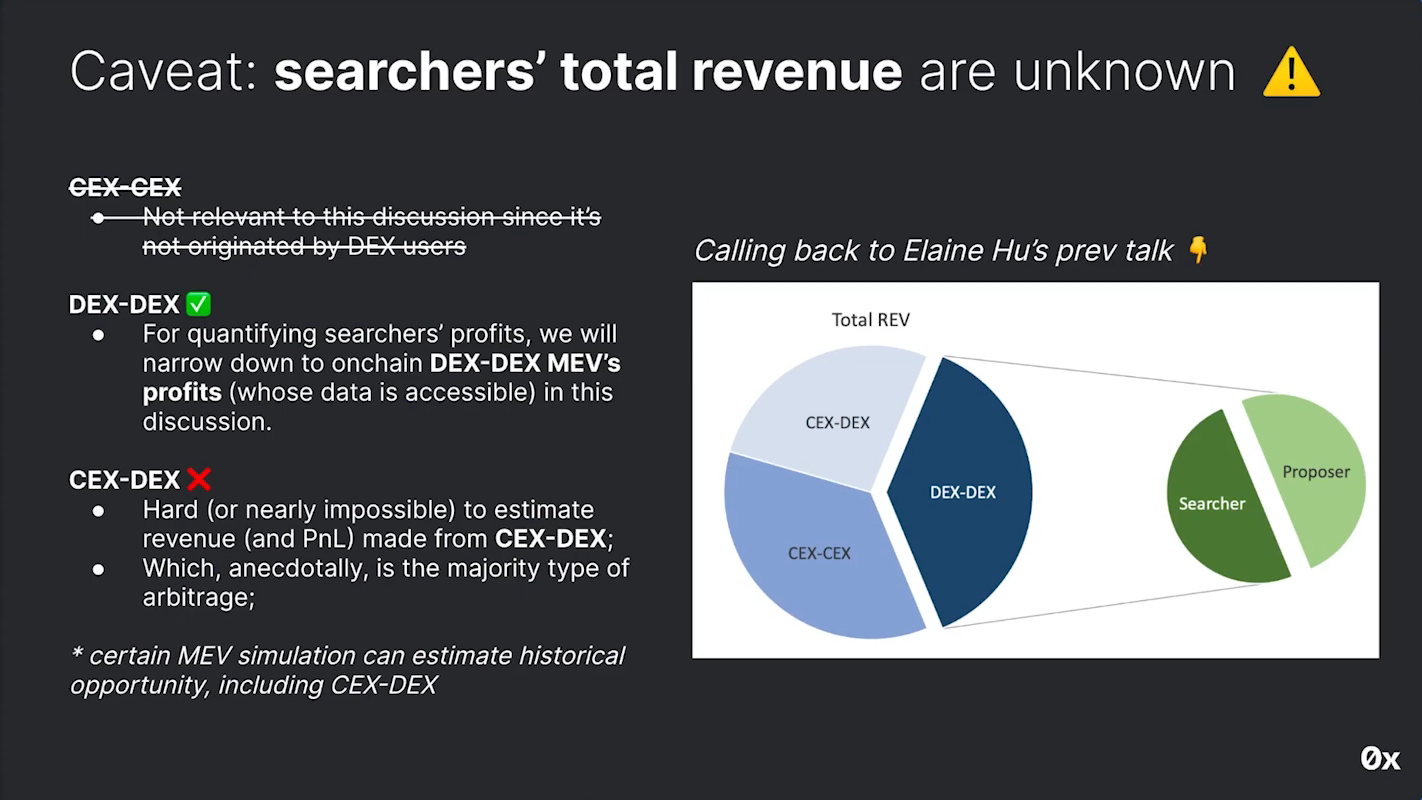

MEV (Maximal Extractable Value) is a theoretical value, whereas REV (Realized Extractable Value) is the value actually extracted from MEV opportunities.

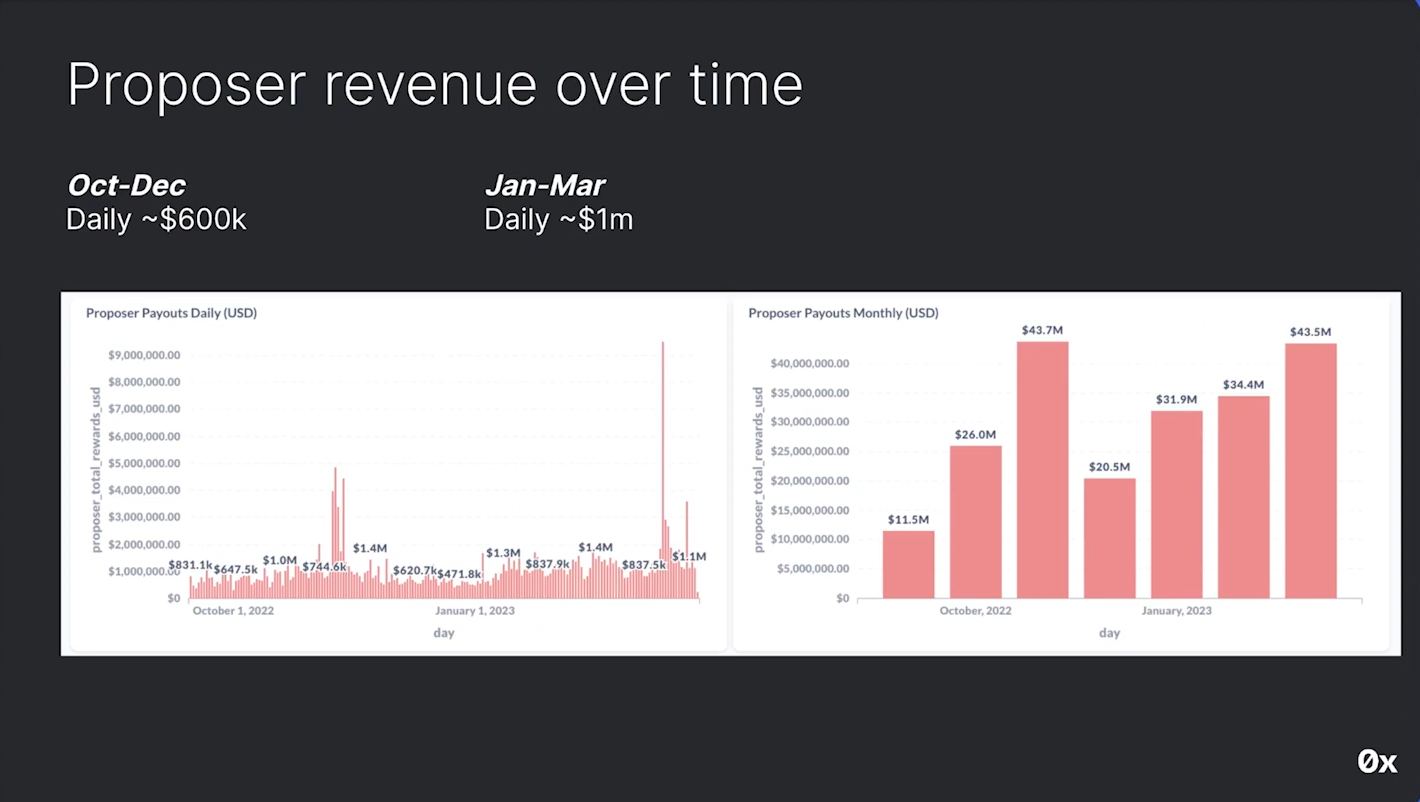

Before the merge, REV was measured from the miner's balance change. After the merge, it is measured from the proposer's balance change, which is basically the same data. ETH sent to proposers is therefore used as an approximation of total REV.
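In practice, the approximation comes down to differencing balances. The minimal Python sketch below assumes we already have the proposer's (fee recipient's) balance immediately before and after each block, for instance from an archive node. The function names and balances are hypothetical. Real measurements must also filter out unrelated transfers to that address.

```python
def block_rev_wei(balance_before_wei: int, balance_after_wei: int) -> int:
    """REV proxy for one block: how much the proposer's balance grew."""
    return balance_after_wei - balance_before_wei


def total_rev_wei(balances: list[tuple[int, int]]) -> int:
    """Sum the per-block proxy over (before, after) balance pairs."""
    return sum(block_rev_wei(before, after) for before, after in balances)


# Hypothetical balances of a proposer's fee recipient around three blocks.
observed = [
    (10 * 10**18, 10 * 10**18 + 3 * 10**16),  # +0.03 ETH
    (5 * 10**18, 5 * 10**18 + 2 * 10**17),    # +0.2 ETH
    (7 * 10**18, 7 * 10**18),                 # nothing paid to the proposer
]
print(total_rev_wei(observed))  # 230000000000000000, i.e. 0.23 ETH
```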

The REV pie can be sliced in many ways:

- REV on Atomic and non-atomic arbitrages (MEV-Explore)

- REV on Ethereum L1, Other chains, Cross-chain (EigenPhi)

- REV on exchanges (Odos)

Elaine notes that these sources provide numbers on some MEV activities, but each represents only a small portion of the overall picture.

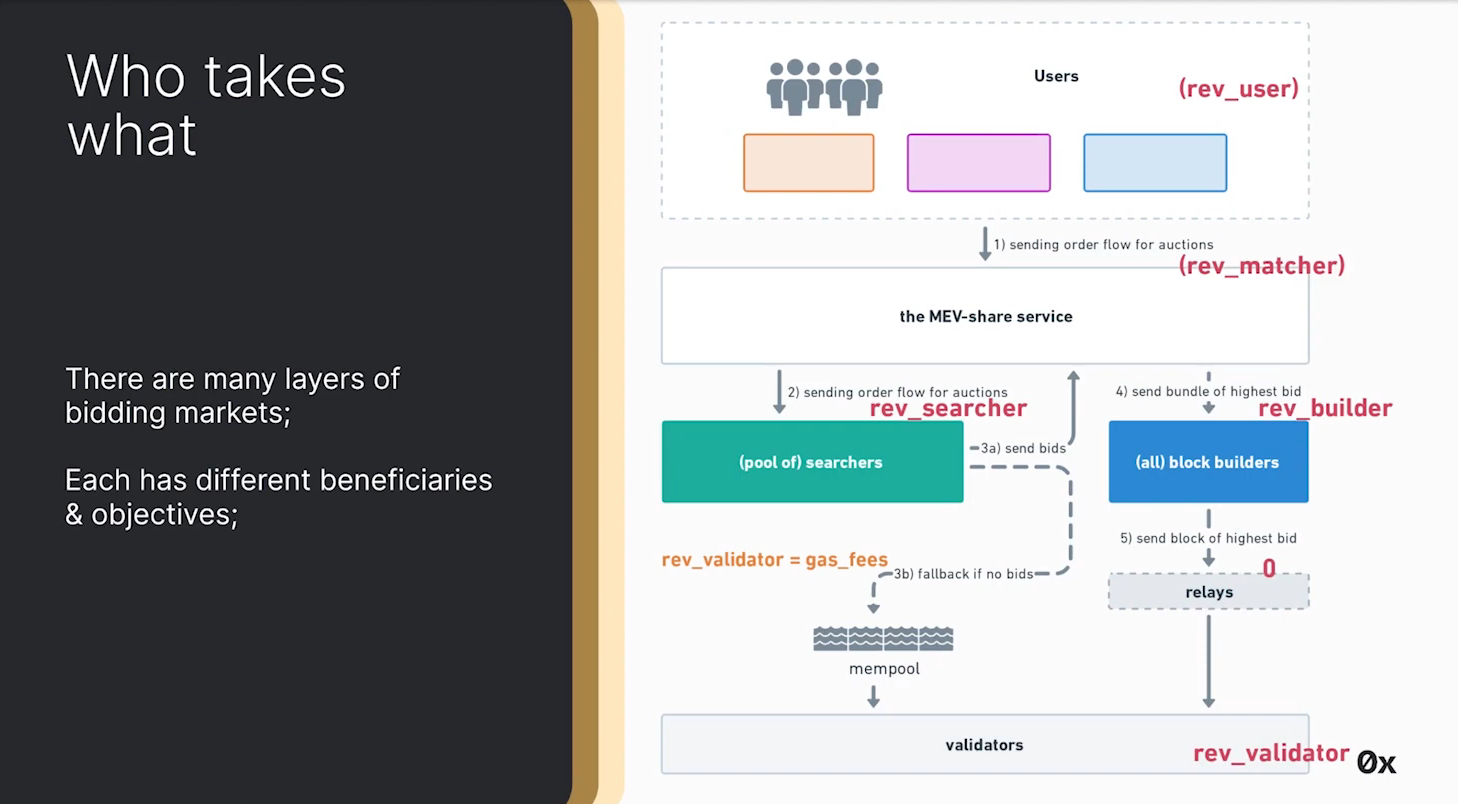

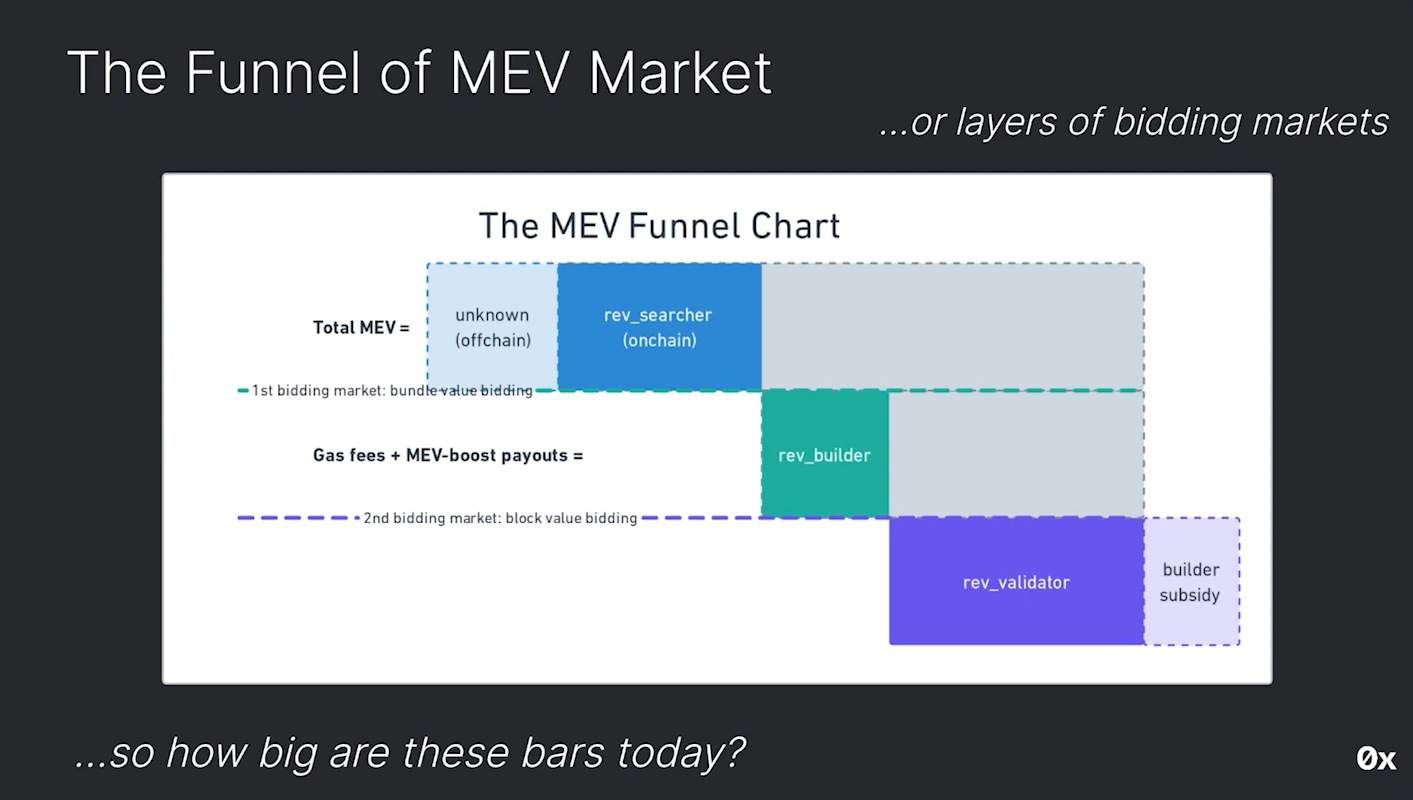

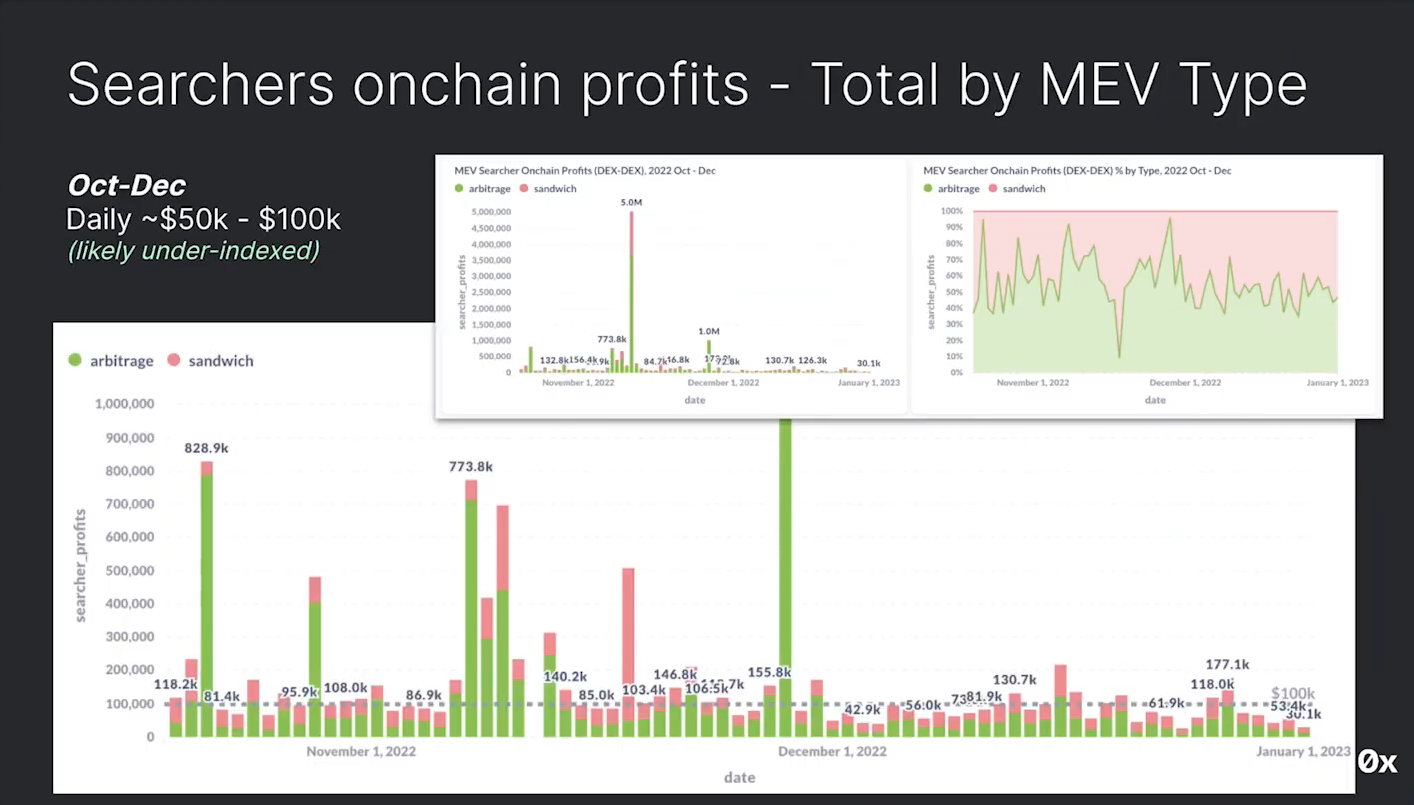

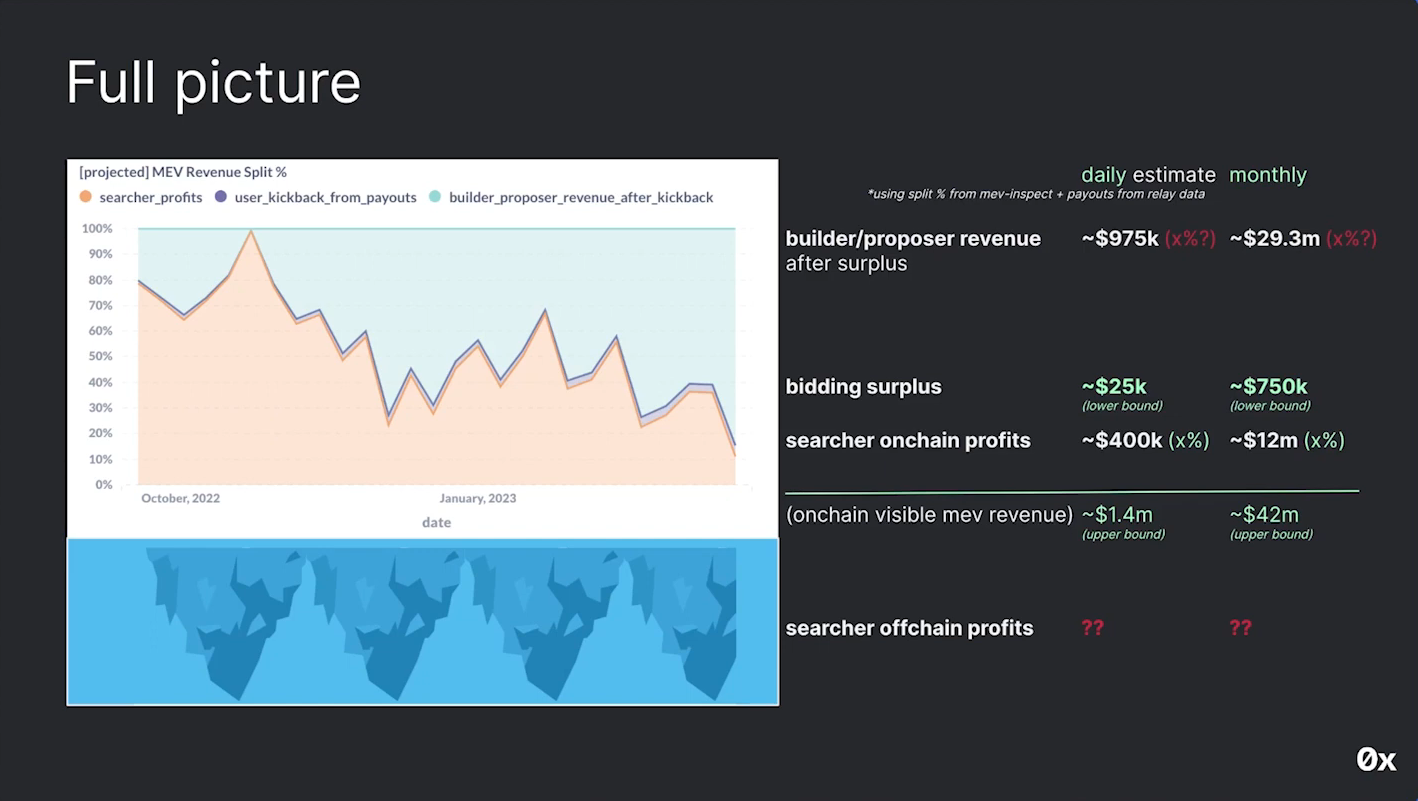

Breakdown of MEV Distribution (5:00)

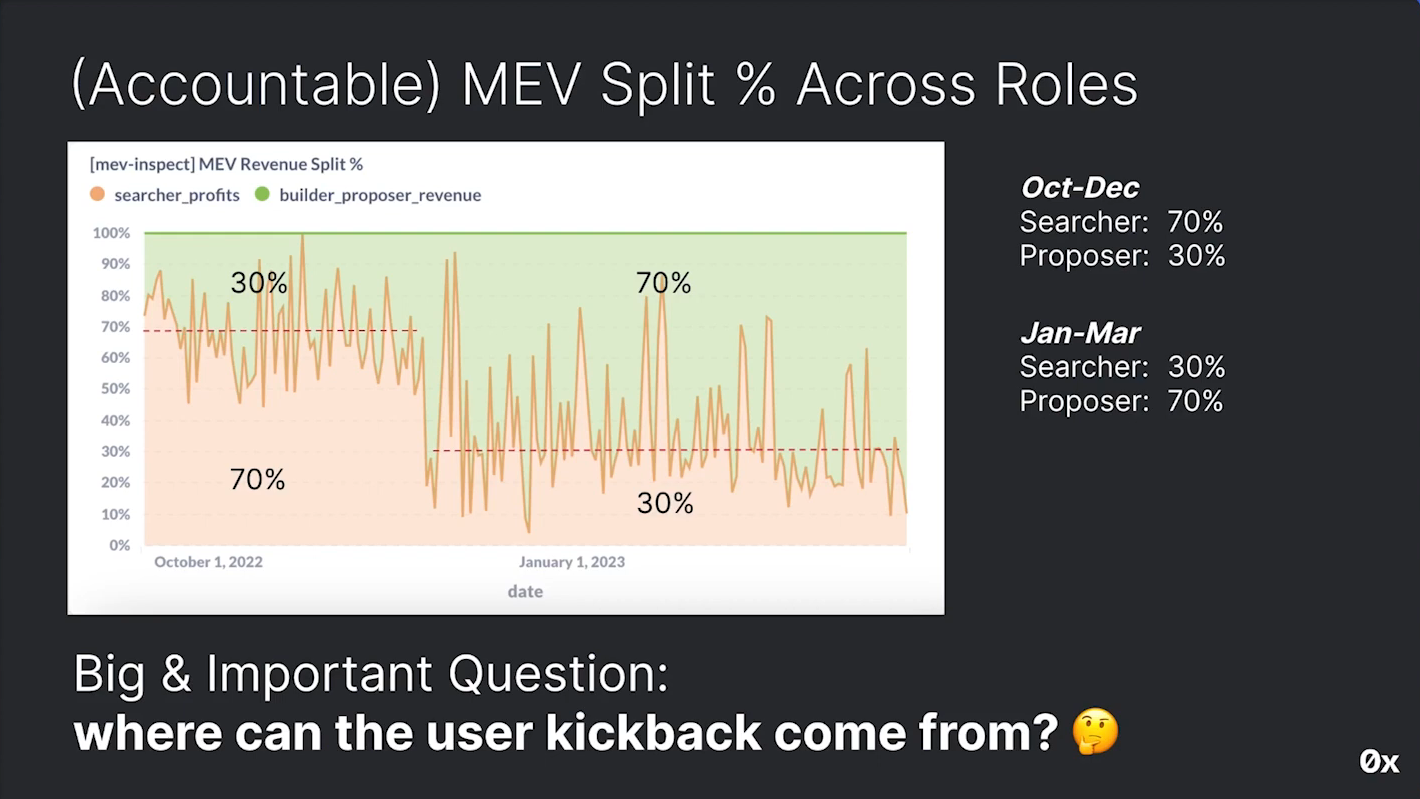

Assuming that only searchers and proposers receive MEV (excluding other parties involved), approximately 48% goes to searchers and 52% goes to proposers.

Relay competition has increased since the merge. Initially, there were only the Flashbots and Block relays, but now there are multiple options, and Flashbots' share of relay usage has been decreasing over time.

MEV accelerated the latency game

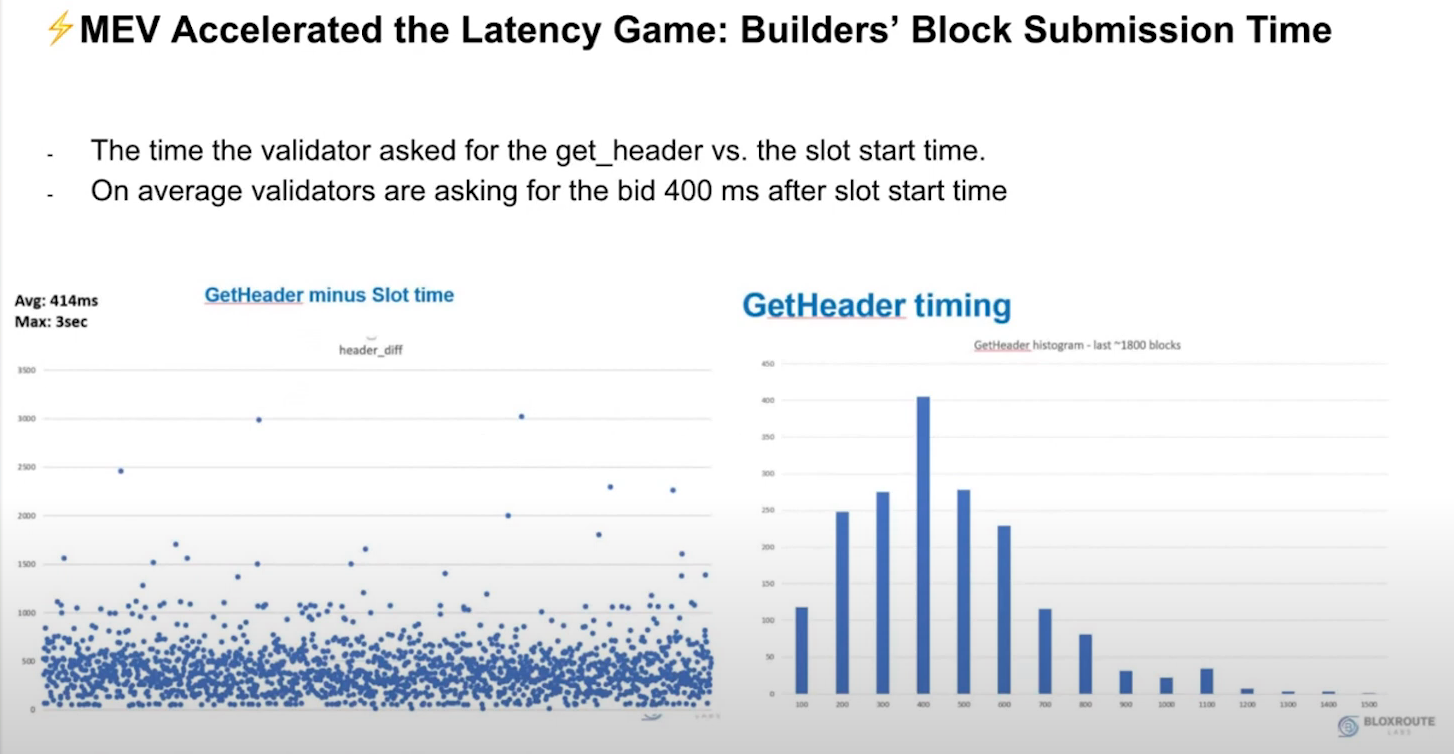

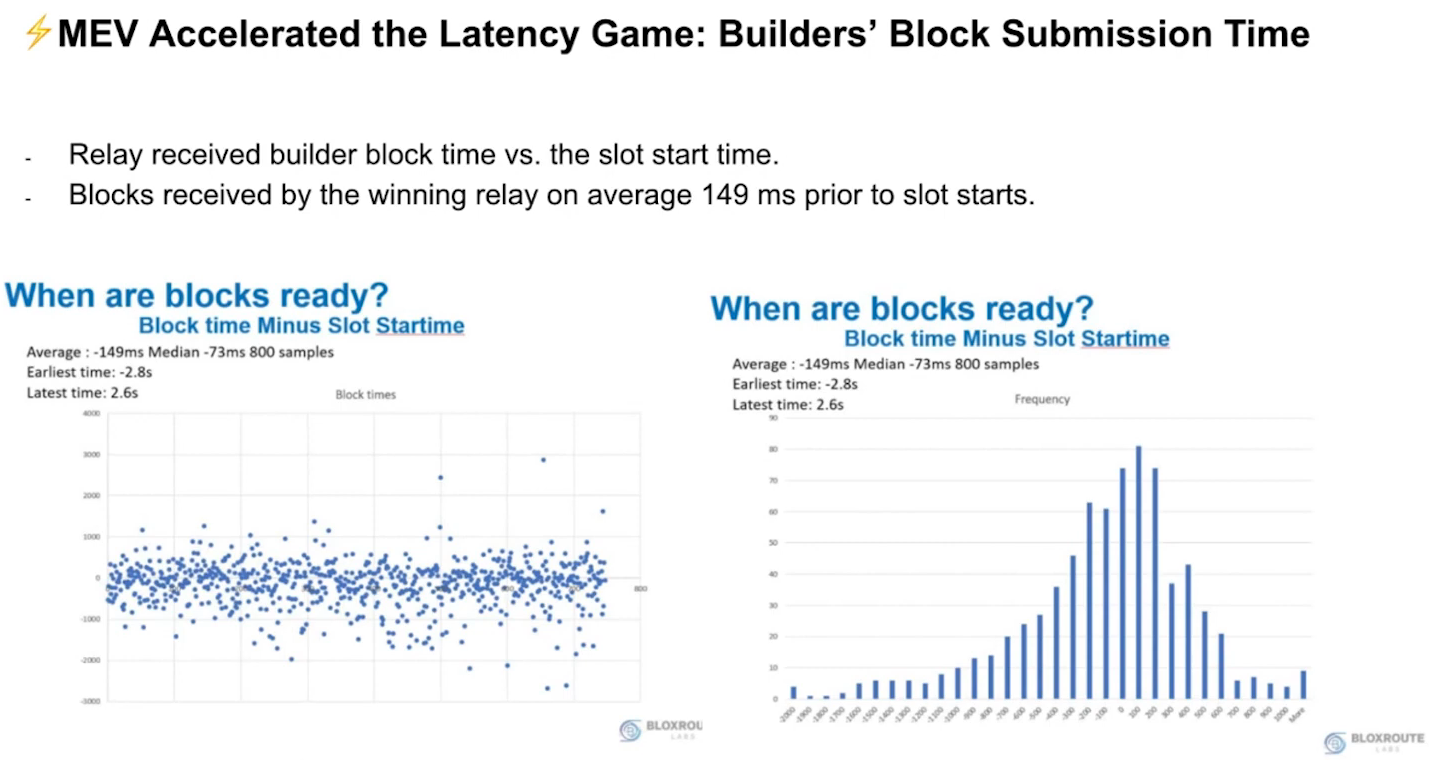

Builders' block submission time (7:15)

- On average, validators request the bid around 400 milliseconds after the slot starts

- On average, the winning block arrives approximately 149 milliseconds before the slot starts

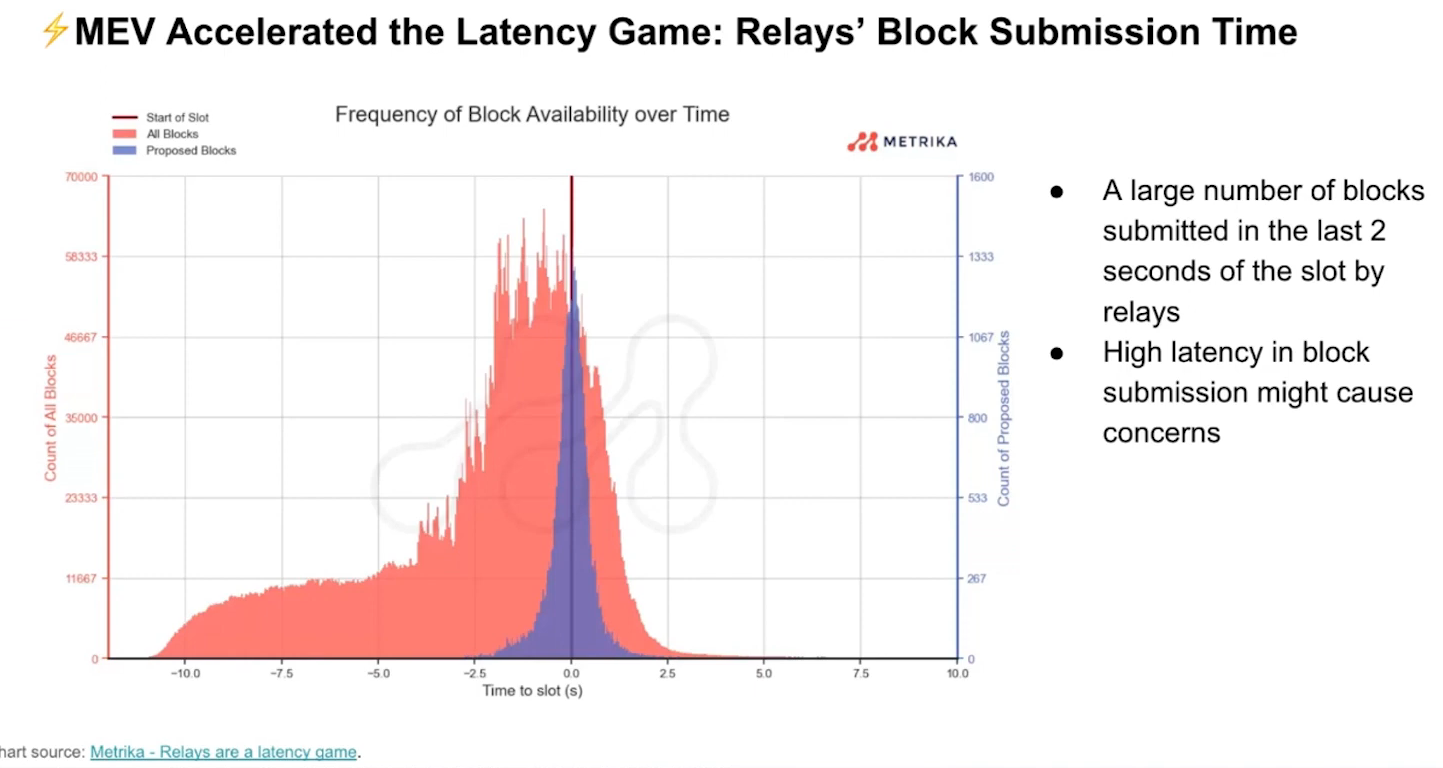

Impact of Block Submission Latency (8:00)

Most blocks are submitted in the last two seconds of the slot, which makes block submission highly latency-sensitive. High latency in block submission can delay attestations and contribute to network congestion.
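A statistic like this can be reproduced from raw data by bucketing submission timestamps. The minimal Python sketch below assumes the timestamps have already been converted to seconds elapsed since the start of the slot. The function name and the sample times are hypothetical.

```python
SLOT_SECONDS = 12.0  # length of an Ethereum slot


def share_submitted_late(offsets_s: list[float], window_s: float = 2.0) -> float:
    """Fraction of submissions landing in the final `window_s` seconds of the
    slot. Offsets are seconds since the slot began (0 <= offset < 12)."""
    if not offsets_s:
        return 0.0
    cutoff = SLOT_SECONDS - window_s
    late = sum(1 for t in offsets_s if cutoff <= t < SLOT_SECONDS)
    return late / len(offsets_s)


# Hypothetical submission times for four blocks.
print(share_submitted_late([1.0, 10.5, 11.0, 11.9]))  # 0.75
```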

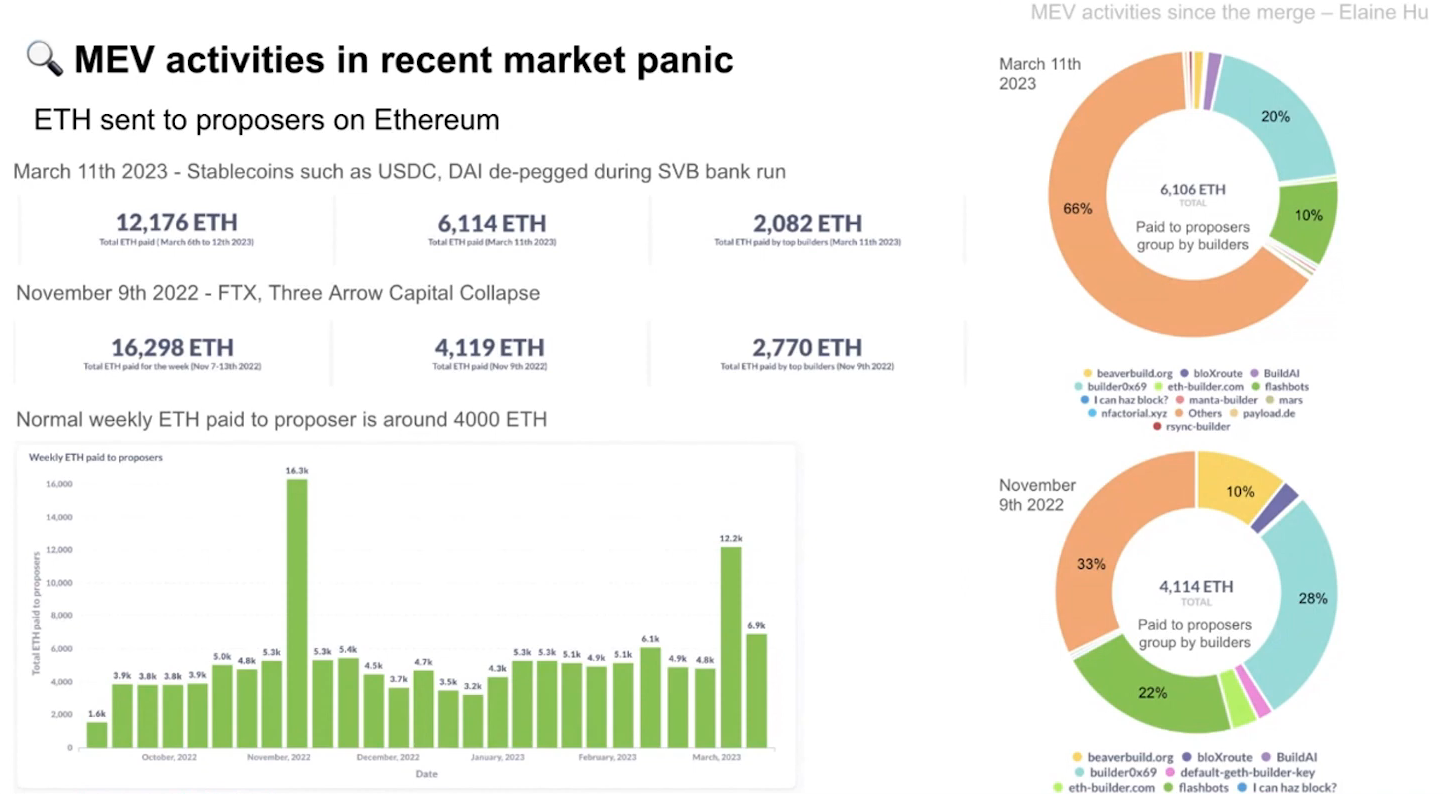

Market panic events like the FTX collapse and the SVB bank run have highlighted these issues. Fortunately, the market recovered from the SVB bank run more swiftly than from the FTX collapse.

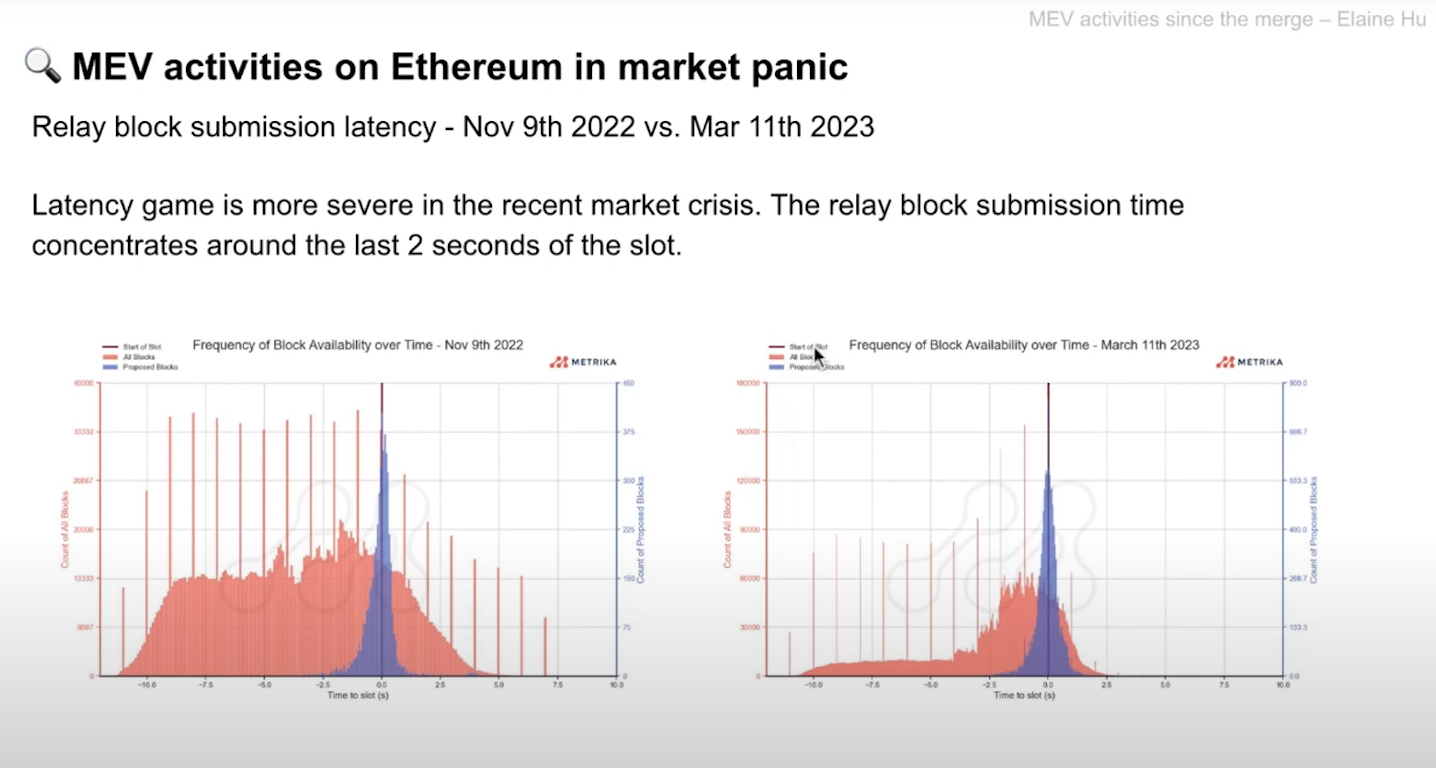

Latency analysis (17:00)

Latency during both the FTX and SVB events follows the overall trend. However, compared with latency before the merge, the latency game has become more severe, with most blocks submitted in the last two seconds.

This increased severity may be due to relay and builder competition to land blocks, rather than to the market panics themselves.

MEV activities in recent market panics

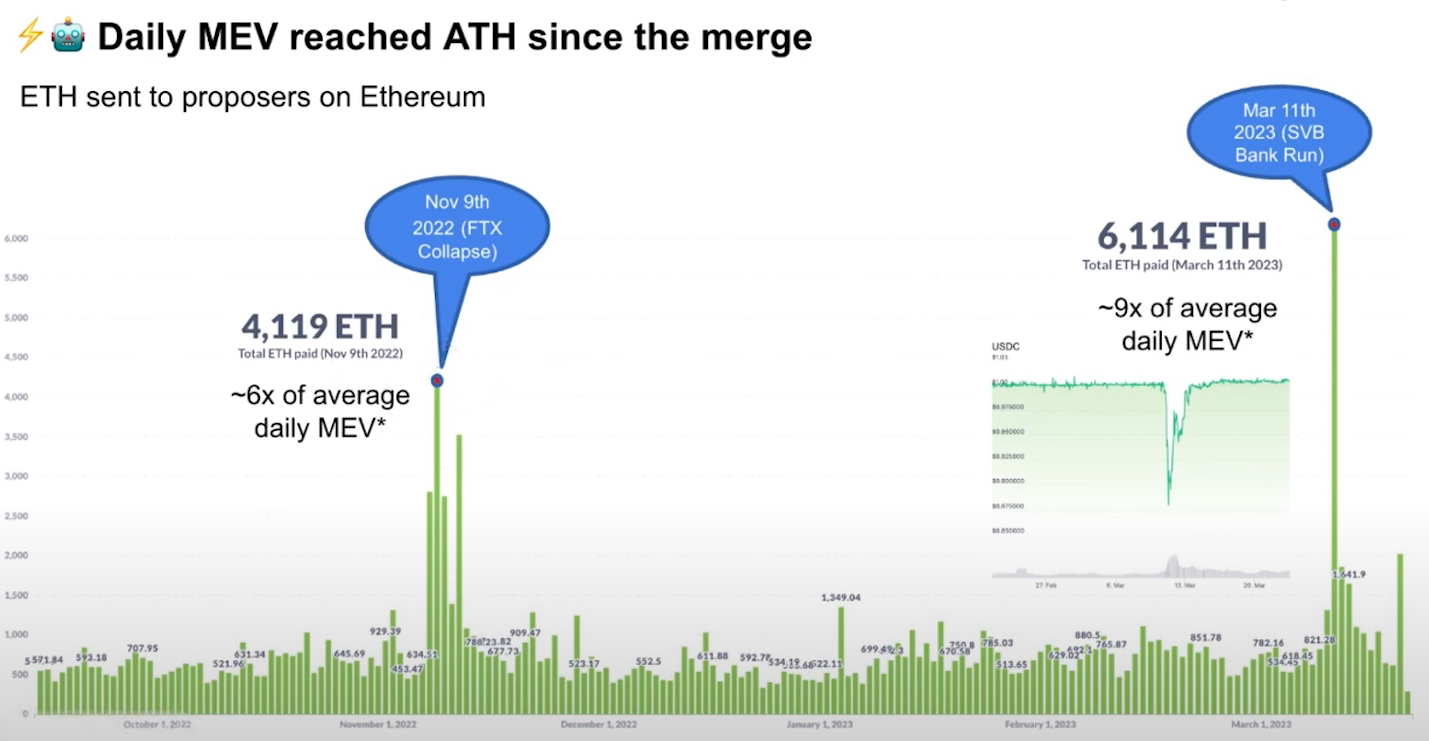

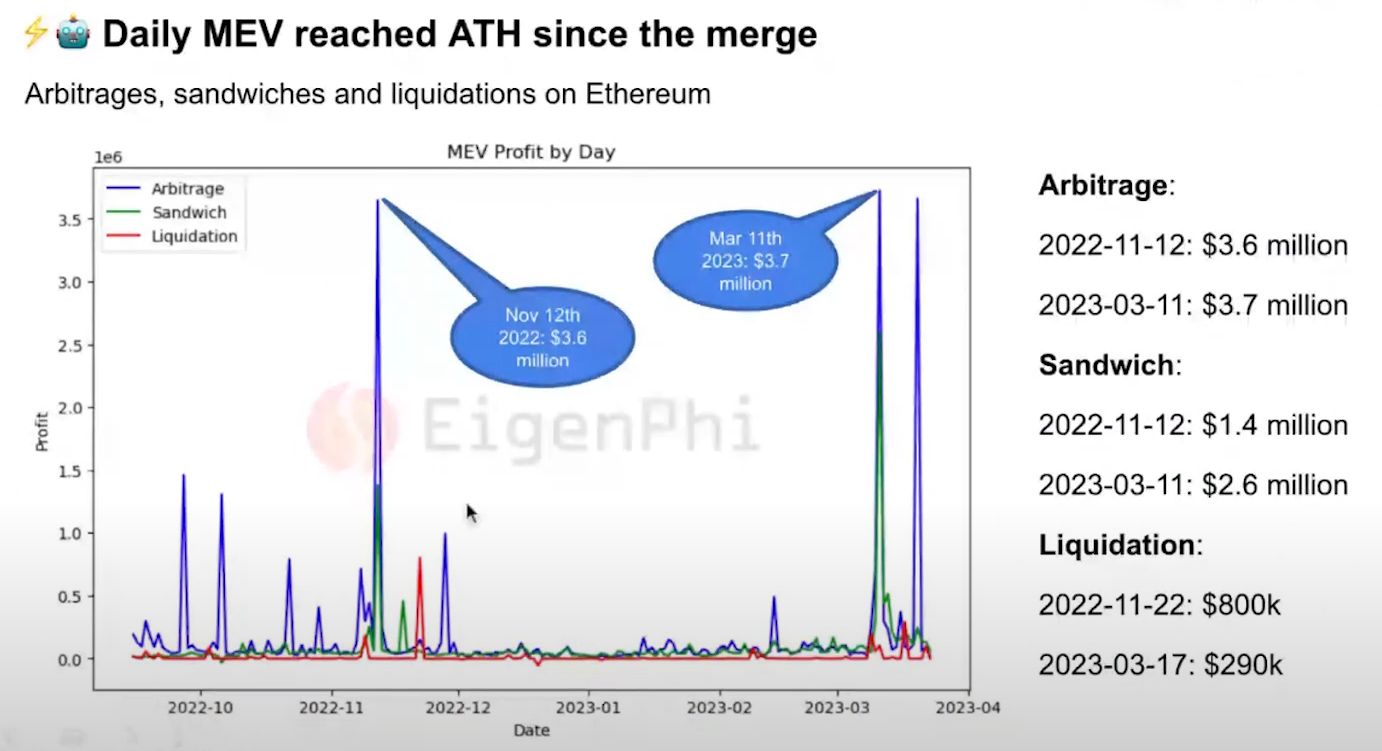

Daily MEV All-Time Highs (9:30)

Both the FTX collapse and the SVB bank run set all-time highs for daily MEV

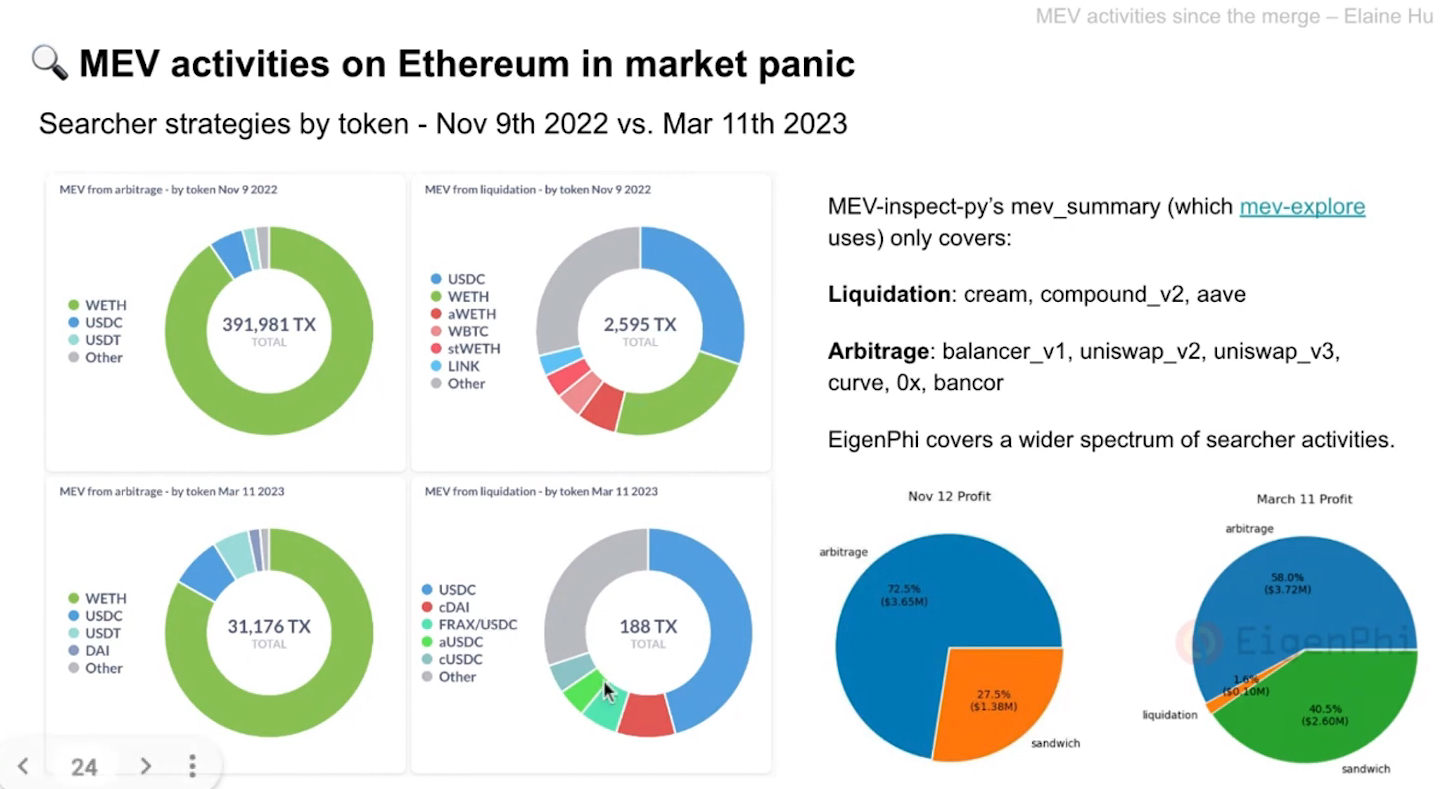

- Arbitrage accounts for a considerable share of MEV in both events (volatility = arbitrage opportunity)

- The SVB bank run led to the USDC depeg, which enabled sandwich attacks in addition to arbitrage and pushed daily MEV to a higher all-time high than during the FTX collapse

How to look into MEV activities (12:00)

Looking into two of the highest-MEV days on Ethereum recently:

- Total ETH paid to proposers on Ethereum

- Top builder VS Others by ETH paid & Number of blocks proposed

- Latency in block submission

Comparing cross-chain MEV strategies:

- Arbitrage tokens, chains and protocols

- Arbitrage strategy paths

- Arbitrage profits

MEV in Uniswap & Curve (15:00)

During the FTX collapse, most arbitrages happened on Uniswap V2 and V3. During the SVB collapse, however, arbitrage on the Curve stablecoin pool increased.

This increase in Curve pool arbitrage is expected, as the SVB event was not highly correlated with liquidation events.

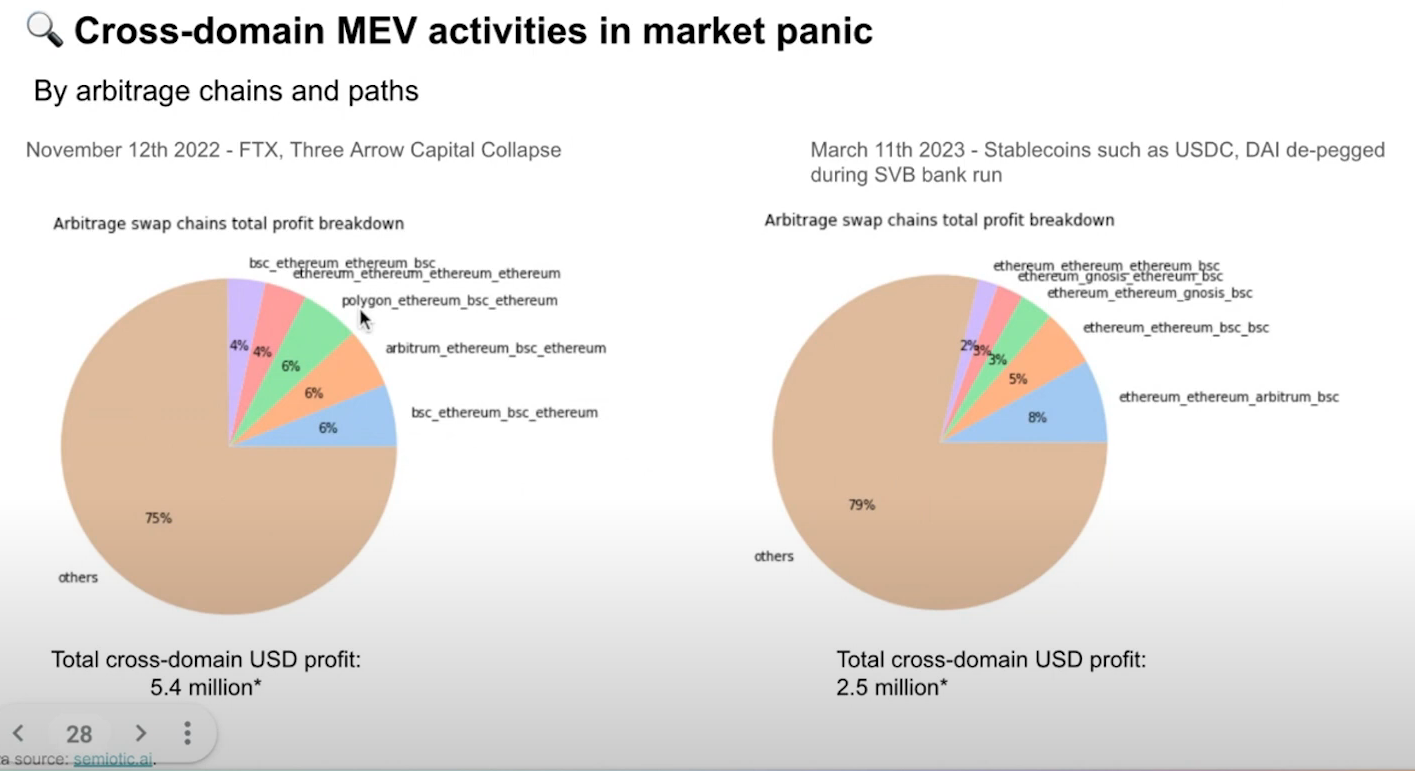

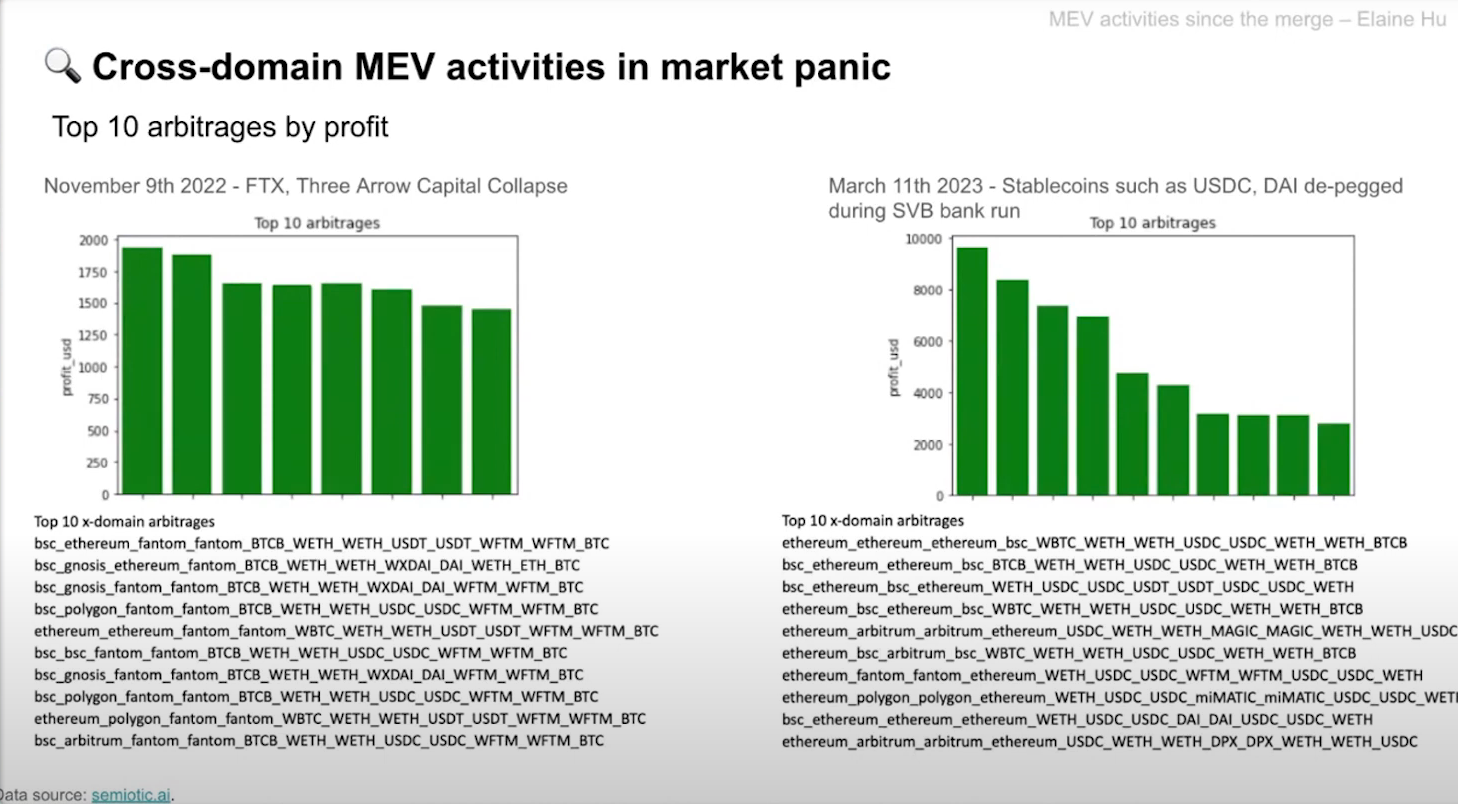

Cross-domain arbitrage activities (18:15)

Most arbitrage opportunities occur between stablecoins, Bitcoin, Ethereum, and other major tokens.

In November, arbitrage was mostly observed on Polygon and Arbitrum. Since March, Ethereum and BSC have been the most frequently arbed chains.

Regarding the top 10 arbitrages by profit, inaccuracies may exist due to missed arbitrage opportunities or missing data.

Summary points (20:45)

How to marry information and money

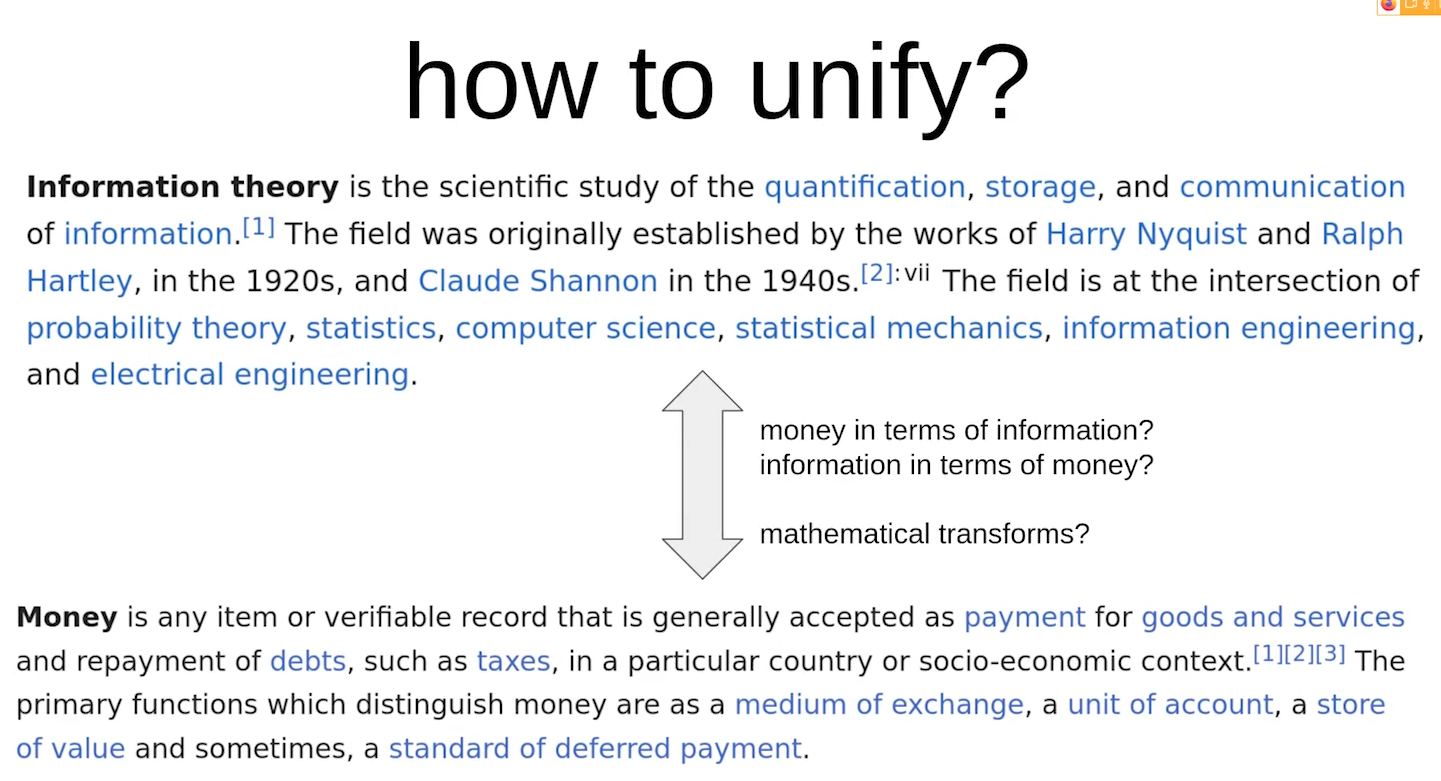

Some context (1:00)

- On one side, we have information-based fields of study (entropy, storage, cryptography, privacy...)

- On the other side, we have economics, which attempts to model human interactions (cryptocurrency, MEV-time auctions...)

Our objective is to marry these two disparate fields with a single, unifying set of abstractions that lets them talk to each other better.

There are similarities (2:15)

Information and money have similarities. For example, quantification plays a crucial role in both domains.

Phil suggests that a clean mathematical transform is needed to unify reasoning about money and information theory. The idea is not original, though. As we'll see, many people have been working on it.

So, what's the point? (4:00)

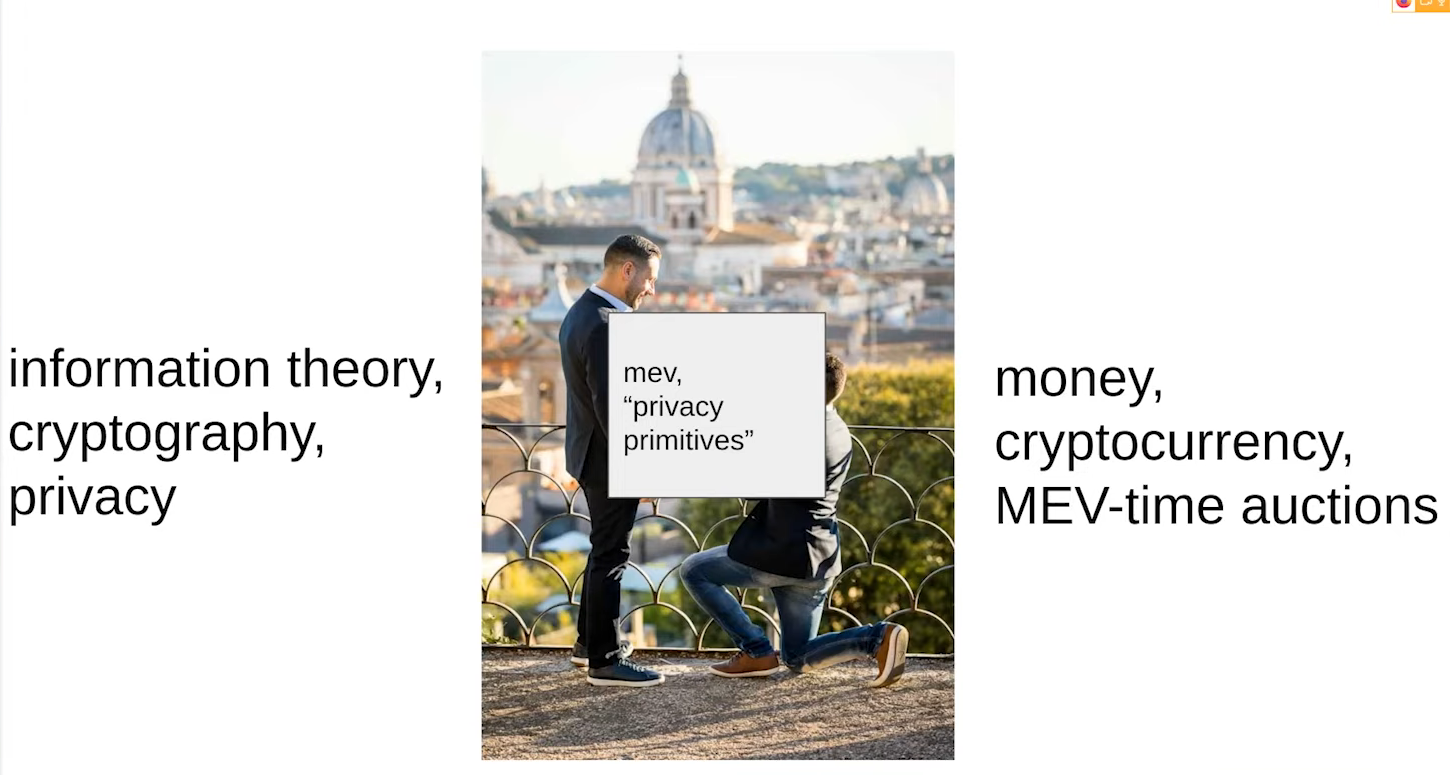

The key to unifying information and money lies in MEV and privacy primitives. Unifying them will allow us to use both information and money to create the best systems for users.

Lessons from the past

Mechanism design (4:30)

Mechanism design is a field of economics and game theory, started in the 1960s. It takes an objectives-first approach to designing economic mechanisms or incentives in strategic settings where players act rationally. From the start, it aimed to address decision-making when information is siloed among participants.

In mechanism design, the aggregation of information into a single output is crucial.

Voting is ducked (5:15)

A theorem from 1973 and 1975 (Gibbard-Satterthwaite) addresses allocation and decision-making through voting systems. It shows that every voting system must have at least one of three undesirable properties:

- Dictatorship: a single voter's input completely determines the outcome of the vote

- Limited outcomes: only two alternatives can ever be chosen

- Tactical voting: voters are better off voting according to a model of how others will vote (aka the "metagame") rather than according to their own preferences

In other words, voting is always subject to incentives, and votes can never be guaranteed to be truthful...
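The Python sketch below illustrates the third property, tactical voting, using the Borda count. The Borda count is a common ranked voting rule, chosen here purely for illustration, and the ballots are invented. By misreporting their ranking, voter 3 turns the outcome from A, which they like less, into B, which they like more.

```python
from collections import Counter


def borda_winner(ballots: list[list[str]]) -> str:
    """Borda count: with n candidates, 1st place earns n-1 points and last
    place 0. Ties go to the alphabetically first candidate (an assumption)."""
    scores = Counter()
    for ballot in ballots:
        n = len(ballot)
        for position, candidate in enumerate(ballot):
            scores[candidate] += n - 1 - position
    best = max(scores.values())
    return min(c for c, s in scores.items() if s == best)


sincere = [
    ["A", "B", "C", "D"],
    ["C", "A", "B", "D"],
    ["B", "A", "C", "D"],  # voter 3 truly prefers B, then A
]
# Voter 3 "buries" A at the bottom while keeping B as their top choice.
tactical = sincere[:2] + [["B", "D", "C", "A"]]

print(borda_winner(sincere))   # A (scores A=7, B=6, C=5, D=0)
print(borda_winner(tactical))  # B (scores B=6, A=5, C=5, D=2)
```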

Money as a primitive (7:15)

...But by adding money, richer mechanisms like auctions can be built, escaping these classic social choice results.

This result underpins foundational mechanism design work: money serves as a powerful primitive, enabling broader possibilities than pure voting or information elicitation.
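The canonical example of what money unlocks is the second-price (Vickrey) auction. In this auction, bidding your true value is a weakly dominant strategy, which is exactly the kind of truthfulness the voting results above rule out. The minimal Python sketch below uses made-up bidders and values, and it brute-forces alternative bids rather than proving the property.

```python
def second_price_auction(bids: dict[str, int]) -> tuple[str, int]:
    """Sealed-bid second-price (Vickrey) auction: the highest bidder wins but
    pays the second-highest bid. Needs at least two bidders; on a tie, the
    bidder listed first in `bids` wins (sorted() is stable)."""
    ranked = sorted(bids.items(), key=lambda item: item[1], reverse=True)
    winner, _ = ranked[0]
    _, price = ranked[1]
    return winner, price


def utility(value: int, bid: int, others: dict[str, int]) -> int:
    """Payoff for a bidder called "me" whose private value is `value`."""
    winner, price = second_price_auction({**others, "me": bid})
    return value - price if winner == "me" else 0


others = {"alice": 50, "bob": 60}
truthful = utility(70, 70, others)
print(truthful)  # 10: wins and pays bob's 60
# No alternative bid from 0 to 150 does better than bidding the true value.
print(all(utility(70, b, others) <= truthful for b in range(151)))  # True
```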

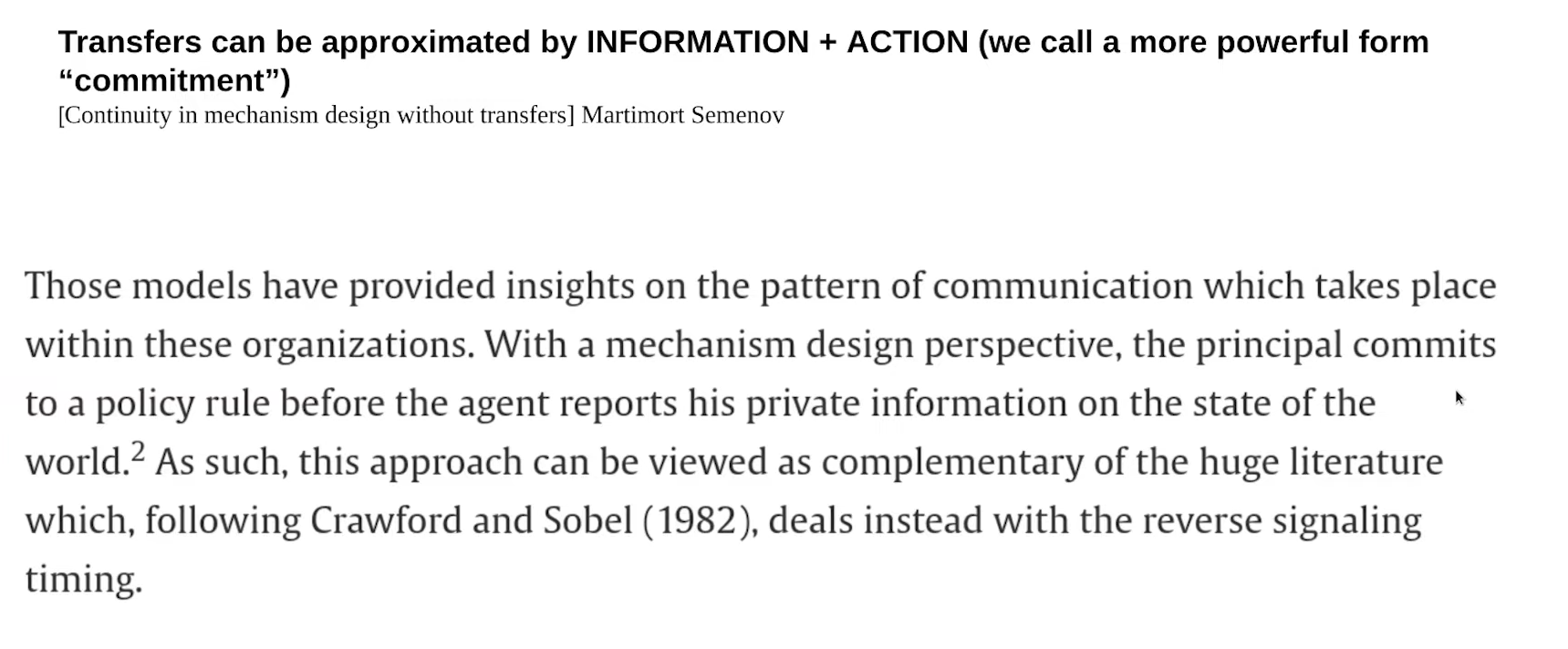

Alternatives to money (8:00)

In the 1980s and 1990s, we began to question money as a primitive. In many contexts, monetary transfers simply aren't available, but we still want to solve mechanism design problems.

So we started to explore alternative models that achieve results similar to money without relying solely on monetary transfers.

It turned out that even in the absence of actual monetary transfers, transfers can be approximated by "commitment" (information + action).

By having agents commit to a rule and providing private information that aligns with this commitment, many desirable properties can still be achieved without relying on money.

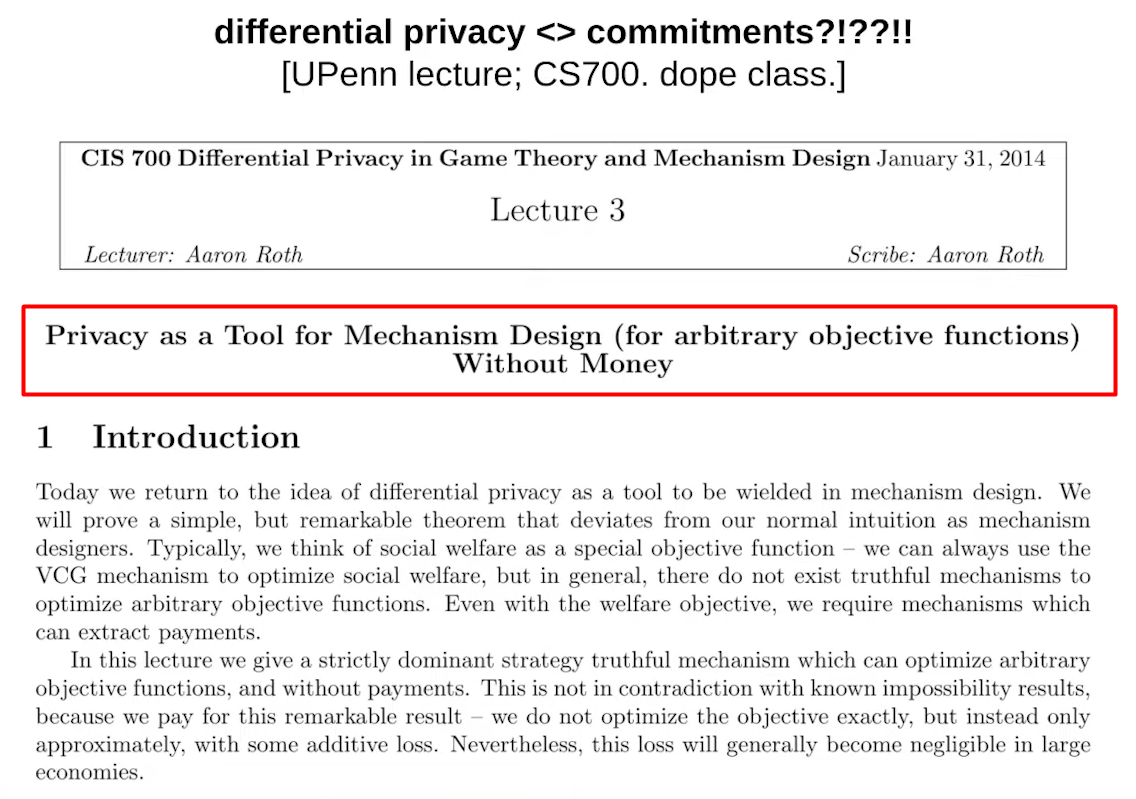

Differential Privacy in Mechanism Design (10:30)

By incorporating differentially correct outputs and differentially private inputs, it becomes possible to build a new class of mechanisms that were not feasible without differential privacy.
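For readers new to differential privacy, the standard building block is the Laplace mechanism: add noise calibrated to how much a single participant can change the output. The Python sketch below applies that textbook mechanism to a count. It is not the specific construction discussed in the talk, and the function names and parameters are illustrative.

```python
import math
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Draw from Laplace(0, scale) by inverse-CDF sampling."""
    u = rng.random() - 0.5  # uniform on [-0.5, 0.5)
    while u == -0.5:  # avoid log(0) at the single boundary value
        u = rng.random() - 0.5
    return -scale * math.copysign(1.0, u) * math.log(1 - 2 * abs(u))


def private_count(true_count: int, epsilon: float, rng: random.Random) -> float:
    """epsilon-differentially private release of a count. One participant can
    change a count by at most 1 (sensitivity 1), so the noise scale is 1/epsilon."""
    return true_count + laplace_noise(1.0 / epsilon, rng)


rng = random.Random(42)
print(private_count(100, epsilon=0.5, rng=rng))  # close to 100, but noisy
```

A smaller `epsilon` means more noise and a stronger privacy guarantee for each individual input.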

Information theory serves as a framework that connects various fields such as mechanism design, privacy, economics, and money.

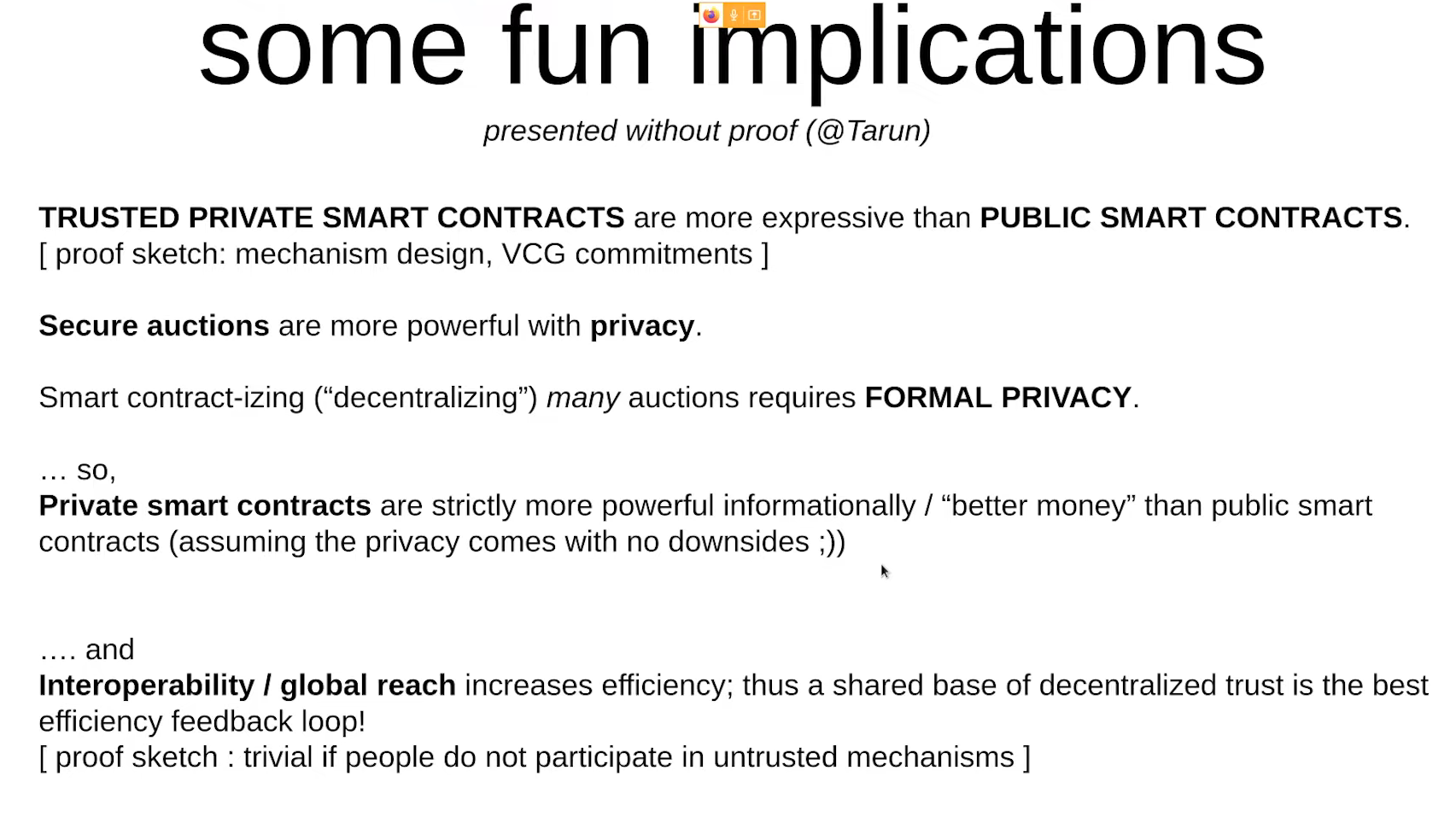

So, in a mechanism design sense, the "right" kind of commitment or privacy is indistinguishable from money!

A practical implication : trusted private smart contracts (12:00)

Advantages

- Trusted private smart contracts provide more options for approximating properties of money

- They enable the construction of more efficient auctions and mechanisms compared to those relying solely on public transfers.

Limitations

- It's very difficult to imagine building them securely without centralization

- The absence of trustless privacy leads to externalities

Solving privacy challenges

Crypto already has transfers. Transfers are trustless, but privacy is not, and many mechanisms require trust. This leaves us in a gray zone:

- What are the limits of decentralized trust?

- How can we formulate an abstraction boundary for trust?

- What do we gain by solving privacy?

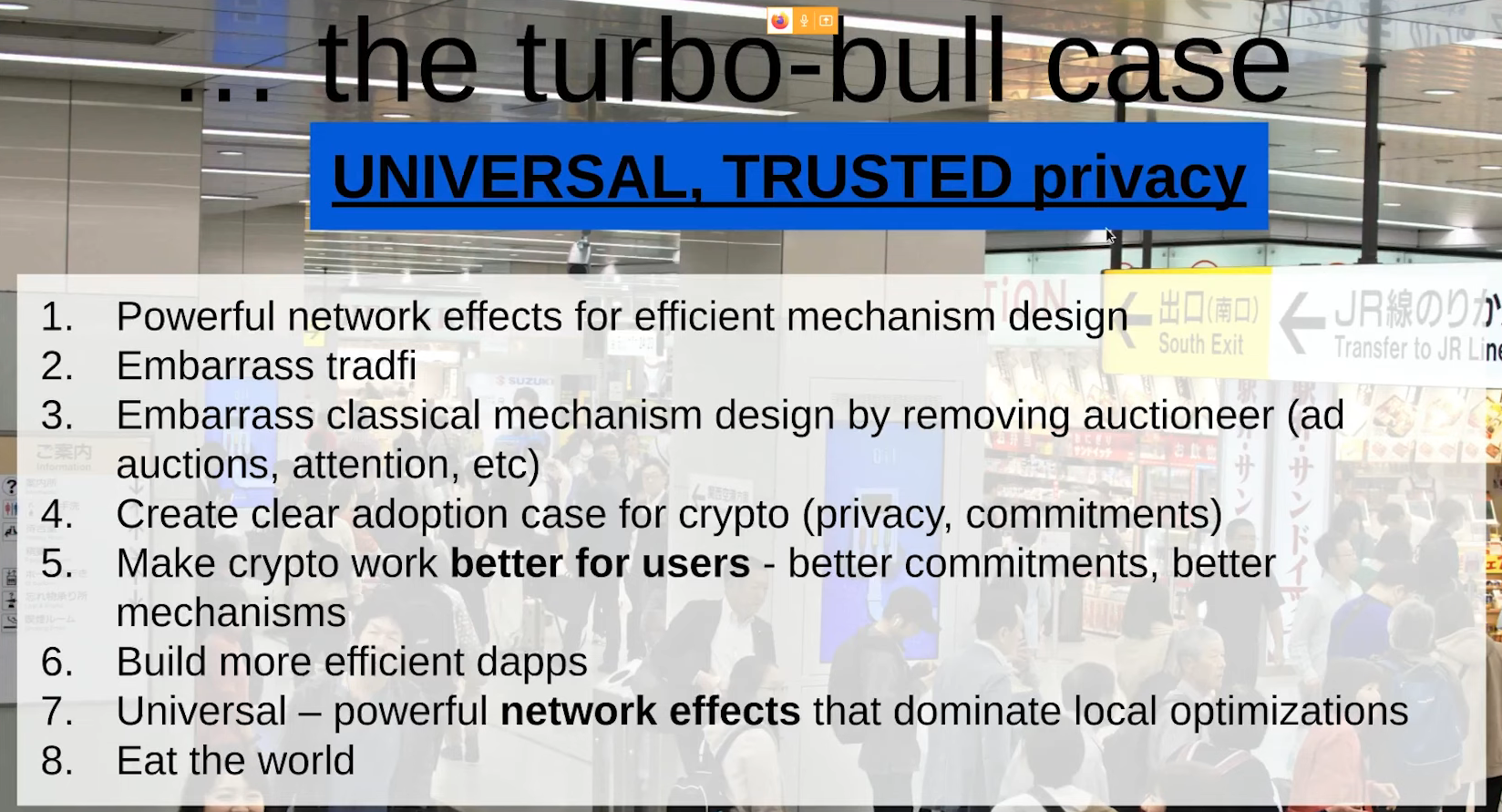

Bull case: we solve the challenges (15:00)

If a solution for universal trusted privacy is found, it would create a clear adoption case. Crypto would work better for users, and developers could build more efficient dapps. In the end, crypto eats the world, because TradFi could not compete with universal trusted privacy.

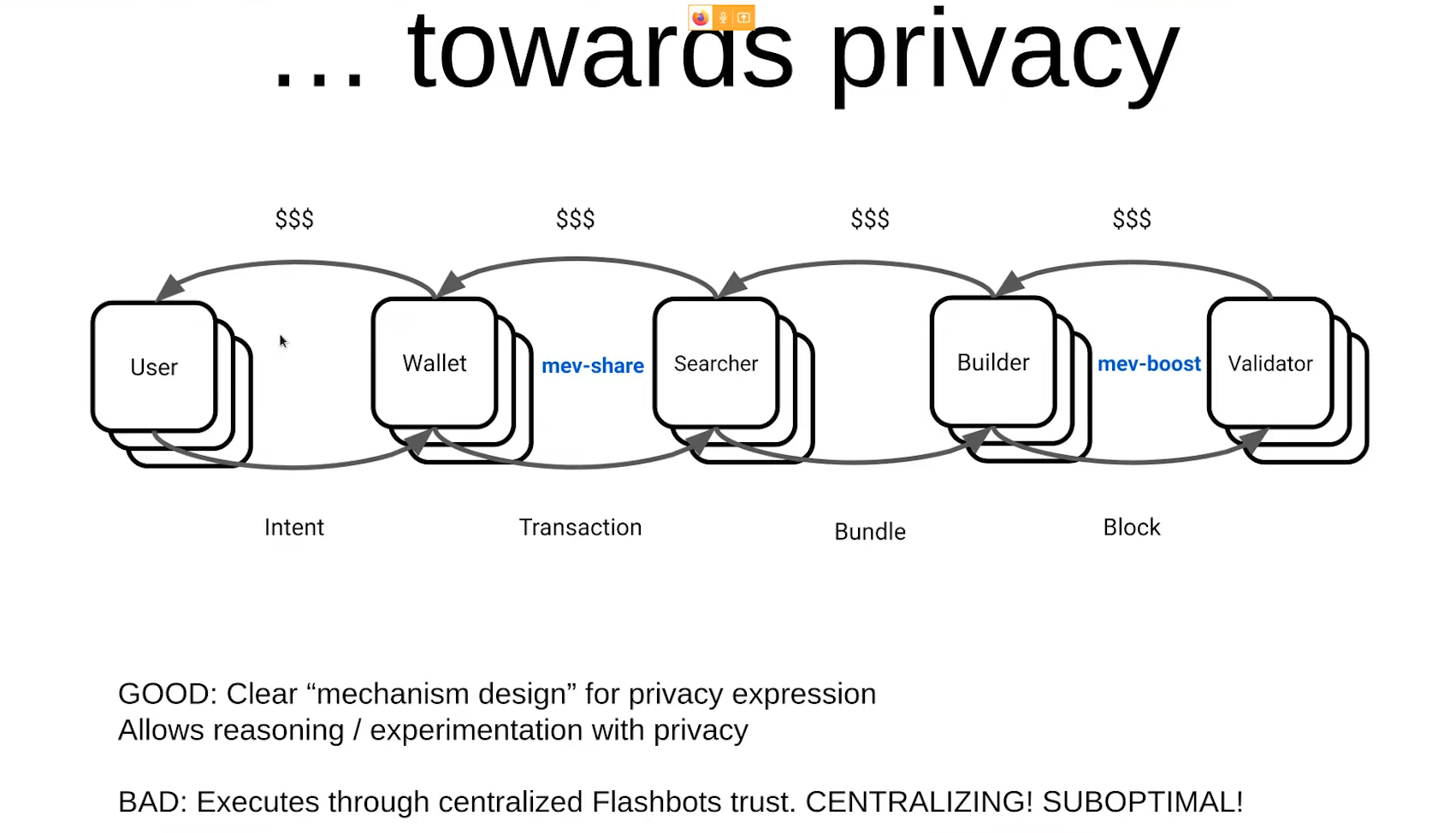

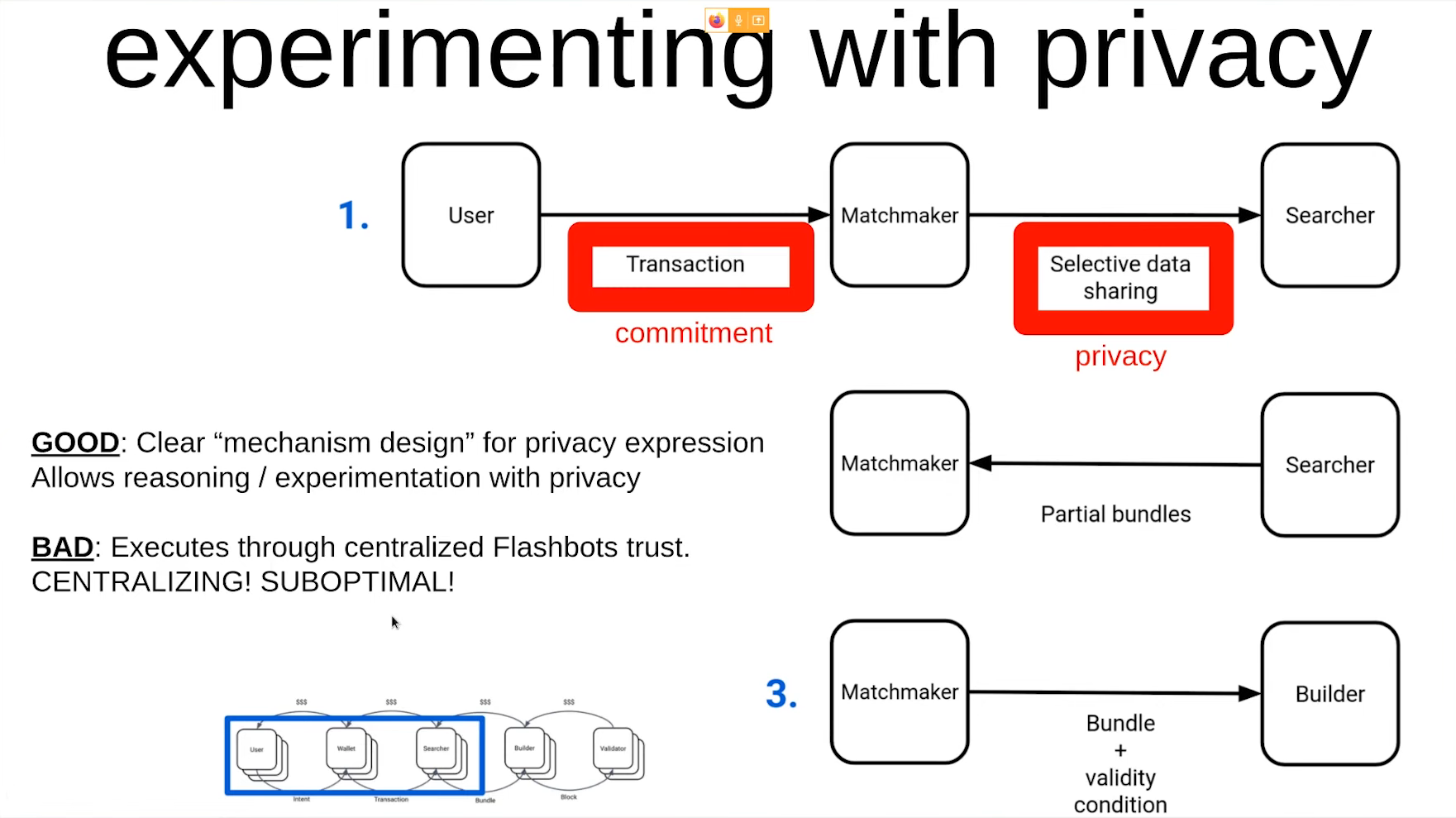

MEV supply chain (16:15)

Both mev-share and mev-boost rely on privacy to achieve efficiency, and this is possible thanks to abstraction boundaries.

However, current privacy solutions like those from Flashbots are centralized and suboptimal from both an information-theoretic and a decentralized systems perspective.

Project SUAVE (18:15)

SUAVE's goal is to achieve defense in depth on the boundary between ideal mechanism design and user-controlled privacy.

While mev-share and mev-boost serve their purpose, they are centralized and not ideal in terms of privacy. These centralized solutions need to be replaced with more decentralized alternatives, and SUAVE seeks to become one of them.

Q&A with Jon Charbonneau

What is the practical state of privacy primitives, based on where development stands today? (20:30)

The pressure for privacy is economically driven (and therefore very powerful). People want to build systems that can only be achieved with privacy, which is why mev-boost and mev-share exist.

Builder features and techniques like PBS and Danksharding are being explored to enable new classes of mechanisms through privacy and commitments.

But fundamentally, Phil doesn't think it's good enough:

- While centralized systems have been functioning adequately so far, they pose a significant centralizing pressure on our overall systems

- Adding technologies like SGX can restrict actors' actions but still rely on centralization (SGX relies on Intel)

- Committees or cryptoeconomics also have their downsides in achieving full decentralization.

Phil emphasizes the need for a combination of techniques that maximize decentralization to counteract economic pressures.

Let's say that Ethereum doesn't actually end up implementing PBS (or other features). How do we decentralize that role in a better way? (23:00)

It may not be critical for the Ethereum Foundation (EF) to push hard on implementing certain features if the community is aligned enough towards decentralization.

While EF may not be solely responsible for achieving decentralization, they should have decentralized options as a "nuclear option" if needed.

Phil suggests exploring other ways to decentralize, such as building decentralized alternatives and sharing primitives for users.

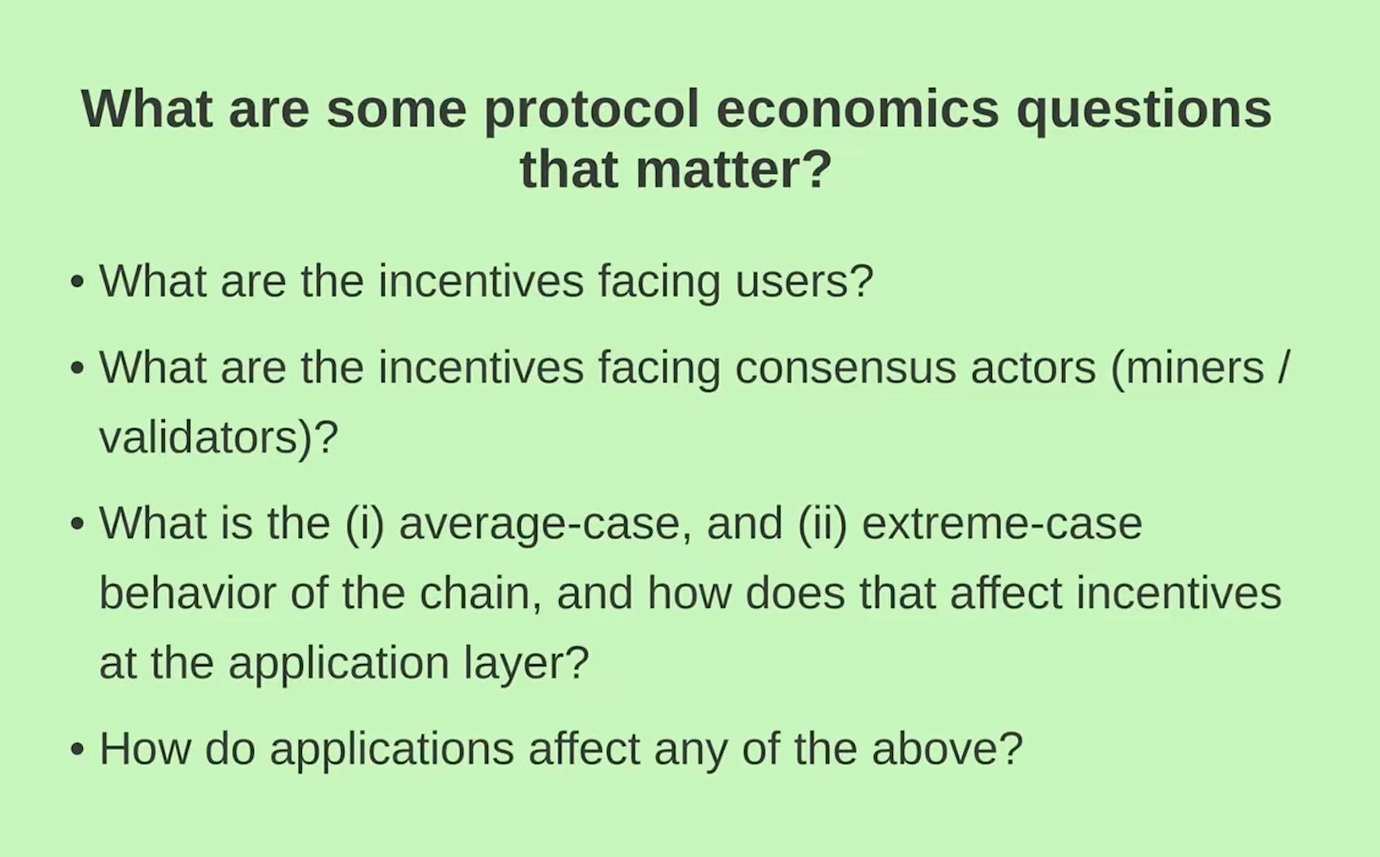

Video Overview

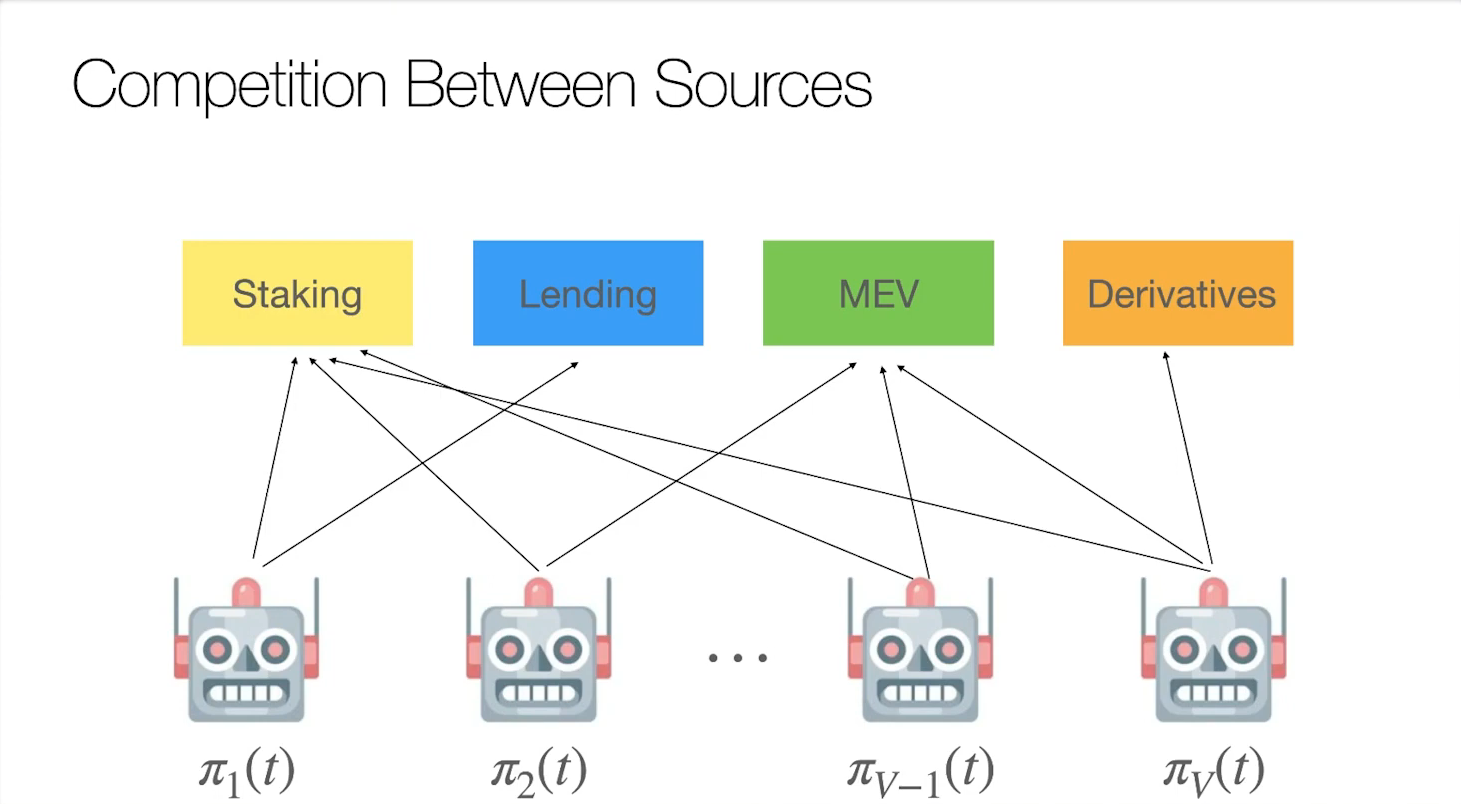

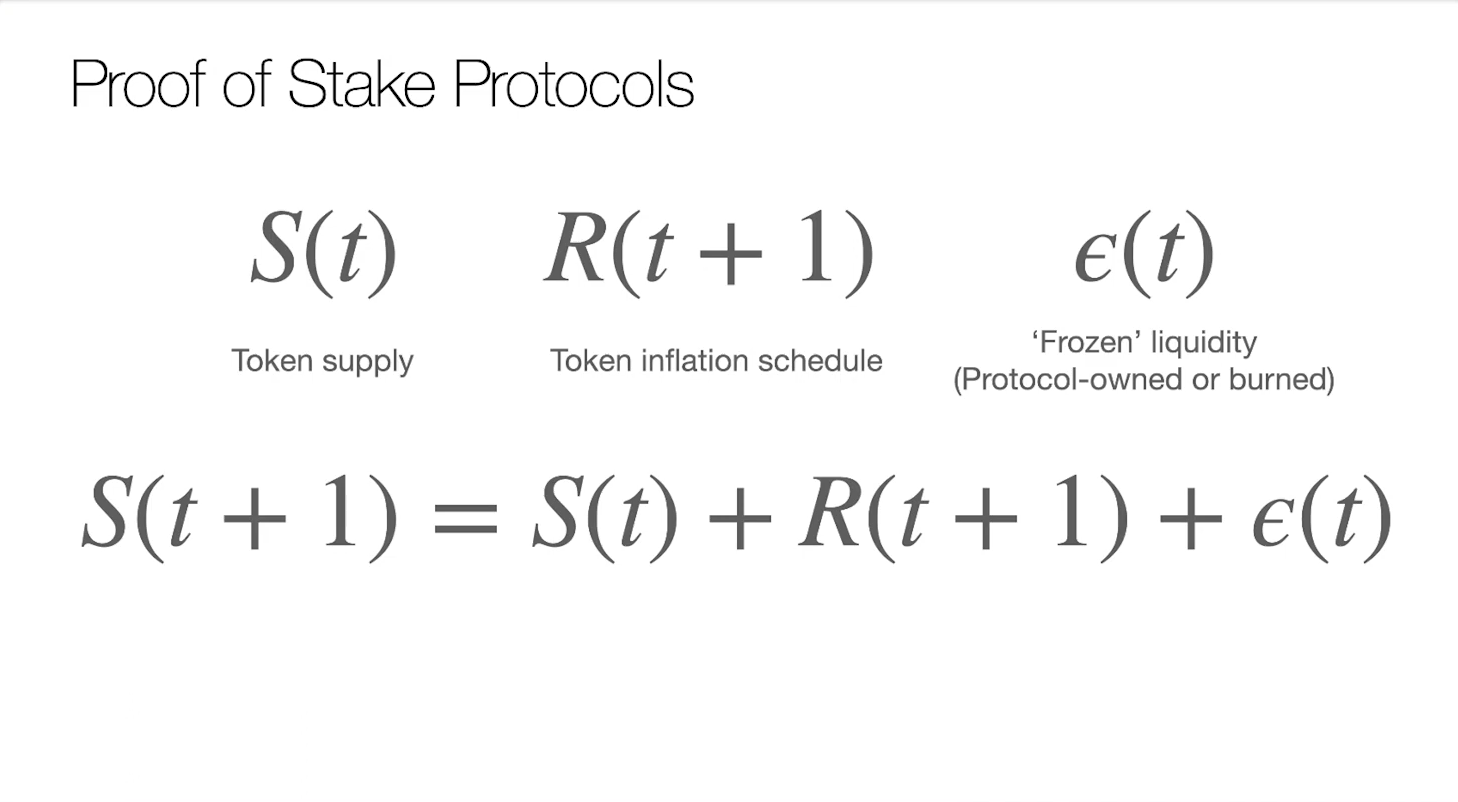

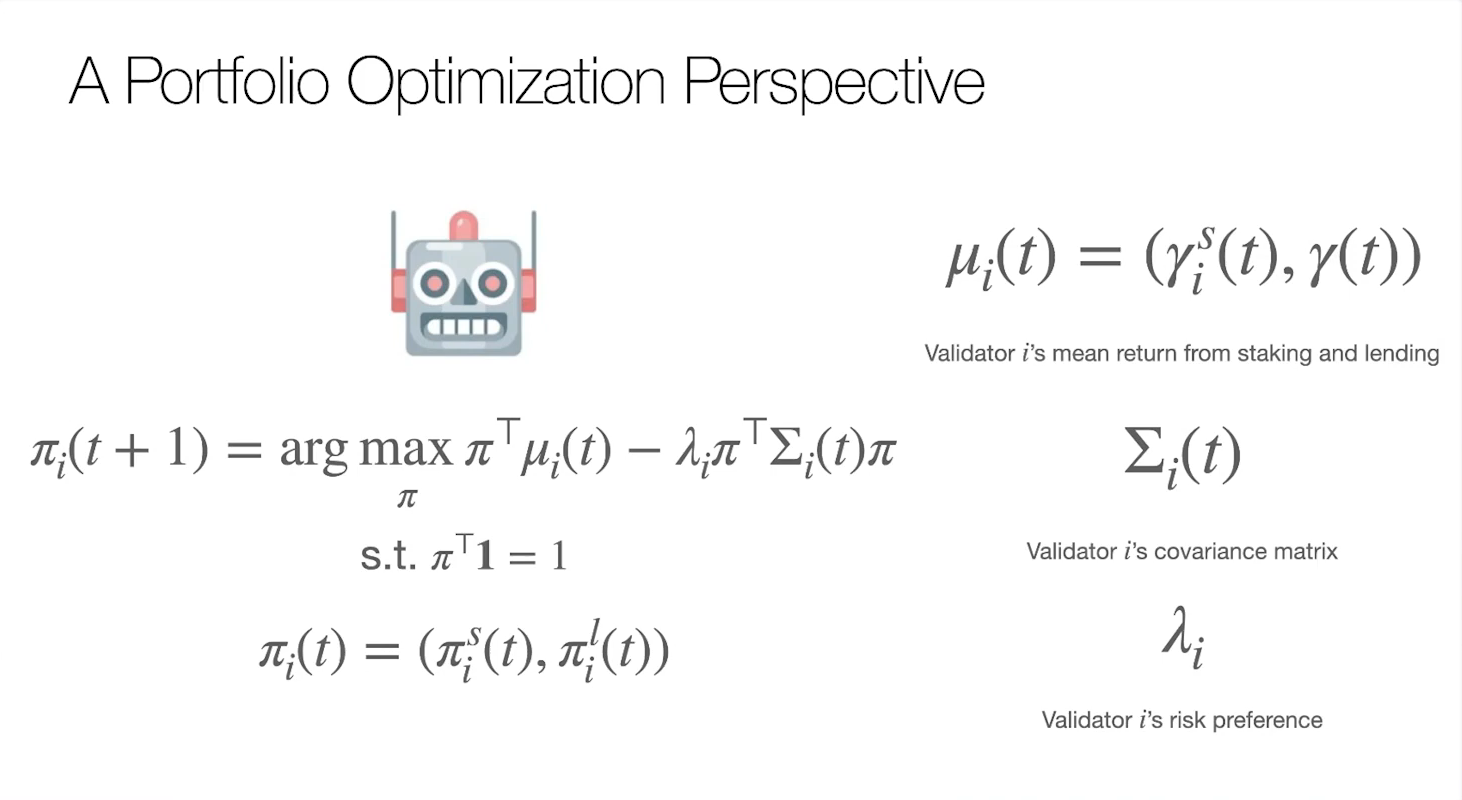

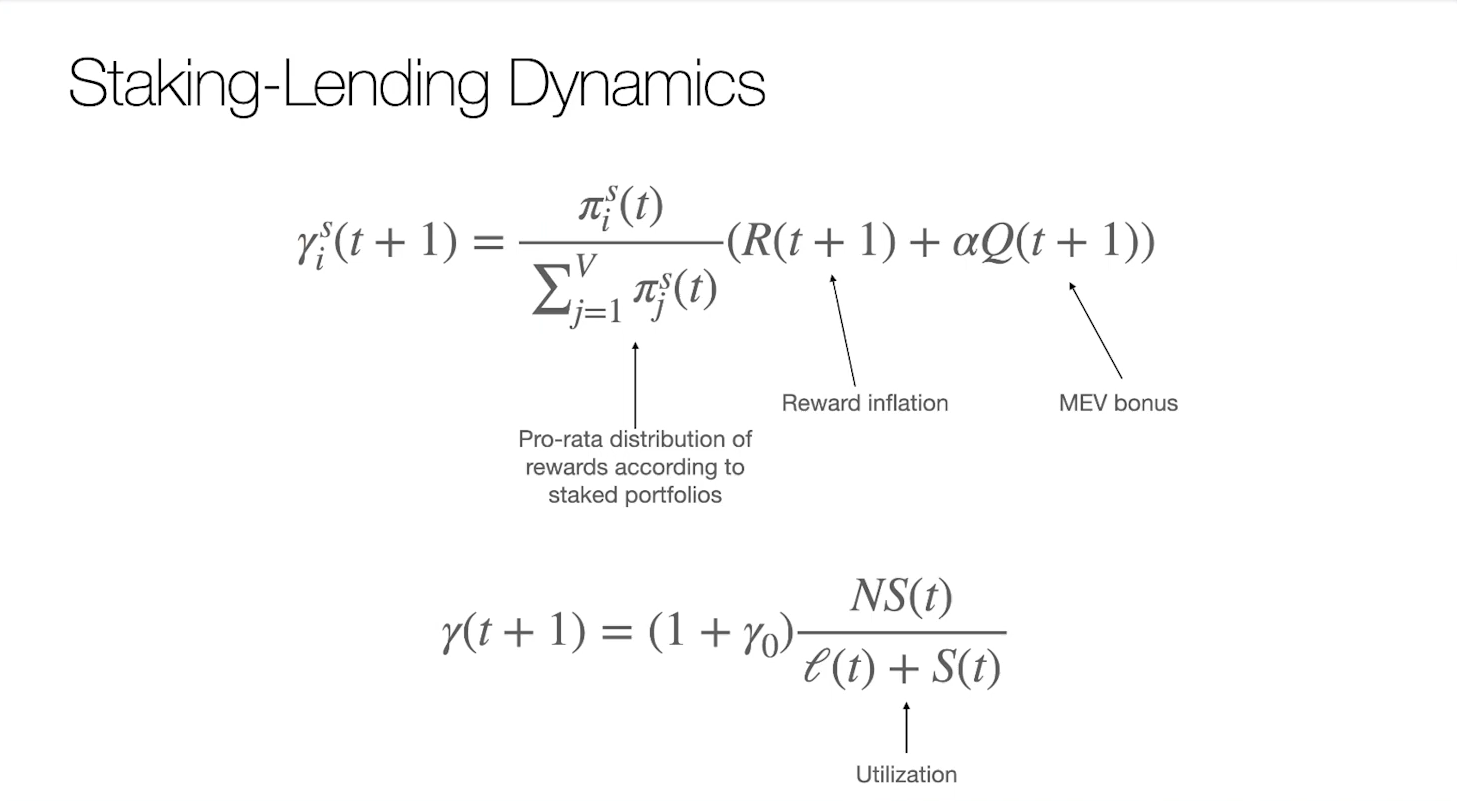

- What protocol economics look like in general

- Changes that happened to both Ethereum protocol and application

- Some of the issues in the future

Protocol economics

Political economic questions (1:00)

For example, regarding the behavior of the chain: if the chain is suddenly attacked, does the application run into problems, and does that affect any of the incentives?

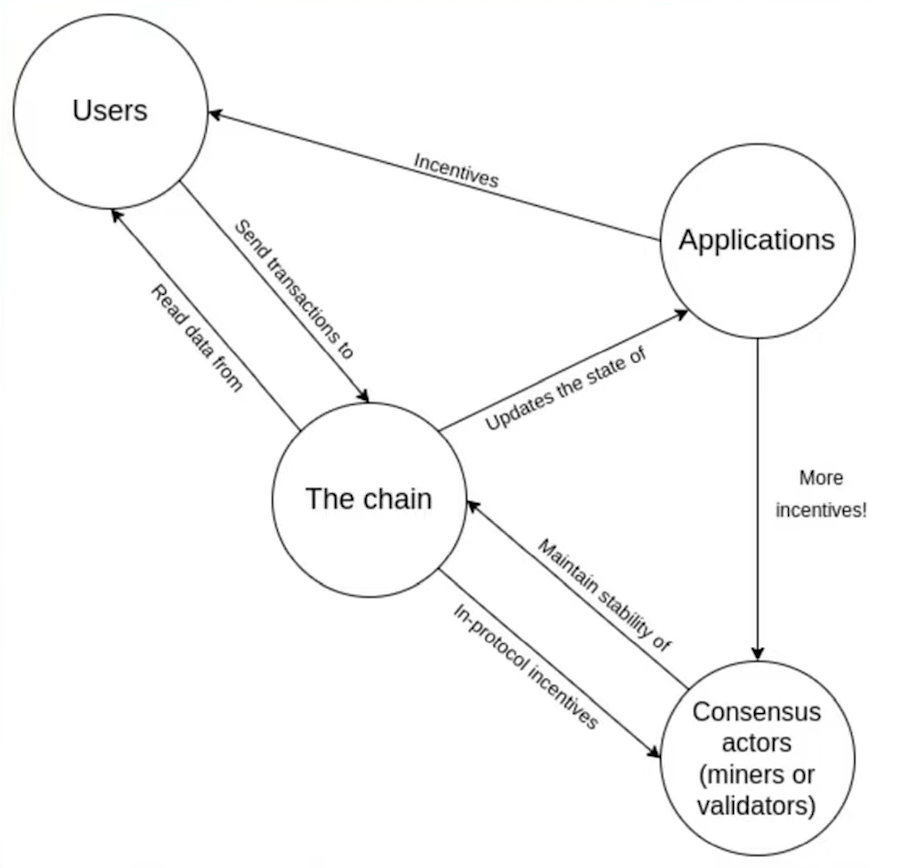

How the chain works

- Users send transactions to the chain and read data from the chain

- Applications are the reason why users send transactions, and they give incentives to users

- Consensus actors maintain chain stability. Mining rewards, validation rewards, penalties, and other in-protocol incentives keep them in their roles

Ultimately, MEV is about extra-protocol incentives that arise mostly by accident, and about how they end up affecting the incentives to run the chain.

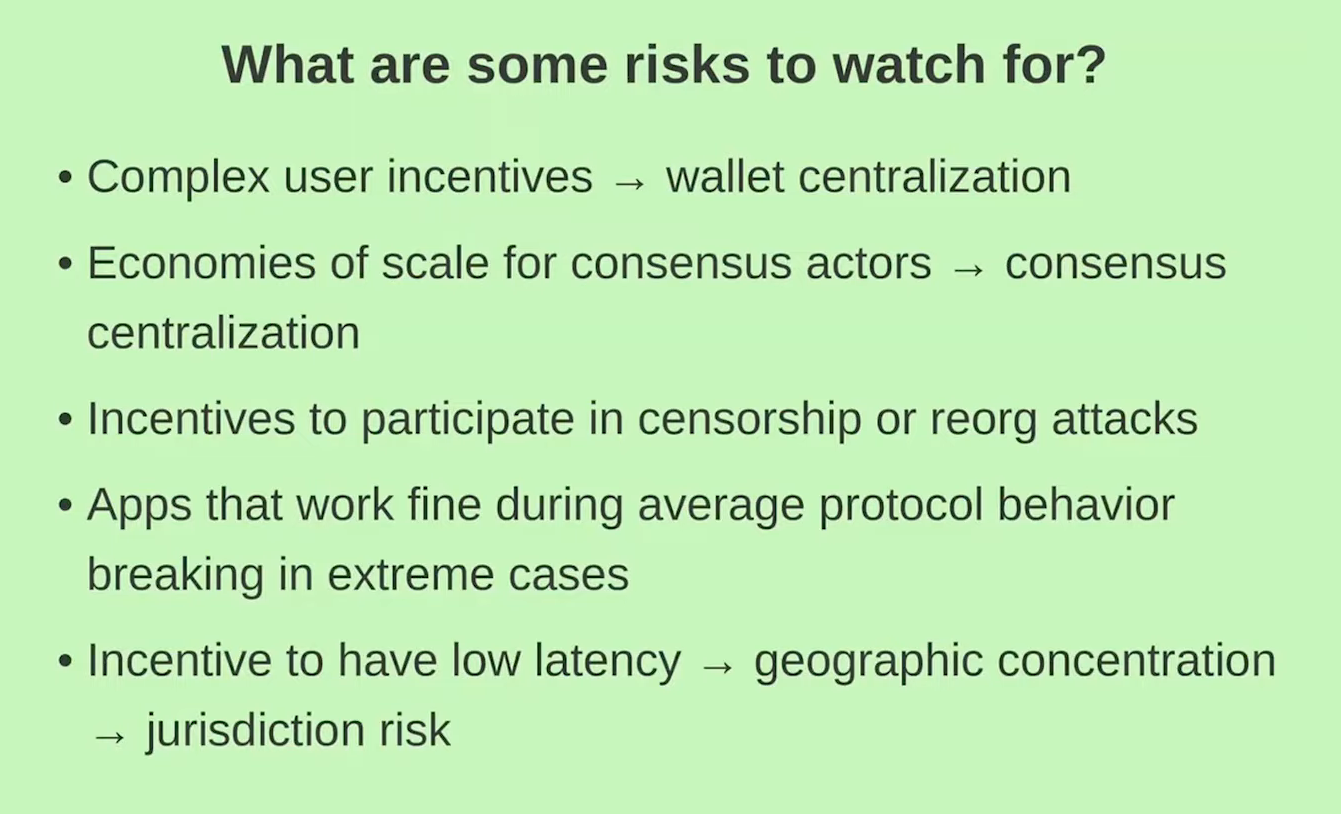

The risks to watch for (3:00)

The main risk concerns validator incentives. We must ensure that validators act correctly rather than deviating or attempting attacks on the chain. A particular concern is the battle between user incentives (transaction fees) and potential bribes to censor the chain.

Aside from that, economies of scale can lead to centralization. If consensus actors face complex, constantly shifting strategies, especially ones that require proprietary information, large pools end up dominating.

Incentives for low latency imply geographic concentration and reliance on cloud computing. Both contribute to jurisdictional risks, which affect the credible neutrality of a blockchain, as we've seen over the past year with OFAC-compliant blocks.

Changes that happened

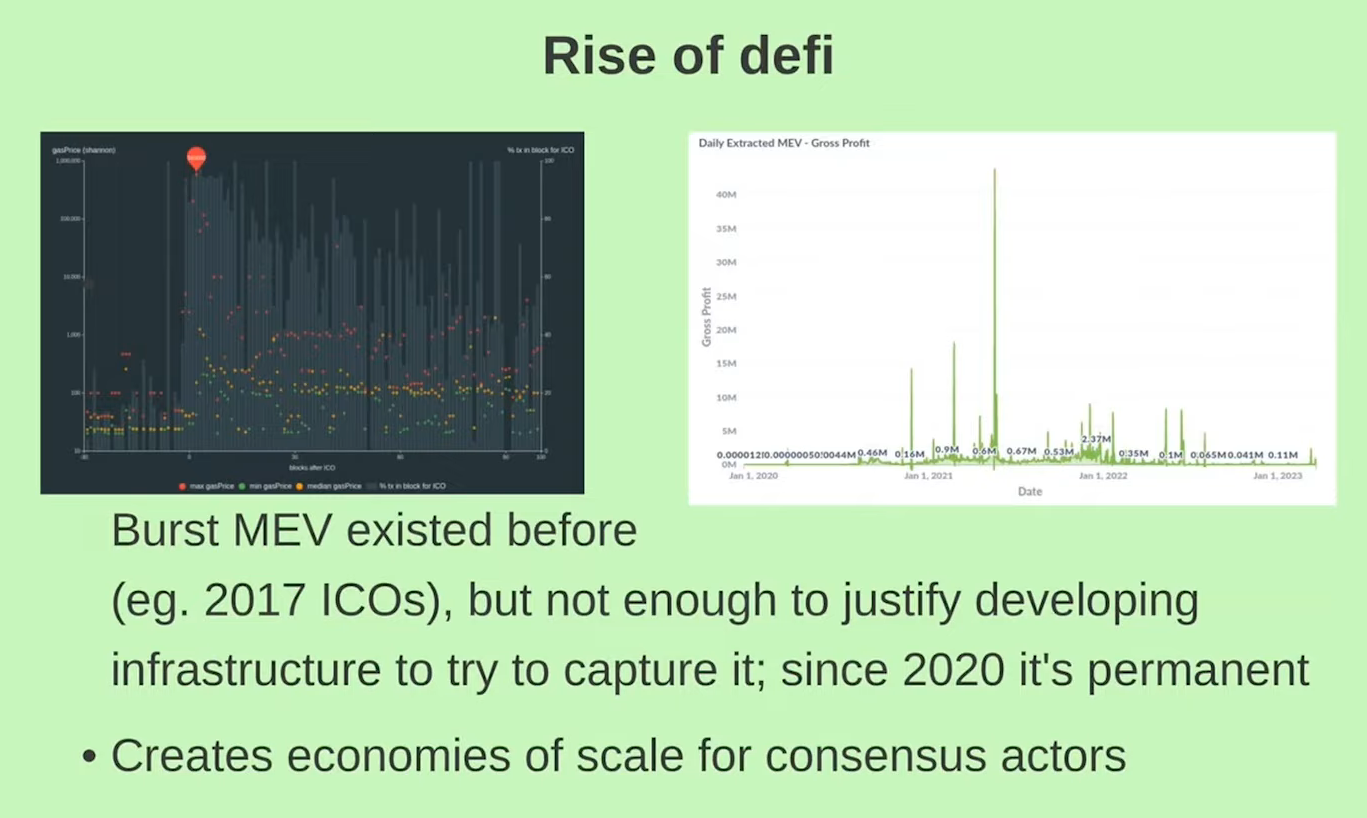

Rise of DeFi (6:45)

Opportunities for miners to profit from manipulating blocks have existed since 2017 and the famous ICOs, but there wasn't enough value to justify the infrastructure costs of capturing them.

Since DeFi Summer in 2020, the infrastructure costs of capturing MEV have been justified, as there are MEV opportunities in every block and bigger spikes than in 2017.

This created the need for mechanisms like Proposer-Builder Separation (PBS), which try to split off the roles driven by economies of scale so that the remaining roles would not rely so heavily on them.

Historical changes (8:45)

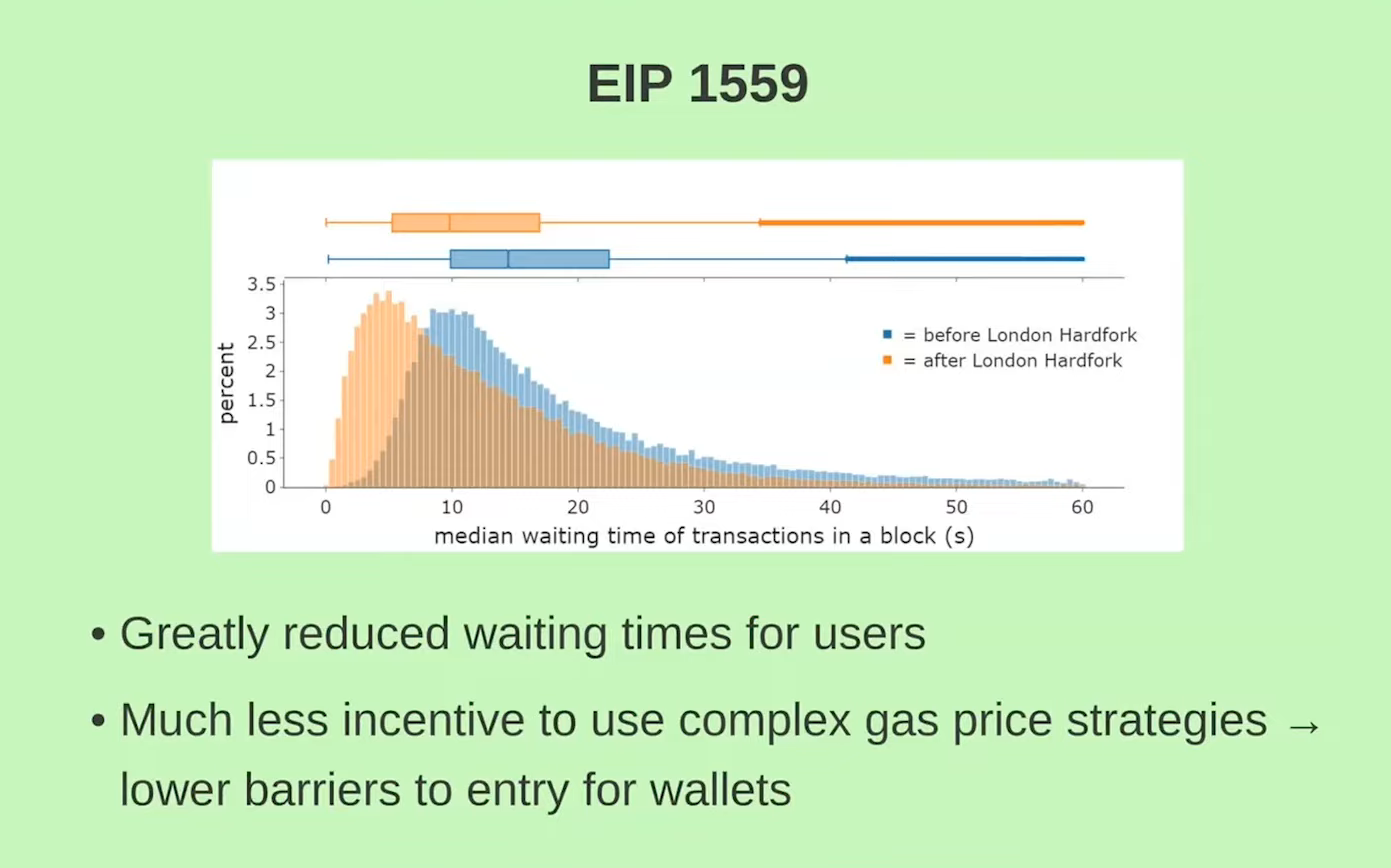

EIP-1559 has brought significant improvements to the user experience. As for the Merge, it's not yet clear whether it has been beneficial or detrimental to Ethereum, as it brought so many changes.

The long-term effects of the merge are still uncertain but will likely have a lasting impact on Ethereum

Upcoming changes

Withdrawals (11:30)

Withdrawals will be enabled in the next hard fork, which could reduce the total amount staked. On the other hand, people will become more confident staking, since they can get their money back.

Vitalik thinks the main practical consequence of this is going to be a change to the total amount of staking and possibly also a change to the composition of stakers

Single Secret Leader Elections (12:30)

Single secret leader elections ensure that the proposer's identity is known only to the proposer before the block is produced, reducing the risk of denial-of-service attacks against proposers.

Proposers can voluntarily reveal their identity and prove that they will be the proposer.

Research question: are there unintended effects? In particular, in combination with PBS, would proposers have incentives to pre-reveal their identity to anyone?

Single slot finality (13:15)

Single slot finality means blocks are finalized in the slot in which they are proposed, instead of after two epochs (64 slots).
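The gap is easy to quantify with Ethereum's parameters: 12-second slots and 32 slots per epoch. The small Python calculation below ignores where in an epoch a block lands, which in practice shifts time-to-finality somewhat.

```python
SECONDS_PER_SLOT = 12
SLOTS_PER_EPOCH = 32


def finality_delay_seconds(epochs: int) -> int:
    """Approximate time for `epochs` full epochs to elapse."""
    return epochs * SLOTS_PER_EPOCH * SECONDS_PER_SLOT


current = finality_delay_seconds(2)  # about two epochs today
single_slot = SECONDS_PER_SLOT        # one slot under single slot finality
print(current, current / 60)          # 768 12.8
print(current // single_slot)         # 64: finality would be ~64x faster
```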

It may increase reorg security and make Ethereum more bridge-friendly, except in special cases like an inactivity leak.

But as we'll see with layer 2 pre-confirmations, single slot finality will not be de facto single-slot for a lot of users, and Vitalik thinks that's going to be an interesting nuance.

Layer 2 and protocol economics

The impact on layer 1 economics depends on how layer 2 solutions are implemented:

- Sequencing: with "based rollups", layer 1 does the sequencing for them (more info in Justin Drake's post), while "L2-controlled" means the layer 2 has its own sequencer

- Share of layer 1 DeFi moving to layer 2: no impact if none of it moves, significant impact if all of it does

- Layer 2 pre-confirmations, protocol economics, and interaction effects are also important considerations.

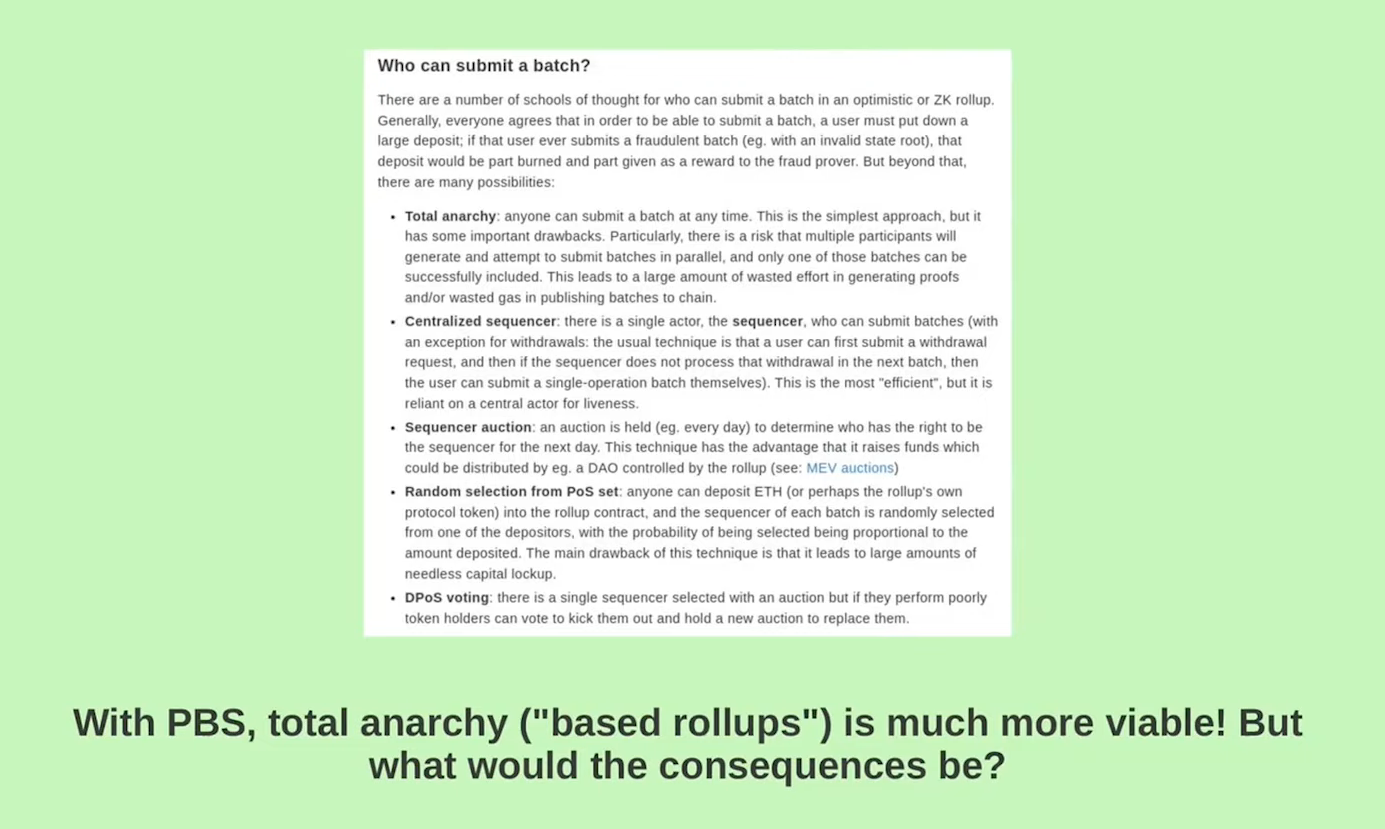

Who can submit a batch ? (15:15)

Rollups commit batches of transactions to layer 1, and there are different approaches to deciding who sequences and submits those batches.

For example, the "total anarchy" approach allows anyone to submit a batch at any time. PBS reduces its costs and makes total anarchy viable, but it still has important drawbacks, such as wasted gas.

Other approaches include centralized sequencers, DPoS voting, and random selection. So there are a lot of options that are not based... The question is: do rollups want to be based or not?

Could Layer 2s absorb "complex" Layer 1 MEV? (17:00)

No, if:

- Rollups are based. The PBS system just does sequencing for Layer 2, so Layer 2 MEV becomes Layer 1 MEV.

- A large part of L1 DeFi stays on L1

- Rapid L1 transaction inclusion helps L2 arbitrage in other ways.

Otherwise...Maybe ?

Fraud and censorship (17:45)

If you can censor a chain for a week AND it doesn't socially reorg you, then you can steal from optimistic rollups (Optimism 👀)

That said, much shorter-term censorship attacks on DeFi also exist: an attacker could mount a huge MEV attack on all kinds of DeFi projects. We are talking about layer 2s, but this is a problem on layer 1 too.

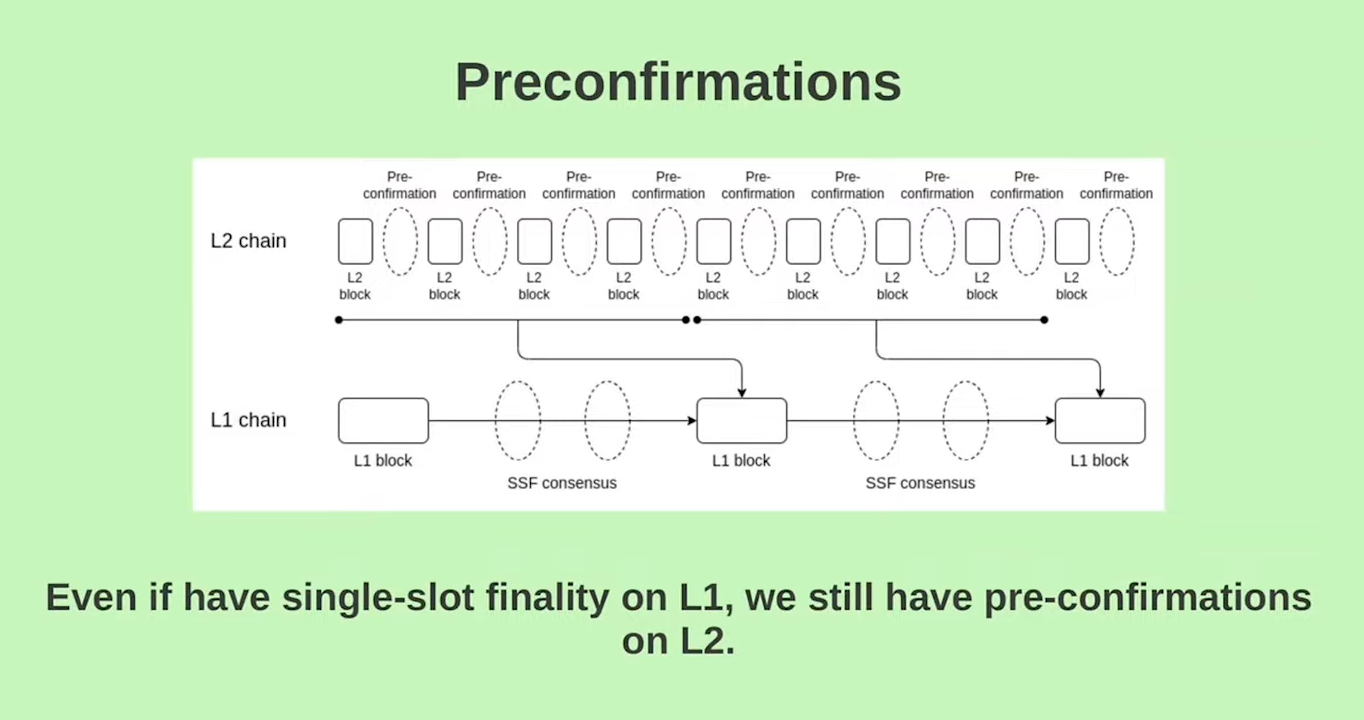

Preconfirmations (18:30)

Users generally want confirmations faster than the standard 12 seconds, and many rollup protocols are considering pre-confirmations to address this.

The idea is to have an off-chain pre-confirmation protocol that confirms layer 2 blocks before they are submitted to layer 1. A compact proof of the pre-confirmation is then verified in a layer 1 block.

With this approach, users experience a three-step confirmation process:

- Acceptance of transaction

- Pre-confirmation of layer 2 block

- Final confirmation on layer 1
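From a wallet's point of view, these three steps amount to a small state machine. The minimal Python sketch below uses hypothetical status names. Real rollups may expose more or fewer states, and they also have to handle failures such as a rejected batch.

```python
from enum import Enum


class TxStatus(Enum):
    PENDING = "pending"
    ACCEPTED = "accepted"          # step 1: the transaction is accepted
    PRECONFIRMED = "preconfirmed"  # step 2: its layer 2 block is pre-confirmed
    L1_CONFIRMED = "l1_confirmed"  # step 3: final confirmation on layer 1


# Each status may only advance to the next one in this simplified model.
NEXT_STATUS = {
    TxStatus.PENDING: TxStatus.ACCEPTED,
    TxStatus.ACCEPTED: TxStatus.PRECONFIRMED,
    TxStatus.PRECONFIRMED: TxStatus.L1_CONFIRMED,
}


def advance(status: TxStatus) -> TxStatus:
    if status not in NEXT_STATUS:
        raise ValueError(f"{status.name} is already final")
    return NEXT_STATUS[status]


status = TxStatus.PENDING
for _ in range(3):
    status = advance(status)
    print(status.name)  # ACCEPTED, then PRECONFIRMED, then L1_CONFIRMED
```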

Single-slot finality at layer 1 becomes more feasible when most users rely on pre-confirmations in layer 2. However, there will always be a distinction between the two confirmation speeds.

Free confirmation (19:45)

Having two tiers of confirmation is likely inevitable, given users' expectations about how quickly their transactions are confirmed.

Pre-confirmations in layer 2 are incompatible with based rollups, because the submission protocol must respect the pre-confirmation mechanism. If a set of layer 2 blocks does not adhere to pre-confirmations, it will be rejected.

Conclusion (21:00)

Decentralizing Sequencers...

...Wait, it's all PBS, always has been

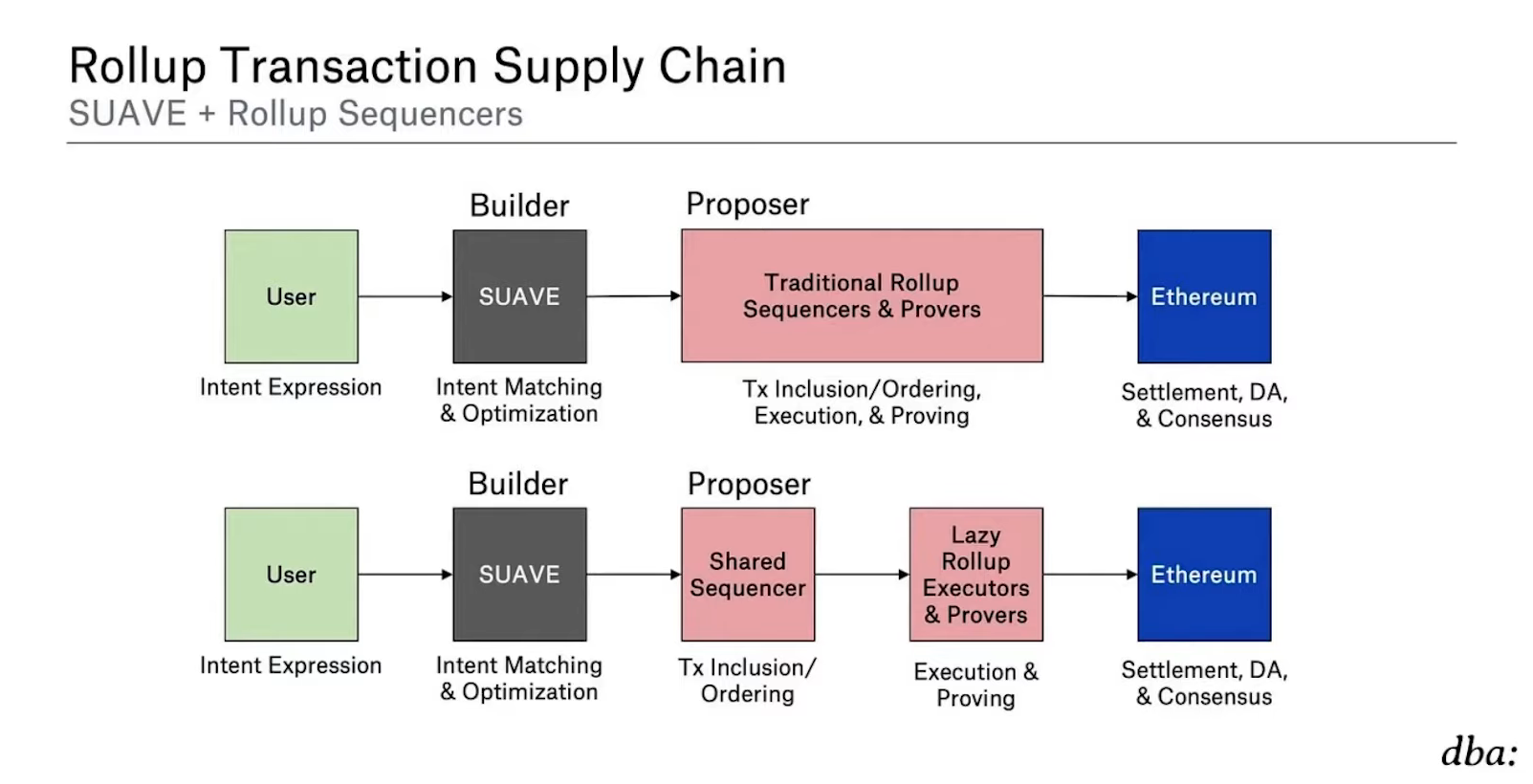

Every rollup has a decentralization roadmap. However, given the way sequencing works on layer 2, a single role combines the equivalent of a layer 1 proposer and a layer 1 block builder.

This creates a variety of problems unless we address it by separating these two roles on layer 2, just like we did on layer 1 with Proposer-Builder Separation.

Even with PBS on L2, we face challenges like privacy, Cross-domain MEV and Latency that require decentralizing the builder role.

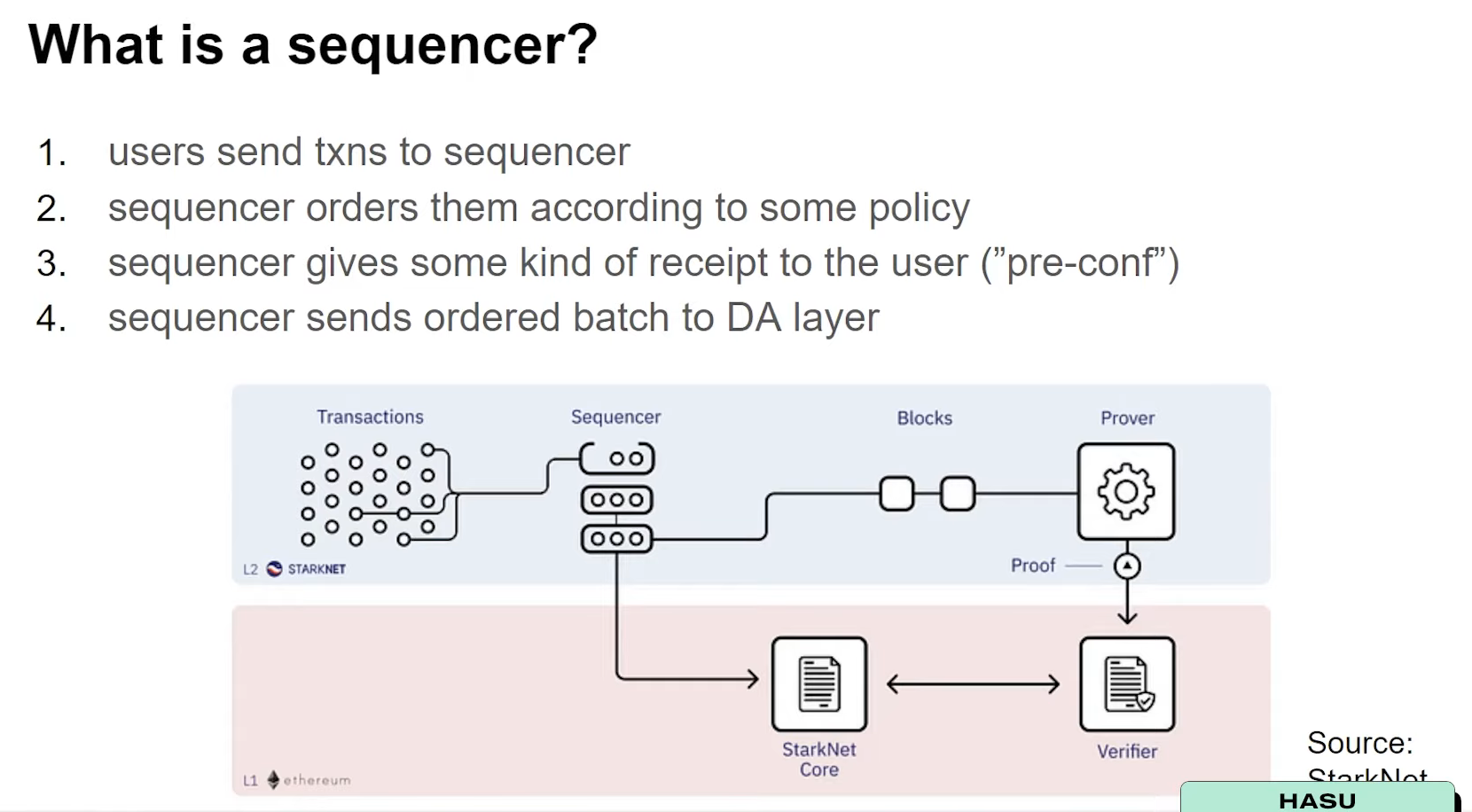

Decentralizing rollup sequencers

What is a sequencer? (1:30)

A sequencer basically performs 4 steps, which are pretty much the same in every layer 2:

Depending on the layer 2, there might be some small differences. For example, in Starknet the sequencer is also the prover, so it has even more responsibility, but these extra roles can be stripped out over time.

Why is decentralizing L2 sequencers important? (2:30)

We haven't even started to imagine the end state of Ethereum scaling. Rollup providers aren't just focused on building their own rollups anymore: they have all pivoted to some kind of Rollups-as-a-Service.

Therefore, Hasu thinks we may see a future where spinning up a blockchain looks like deploying a smart contract today, with numerous rollups functioning as independent blockchains. The problem is that all of these sequencers are centralized.

How to decentralize? (4:15)

- The first proposal is "based rollups" (sequenced by L1 or another chain), as Vitalik mentioned earlier

- Shared sequencing, where one blockchain sequences multiple others (Espresso style)

- Sequencer/block auction, where the right to propose the next block or next series of blocks is auctioned off every now and then (Optimism style)

- Random selection from the Proof-of-Stake set, where you basically have a consensus mechanism on top of your rollup and a proposer is selected randomly (Stargate style)

- Various committee-based solutions, the most common being "first-come, first-served"

Sequencer = L1 Proposer + L1 Builder

If we look more closely, we can see that the sequencer has at least 2 components:

- Leader election mechanism (Proposing)

- Ordering mechanism (building)
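The split can be made explicit in code. The Python sketch below separates a leader election step from a pluggable ordering step. Leader election uses stake-weighted random selection, one of the options listed above. All names are hypothetical, and the two ordering policies are placeholders for whatever a real builder market would do.

```python
import random
from typing import Callable

Tx = tuple[str, int]  # (transaction id, fee offered)


def elect_leader(stakes: dict[str, int], rng: random.Random) -> str:
    """Leader election (the 'proposer' half): stake-weighted random pick."""
    names = sorted(stakes)
    return rng.choices(names, weights=[stakes[n] for n in names], k=1)[0]


def order_by_fee(txs: list[Tx]) -> list[str]:
    """One ordering policy (the 'builder' half): highest fee first."""
    return [tx_id for tx_id, _ in sorted(txs, key=lambda tx: tx[1], reverse=True)]


def first_come_first_served(txs: list[Tx]) -> list[str]:
    """An alternative ordering policy: keep arrival order."""
    return [tx_id for tx_id, _ in txs]


def sequence(stakes: dict[str, int], txs: list[Tx], rng: random.Random,
             order: Callable[[list[Tx]], list[str]] = order_by_fee) -> tuple[str, list[str]]:
    # The two components are independent: swapping `order` leaves leader election untouched.
    return elect_leader(stakes, rng), order(txs)


txs = [("t1", 5), ("t2", 9), ("t3", 1)]
stakes = {"alice": 60, "bob": 30, "carol": 10}
print(sequence(stakes, txs, random.Random(7)))
print(sequence(stakes, txs, random.Random(7), order=first_come_first_served))
```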

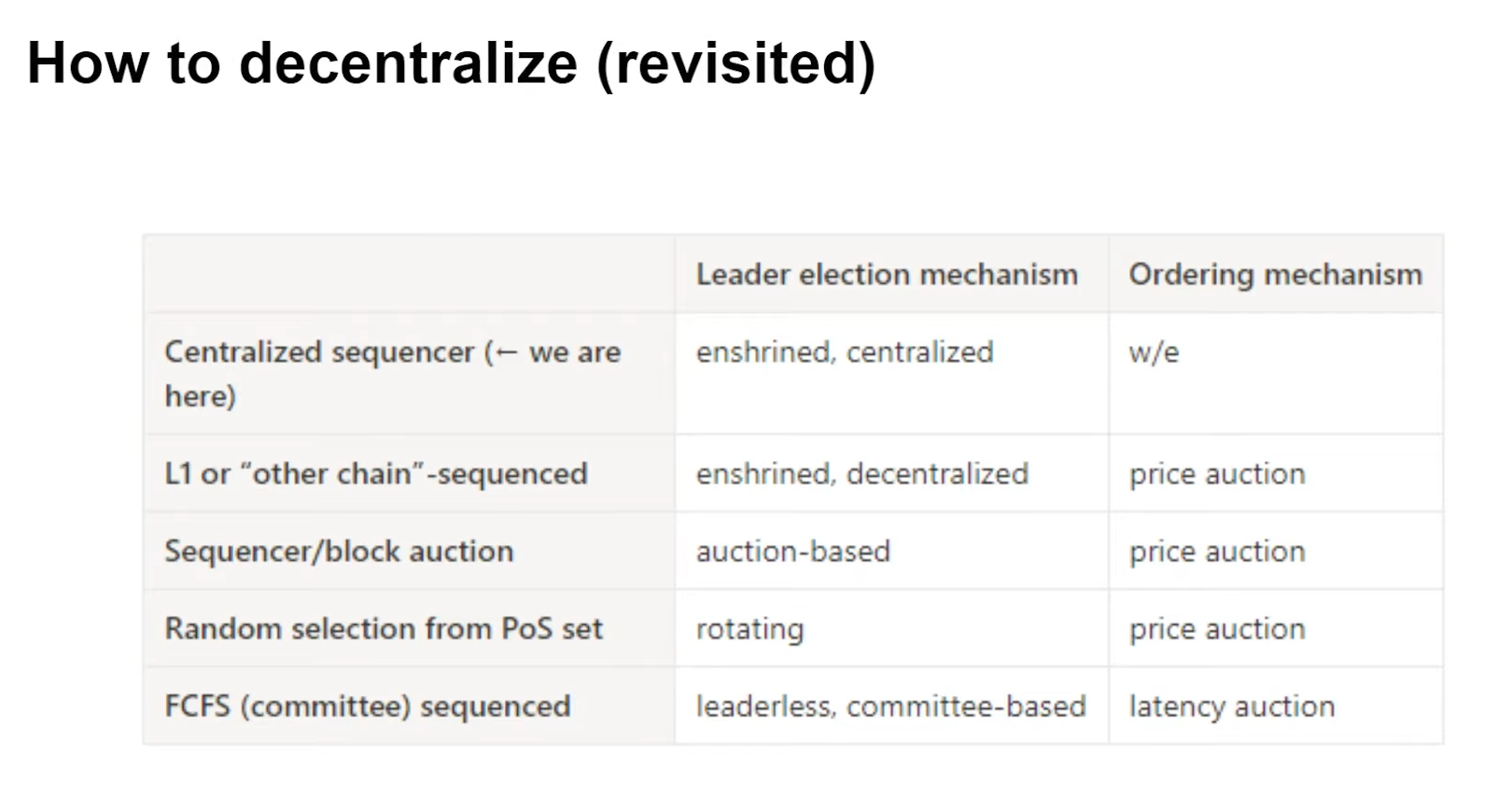

How to decentralize revisited (6:00)

The proposals put forward have different leader election mechanisms and different ordering mechanisms. But current proposals focus mainly on leader election rather than on ordering.

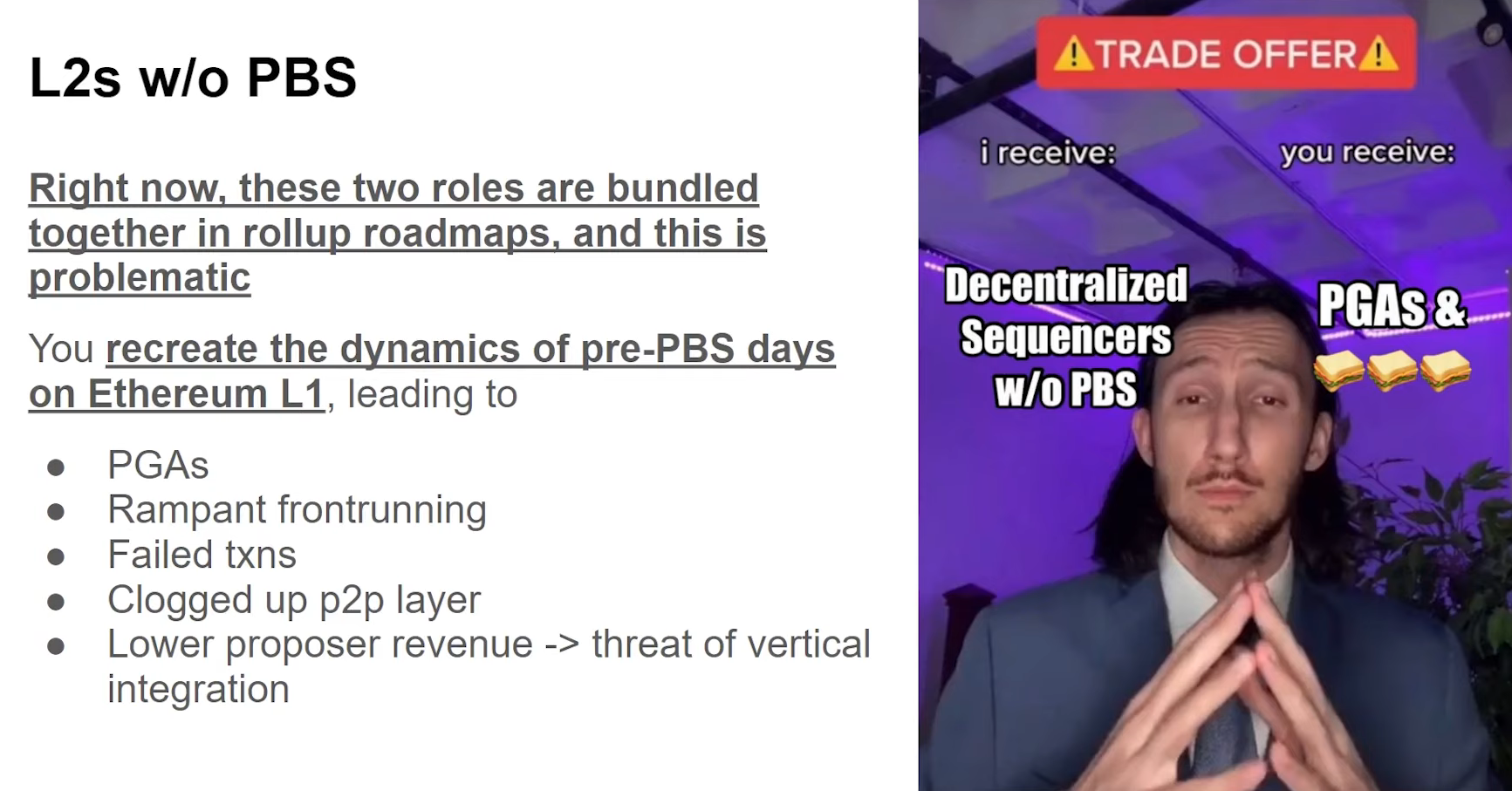

This is a problem, because if we build layer 2s without PBS, we recreate on these rollups the dynamics of Ethereum layer 1's pre-PBS days.

Put another way, all these problems that we talked about two years ago on Ethereum are now back on rollups, and worse than before because MEV is a big deal today.

Hasu thinks it's more accurate to talk about "proposer as a service", because that's what it really is. We need to start separating the proposer and builder roles on layer 2, just like we did on layer 1.

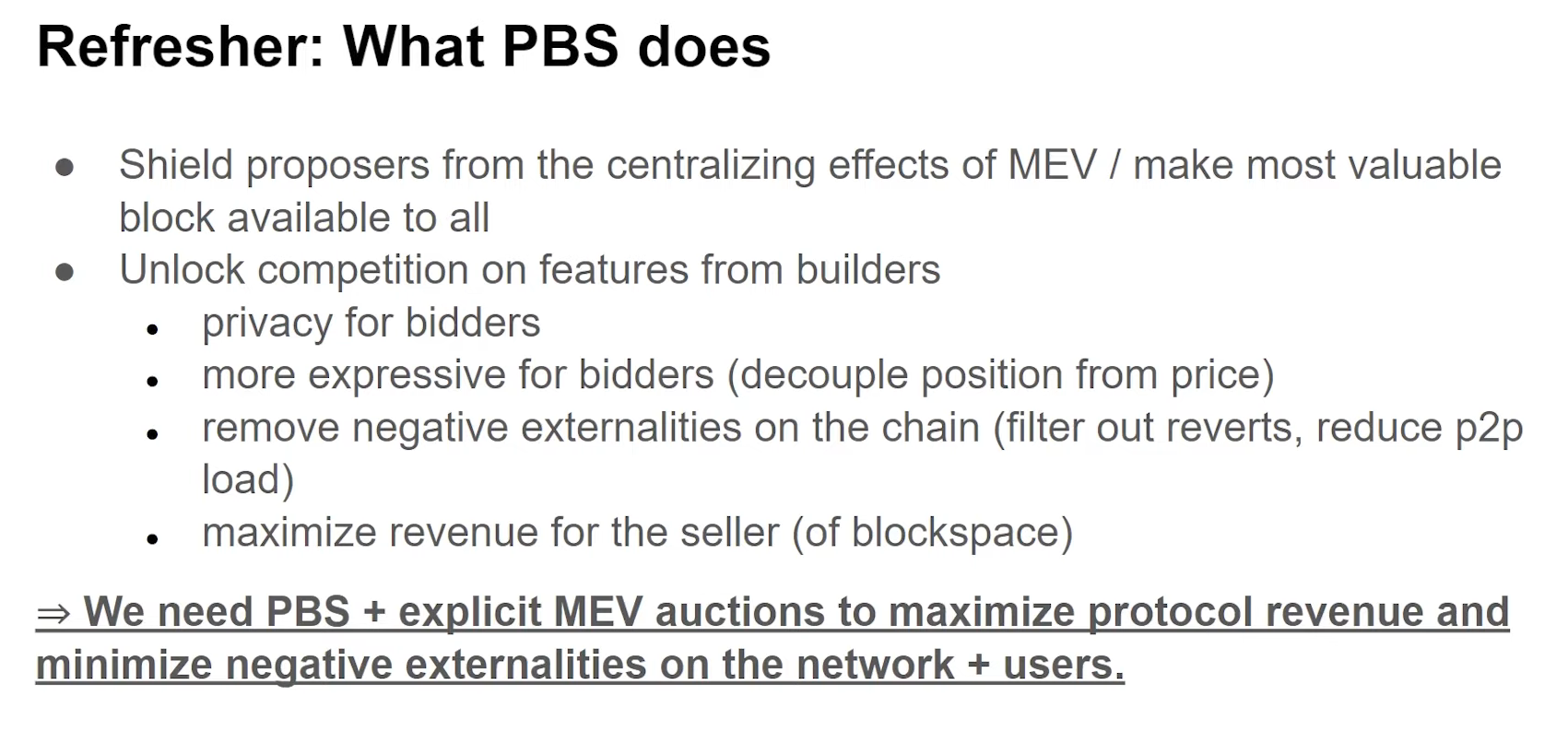

What PBS does (8:00)

Before PBS, we were only able to express basically these two dimensions through a single unit, which was the gas price.

With PBS, whether it's a small solo validator or a big staking pool like Lido or Coinbase, everyone earns the same from MEV when they propose a block, and that's a huge achievement.

PBS on Layer 2 faces novel challenges

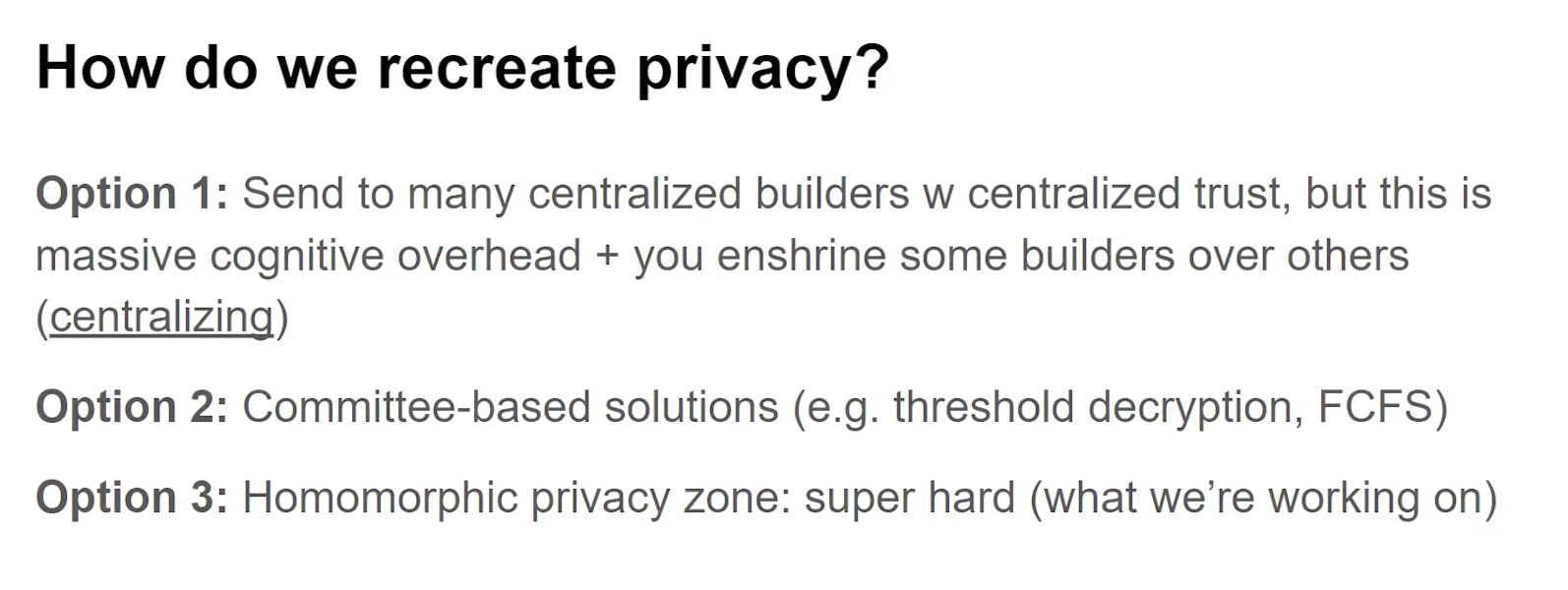

Privacy (9:45)

Privacy is probably the biggest challenge when decentralizing the sequencer. Centralized sequencers make privacy feel easy, but decentralizing it is "hard af". We face a very hard choice: do we remove privacy or decentralize it?

Both paths are very hard and it's not clear that either of them is necessarily better or easier than the other.

- At least centralized block builders compete with each other in a sort of competitive market, but you need to decide which builders you trust and which you don't

- Threshold encryption or first-come, first-served models can be used, but may still be centralized.

- Sharing the same type of privacy among different parties can provide decentralized privacy.

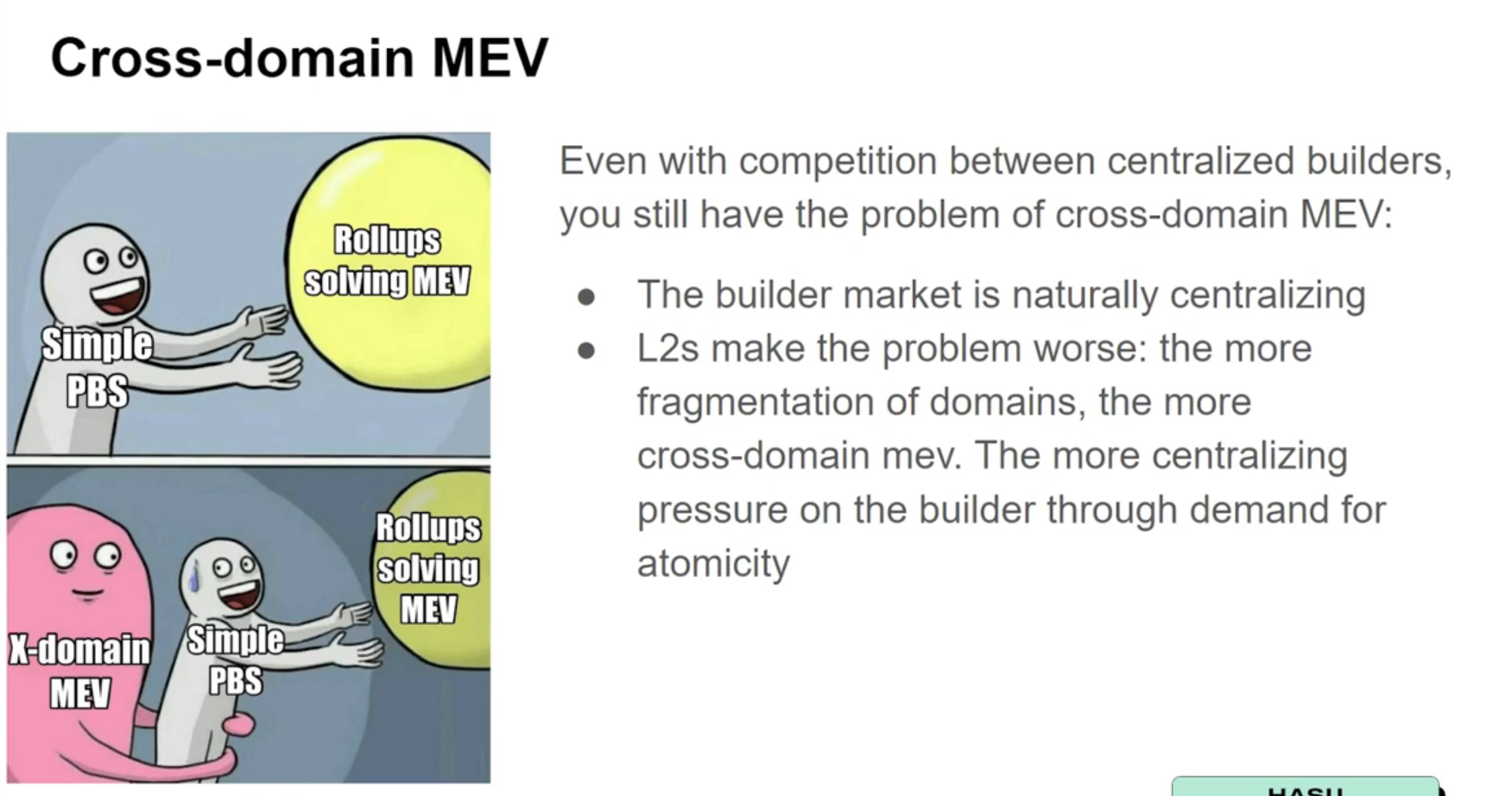

Cross-domain MEV (13:00)

The builder market is already naturally prone to centralization due to economies of scale, and the mere existence of layer 2s makes the problem worse because of liquidity fragmentation.

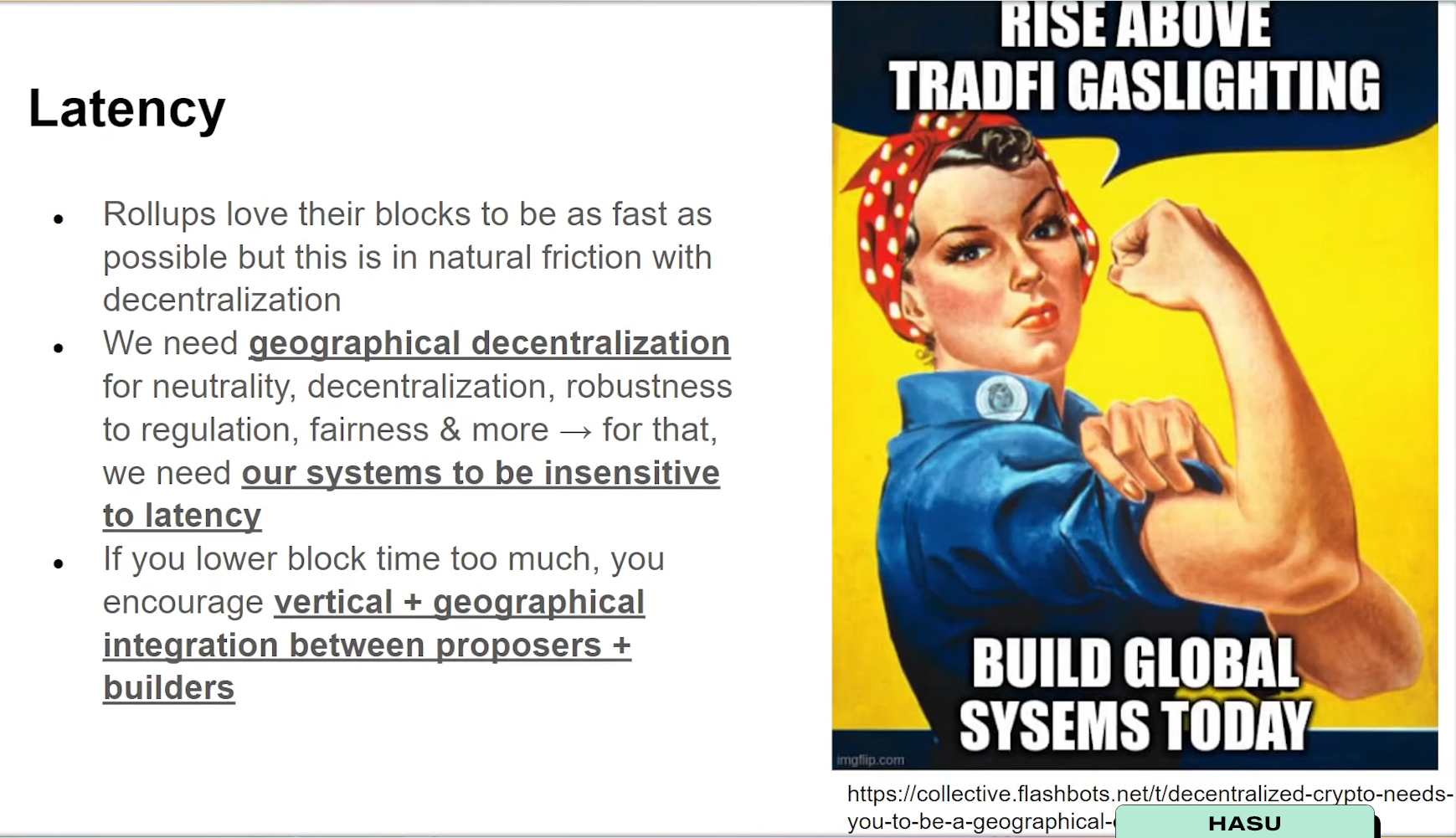

Latency (13:45)

It turned out that users liked faster confirmations so much that rollups had to switch to centralized sequencers with fast pre-confirmations. This demand for low latency creates major friction for decentralization.

We need our systems to be insensitive to latency. If we lower the block time too much, we discourage participation from anyone who is not able to co-locate in the same geographical area.

PBS is essential but not enough

SUAVE (14:30)

We also need to decentralize the builder role itself, and Hasu introduced SUAVE as a proposal aimed at doing exactly that.

In the rollup endgame, we're going to need a decentralized sequencer, a decentralized builder, and a private mempool. Otherwise it's not going to work out.

Conclusion (16:30)

Remember: Sequencer = L1 Proposer + L1 Builder. Separation is great, but we must not ignore the builder part, as we need to decentralize both.

ETHconomics

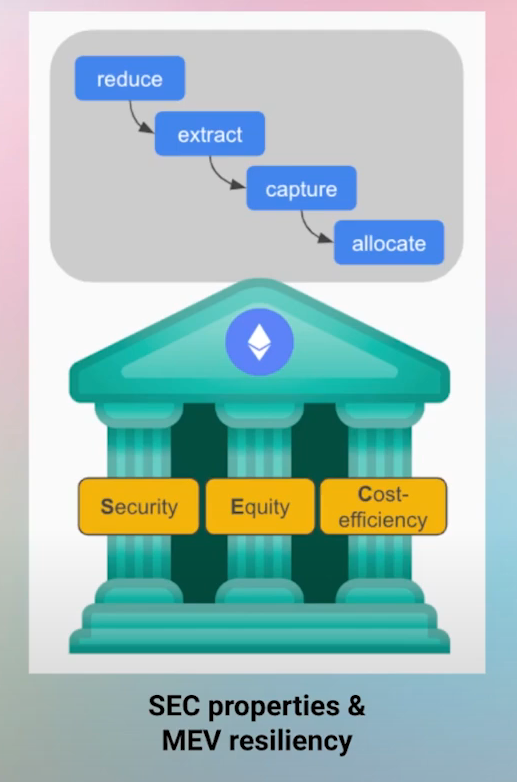

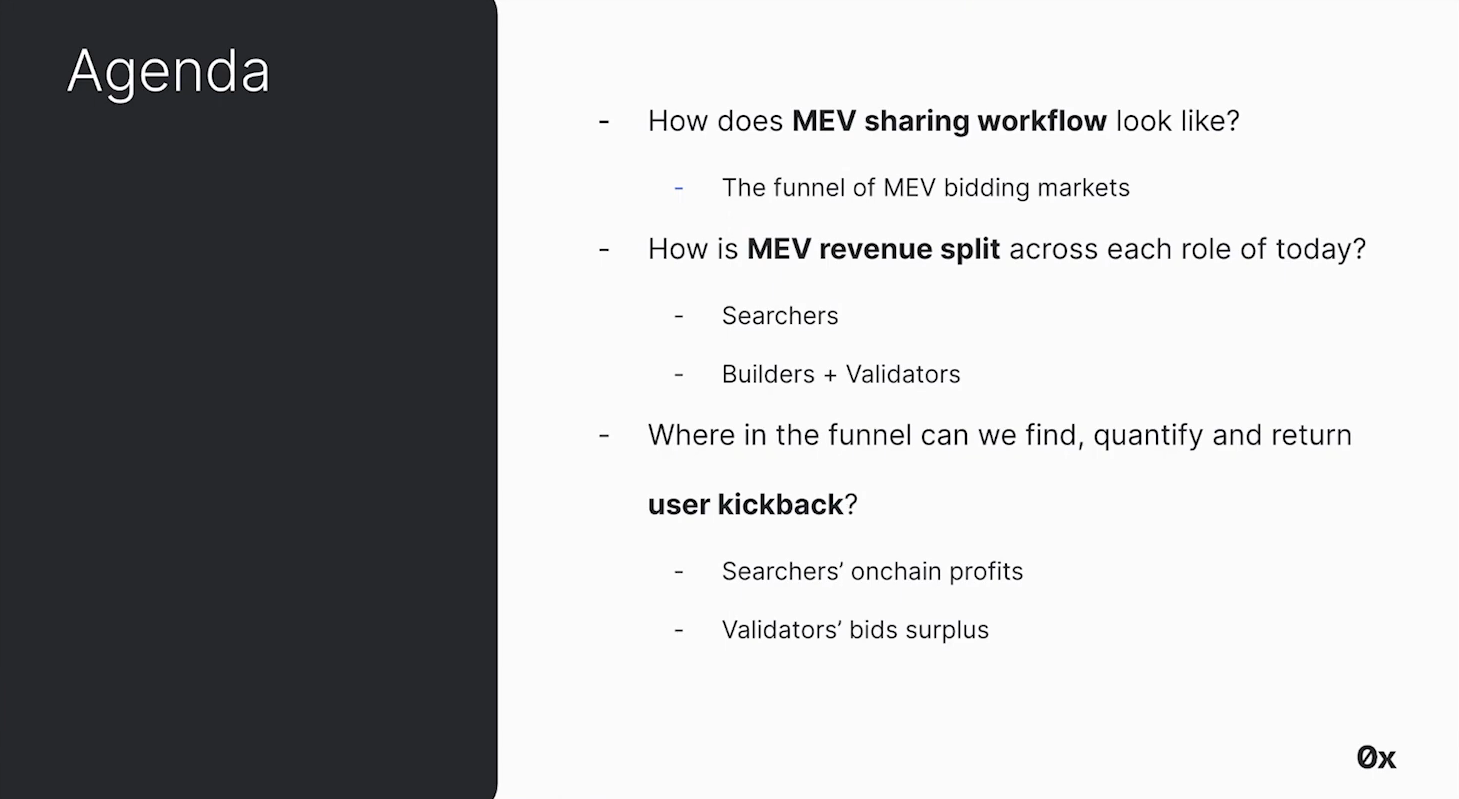

MEVconomics flows (0:30)

MEV is not inherent to the blockchain itself, it arises from the economic games and financial activities built on top of the blockchain.

That said, MEV involves the whole system, so it has to be tackled in 4 ways:

- Reduce MEV through clever protocol design at the game level and the transaction inclusion level (most people working in this area are focused on the transaction inclusion game)

- Efficient methods to extract the remaining MEV (today this is largely searchers/builders)

- The extracted MEV must be captured in a trustless way (Order Flow Auctions or other trustless protocols)

- The captured MEV must be allocated properly to optimize incentives (we essentially need to make sure that this flows back to the users)

What should be the goal of MEV allocation? (5:30)

We tend to think of MEV flows in a supply chain paradigm, but it's more of a complex supply network.

We need to measure and define metrics around inclusion delays, validator deviations from honest behavior, etc. Having data on these complex supply network properties will allow us to design better protocols.

The best way to allocate MEV is something like a fair tax based on the externalities different players impose, which minimizes losses for honest users.

Rig Open Problems (6:30)

Rig Open Problems (ROP) is an initiative to research and measure the complex properties and metrics of the MEV supply network

We are bringing together various ecosystem players to collaborate on understanding real MEV data, with the goal of using that data to design better protocols and systems.

MEVconomics

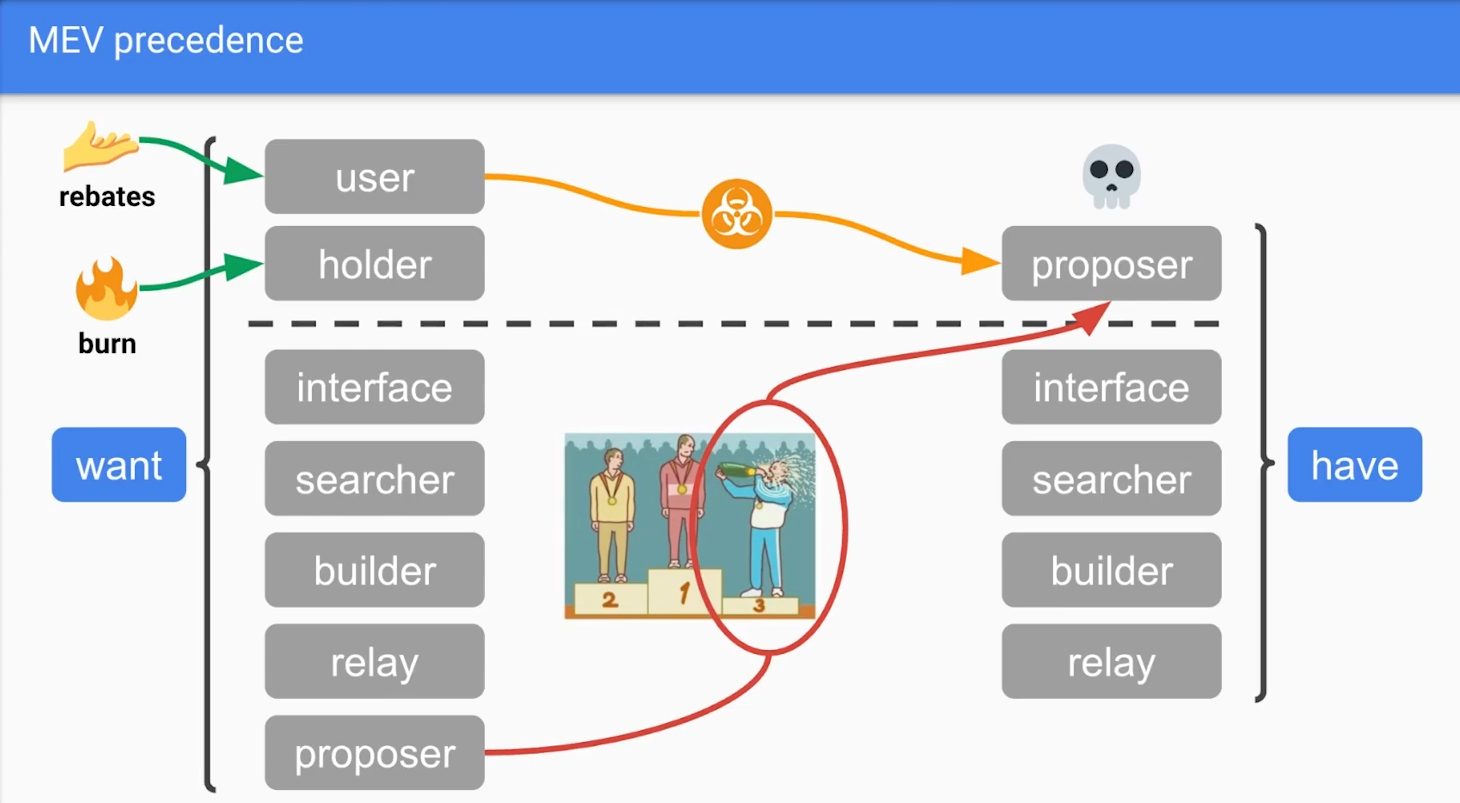

MEV Precedence (7:45)

MEV precedence is the order in which MEV should flow to the various parties.

According to Justin, two parties should have top precedence for MEV:

- Top precedence for users. Users initiate MEV through their on-chain activity, so they should capture the value they create.

- Token holders should have next precedence. They receive value through burning: EIP-1559 already burns base fees, and further "MEV burn" mechanisms could redirect more of the extracted MEV to holders.

Proposers incorrectly have top precedence (9:30)

Currently, proposers just pick the highest MEV bid, and this leads to behaviors that harm users :

- Incentivizes reorgs. Large MEV payouts incentivize proposers to reorg and steal high-value blocks

- Centralization. Since most proposers lose the "lottery", they need to pool MEV, which leads to centralization

- "Rug pulls". Pool operators can steal MEV payouts larger than the collateral/reputation they have at stake

- Overpaying for security. Excess staking demand during MEV spikes dries up ETH supply needed for decentralized stablecoins etc.

All these behaviors are called "Toxic MEV" : proposers get the most out of these economic flows, whereas users are penalized.

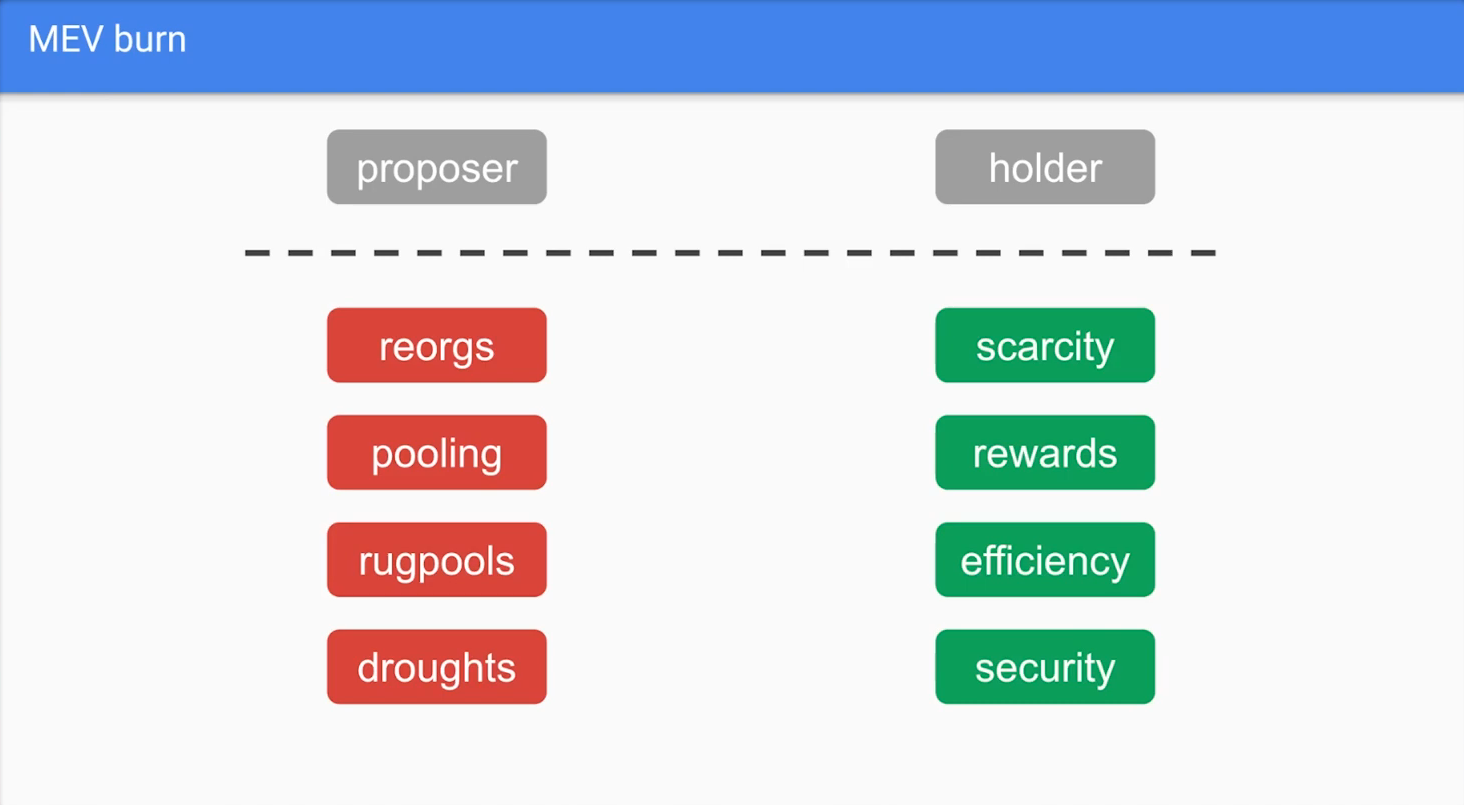

MEV Burn (13:30)

To avoid toxic MEV, we have to redirect MEV away from proposers so that users and token holders have top precedence. One way of achieving this is "MEV Burn"

What is MEV Burn ?

MEV burn consists of burning part of the MEV rather than letting it be captured by malicious actors. In concrete terms:

- Validators (block proposers) select sets of transactions (called payloads) submitted by block builders.

- The builders specify a "payload base fee" which is burned, even if the payload is invalid or revealed too late.

- Validators also impose a subjective "payload base fee floor", based on previously observed base fees.

- Honest validators select payloads that maximize the base fee burned.
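A minimal Python sketch of the honest selection rule, assuming payloads are reduced to a builder name and a payload base fee (in gwei), and assuming the subjective floor is simply the highest base fee the validator observed earlier. `Payload`, `subjective_floor` and `select_payload` are hypothetical names, not part of any specification, and the sketch leaves out the burn itself and the invalid or late payload case.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class Payload:
    builder: str
    payload_base_fee: int  # amount (in gwei) the builder commits to burn


def subjective_floor(observed_base_fees: Sequence[int]) -> int:
    """Hypothetical floor: the highest payload base fee this validator observed earlier."""
    return max(observed_base_fees, default=0)


def select_payload(payloads: Sequence[Payload], floor: int) -> Optional[Payload]:
    """Honest rule: among payloads at or above the floor, pick the one that burns the most."""
    eligible = [p for p in payloads if p.payload_base_fee >= floor]
    if not eligible:
        return None  # nothing clears the floor
    return max(eligible, key=lambda p: p.payload_base_fee)


payloads = [Payload("builder_a", 120), Payload("builder_b", 180), Payload("builder_c", 90)]
floor = subjective_floor([100, 150])
chosen = select_payload(payloads, floor)
print(chosen.builder, chosen.payload_base_fee)  # builder_b 180
```

With a floor of 150, only `builder_b` clears it, so its 180 gwei would be burned; a floor above every bid (say 200) returns `None`.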

In this way, a portion of potential MEV is burned at each block, smoothing out peaks and redistributing MEV to ETH holders. This discourages manipulation of transaction order and improves security & economic sustainability of the network.

MEV burn works in conjunction with EIP-1559 to put a price on two network externalities: congestion (EIP-1559) and contention (MEV burn).

Benefits (17:40)

- Increases ETH scarcity. Burning MEV increases ETH scarcity, further establishing ETH as the dominant money and settlement asset for Ethereum

- More equal rewards. Currently, the MEV portion of rewards is very unequal : most validators come out behind while a few make a lot. With MEV burn, everyone earns roughly the same rewards.

- More efficient security, in the sense that you don't need as much issuance to pay for economic security

- Free ETH supply for economic bandwidth. With less ETH locked in staking, more is available for decentralized stablecoins and other economic needs
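To make the "more equal rewards" point concrete, here is a toy Python simulation. It assumes that consensus rewards are split evenly among validators every slot (ignoring proposer rewards and priority fees), that MEV per block follows a heavy-tailed Pareto distribution, and that MEV burn destroys all of it. These are illustrative assumptions, not measurements.

```python
import random
import statistics
from typing import List


def simulate(num_validators: int, slots: int, burn: bool, seed: int = 0) -> List[float]:
    """Toy model of each validator's total rewards; every number here is hypothetical."""
    rng = random.Random(seed)
    issuance_per_slot = 1.0  # consensus reward per slot, split evenly (approximation)
    rewards = [0.0] * num_validators
    for _ in range(slots):
        for v in range(num_validators):
            rewards[v] += issuance_per_slot / num_validators
        proposer = rng.randrange(num_validators)
        mev = rng.paretovariate(1.5)  # heavy-tailed MEV: mostly small, occasional spikes
        if not burn:
            rewards[proposer] += mev  # the proposer keeps the whole MEV "lottery ticket"
        # with MEV burn, this MEV is destroyed instead of being paid to the proposer
    return rewards


lottery = simulate(num_validators=200, slots=2_000, burn=False)
burned = simulate(num_validators=200, slots=2_000, burn=True)
mean = statistics.mean(lottery)
below_mean = sum(r < mean for r in lottery) / len(lottery)
print(f"without burn: stdev {statistics.pstdev(lottery):.2f}, {below_mean:.0%} below average")
print(f"with burn:    stdev {statistics.pstdev(burned):.2f}")
```

Without burn, a handful of lucky proposers pull the average up, typically leaving most validators below it; with burn, every validator in this toy model ends with exactly the same total.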

Protocol Credibility & Principal Agent Problems

PEPC "Pepsi" protocol (20:15)

PEPC (Protocol-enforced proposer commitments) is a proposed mechanism to allow Ethereum validators to enter into commitments with third parties in a permissionless and trustless way.

The idea is to allow validators to make commitments that are enforced by the protocol, so that validators cannot violate the commitments they make. For example, a validator could commit to letting a specific builder create their block. The protocol would enforce this by only considering a block valid if it was made by that specific builder.

How it could work :

- Validators can submit commitments expressed as smart contract code when they propose a block. This code defines the commitments made by the validator.

- Other parties can check this code to verify the validator's commitments before interacting with them.

- The protocol enforces the commitments by only considering blocks valid if they satisfy the commitments made by the proposing validator
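A minimal Python sketch of the enforcement idea, assuming commitments can be modeled as predicates over a block. In the PEPC proposal they would be expressed as code evaluated by the protocol; `Block`, `built_by`, `must_include` and `is_valid` are hypothetical names used only for illustration.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass(frozen=True)
class Block:
    builder: str
    transactions: List[str]


# Hypothetical model: a commitment is a predicate over the proposed block.
Commitment = Callable[[Block], bool]


def built_by(builder: str) -> Commitment:
    """Commitment: only blocks made by `builder` are acceptable."""
    return lambda block: block.builder == builder


def must_include(tx: str) -> Commitment:
    """Commitment: the block must contain transaction `tx`."""
    return lambda block: tx in block.transactions


def is_valid(block: Block, commitments: List[Commitment]) -> bool:
    """Protocol-side check: a block counts only if every commitment of its proposer holds."""
    return all(commitment(block) for commitment in commitments)


commitments = [built_by("builder_x"), must_include("0xabc")]
print(is_valid(Block("builder_x", ["0xabc", "0xdef"]), commitments))  # True
print(is_valid(Block("builder_y", ["0xabc"]), commitments))           # False
```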

PEPC aims to provide the infrastructure for validators to outsource tasks while still retaining security guarantees from the protocol. This could allow more decentralization by letting less sophisticated validators outsource complex tasks.

Protocol Credibility (21:40)

Protocol Credibility = Protocol Introspection + Protocol Agency

Iterative community-driven protocol upgrades have worked well so far. The problem is that, as economies grow more complex, more things get delegated, leading to misaligned incentives across fractured trust domains. These are called Principal-Agent Problems (PAP), and they lead to credibility risks.

PEPC introduces "programmable protocol credibility" to flexibly address principal-agent problems : solutions to PAPs become more "credible" from the protocol's point of view, since the validator set will defend the commitments.

However, this may conflict with the goal of minimizing the protocol commitments the community must defend indefinitely. In any case, work on credibility and commitments related to this theory is in progress

Q&A

Optimizing MEV allocation to align incentives (26:50)

Ideal MEV allocation is like a fair tax based on the externalities users impose on the system : this aligns incentives and minimizes losses.

But given the complexity of the issues, we only have elements of an answer :

- Clearly separate the consensus layer (issuance) and the execution layer (fiscal) when managing MEV. Part of MEV should go to validators to maintain incentives, and another part to users. For example, in the kind of allocation an OFA system performs, there is a direct transfer back to the user

- MEV smoothing (via MEV Burn) doesn't fully solve issues. It doesn't address economic bandwidth drought from excess staking demand, and centralized committees create other risks

- Auctioning validator slots internalizes MEV. Validators pay more if they benefit from MEV at the execution layer, which links consensus-layer and execution-layer incentives

The key is using data to dynamically calibrate multi-layered allocation mechanisms that align user, validator, and protocol incentives. MEV burn and validator auctions could work together 👀

Layer 2s (32:20)

About Rollups

- Rollup teams are well-incentivized already with large market caps. They require large teams and take substantial risk

- Rollup capitalism will become commoditized over time. Like electricity and water, it will eventually need less incentivization

- Rollups want maximal decentralization, progressing from centralized sequencers to decentralized ones

- Rollups need fast execution and a decentralization plan. A credible neutrality roadmap is vital given network effects

Non-based rollups will dominate in the short/medium term

About Based Rollups

- Based rollups are part of the decentralization progression. They shift sequencing from L2 to L1, leading toward enshrined rollups

- Based rollups offer credibility, security and decentralization. This aligns with the end goal of a mature and maximally neutral rollup

- However, it's unclear how based rollups can provide pre-confirmations, although solutions like Eigenlayer may enable these services

Based rollups are part of the exploration of the full rollup design space

Do we need enshrined PBS ? (37:45)

The MEV space evolves quickly, week to week. Given this pace of change, we need more research before enshrining anything :

- See more data and experimentation before committing

- Figure out the optimal shape before we need to use it

Alternative trust-minimizing solutions are worth exploring before committing. Relay operators want enshrined PBS, but being a relay operator has risks like hacks, collusion or theft. We need clever techniques to remove relay trust :

- Flashbots is working on using SGX to remove the relay

- The optimistic relaying roadmap moves PBS forward incrementally

That said, according to participants, we'll have some sort of reasonable equilibrium in 1.5-2 years

MEVconomics in L2

A closer look at the Sequencer's role

The MEV Land

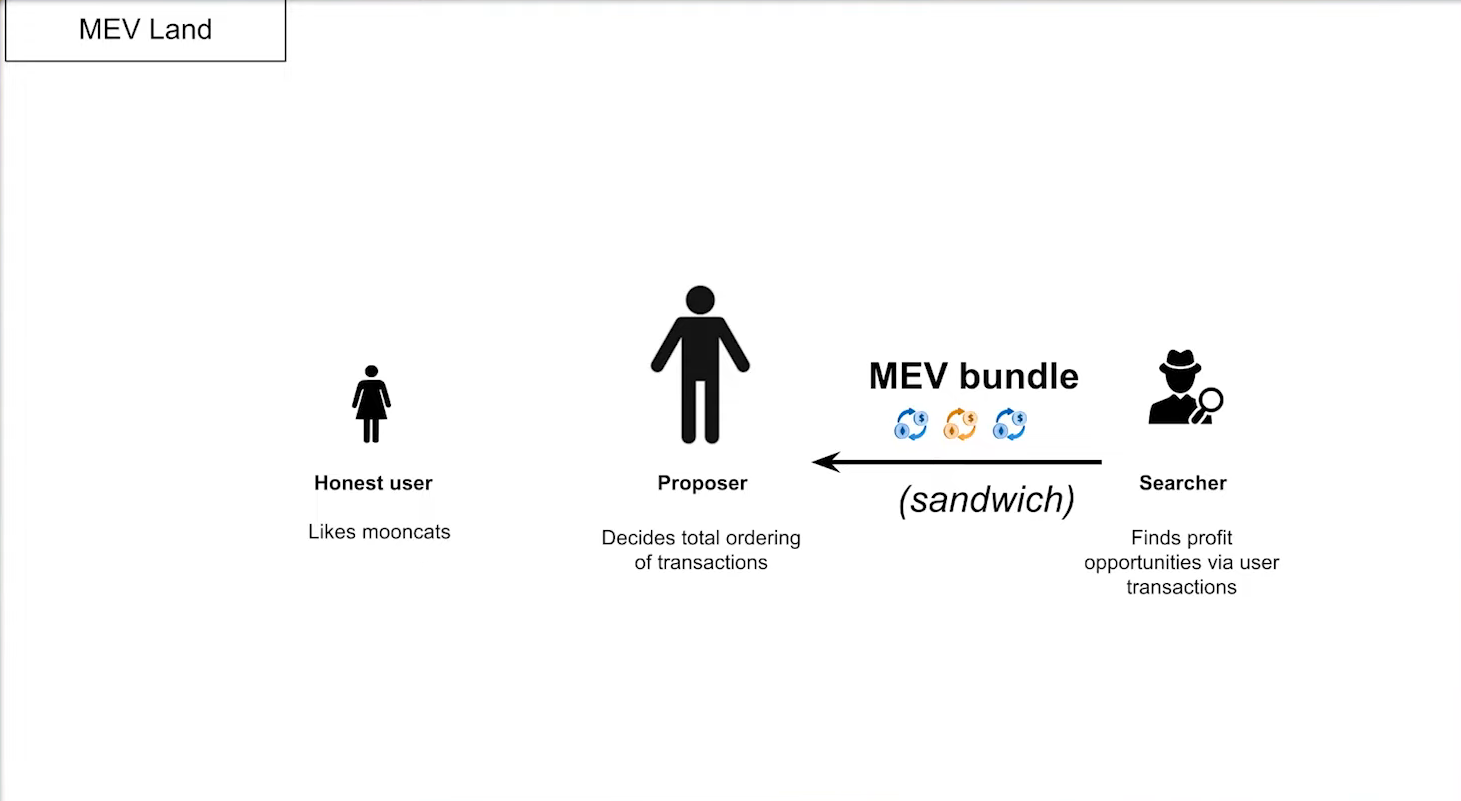

MEV Land population (0:45)

Three agents are involved :

- Honest user : All the users want is to buy/sell their tokens.

- Proposer : Orders pending transactions and decides their final ordering in the next block

- Searcher : The MEV Bots that look for MEV opportunities, bundle them up and pay proposers to include them.

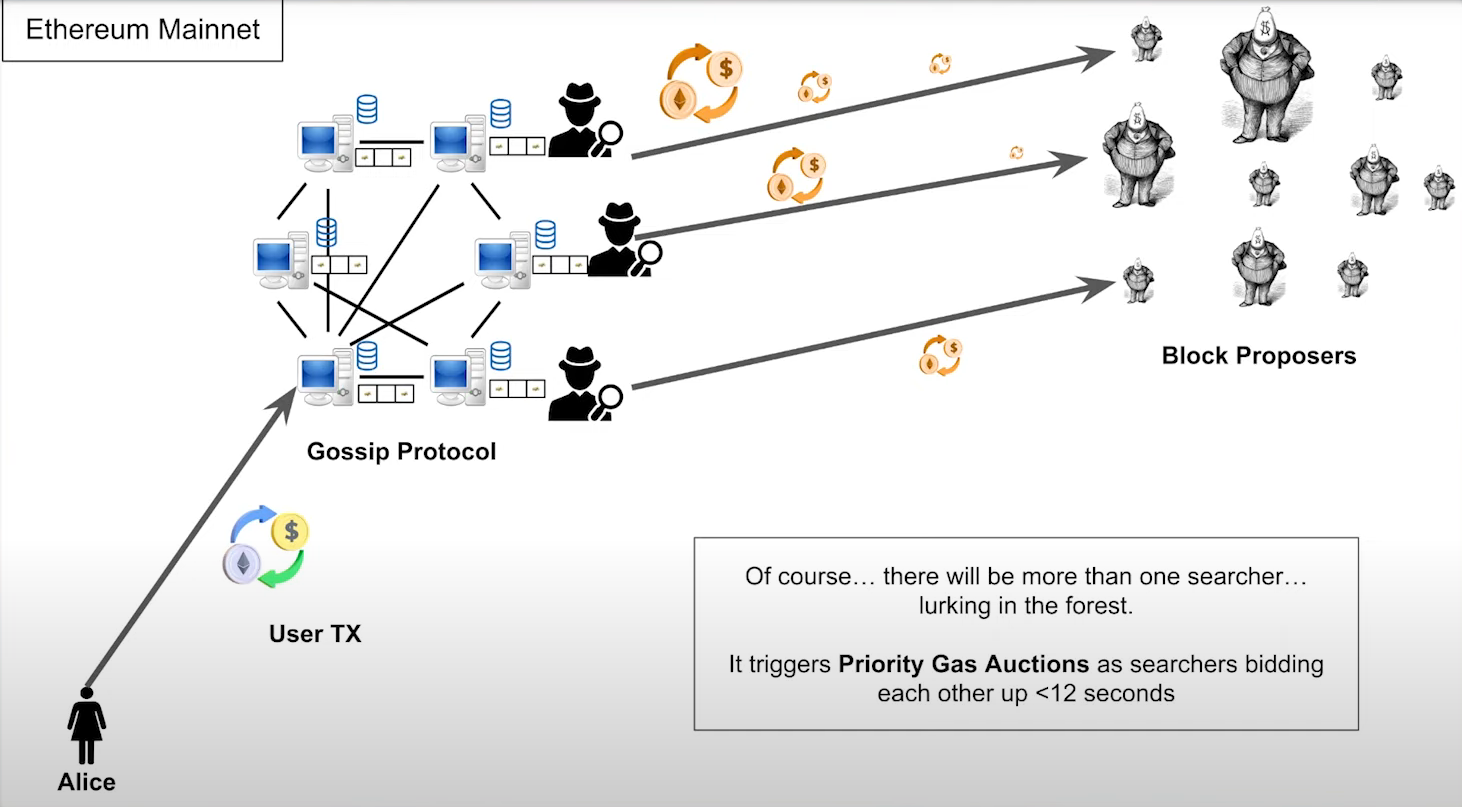

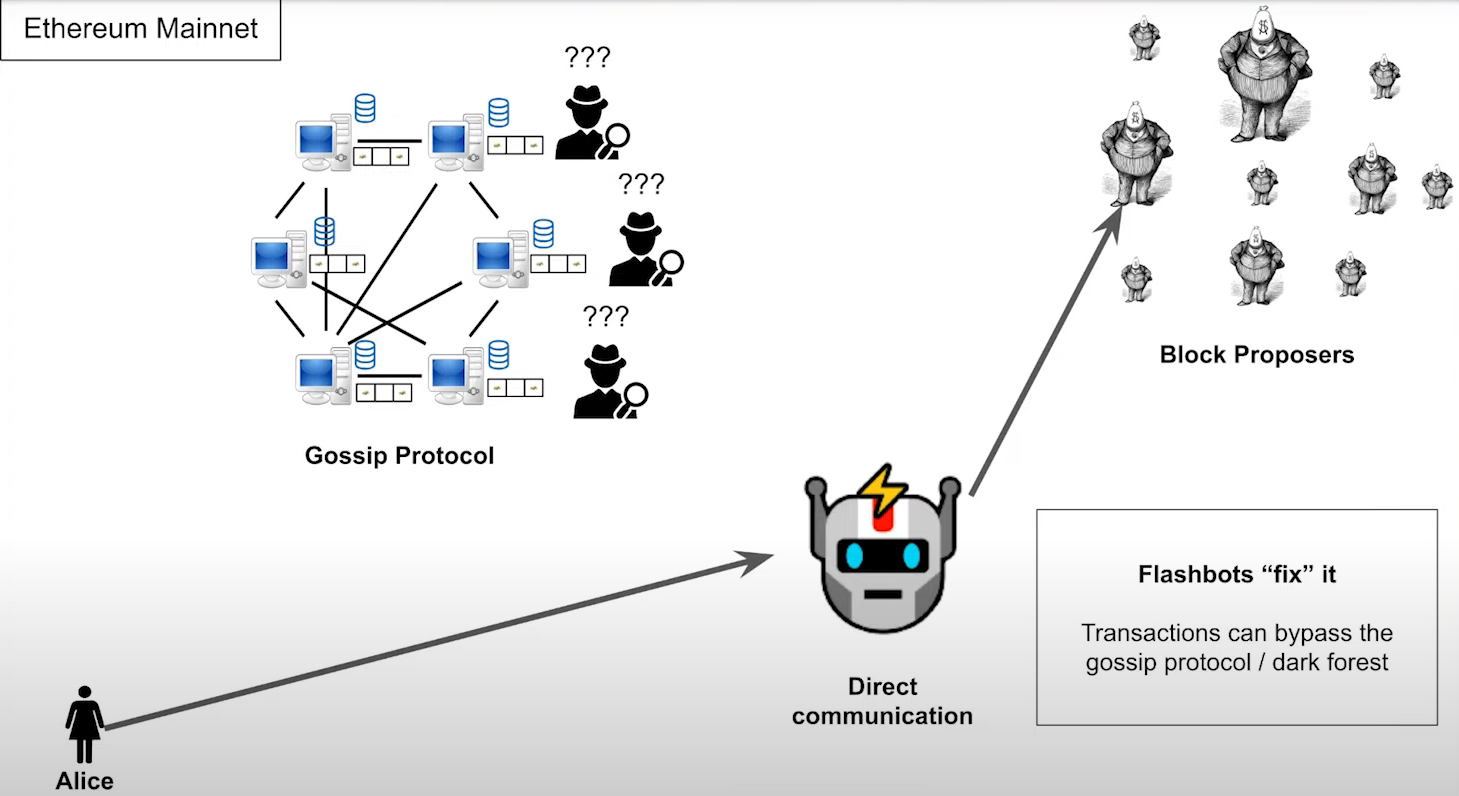

Life Cycle of a transaction before flashbots (1:15)

How does a user get their transaction to the proposer through the peer-to-peer network ?

- Alice sends a transaction to a peer

- Within 1-2 seconds, every peer in the network gets a copy of this transaction, including the proposers

- They'll take this transaction and hopefully include it in their block.

Problem : this is a dark forest. Anyone can be on the network, including searchers, who can listen for the user's transaction, inspect it, find an MEV opportunity and then front-run the user to steal the profit. This leads to "Priority Gas Auctions" (PGAs), where searchers outbid each other.

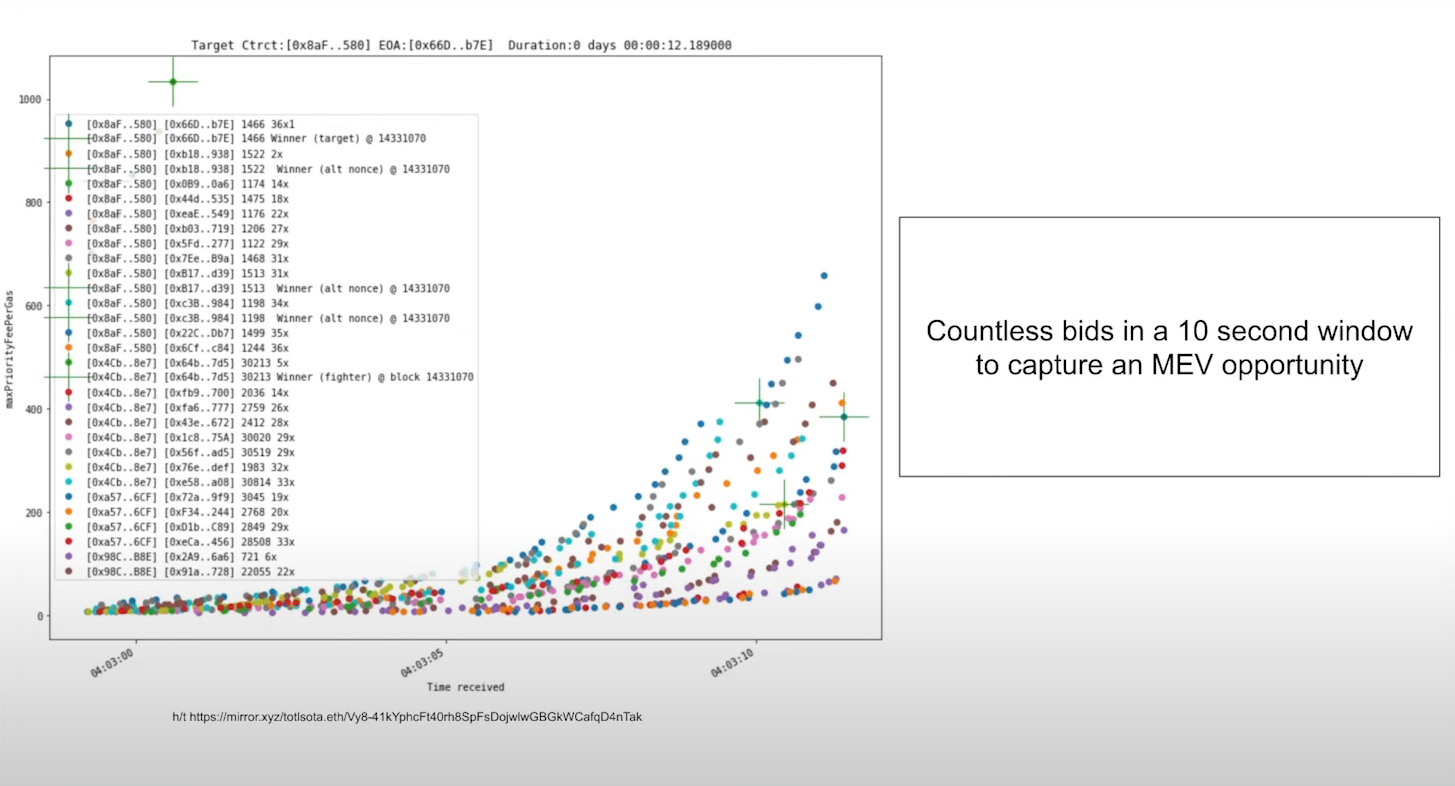

The graph shows hundreds of transactions being sent within a ten-second period. This raises 2 issues :

- Priority Gas Auctions are wasteful, as there are lots of failed transactions

- MEV is unrestricted. We're taking the user's transaction, throwing it to the wolves and just hoping it gets to the other side
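A toy Python model of a Priority Gas Auction, assuming searchers take turns outbidding each other by a fixed percentage until the next bid would exceed the opportunity's value. The 10% step, the starting bid and the round-robin turn order are hypothetical; the point is only that a single opportunity generates many broadcast transactions, of which only one can capture the profit.

```python
from typing import Tuple


def priority_gas_auction(opportunity_value: float, searchers: int,
                         start_bid: float = 1.0, min_raise: float = 0.10) -> Tuple[int, float, int]:
    """Toy PGA: searchers take turns raising the gas bid while it is still profitable.

    Returns (winning searcher, winning bid, number of transactions broadcast).
    """
    leader, bid, broadcast = 0, start_bid, 1  # searcher 0 opens with the first bid
    while True:
        new_bid = bid * (1 + min_raise)  # each new bid must beat the current one
        if new_bid >= opportunity_value:
            return leader, bid, broadcast  # outbidding would no longer pay off
        leader = (leader + 1) % searchers  # the next searcher outbids the leader
        bid, broadcast = new_bid, broadcast + 1


winner, winning_bid, sent = priority_gas_auction(opportunity_value=100.0, searchers=2)
print(winner, sent)  # 0 49
```

Here 49 transactions are broadcast for a single opportunity, and almost all of its value ends up paid as gas.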

Life Cycle of a transaction after flashbots (3:30)

Flashbots came as a solution to unrestricted MEV by allowing users to send transactions directly to Flashbots, which forwarded them to the block proposer instead of going through the peer-to-peer network.

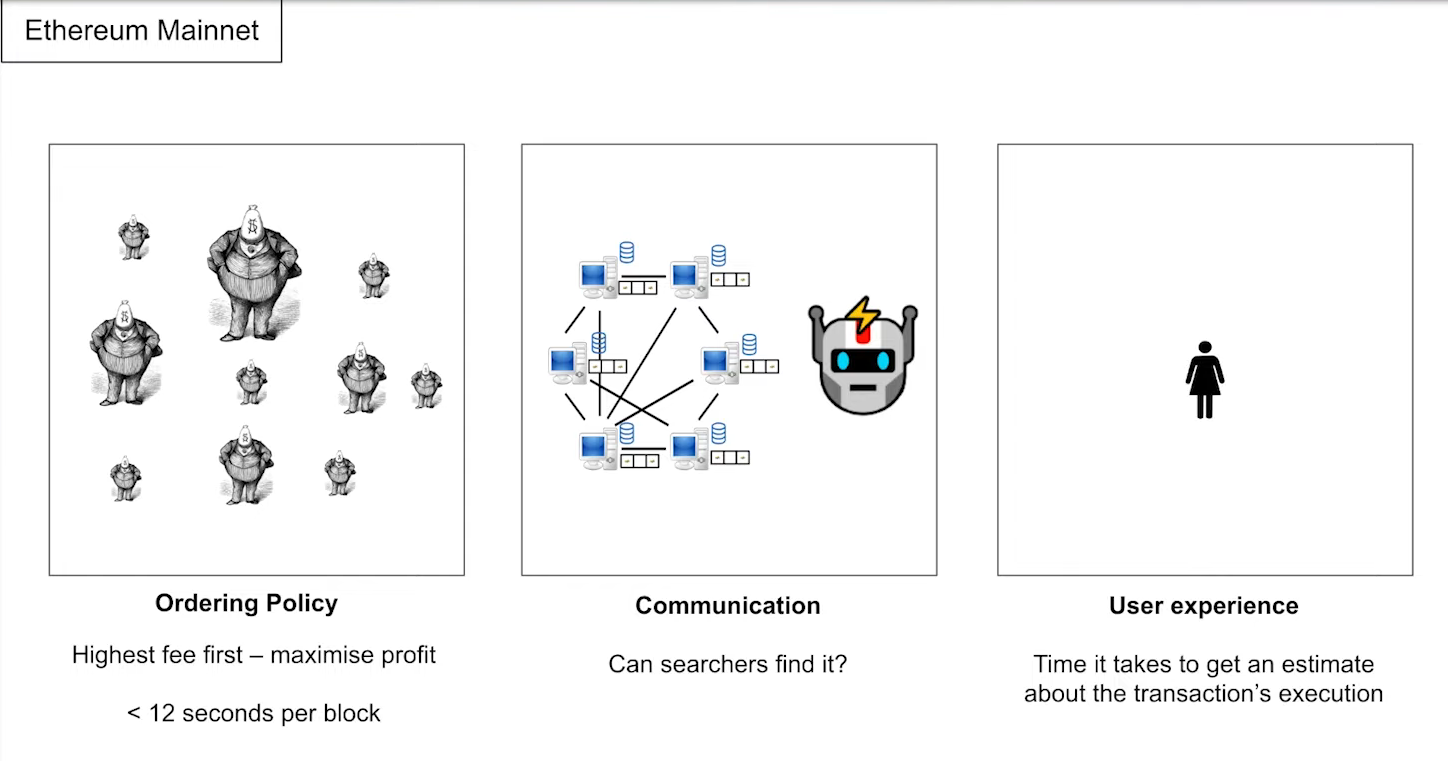

What can we extract from this scenario ? (4:00)

- The ordering policy : transactions are picked by fee, highest fee first, with around 12 seconds to do this.

- Communication : How does the block proposer learn about the transaction and how do the searchers find it as well ?

- User Experience : How long does it take for a user to learn that their transaction is confirmed and how it was executed ? (at the end of the day, MEV exists thanks to users)

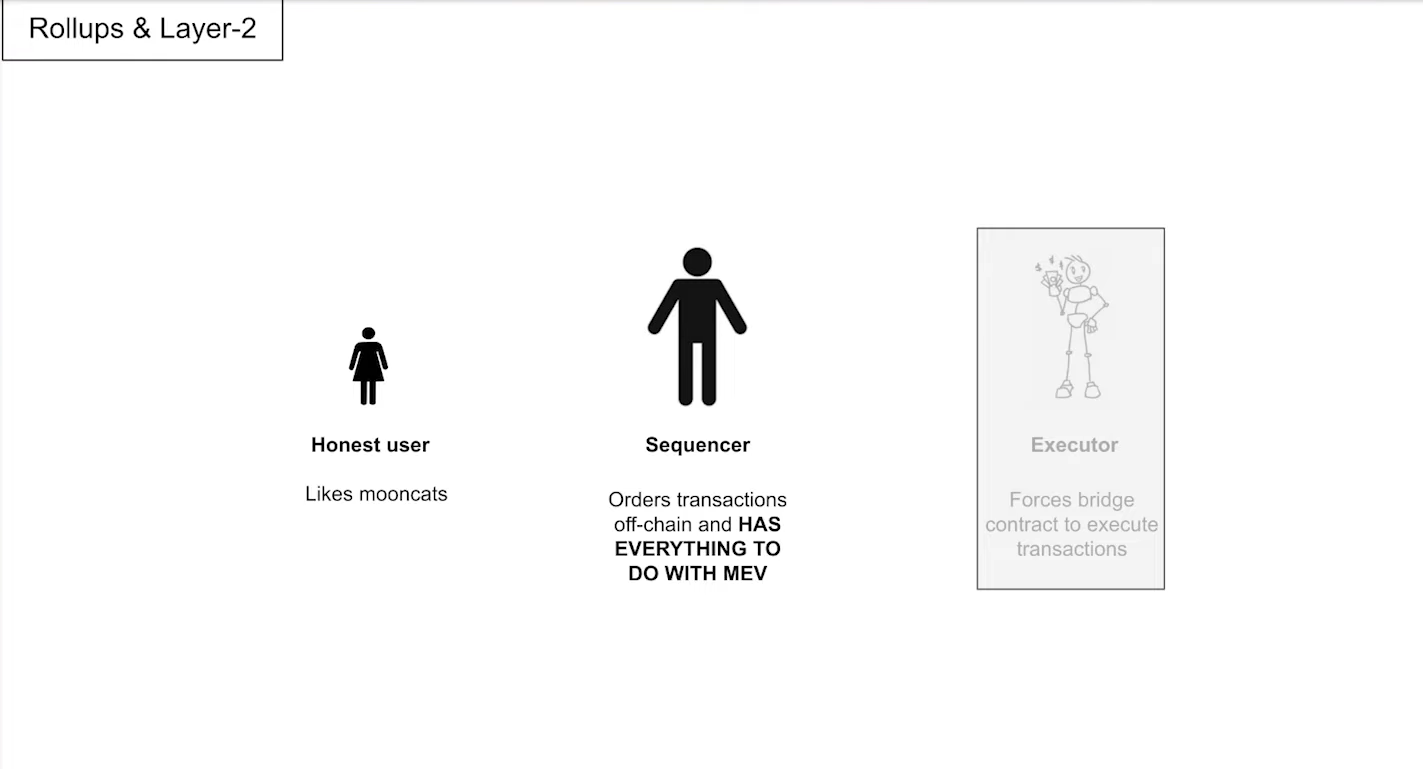

The Layer 2 MEV Land (4:30)

There are three actors in rollup land as well :

- Honest user : Initiates transactions.

- Sequencer : Orders pending transactions for inclusion in a rollup block.

- Executor : Executes transactions.

The sequencer has everything to do with MEV, as it decides the list of transactions and their ordering. The lifecycle is also pretty similar

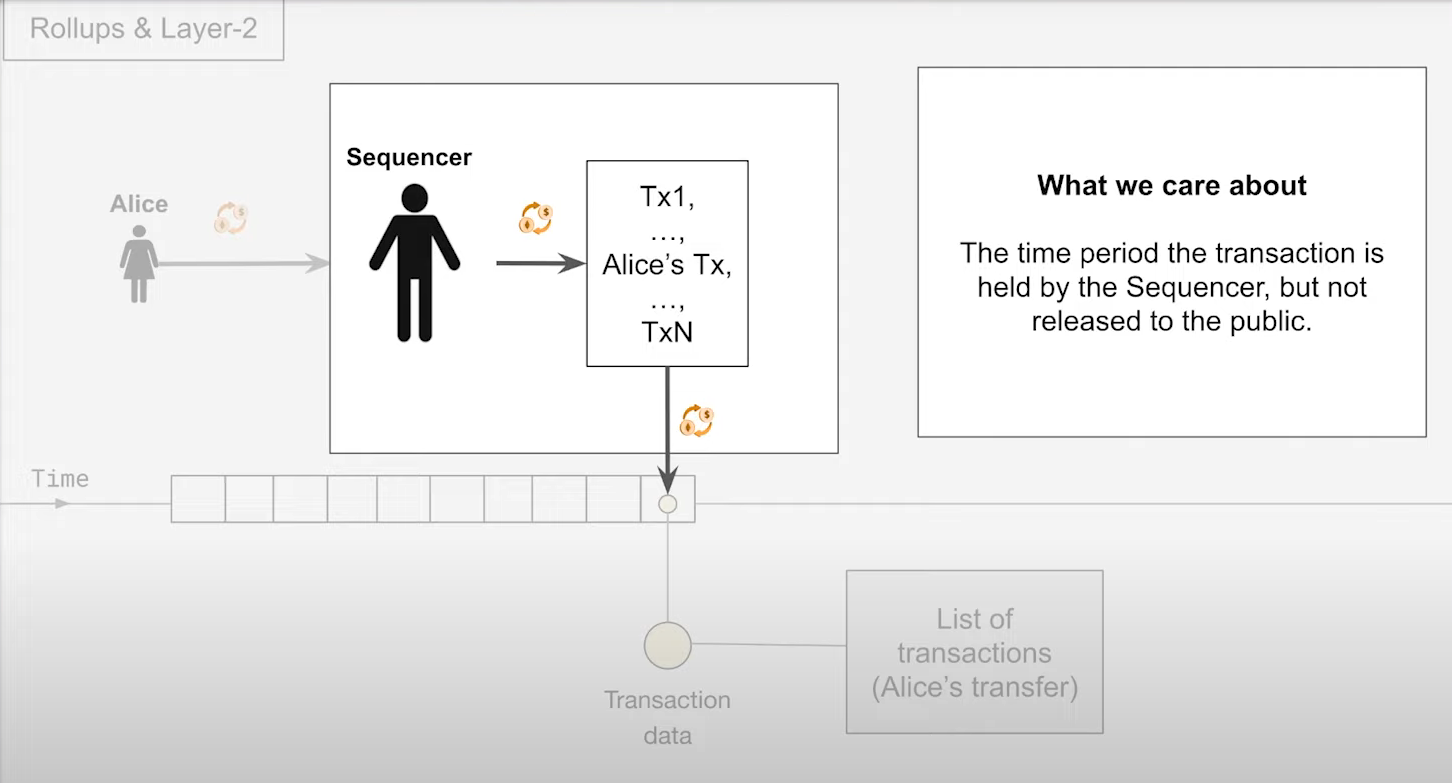

Lifecycle of a transaction in a Sequencer (5:15)

- The sequencer has a list of pending transactions

- It orders the transactions according to its ordering policy

- It posts them onto Ethereum

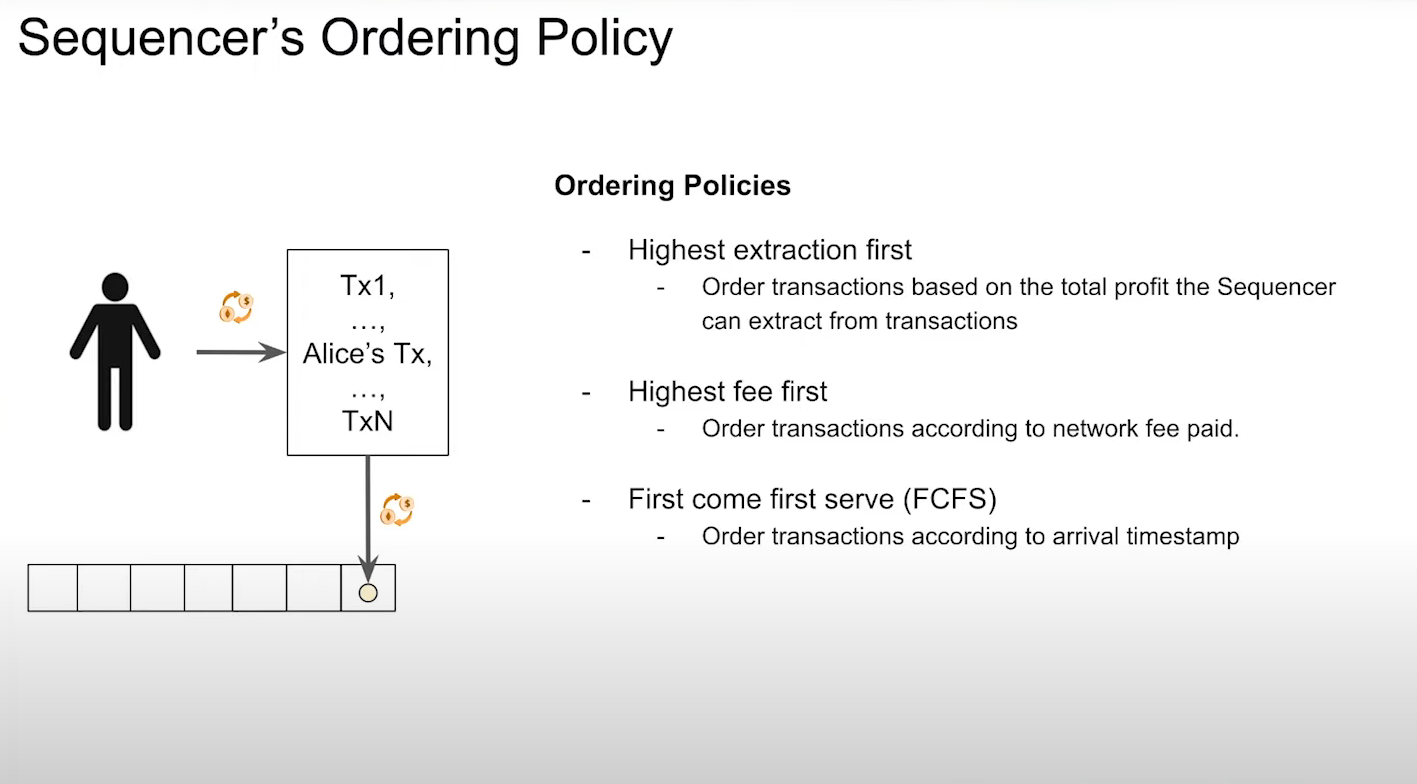

Sequencer ordering policies

Let's assume Alice can communicate directly with the sequencer. When does Alice get a response from the sequencer, and what type of response does she get ?

It depends on how the sequencer orders these transactions, so what we really care about is the sequencer's ordering policy.
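The lifecycle stays the same whatever the policy; only the ordering step changes. A minimal Python sketch, assuming each pending transaction carries a fee and a sequencer-assigned arrival timestamp (`PendingTx`, `highest_fee_first` and `first_come_first_serve` are hypothetical names), shows how two of the policies discussed in this talk order the same pending list.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class PendingTx:
    sender: str
    fee: int         # fee or payment offered to the sequencer
    arrival_ms: int  # timestamp assigned by the sequencer on receipt


def highest_fee_first(txs: List[PendingTx]) -> List[PendingTx]:
    # Ties are broken by arrival time so the result is deterministic.
    return sorted(txs, key=lambda tx: (-tx.fee, tx.arrival_ms))


def first_come_first_serve(txs: List[PendingTx]) -> List[PendingTx]:
    return sorted(txs, key=lambda tx: tx.arrival_ms)


pending = [PendingTx("alice", fee=1, arrival_ms=0),
           PendingTx("searcher", fee=50, arrival_ms=40),
           PendingTx("bob", fee=5, arrival_ms=20)]
print([tx.sender for tx in highest_fee_first(pending)])       # ['searcher', 'bob', 'alice']
print([tx.sender for tx in first_come_first_serve(pending)])  # ['alice', 'bob', 'searcher']
```

Under highest fee first, the searcher who arrived last still lands first because it paid the most; under first come first serve, arrival time alone decides.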

Highest Extraction first (6:15)

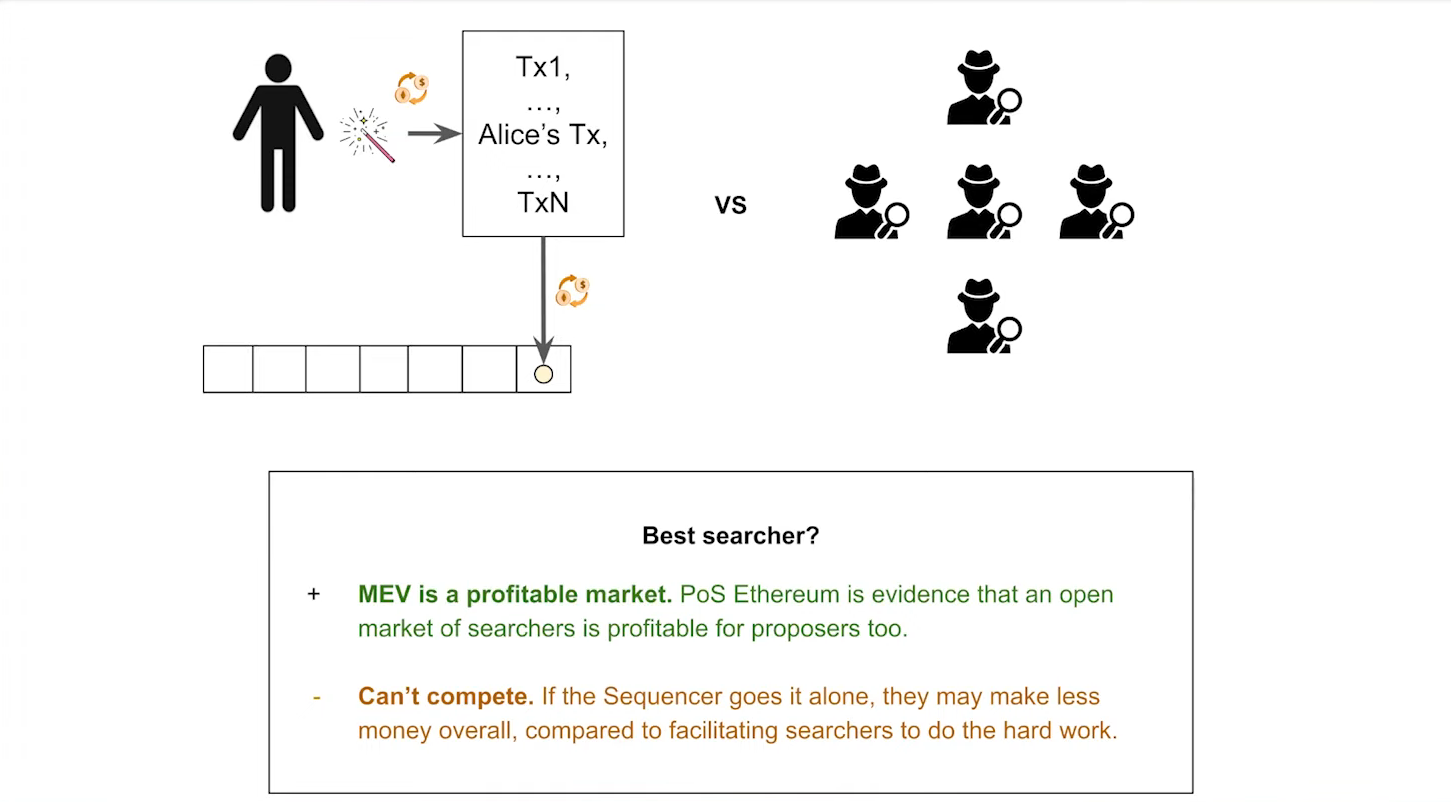

When people talk about sequencers, MEV and layer 2, this is the first ordering policy that comes up.

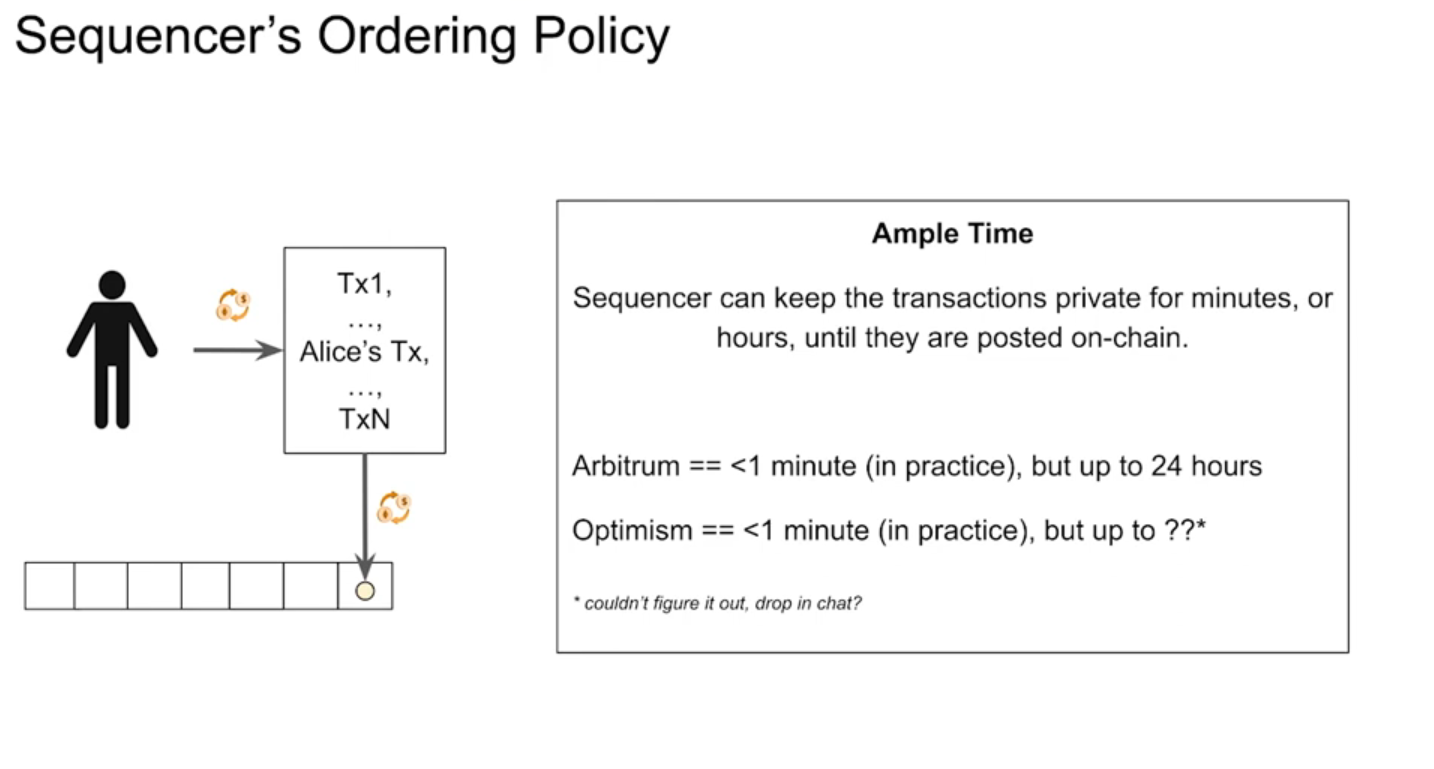

A user can be offered "free transactions", since the MEV rewards cover the cost. But if we allow the sequencer to spend 2 to 3 hours extracting value before confirming, that's a bad experience for the user

According to Patrick, we don't have to solve the fair ordering problem for now. There's a very good chance that a sequencer can make more money by having an open market of searchers do the hard work as opposed to trying to extract the MEV themselves.

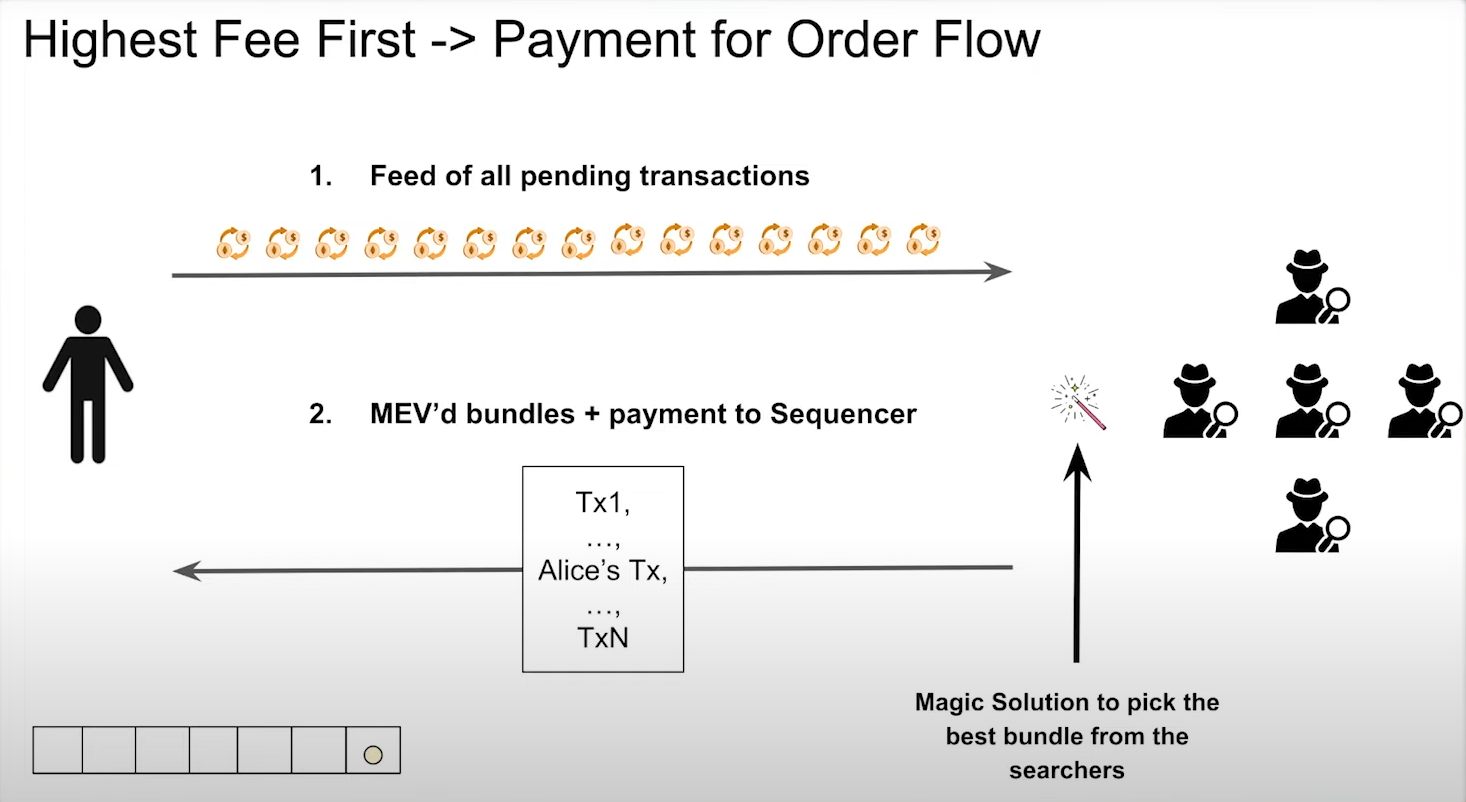

Highest fee first (9:15)

This policy is similar to Ethereum's current system :

- Sequencers receive pending transaction lists from searchers.

- Searchers extract MEV and send bundles to the sequencer with payment.

- Sequencer orders transactions based on the payments received

The user experience is the same as with Highest Extraction First : users could still have free transactions, because the transaction fee is actually the extracted MEV. But again, confirmation could take a long time.

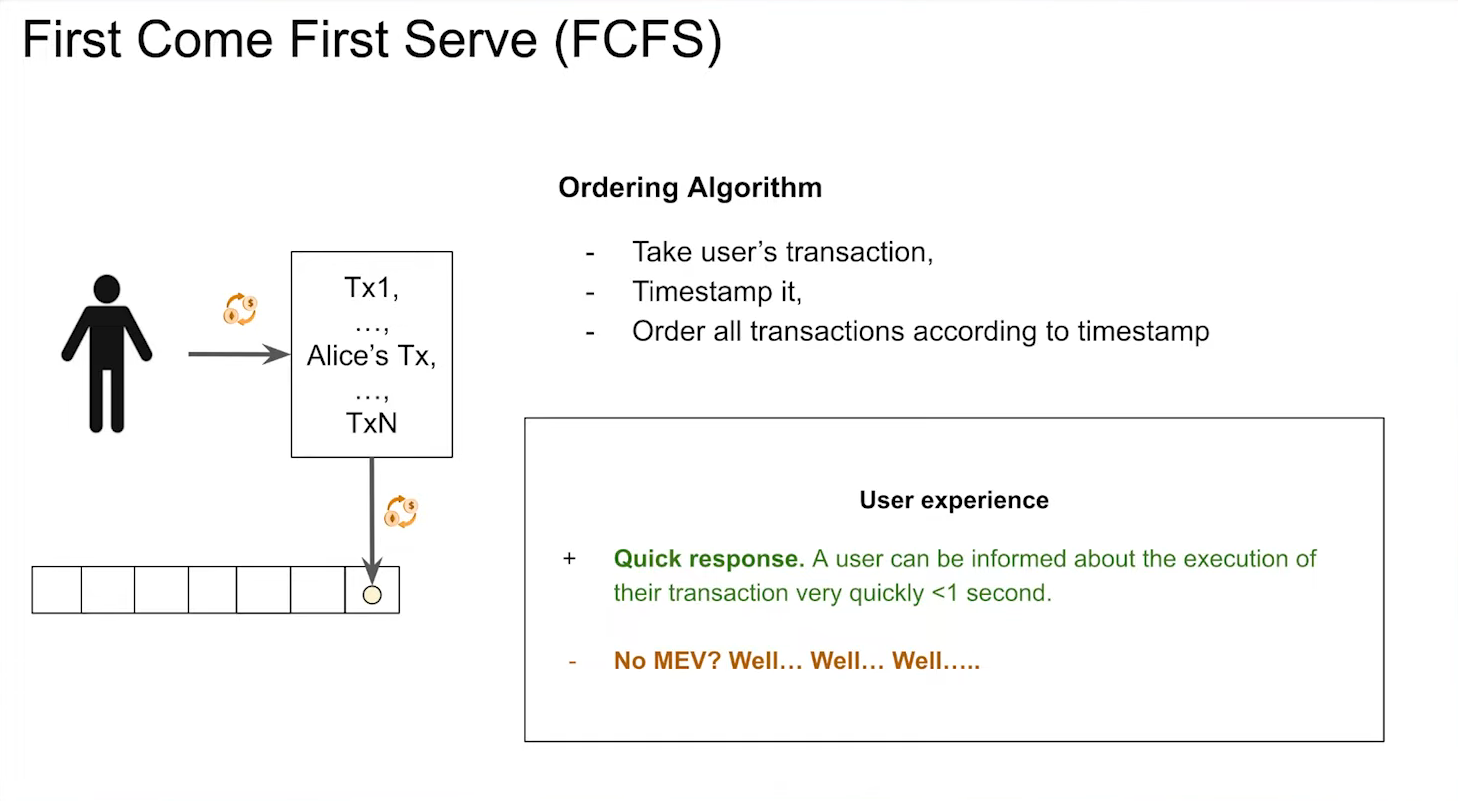

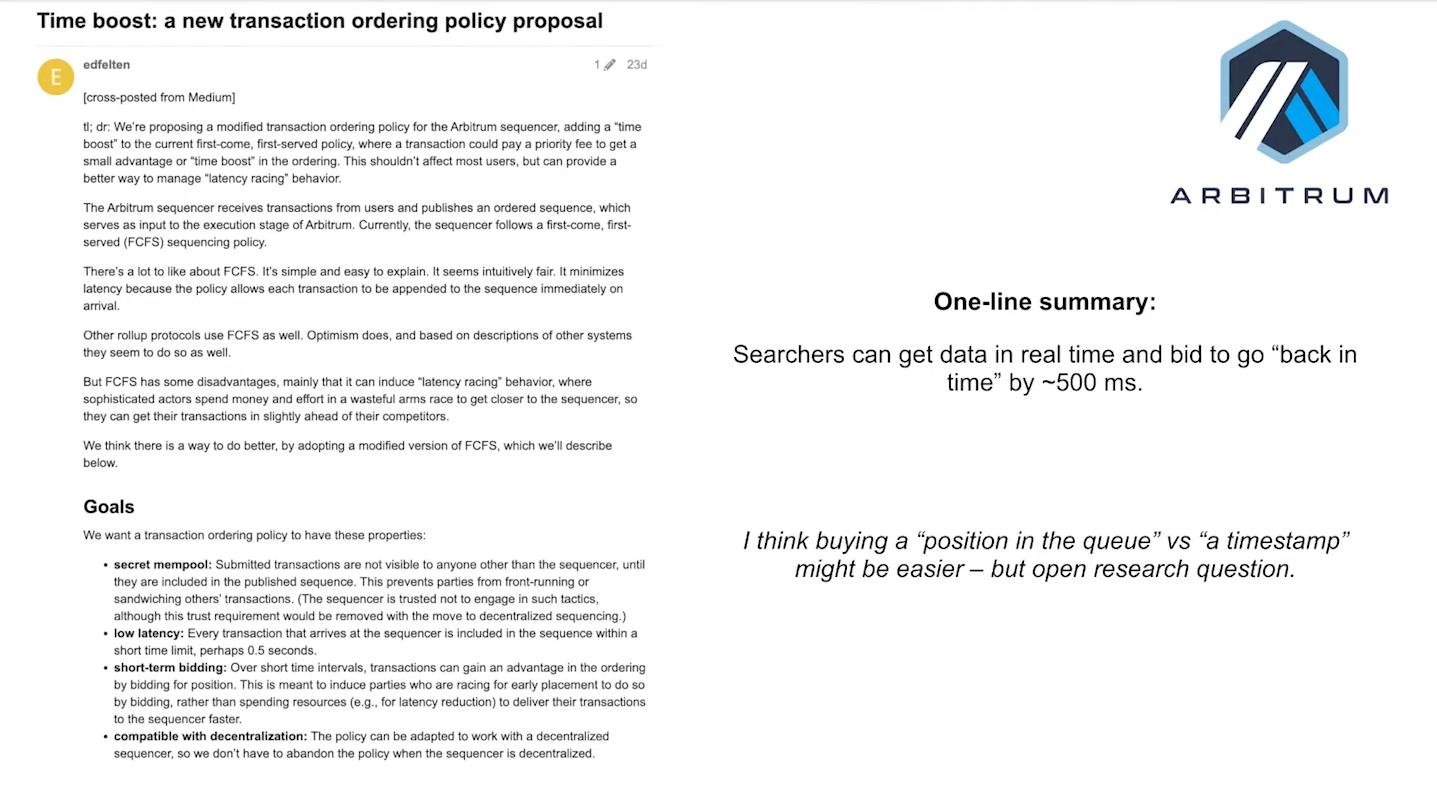

First Come First Serve "FCFS" (10:30)

FCFS seeks to prioritize user experience :

- User will send their transaction to the sequencer

- The sequencer will timestamp the transaction

- Transaction is ordered according to the timestamp

In a way, it's like transacting on Coinbase : we send our transaction to the service provider and they respond to say it's confirmed.

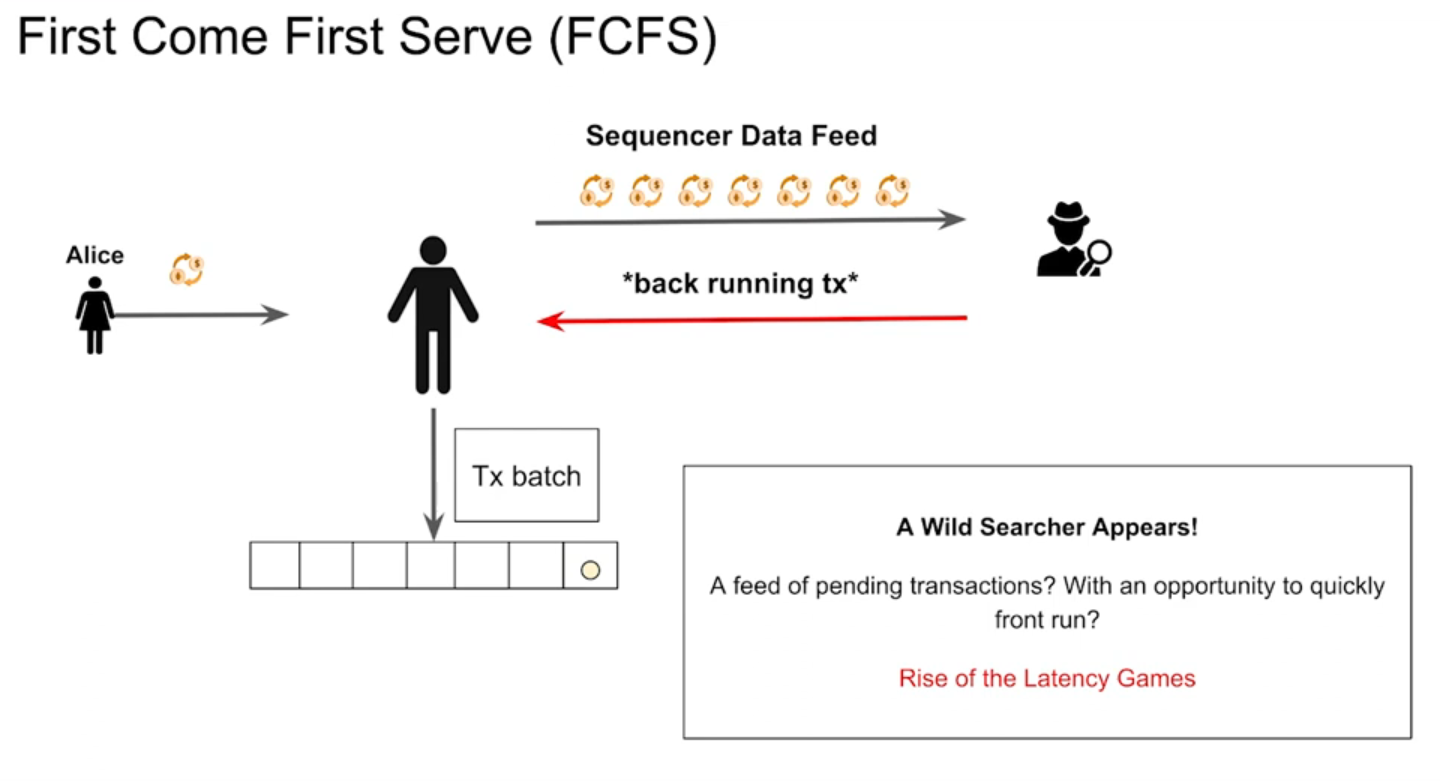

However, it would be naive to think there's no MEV here :

When a user submits their transaction, the sequencer creates a small block within 250 milliseconds and then publishes it to a feed.

Now, what happens when you have a data feed that's releasing data about transactions in real time ? Searchers lurk in the bushes

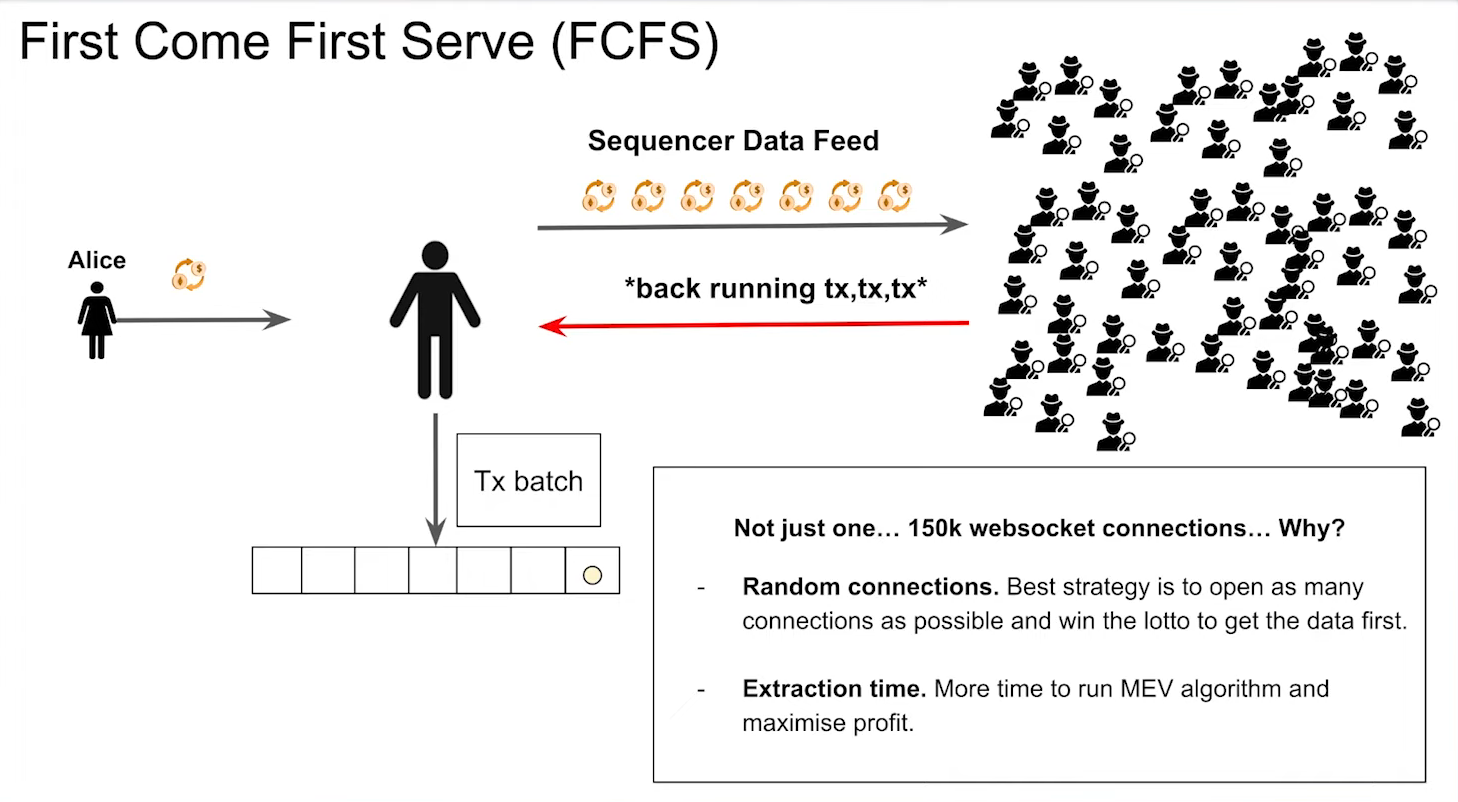

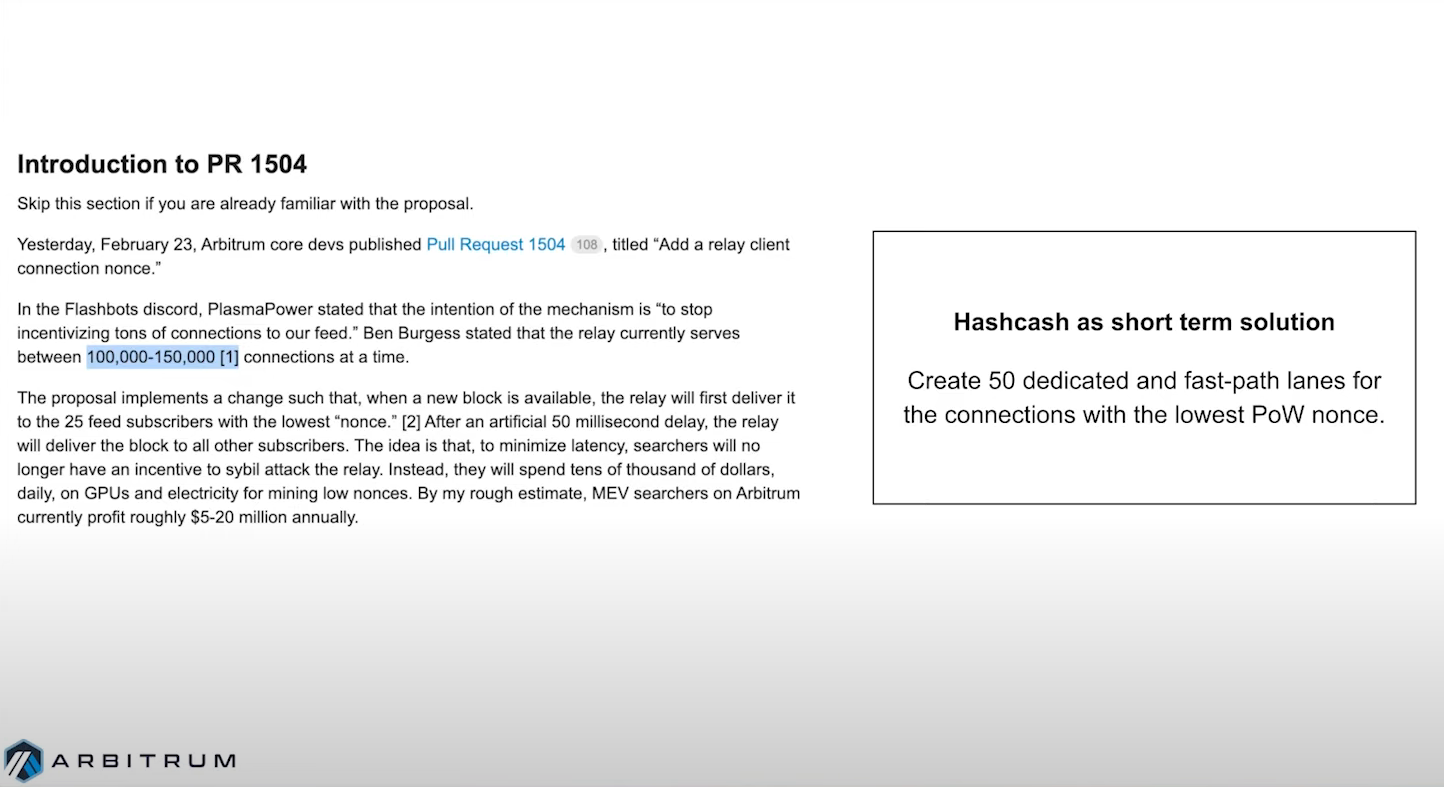

Whoever gets that transaction data first wins the MEV opportunity. This leads to latency games : you don't end up with one searcher, you end up with 150,000 WebSocket connections (as Arbitrum experienced). One solution is "hashcash", but it is only a short-term fix.
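A minimal Python sketch of the hashcash idea, assuming SHA-256 and a leading-zero-bits difficulty target (the original hashcash used SHA-1). Each stamp costs the sender roughly 2^difficulty hashes but costs the verifier a single hash, so opening thousands of connections or flooding messages becomes expensive. The challenge format, the 12-bit difficulty and the function names are hypothetical; how a sequencer would actually attach such stamps to its feed is not covered here.

```python
import hashlib
from itertools import count


def leading_zero_bits(digest: bytes) -> int:
    """Count the leading zero bits of a hash digest."""
    bits = 0
    for byte in digest:
        if byte == 0:
            bits += 8
            continue
        bits += 8 - byte.bit_length()
        break
    return bits


def _digest(challenge: bytes, nonce: int) -> bytes:
    return hashlib.sha256(challenge + nonce.to_bytes(8, "big")).digest()


def solve(challenge: bytes, difficulty: int) -> int:
    """Search for a nonce whose hash has at least `difficulty` leading zero bits."""
    for nonce in count():
        if leading_zero_bits(_digest(challenge, nonce)) >= difficulty:
            return nonce


def verify(challenge: bytes, nonce: int, difficulty: int) -> bool:
    """Checking a stamp costs one hash, whatever the difficulty."""
    return leading_zero_bits(_digest(challenge, nonce)) >= difficulty


challenge = b"feed-subscription:client-42"  # hypothetical per-connection challenge
nonce = solve(challenge, difficulty=12)     # about 2**12 hashes on average
print(verify(challenge, nonce, difficulty=12))  # True
```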

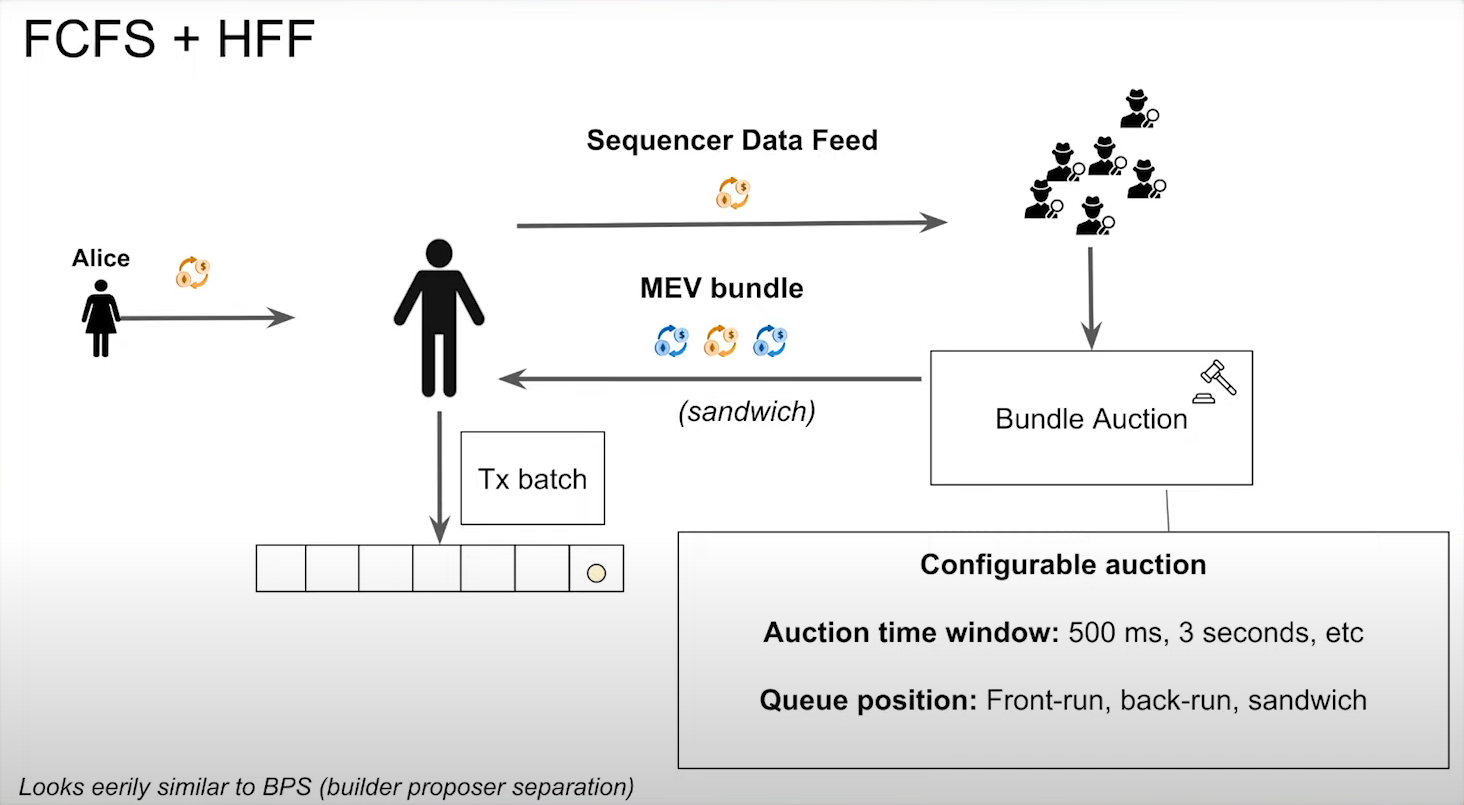

FCFS + HFF (13:00)

A more interesting solution according to Patrick is combining First Come First Serve and Highest Fee First :

- Users' transactions are collected by sequencers, who pass them on to searchers in small batches.

- Searchers take part in an auction : they look for extraction opportunities in the batch they received and submit their own bundle, with a payment, to the sequencer.

- The sequencer selects the bundle that offers the highest payment and confirms it.
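A minimal Python sketch of one auction round, assuming the sequencer has already collected a small batch first-come-first-serve, that each searcher bid is a full proposed ordering plus a payment, and that the sequencer's extraction policy is "back-running only" (user transactions stay first, in arrival order). `SearcherBid`, `backrun_only` and `confirm_batch` are hypothetical names; a real design would also have to handle timing, bid privacy and invalid bundles.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class SearcherBid:
    searcher: str
    bundle: List[str]  # full ordering proposed for this batch
    payment: int       # amount offered to the sequencer


def backrun_only(user_batch: List[str], bundle: List[str]) -> bool:
    """Example policy: user txs stay first and in FCFS order; searchers may only append."""
    return bundle[: len(user_batch)] == user_batch


def confirm_batch(user_batch: List[str], bids: List[SearcherBid],
                  policy: Callable[[List[str], List[str]], bool] = backrun_only) -> List[str]:
    """Pick the highest-paying bundle allowed by the policy; fall back to the plain batch."""
    allowed = [bid for bid in bids if policy(user_batch, bid.bundle)]
    if not allowed:
        return list(user_batch)
    return list(max(allowed, key=lambda bid: bid.payment).bundle)


batch = ["alice_swap", "bob_swap"]  # collected FCFS during one short window
bids = [
    SearcherBid("s1", ["alice_swap", "bob_swap", "s1_arb"], payment=30),
    SearcherBid("s2", ["s2_frontrun", "alice_swap", "bob_swap"], payment=90),  # not allowed
    SearcherBid("s3", ["alice_swap", "bob_swap", "s3_arb"], payment=45),
]
print(confirm_batch(batch, bids))  # ['alice_swap', 'bob_swap', 's3_arb']
```

Replacing `backrun_only` with a more permissive predicate is where a sequencer would decide which types of extraction it allows.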

Benefits :

- Fast confirmations for users' transactions (500 milliseconds)

- Searchers have an open market for participating in auctions, allowing them to find profitable extractions within smaller bundles.

- Sequencers can decide which types of extraction are allowed (front-running, back-running, sandwiching, or all of the above).

Everything said about FCFS + HFF should be taken with caution, because it is still an open problem today

- The "Leader selection algorithm" tells you who is permitted to produce new blocks. Blockchains that can't add new blocks aren't useful.

- The "Sybil resistance mechanism" is the consensus mechanism (PoW, PoS...) to prevent spamming

- The "Block validity function" which defines the execution rules of a block

- The "Fork choice rule" to distinguish between two otherwise valid chains

Leader selection and the fork choice rule are linked to MEV (maximum extractable value), as the leader can capture MEV via arbitrage, front-running, etc. And the fork choice rule can enable time-bandit attacks linked to MEV.

The Leader Selection Algorithm (3:00)

In a decentralized blockchain, no single leader can halt the chain on their own. This prevents any one party from destroying or controlling the chain

Leaders are generally tied to a consensus mechanism like Proof-of-Work or Proof-of-Stake. This makes it hard to manipulate who becomes the leader :

- In PoW, you have to do a bunch of work

- In PoS, various protocols have preassigned leaders

Fork Choice Rule (5:00)

It may be easier to manipulate the fork choice rule than the leader selection algorithm. Manipulating the fork choice rule could enable attacks like time-bandit attacks (miners rewrite blockchain history to steal funds allocated by smart contracts in the past)

Once an attacker has reordered the chain, they can also engage in front running, arbitrage, etc. within the blocks they now control.

The Modular World (5:30)

Overall, the leader controls the chain and can capture MEV, so preventing manipulation of leader selection and fork choice rules is critical. These may not be much of an issue in a Layer 1 context, but these rules are much more flexible in a modular context, and this is a concern.

The blockchain ecosystem is shifting to a model with multiple specialized chains and layers that interoperate. This includes shared data availability layers that provide data bandwidth (Celestia), and execution layers like rollups (Arbitrum, Optimism, zkSync, Starknet) that run transactions.

The different types of MEV (6:15)

Cross-domain aka "Horizontal" MEV refers to extractable value from arbitrage and sequencing opportunities between separate execution environments like multiple rollups.

Modular stack aka "Vertical" MEV refers to the extractable value between an execution layer like a rollup and the underlying data availability layer it relies on (this is what this talk is about)

Unlike with horizontal MEV, there are no direct arbitrage opportunities between a data layer and execution layer. This raises the question of why vertical MEV exists as an issue.

John Adler

This "law" suggests that even if MEV is addressed on one layer, it may reappear on another layer in the modular blockchain stack.

Considerations for Leader Selection Algorithms (8:30)

MEV in the rollup should stay isolated there and not bleed down to the data layer, because data-layer validators will be incentivized to extract any MEV that ends up on the data layer, which creates centralization pressure

For decentralization and modularity, it's better if MEV incentives are compartmentalized in the rollup layers above rather than aggregating on the base data layer

That said, aligning rollup and data layer incentives is an open challenge today. Rollups have their own economic incentives that may not align with the long-term interests of the data layer.

Some examples of Leader Selection Algorithms (12:15)

First Come First Serve : Participants bid to be the first to post a block blob and thus become leader. This turns leader selection into an on-chain auction, but brings several problems like plutocratic governance, MEV bleeding down to the data layer, and wasted block space since only one blob matters.

Tendermint-Style : Tendermint's leader rotation uses rollup state, avoiding dependence on the data layer. In addition, Tendermint is fork-free. However, since you may have to wait for data layer blocks, achieving good liveness and responsiveness is hard

Highest-Priority First : This idea requires the data layer to support priority fields that participants can manipulate. But it essentially becomes first come first serve with the auction happening off-chain, which lacks transparency.

Based Rollups : they rely on Layer 1 consensus and MEV boost to improve efficiency. However, MEV boost introduces trust assumptions and Layer 1 still acts as an auction, leading MEV to bleed down.

Further reading (16:45)

Many of the ideas and proposals around rollup leader selection and vertical MEV originated with the Solana team (Connor, Rutul, Evan, Callum, Gabriel)

This is an active area of research with substantial prior work and ideas already published.

The goal of this talk is to discuss a specific technique to mitigate MEV when transferring assets cross-chain via a bridge

How it works

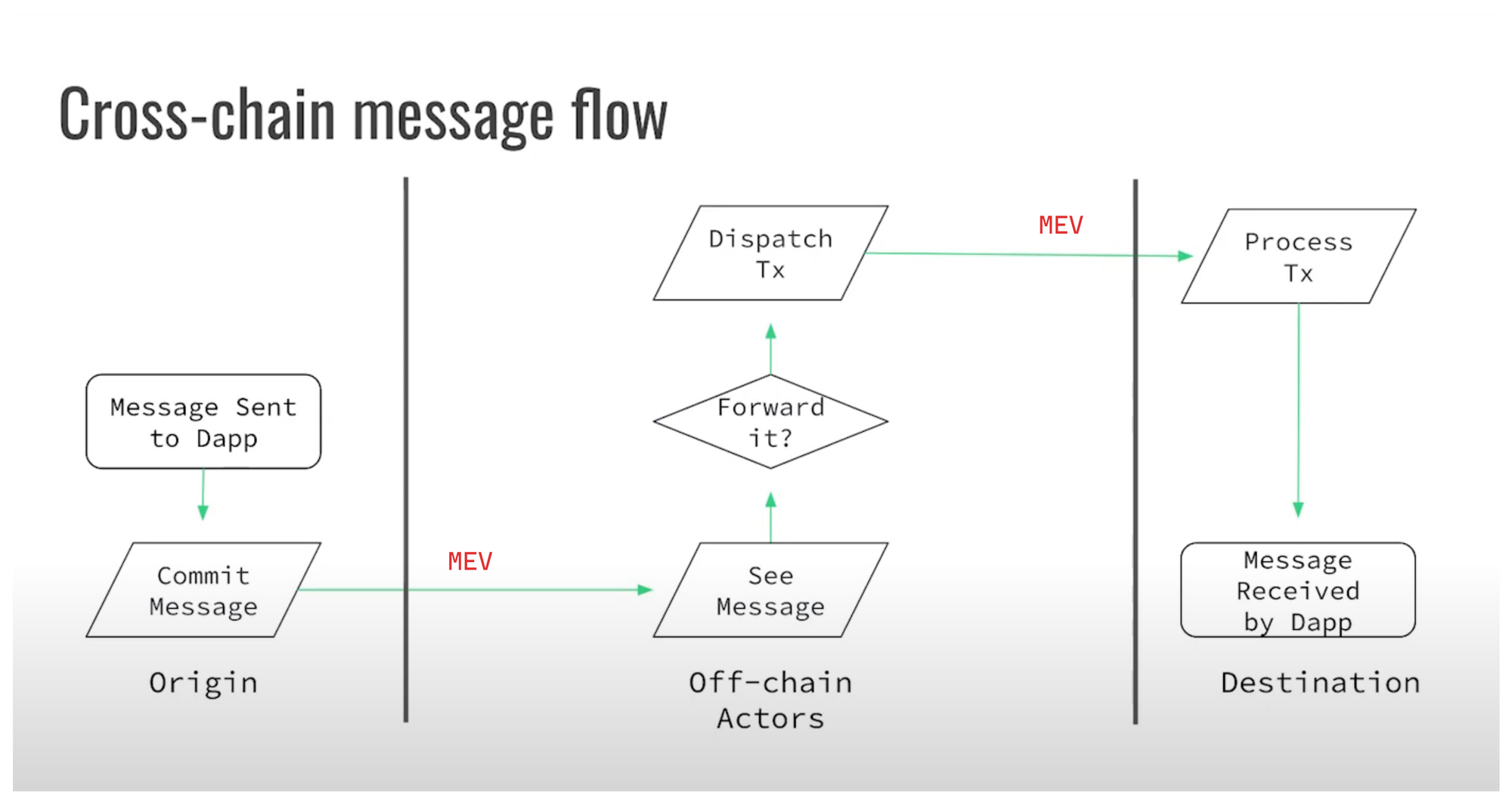

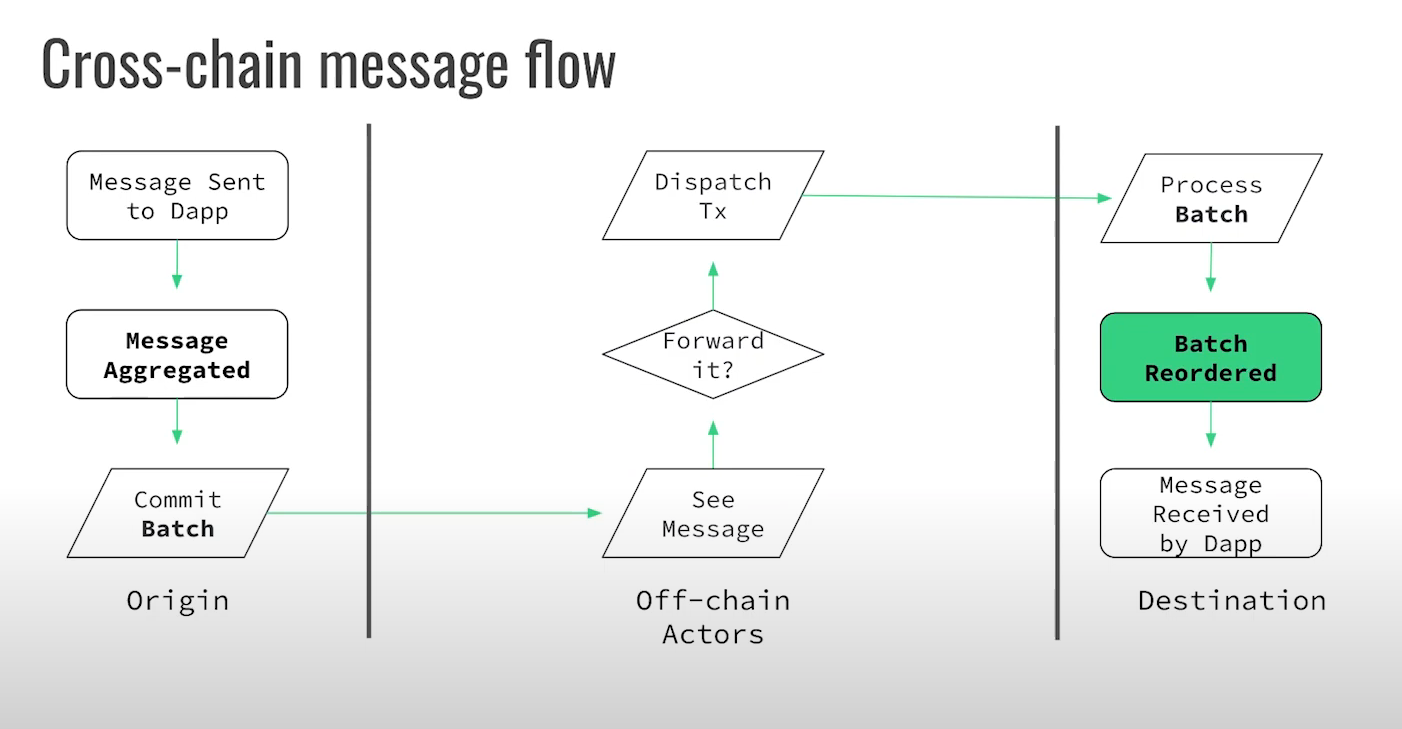

Cross-chain message flow (0:15)

- When a user wants to transfer assets via the bridge, Chain A creates a message representing the transfer

- Off-chain actors monitor Chain A and pick up this transfer message

- The off-chain actors deliver the message to Chain B

- Chain B validates the message came from Chain A and credits the receiving account

Creating the message on Chain A and validating it on Chain B are atomic on-chain operations. However, the off-chain steps of picking up and delivering the message add delays ranging from tens of seconds to minutes. These delays in the bridging process create significant MEV opportunities

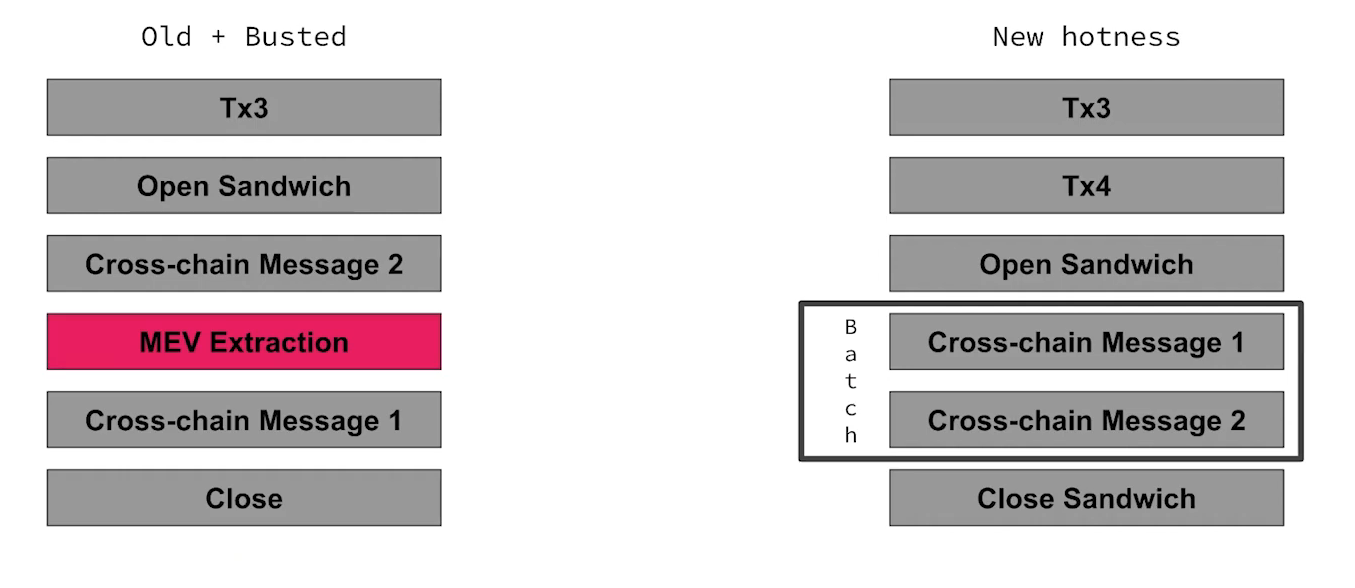

MEV is early access to information (2:45)

Everyone gets 10 minutes of warning on what this message does, what it will do when it hits the remote chain, and how to front-run it most effectively.

MEV pushes the ordering of a blockchain towards the maximally extractable ordering. What we want is to mitigate the impact that reordering and sandwiching have on cross-chain messages, because cross-chain messages are more vulnerable than the average transaction.

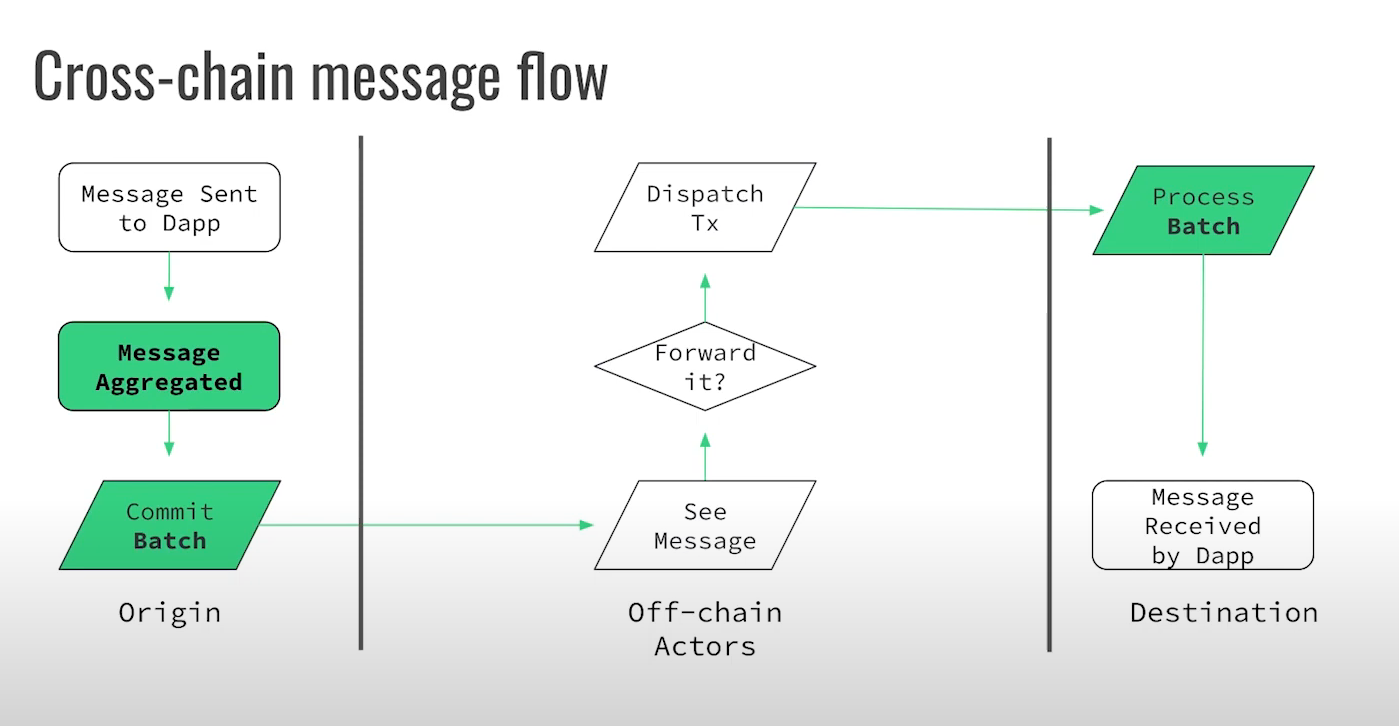

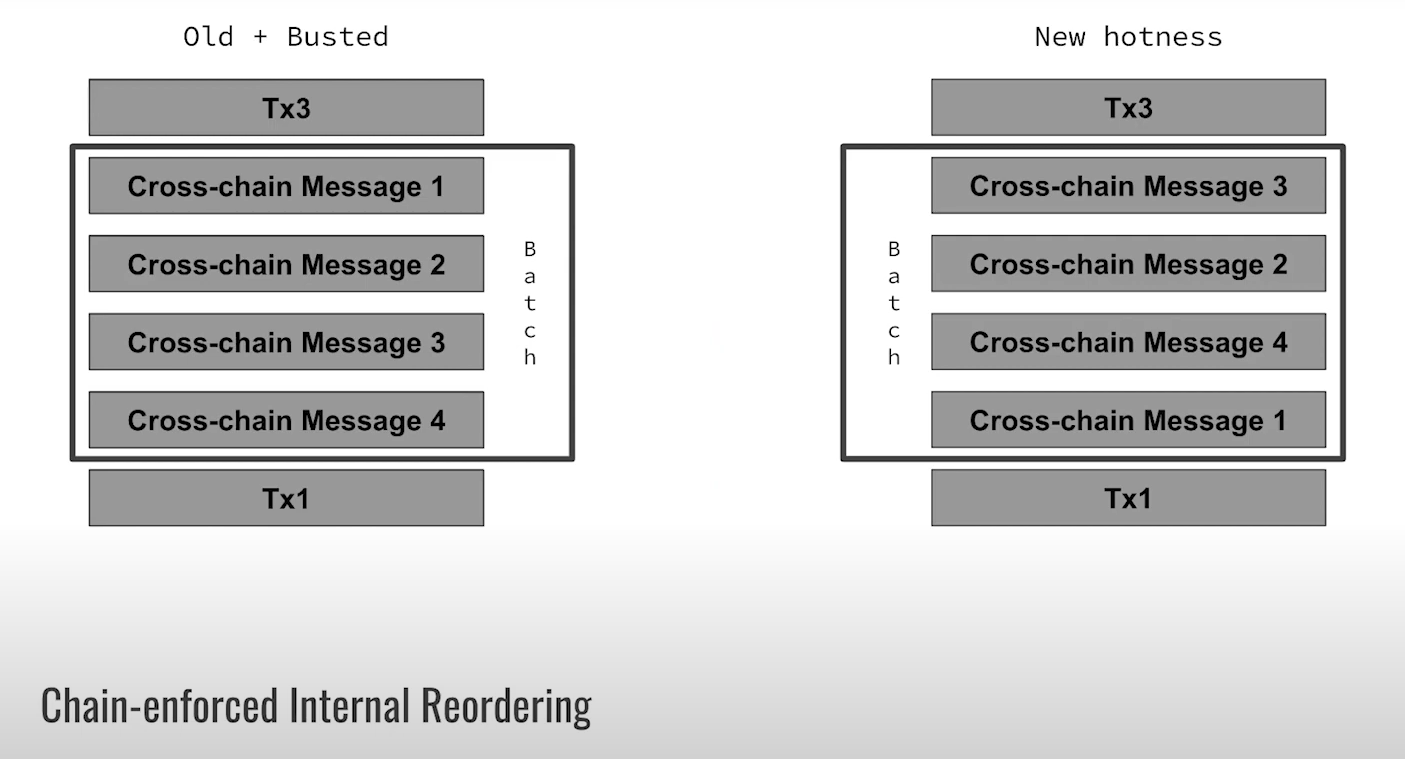

Mitigating Reordering & Sandwiching

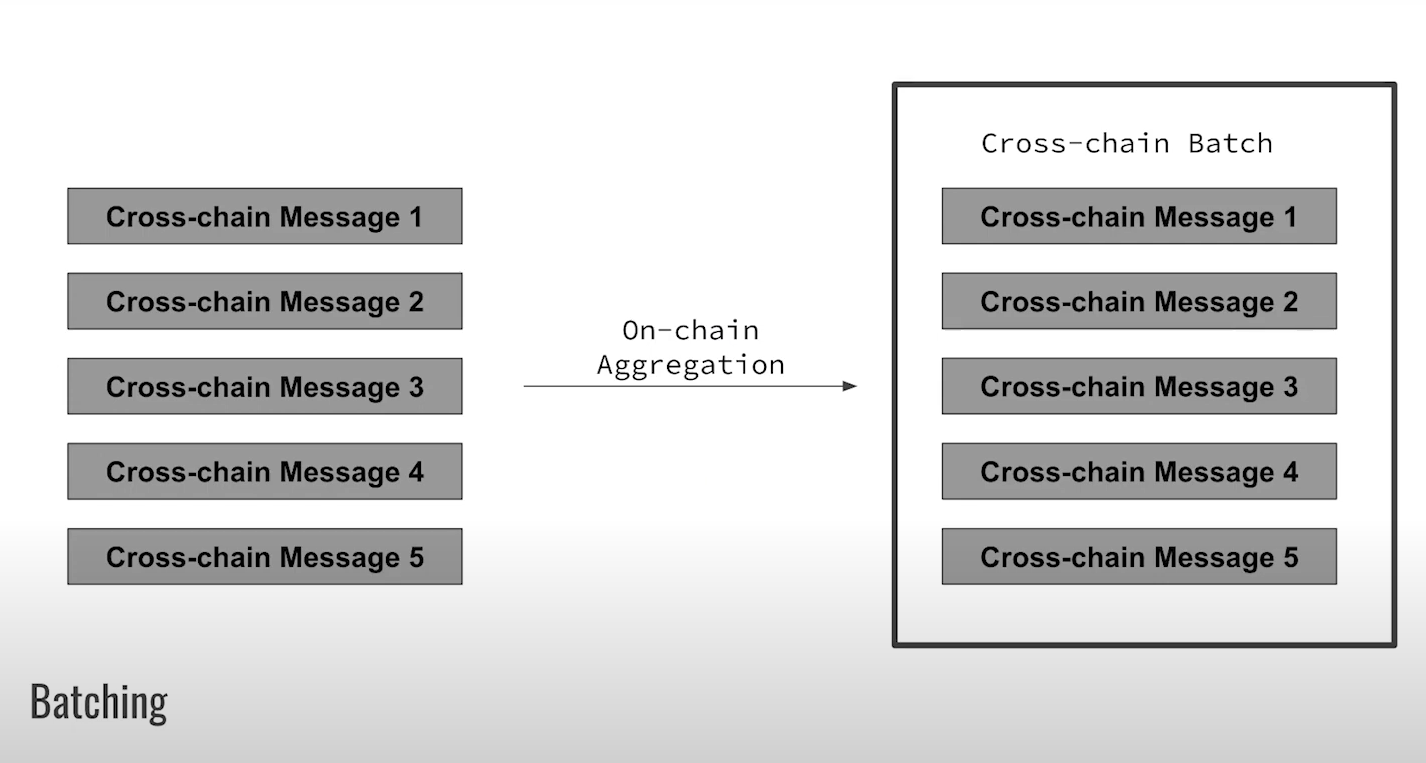

Batch messages (4:15)

- Instead of immediately dispatching cross-chain messages, accumulate them into batches. Specifically, batch messages that relate to the same smart contract or application.

- Instead of making each message a separate commitment, commit to the entire batch

- Dispatch the batch and process it as a unit on receiving chain
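A minimal Python sketch of batching, assuming messages are plain dictionaries and that a SHA-256 hash of the batch's canonical JSON encoding stands in for the commitment (real bridges typically commit with Merkle roots or similar structures). `Outbox`, `commit` and `process_batch` are hypothetical names. Because the commitment covers the batch's order, the destination chain rejects any reordered version.

```python
import hashlib
import json
from typing import Dict, List, Tuple


def commit(batch: List[dict]) -> str:
    """A single commitment to the whole ordered batch (hash of a canonical encoding)."""
    encoded = json.dumps(batch, sort_keys=True, separators=(",", ":")).encode()
    return hashlib.sha256(encoded).hexdigest()


class Outbox:
    """Source chain: accumulate messages per destination app instead of sending each one."""

    def __init__(self) -> None:
        self.pending: Dict[str, List[dict]] = {}

    def send(self, app: str, message: dict) -> None:
        self.pending.setdefault(app, []).append(message)

    def dispatch(self, app: str) -> Tuple[List[dict], str]:
        batch = self.pending.pop(app, [])
        return batch, commit(batch)


def process_batch(batch: List[dict], commitment: str) -> List[dict]:
    """Destination chain: accept the batch only as a unit and only in its committed order."""
    if commit(batch) != commitment:
        raise ValueError("batch does not match its commitment")
    return batch  # the messages would now be executed back to back


outbox = Outbox()
outbox.send("dex", {"from": "alice", "amount": 10})
outbox.send("dex", {"from": "bob", "amount": 5})
batch, root = outbox.dispatch("dex")
process_batch(batch, root)  # accepted; process_batch(batch[::-1], root) would raise
```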

By keeping messages in a batch, their relative order is fixed. This prevents reordering the messages within the batch to exploit MEV, and we remove ability to sandwich transactions to extract MEV

This usually introduces a small amount of latency, but in exchange the messages are much harder to extract from

Reorder batches upon receipt (6:15)

When a batch reaches the destination chain, we can reorder it on-chain, for example with a deterministic shuffle based on the receiving chain's block hash.

This technique isn't fully unpredictable, but it still raises costs of manipulating batch order. Searchers could try different shuffles until they get an exploitable order, but that takes work. Speaking of which, we already have a word for that : Proof-of-Work

Optimal extraction is now a PoW problem

The searcher now has to repeatedly compute the shuffle, try to extract from the resulting order, and repeat this many times to find the best shuffle.

Manipulating the randomness is equivalent to doing the PoW. It increases the cost of extraction.
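A minimal Python sketch of both sides, assuming the shuffle is a Fisher-Yates shuffle whose randomness is derived from the receiving chain's block hash via SHA-256, and assuming (for the grinding part) that the searcher can influence which block hash ends up being used, for example by being or bribing the block producer. `shuffle_batch` and `grind` are hypothetical names. Getting one chosen message into first position takes about `len(batch)` attempts on average; forcing one specific full ordering would take on the order of `len(batch)!` attempts.

```python
import hashlib
from typing import List, Optional


def shuffle_batch(batch: List[str], seed: bytes) -> List[str]:
    """Deterministic Fisher-Yates shuffle; randomness is derived from `seed` (a block hash)."""
    order = list(batch)
    for i in range(len(order) - 1, 0, -1):
        digest = hashlib.sha256(seed + i.to_bytes(4, "big")).digest()
        j = int.from_bytes(digest, "big") % (i + 1)  # modulo bias is negligible here
        order[i], order[j] = order[j], order[i]
    return order


def grind(batch: List[str], target: str, max_attempts: int) -> Optional[int]:
    """Searcher's side: try candidate block hashes until `target` lands first.

    Returns the number of hashes tried, or None if no candidate worked.
    """
    for attempt in range(1, max_attempts + 1):
        candidate_hash = hashlib.sha256(b"candidate" + attempt.to_bytes(8, "big")).digest()
        if shuffle_batch(batch, candidate_hash)[0] == target:
            return attempt
    return None


batch = [f"msg{i}" for i in range(8)]
print(shuffle_batch(batch, bytes(32)))           # same order for everyone who sees this hash
print(grind(batch, "msg3", max_attempts=1_000))  # on average about len(batch) attempts
```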

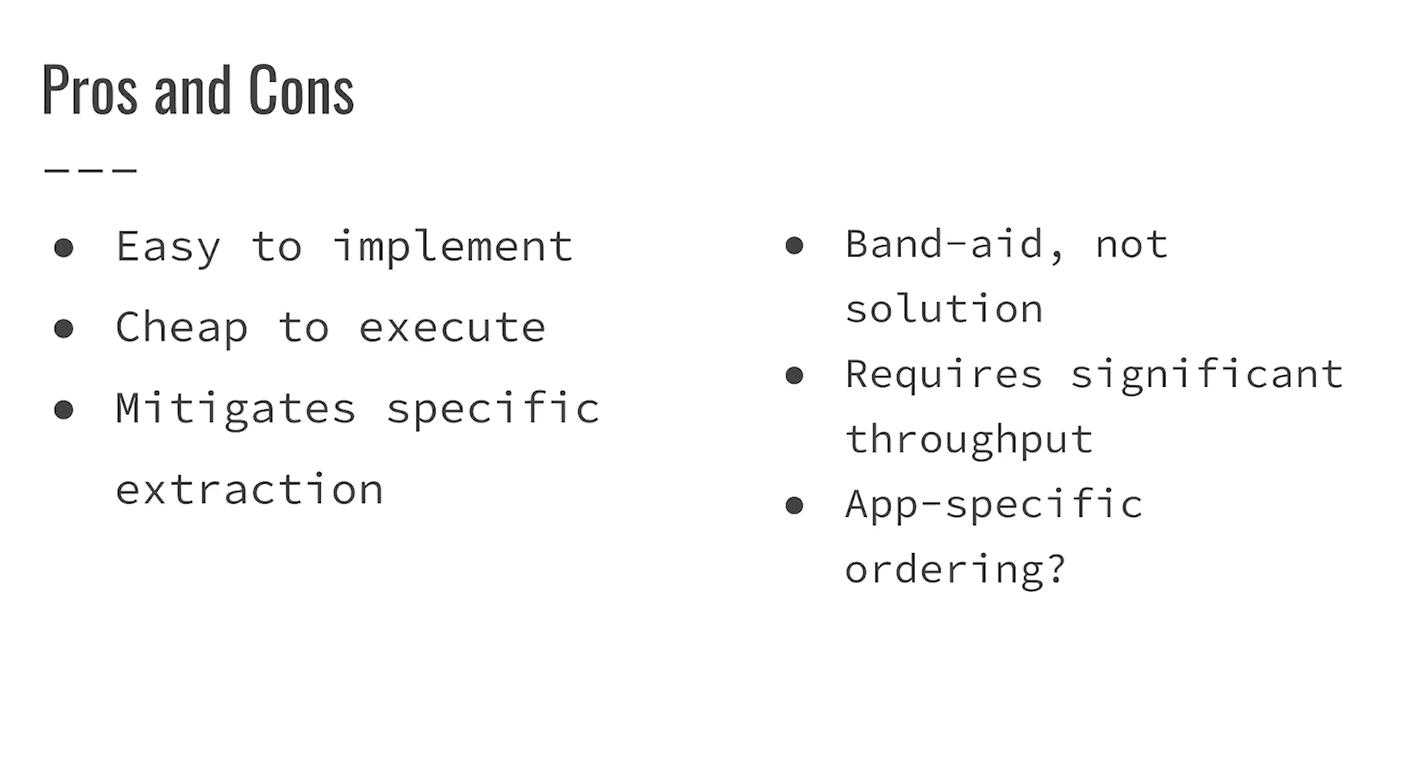

Pros and Cons (8:15)

In other words, we're increasing the costs. But this is only a mitigation, not a fix.

This only works if a batch contains a significant number of messages touching the same state, so that shuffling changes their outcome. Furthermore, applications often want messages to be received in the order they were dispatched, and shuffling does not preserve that property

Things we couldn't get into (9:30)

Cross-chain MEV is mostly statistical, as it relies on patterns across many txs over time

MEV is the cosmic background radiation of cross-chain : it goes from everywhere to everywhere all at once.

Discussion with Jon Charbonneau (10:30)

There was a debate about whether rollups are defined by their bridge to Layer 1. According to James, a rollup includes a bridge by definition, because it needs one to be linked to Layer 1

When we think about it, rollups stand out because of their inherent bridge. This sets them apart from other rollup types, like sovereign rollups, which don't have a native bridge. Rollups are a subclass of sovereign rollups with an added bridge (the terminology evolved backwards, so it's a bit confusing)

The contested question is whether the bridge or the nodes determine the correct state of the rollup. James thinks that the nodes decide the rollup state, not the bridge :

- Nodes can force bridge to accept anything, but not vice versa

- Nodes decide rollup state, bridge can't force invalid blocks on nodes

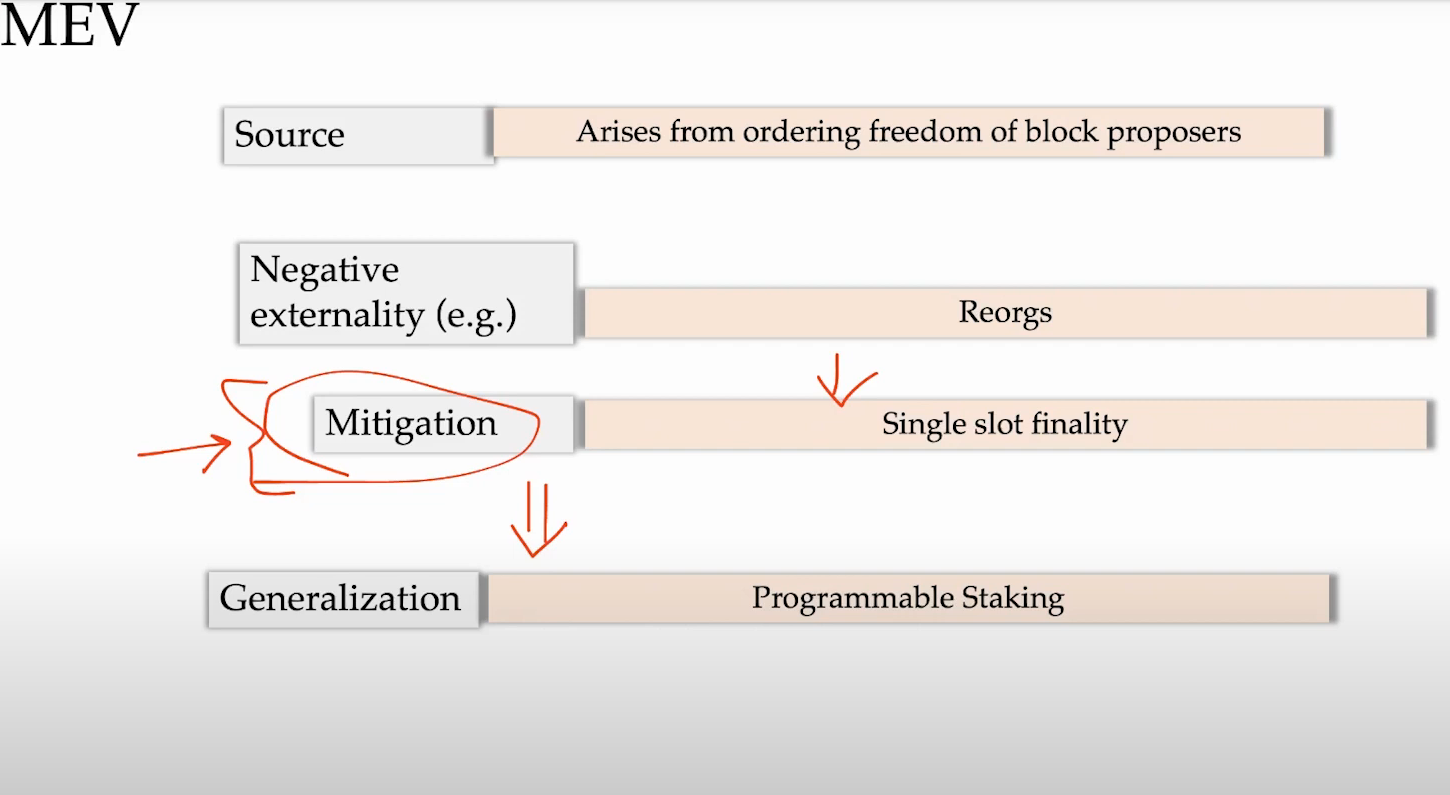

What is MEV ? (1:00)

MEV arises from the freedom of block proposers to order transactions in a way that benefits them. This freedom exists despite constraints from the consensus protocol.

This freedom gives rise to markets as well as negative externalities. Markets emerge around providing MEV services and paying for transaction ordering. However, practices like frontrunning are negative externalities - they impose costs on users and degrade the fairness of the system.

In some systems, value is easier to extract than in others :

- In PoW, miners can more freely reorder blocks to maximize profits

- In PoS, factors like staked deposits and slashing deter such practices. Stakers risk losing their deposits if they deviate from consensus rules.

- Single-slot finality, as planned for Ethereum, would help mitigate MEV by finalizing blocks quickly

Programmable staking/slashing could allow proposers to commit to more anti-MEV rules. New staking and slashing designs therefore enable interesting new mechanisms to manage MEV, and restaking can be useful in that respect

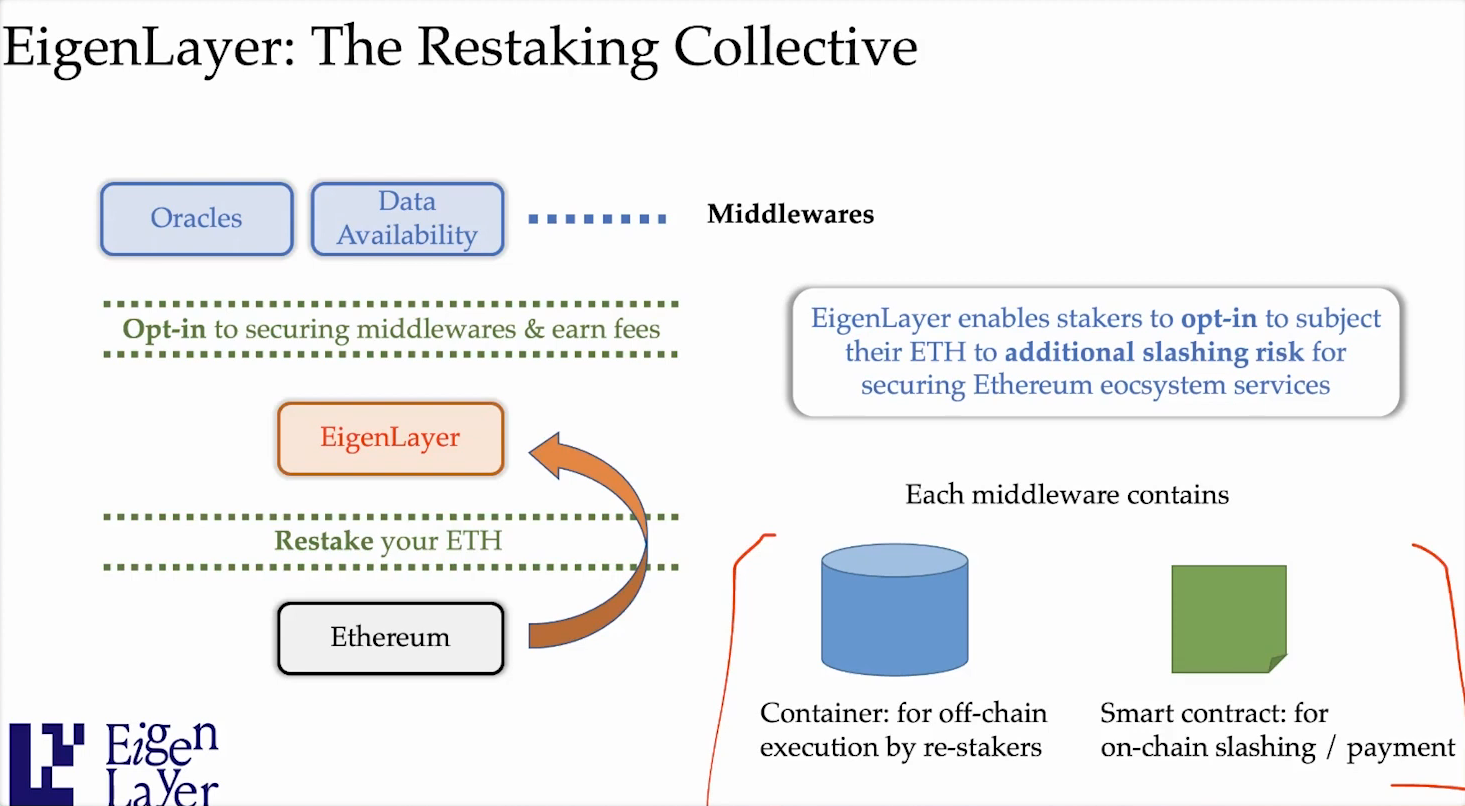

What is "Restaking" (3:30)

Restaking allows Ethereum stakers to make additional commitments with their stake, and this was introduced by EigenLayer, which is a restaking protocol that enables stakers to set withdrawal credentials and opt into new commitments :

- Running middlewares - Stakers can commit to running specific middleware protocols that likely have their own incentives and slashing conditions.

- Transaction ordering preferences - Stakers can commit to following certain transaction ordering schemes, for example anti-MEV schemes that limit extracting value.

- Securing new services - Beyond just securing the Ethereum base layer, stakers can commit stake to secure other auxiliary services/blockchains

It's called "restaking" because stakers are reusing their Ethereum stake for additional commitments, not just securing the base layer.

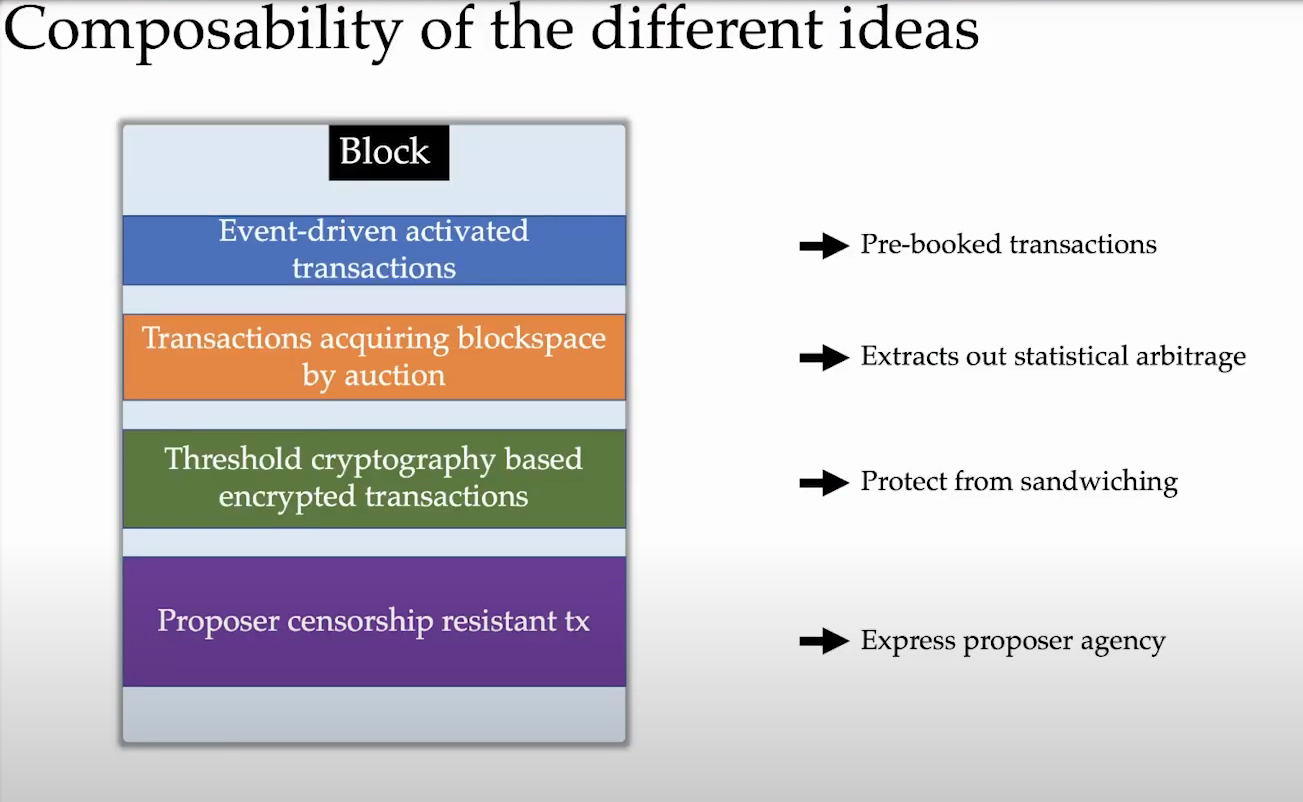

Use cases

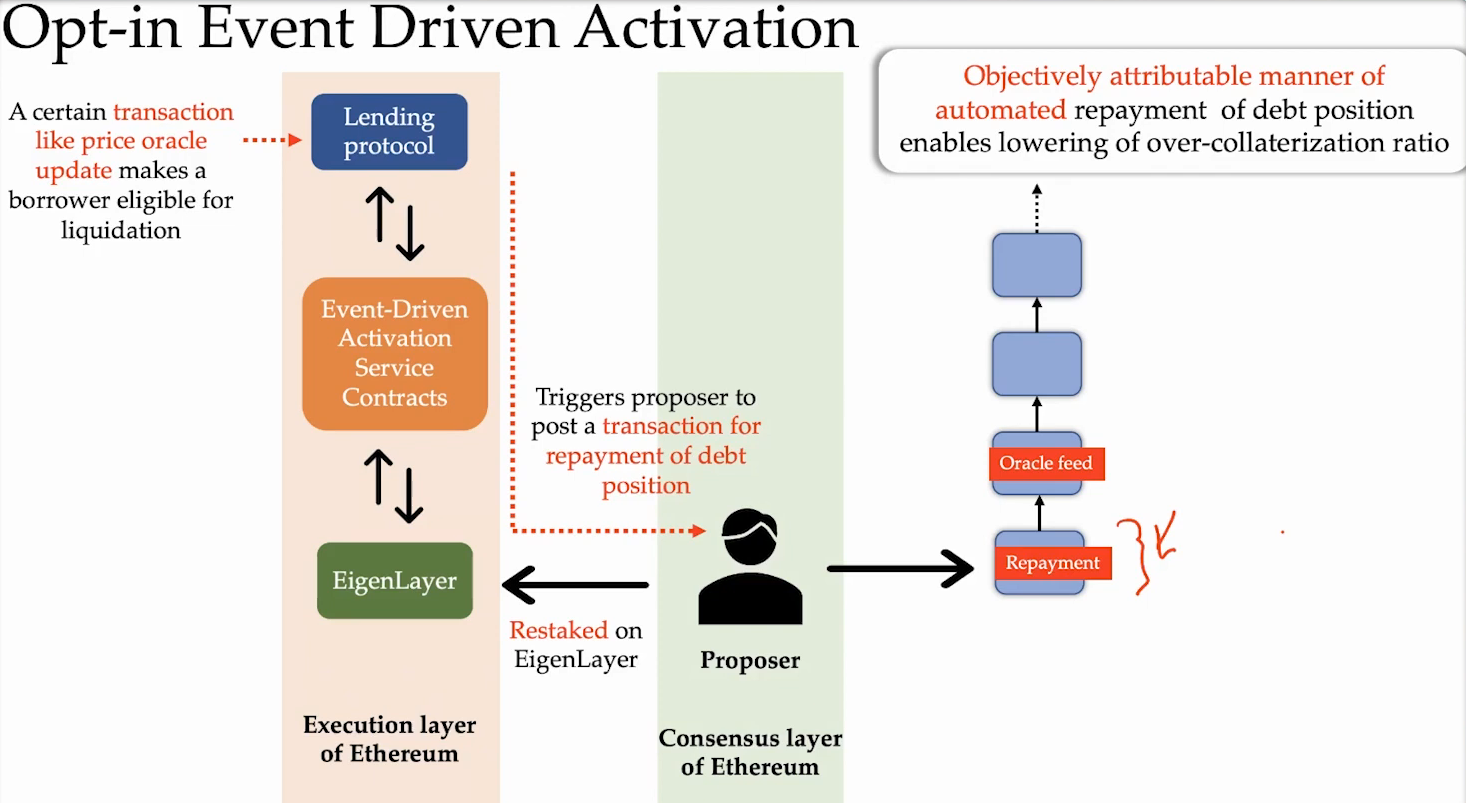

Event Driven Activation (5:30)

Some actions, like liquidations in a lending protocol or NFT mints, are triggered by events that occur on the Ethereum blockchain.

These event-driven actions currently happen off-chain by various services like Gelato, Keepers, and Chainlink. They monitor the chain, detect condition changes, and try to broadcast transactions in response. However, there is no direct linkage between these monitoring services and the Ethereum block proposers who could include the desired transactions.

With Eigenlayer, stakers committing to event services can be obligated to include transactions responding to events. If the event happens in the Ethereum state, the staker must include the associated transaction or face slashing
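The talk does not spell out the slashing condition, so here is a minimal Python sketch under stated assumptions: the event is identified by the block whose state triggered it, committed stakers have a fixed window of blocks to include the response transaction, and a committed proposer who proposes inside that window without including it (before anyone else has) is slashable. `Block`, `EventCommitment`, `slashable` and the window length are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Set, Tuple


@dataclass(frozen=True)
class Block:
    number: int
    proposer: str
    txs: Tuple[str, ...]


@dataclass(frozen=True)
class EventCommitment:
    event_block: int  # block whose state triggered the event (e.g. a liquidatable position)
    response_tx: str  # transaction the committed stakers promised to include
    window: int       # hypothetical number of blocks allowed for inclusion


def slashable(commitment: EventCommitment, chain: List[Block], committed: Set[str]) -> List[str]:
    """Committed proposers who proposed inside the window but skipped the response tx."""
    offenders = []
    start, end = commitment.event_block, commitment.event_block + commitment.window
    for block in sorted(chain, key=lambda b: b.number):
        if not (start < block.number <= end):
            continue
        if commitment.response_tx in block.txs:
            break  # obligation fulfilled; later proposers owe nothing
        if block.proposer in committed:
            offenders.append(block.proposer)
    return offenders


chain = [
    Block(101, "v1", ("transfer",)),        # v1 is committed but skips the response
    Block(102, "v3", ()),                   # v3 never opted in
    Block(103, "v2", ("liquidate_0x42",)),  # v2 includes it
]
print(slashable(EventCommitment(100, "liquidate_0x42", window=3), chain, {"v1", "v2"}))  # ['v1']
```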

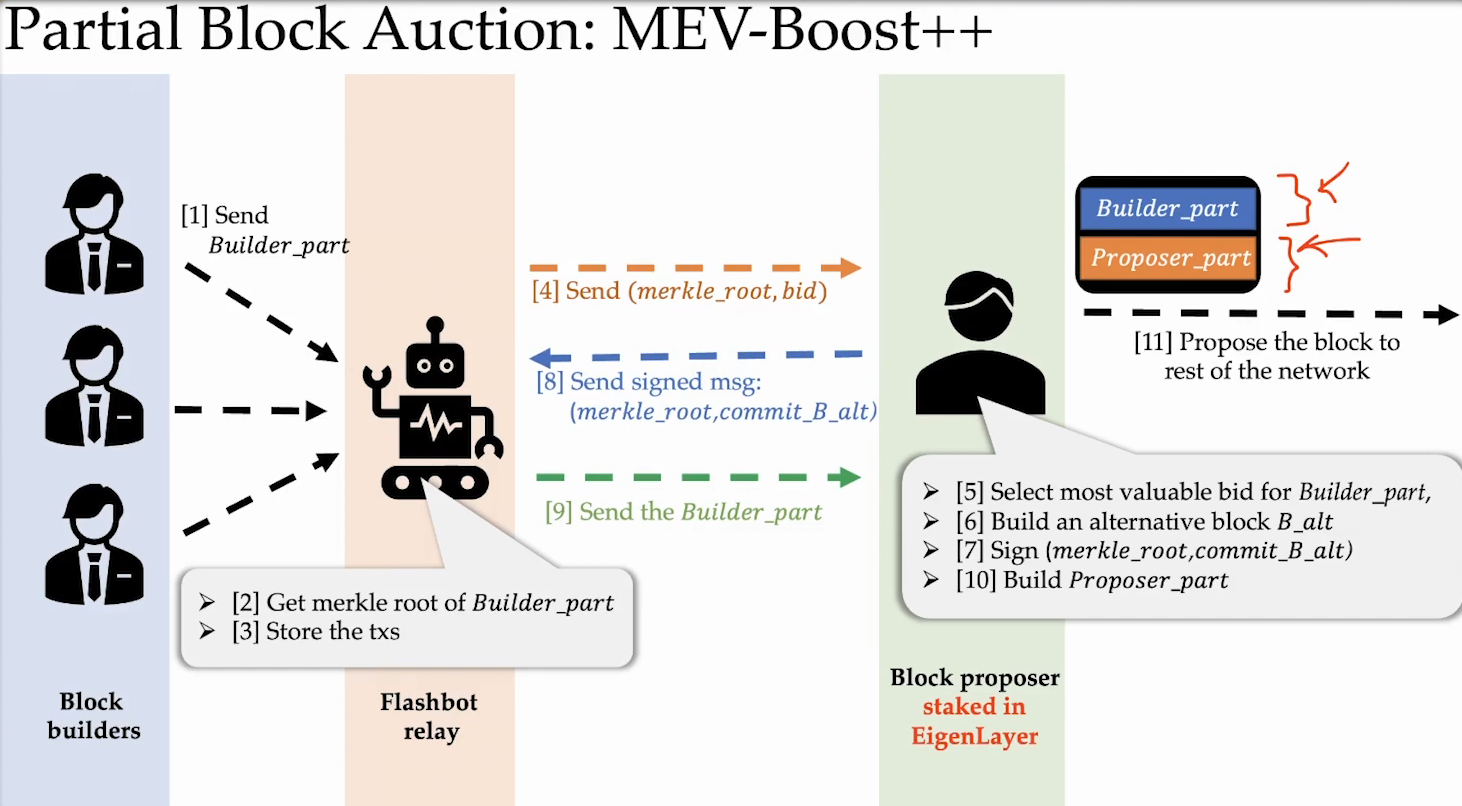

Partial Block Auctions (8:30)

Partial block auctions allow block builders to purchase only a portion of a block from stakers, rather than the entire block like in current MEV Boost.

Currently, the entire block is sold to prevent stakers from modifying or censoring the purchased contents, since there is no way for them to cryptoeconomically commit to including those contents.

With Eigenlayer, stakers can commit to including a "builder portion" as a fixed prefix of the block, while retaining freedom to include any transactions in the remainder. This lets stakers sell a chunk of MEV to builders but still include some of their own transactions :

- Stakers can express more agency by including chosen transactions.

- Retains decentralization of block building across stakers.

- Allows stakers to capture some MEV value from the block
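A minimal Python sketch of the commitment check, assuming transactions are reduced to (id, gas) pairs, that the builder portion must appear unmodified at the start of the block, and that the staker greedily fills the remaining gas with its own transactions. `build_block`, `honors_builder_portion` and the gas figures are hypothetical.

```python
from typing import List, Tuple

Tx = Tuple[str, int]  # (transaction id, gas used)


def honors_builder_portion(block: List[Tx], builder_portion: List[Tx]) -> bool:
    """The purchased builder portion must appear, unmodified, at the start of the block."""
    return block[: len(builder_portion)] == builder_portion


def build_block(builder_portion: List[Tx], own_txs: List[Tx], gas_limit: int) -> List[Tx]:
    """Staker side: keep the builder portion first, then fill the remaining gas freely."""
    block = list(builder_portion)
    used = sum(gas for _, gas in block)
    if used > gas_limit:
        raise ValueError("builder portion exceeds the gas limit")
    for tx in own_txs:
        if used + tx[1] <= gas_limit:
            block.append(tx)
            used += tx[1]
    return block


portion = [("builder_arb", 300_000), ("builder_liq", 200_000)]
block = build_block(portion, [("my_tx", 100_000), ("big_tx", 900_000)], gas_limit=1_000_000)
print([tx_id for tx_id, _ in block])             # ['builder_arb', 'builder_liq', 'my_tx']
print(honors_builder_portion(block, portion))     # True
print(honors_builder_portion(block[1:], portion)) # False: the builder portion was altered
```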

This balanced approach aligns incentives between decentralized stakers and professionalized MEV builders.

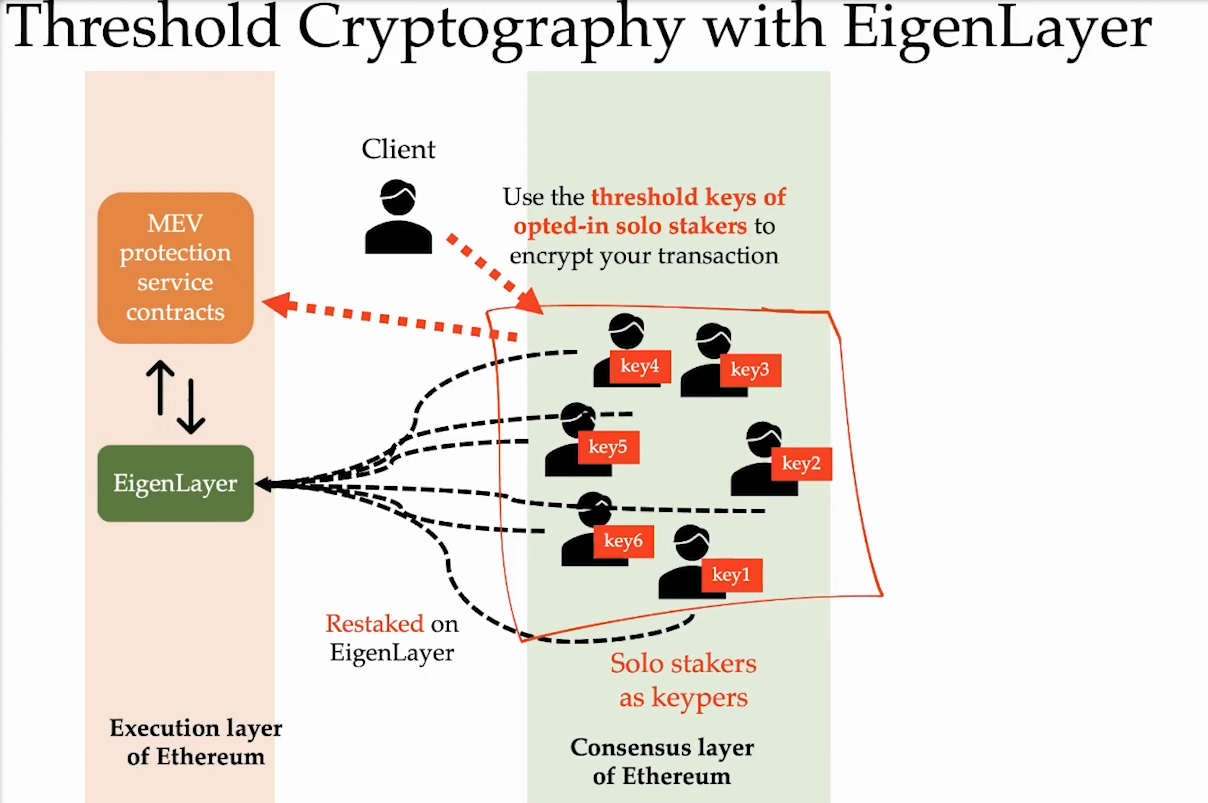

Threshold Cryptography (11:00)

We can also use threshold encryption with EigenLayer restaking :

- Transactions can be encrypted via threshold cryptography and split into shards shared among restaked nodes.

- Block proposers commit to include only the decrypted version of the encrypted transactions : once the decryption key is revealed, the proposer must include the decrypted transactions, otherwise they can be slashed.

- There is an honesty assumption that the threshold keeper group will reveal the keys. If keys aren't revealed, proposers aren't slashed, but the block space reserved for those transactions is wasted. This disincentivizes the keeper group from withholding keys.
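The talk does not say which threshold scheme the keepers would use. As one standard example, here is a minimal Python sketch of Shamir secret sharing, assuming the decryption key is an integer below a prime modulus and that any 3 of 5 keeper nodes must cooperate to recover it. The parameters and function names are hypothetical, and the sketch omits what a production scheme needs (verifiable shares, authenticated channels, the encryption itself).

```python
import secrets
from typing import List, Tuple

PRIME = 2**127 - 1  # a Mersenne prime; all arithmetic is done modulo this field size


def split(secret: int, threshold: int, num_shares: int) -> List[Tuple[int, int]]:
    """Shamir t-of-n sharing: random polynomial of degree t-1 with f(0) = secret."""
    if not 0 <= secret < PRIME:
        raise ValueError("secret out of range")
    coeffs = [secret] + [secrets.randbelow(PRIME) for _ in range(threshold - 1)]
    points = []
    for x in range(1, num_shares + 1):
        y = 0
        for c in reversed(coeffs):  # Horner's rule evaluation of f(x)
            y = (y * x + c) % PRIME
        points.append((x, y))
    return points


def recover(points: List[Tuple[int, int]]) -> int:
    """Lagrange interpolation at x = 0 from any `threshold` distinct shares."""
    secret = 0
    for i, (xi, yi) in enumerate(points):
        num, den = 1, 1
        for j, (xj, _) in enumerate(points):
            if i != j:
                num = num * (-xj) % PRIME
                den = den * (xi - xj) % PRIME
        secret = (secret + yi * num * pow(den, -1, PRIME)) % PRIME
    return secret


key = secrets.randbelow(PRIME)                    # e.g. a symmetric decryption key
shares = split(key, threshold=3, num_shares=5)    # one share per keeper node
print(recover(shares[:3]) == key, recover(shares[2:]) == key)  # True True
```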

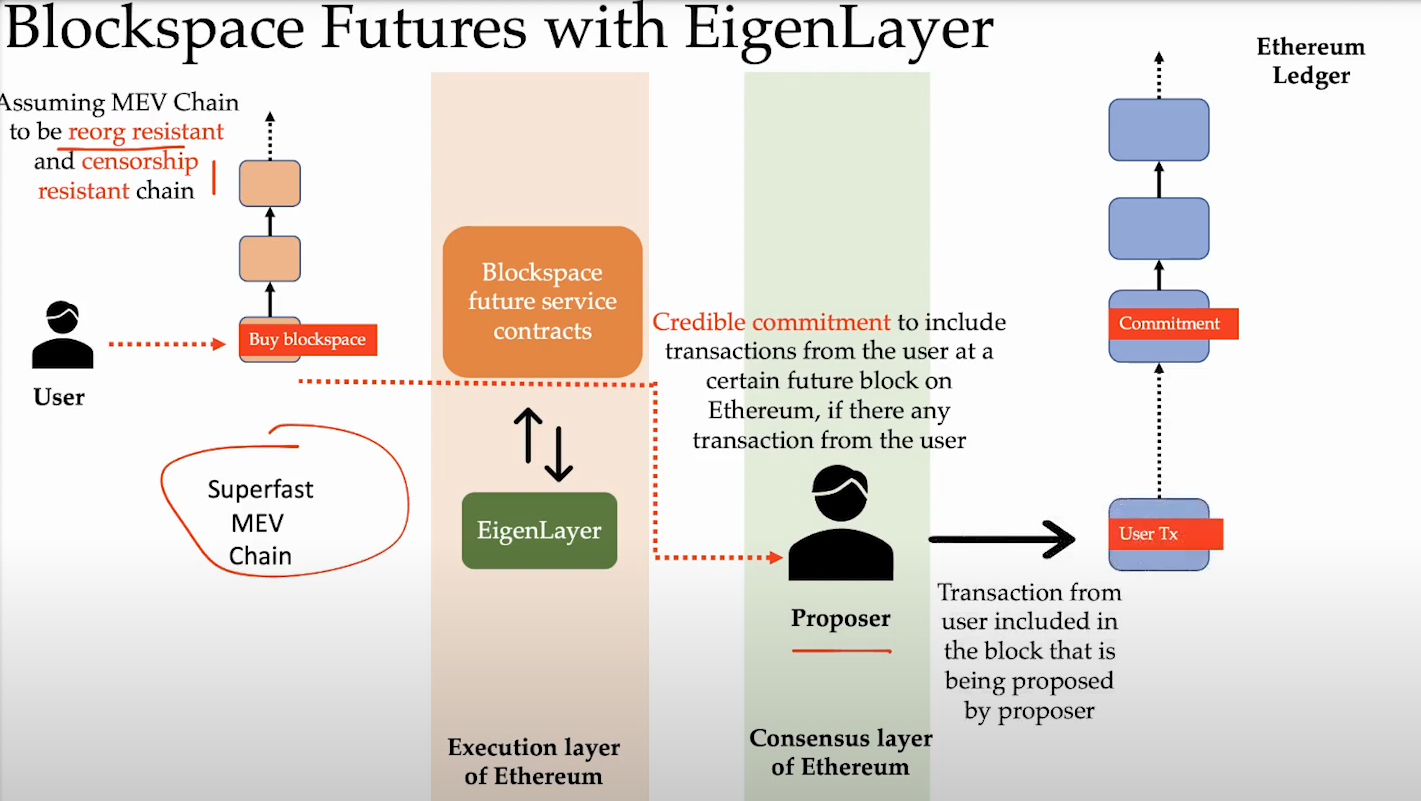

Blockspace Futures (13:15)

Blockspace Futures allow reserving future block space from validators :

- Transactions are sent to a fast sidechain for priority inclusion.

- If a transaction is included on the sidechain, Eigenlayer validators must include it in a future Ethereum block.

- Validators make commitments to honor sidechain-included transactions.

This guarantees Ethereum inclusion for priority transactions.

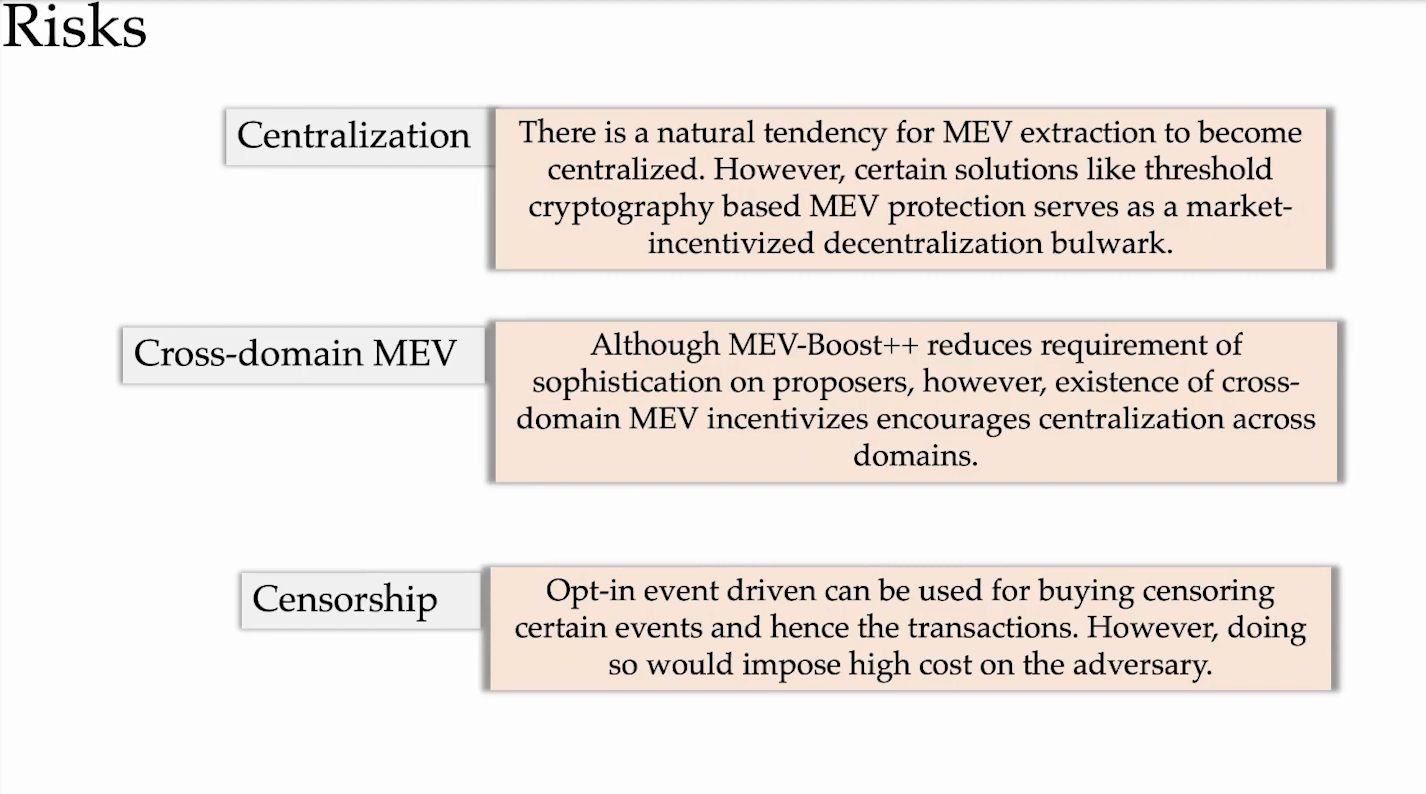

Risks (14:45)

Centralization risks (0:30)

Both restaking and liquid staking protocols currently face centralization risks, as there are a limited number of operators controlling the major protocols :

- For liquid staking, most of the market is dominated by a small number of platforms

- For restaking, there is also the risk that a few sophisticated actors come to dominate restaking services if it's profitable. Strong incentives could motivate more operators to become "sophisticated restakers", reducing heterogeneity.

Economic VS Decentralized trust (2:00)

Decentralized trust requires both sufficient financial incentives/economics and true decentralization across nodes. Relying on just one is dangerous

Some services like financial underwriting only require the economic incentives of staked assets for security. But others need decentralization for censorship resistance, secret sharing, threshold cryptography etc.

Decentralization of liquid staking providers (4:30)

There are inherent tradeoffs between decentralization of operators and scalability of the protocol. Fully decentralized providers like Rocket Pool sacrifice some scalability while more centralized providers like Lido achieve greater scale

Complete decentralization may not be feasible, but improvements can be made through technical solutions and protocol design :

- Reputation systems : Track and quantify operator reputation based on metrics like uptime, performance, stewardship of funds.

- Delayed Deposit Threshold (DDT) to limit risks from new/unproven operators

- Staking Routers : Funnel staked funds through a decentralized routing protocol to assign operators (Lido)

- Distributed Validator technology (DVT) : spread out the validation work across different nodes.

The Lido staking router model may represent the end goal for achieving both sufficient decentralization of operators while retaining scalability of the protocol.

Modular ecosystems don't help (9:00)

We're already moving to this kind of extremely complex ecosystem of chains, and we're inevitably going to have to solve these allocation problems, whichever participant we are

Based on that, Tarun thinks that what collateralization looks like will change over time, especially if many Lido node operators also restake to provide sequencing services for particular rollups

Sequencing transactions (10:45)

Basic sequencing roles like transaction ordering and data availability can be designed to be stateless, simply ordering transactions into blocks. However, the high bandwidth demands seen by early sequencers raise concerns about whether their infrastructure needs differ substantially from those of a high-throughput chain like Solana.

The lack of MEV auctions on shared sequencers so far exacerbates bandwidth issues by incentivizing dumping of transactions.

Overall, the relationship between staking value flows and participation incentives in different protocol roles deeply affects centralization risks. Misalignments can lead to centralization.

Rewards (15:00)

Centralization of stakes towards restaking protocols can occur even if the restaking rewards are only slightly higher than Ethereum staking rewards, as we've seen with adoption of MEV boost. The yields don't necessarily have to be very high.

Actively incentivizing decentralized nodes and committees with higher rewards is an option to counter centralization pulls, but algorithmically identifying decentralization is challenging :

- Decentralization oracles can help steer node participants towards services with specific decentralization needs

- Secret leader election protocols can help select decentralized nodes anonymously, but there needs to be a baseline of decentralization first : the stake distribution needs to be uniform over unique identities for the election to be meaningful

- Threshold cryptography for decentralized committee election is an unexplored but promising research direction for the future

It's wiser to wait for these nascent technologies to mature before integrating them into critical high-value systems like Ethereum and its staking.

Leverage (27:00)

Overall, leverage is an external factor protocols must design for :

- We can view the "leverage" created by restaking as a tranche-style insurance model

- Liquid staking tokens already carry implicit leverage since they are used as collateral in DeFi lending markets to borrow other assets.

- Even in Proof-of-Work, mining farms were highly leveraged, borrowing against their ASIC machines to finance purchasing more mining hardware

Protocols cannot control the leverage users take externally, so margins of safety are critical in design

There is still design space for innovation once the initial liquid staking breakthroughs, like staking routers and delayed deposit thresholds, are established

Shared Sequencers (33:45)

Shared sequencers capture the MEV value that would normally accrue to rollup-specific sequencers, so rollups lose this benefit by adopting shared sequencing. There needs to be realignment of incentives for rollups to use shared sequencing if they lose their native MEV.

Shared sequencing should theoretically create additional interoperability MEV that doesn't exist today. The key is having transparent reporting of each individual rollup's marginal contribution to the total MEV generated by the shared sequencer.

Another option is estimating the counterfactual solo MEV that rollups could generate on their own, and incorporating that into fees. Randomly dropping rollups to measure impact on MEV can help estimate marginal MEV
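To make "marginal contribution" and "randomly dropping rollups" concrete, here is a minimal Python sketch under made-up assumptions. A hypothetical `total_mev` function returns the MEV a shared sequencer extracts for a set of rollups (each rollup's solo MEV plus an invented cross-rollup bonus). The sketch compares three estimates per rollup : counterfactual solo MEV, leave-one-out marginal MEV, and a random-drop estimate. Dropping each other rollup with probability 1/2 and averaging the marginal MEV is a Monte Carlo estimate of the Banzhaf value, a standard attribution rule from cooperative game theory; that choice is ours, not a method described in the talk.

```python
import random
from typing import Callable, Dict, FrozenSet

# Hypothetical numbers: MEV each rollup would produce alone, plus extra
# cross-rollup ("interoperability") MEV when two rollups share a sequencer.
SOLO_MEV = {"rollup_a": 10.0, "rollup_b": 6.0, "rollup_c": 2.0}
PAIR_BONUS = {
    frozenset({"rollup_a", "rollup_b"}): 4.0,
    frozenset({"rollup_b", "rollup_c"}): 1.0,
}

Value = Callable[[FrozenSet[str]], float]

def total_mev(rollups: FrozenSet[str]) -> float:
    """MEV the shared sequencer extracts when sequencing exactly `rollups`."""
    solo = sum(SOLO_MEV[r] for r in rollups)
    cross = sum(b for pair, b in PAIR_BONUS.items() if pair <= rollups)
    return solo + cross

def counterfactual_solo(rollups: FrozenSet[str], value: Value) -> Dict[str, float]:
    """MEV each rollup would generate with its own sequencer."""
    return {r: value(frozenset({r})) for r in rollups}

def leave_one_out(rollups: FrozenSet[str], value: Value) -> Dict[str, float]:
    """Drop exactly one rollup and measure how much total MEV falls."""
    full = value(rollups)
    return {r: full - value(rollups - {r}) for r in rollups}

def random_drop(rollups: FrozenSet[str], value: Value,
                trials: int = 20_000, seed: int = 0) -> Dict[str, float]:
    """Drop every other rollup independently with probability 1/2 and average
    rollup r's marginal MEV over those random coalitions (Banzhaf estimate)."""
    rng = random.Random(seed)
    names = sorted(rollups)
    totals = dict.fromkeys(names, 0.0)
    for _ in range(trials):
        kept = frozenset(r for r in names if rng.random() < 0.5)
        for r in names:
            others = kept - {r}
            totals[r] += value(others | {r}) - value(others)
    return {r: t / trials for r, t in totals.items()}

ALL = frozenset(SOLO_MEV)
print(counterfactual_solo(ALL, total_mev))
print(leave_one_out(ALL, total_mev))
print(random_drop(ALL, total_mev))
```

With these invented numbers, leave-one-out gives 14, 11 and 3 (summing to 28, more than the 23 of total MEV, because each rollup gets full credit for the cross-rollup MEV it unlocks), while the random-drop estimates land close to 12, 8.5 and 2.5, splitting that interoperability MEV between the rollups.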

Combinatorial auctions are possible but likely too expensive in practice for widespread adoption.

Rollups x Eigenlayer (39:45)

Allowing rollups to switch sequencers on a per-block basis creates competition between sequencers, who bid up the MEV revenue paid to rollups. This constant competition could help align incentives, though its viability still needs to be assessed.
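A per-block sequencer auction could look like the following minimal Python sketch. It assumes (this is not from the talk) that each sequencer bids its own estimate of a block's MEV in a sealed-bid second-price auction, so the winner pays the rollup the runner-up's bid. All sequencer names and values are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List

@dataclass
class BlockResult:
    block: int
    winner: str
    paid_to_rollup: float

def per_block_auctions(valuations: Dict[str, List[float]]) -> List[BlockResult]:
    """valuations[seq][b] is sequencer `seq`'s (hypothetical) MEV estimate for
    block b. Each block goes to the highest bidder, who pays the rollup the
    second-highest bid (0 if it is the only bidder)."""
    n_blocks = len(next(iter(valuations.values())))
    results = []
    for b in range(n_blocks):
        # Highest bid first; equal bids are ordered by sequencer name.
        bids = sorted(((v[b], name) for name, v in valuations.items()), reverse=True)
        winner = bids[0][1]
        price = bids[1][0] if len(bids) > 1 else 0.0
        results.append(BlockResult(b, winner, price))
    return results

competitive = per_block_auctions({"seq_x": [5.0, 3.0, 8.0], "seq_y": [4.0, 6.0, 7.0]})
monopoly = per_block_auctions({"seq_x": [5.0, 3.0, 8.0]})
print(sum(r.paid_to_rollup for r in competitive))  # 14.0
print(sum(r.paid_to_rollup for r in monopoly))     # 0.0
```

With a single sequencer there is no runner-up, so under this rule the rollup receives nothing; adding a second bidder is what pushes revenue toward the rollup. A second-price rule was picked because bidding one's true valuation is a dominant strategy in it, which keeps the sketch simple.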

Making protocols aware of external slashing events, like Ethereum being aware of Eigenlayer slashing, will be possible soon through upgrades like smart contract triggered withdrawals. This awareness improves security.

However, bugs in hastily added native commitment schemes could result in mass slashing. Ethereum may not want to rely on the subjective slashing veto committees that exist today.

A prudent path seems to be allowing experimentation on opt-in overlay protocols first (Layer 2s ?), before formal adoption into Ethereum. This leaves time to develop and vet any new staking logic.

Intro

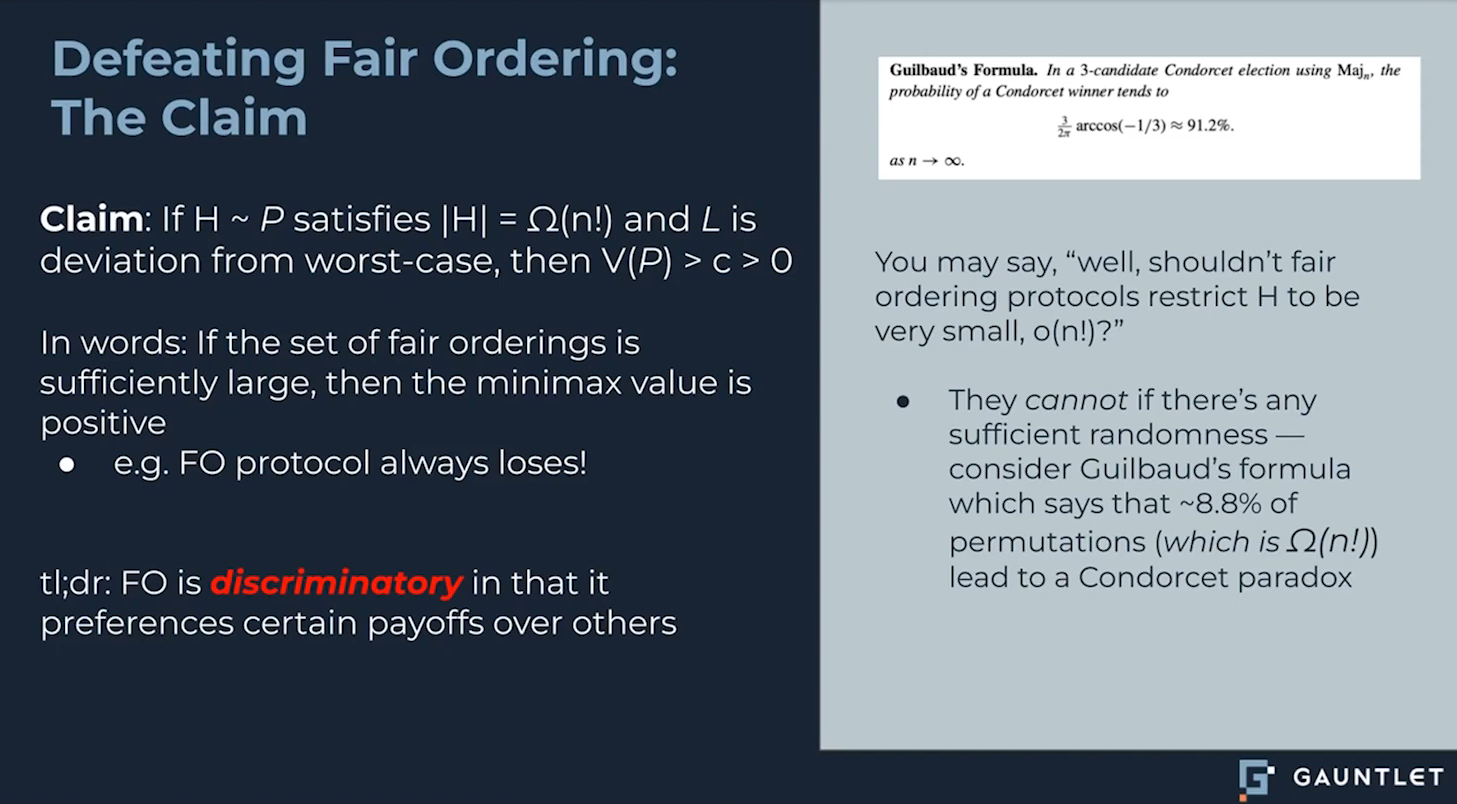

The idea of using concepts from social choice theory to make blockchains more fair through transaction ordering is interesting, but there are problems with how it has been implemented so far :

- Fair ordering mechanisms guarantee certain transaction orderings but don't analyze the economics or costs

- It's unclear if the extra work required by validators to enforce fair ordering has economic value or is harmful

The main goal is to dive deeper into the economics of fair ordering to see whether it delivers real net value, given its costs and tradeoffs. Tarun just wants to point out issues, not attack previous work

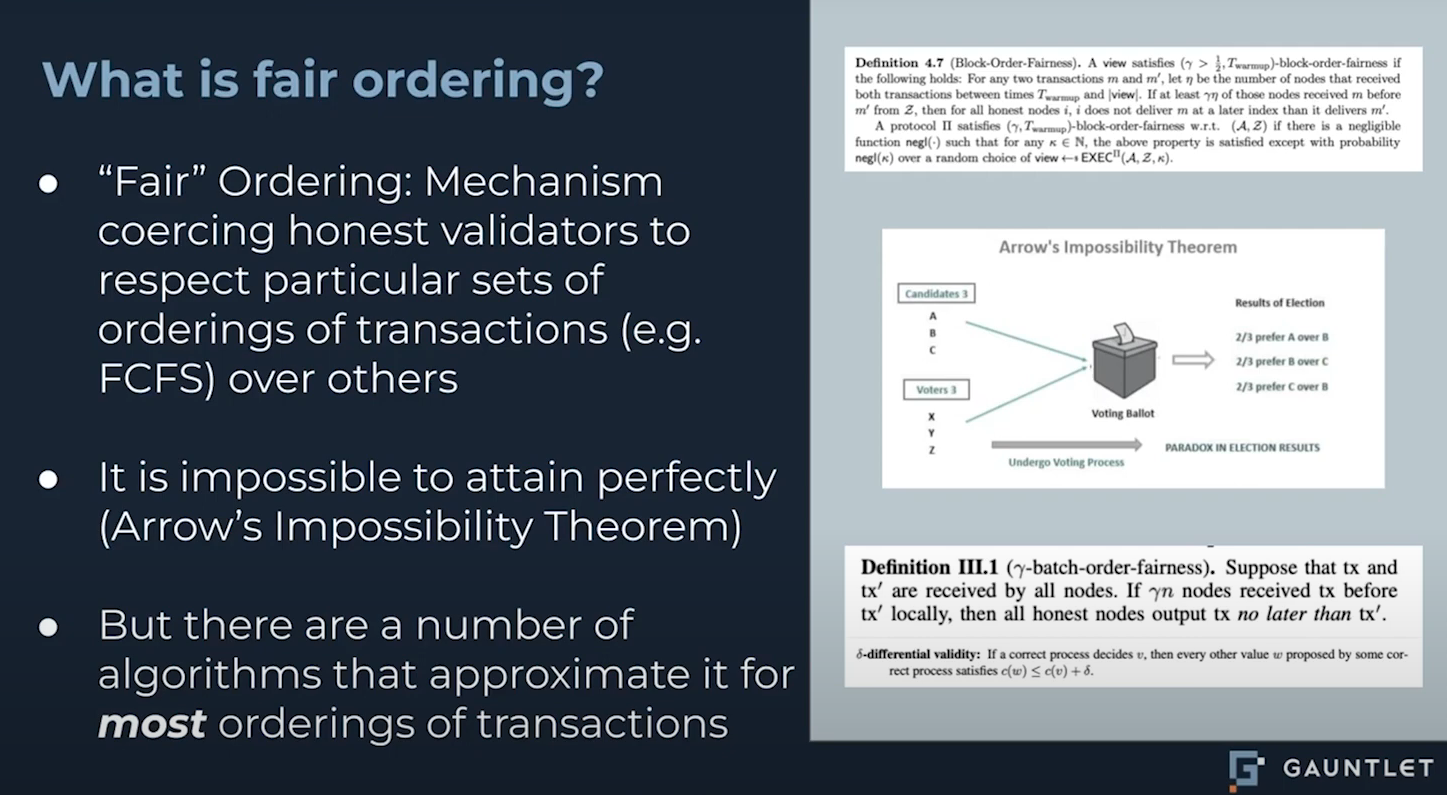

What is "Fair Ordering" ? (1:45)

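Kenneth Arrow's impossibility theorem proves that no ranked voting system over three or more options can satisfy a few basic fairness criteria (unanimity, independence of irrelevant alternatives, non-dictatorship) all at once. Validators ordering transactions are analogous to voters ranking options.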

Fair ordering algorithms try to approximate majority votes, but can't fully escape limitations like centralization and "dictatorships" where one validator has too much influence.
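The classic Condorcet paradox shows why approximating majority votes can't always work. In this Python sketch, three hypothetical validators receive the same three transactions in different orders. Every pair has a clear 2-of-3 majority, yet those majorities form a cycle, so no single ordering respects all of them. As far as we know, this is why Aequitas weakens its guarantee to "block-order fairness".

```python
from itertools import combinations, permutations
from typing import List, Optional, Tuple

def majority_edges(orders: List[List[str]]) -> List[Tuple[str, str]]:
    """Edge (a, b) means a strict majority of validators received a before b.
    Assumes an odd number of validators, so there are no ties."""
    txs = sorted(orders[0])
    edges = []
    for a, b in combinations(txs, 2):
        a_first = sum(o.index(a) < o.index(b) for o in orders)
        edges.append((a, b) if 2 * a_first > len(orders) else (b, a))
    return edges

def order_respecting_majorities(orders: List[List[str]]) -> Optional[List[str]]:
    """Return an ordering consistent with every pairwise majority, or None."""
    edges = majority_edges(orders)
    for perm in permutations(sorted(orders[0])):
        pos = {tx: i for i, tx in enumerate(perm)}
        if all(pos[a] < pos[b] for a, b in edges):
            return list(perm)
    return None

validators = [
    ["t1", "t2", "t3"],  # validator A
    ["t2", "t3", "t1"],  # validator B
    ["t3", "t1", "t2"],  # validator C
]
print(majority_edges(validators))               # t1->t2, t3->t1, t2->t3: a cycle
print(order_respecting_majorities(validators))  # None
```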

The problem lurks in fairness definitions. These definitions describe the properties of the partial transaction orders that result from fair ordering. However, they do not quantify the economic value or rent extracted per partial order, whereas MEV is an economic profit.

Fair ordering is a lie (3:30)

Themis, Aequitas, and Quick Order Fairness claimed that Fair Ordering eliminates MEV. The intentions are good, but the approach faces several problems :

- It doesn't make sense to be agnostic to all applications when some generate regular MEV (AMMs) and others generate spiky MEV (liquidations)

- Fair ordering is not universally applicable to all applications. Because MEV profiles differ across applications, what improves fairness for one app may hurt another app.

- It may be harmful to force validators to do extra work without economic payoff

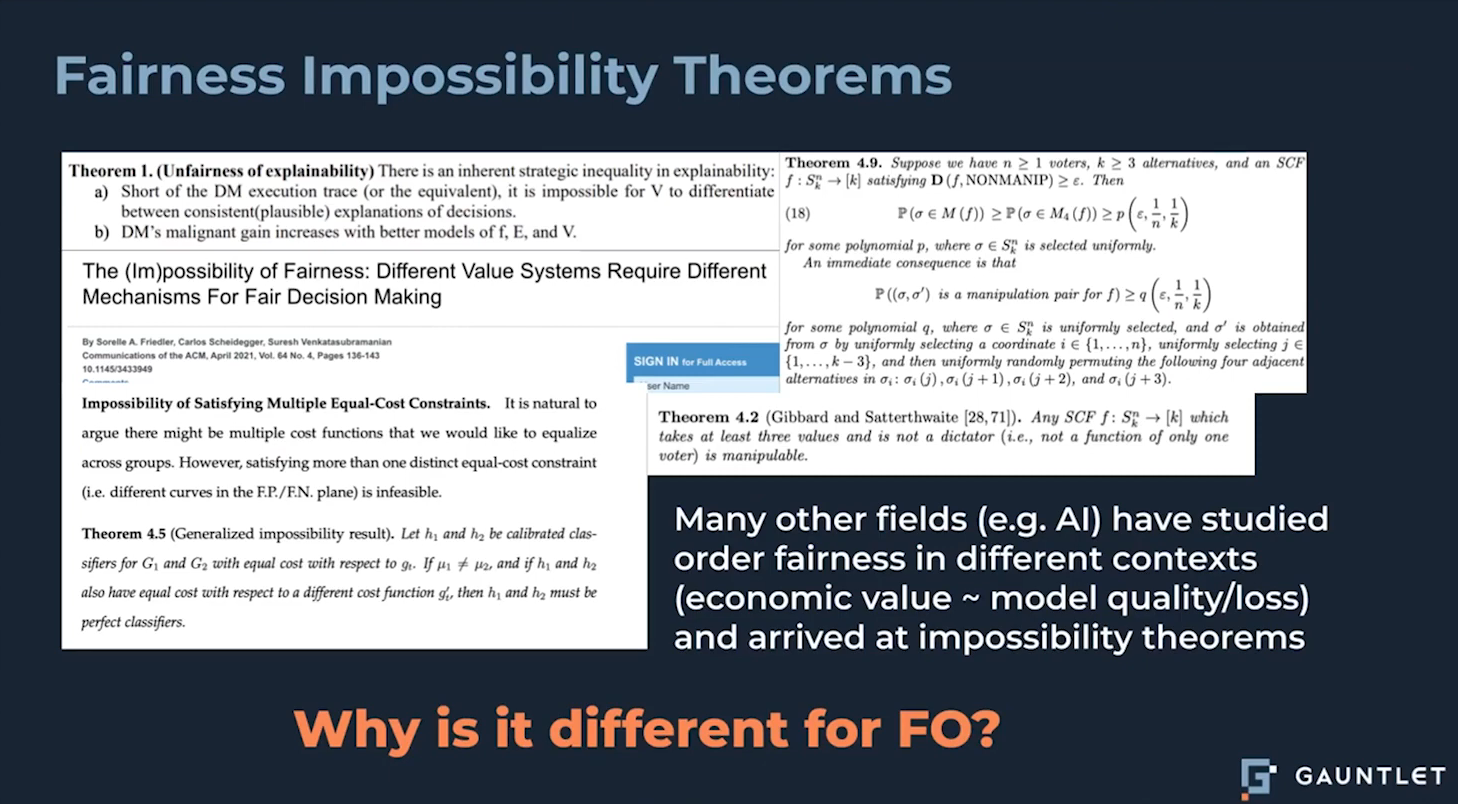

Furthermore, other fields like decision theory and AI get impossibility results when studying order fairness for objectives like model quality. The same should be expected for Fair Ordering's impact on the economic value of MEV.

How to break Fair Ordering

Given the above issues, the talk will now dive into the details of why fair ordering is fundamentally problematic

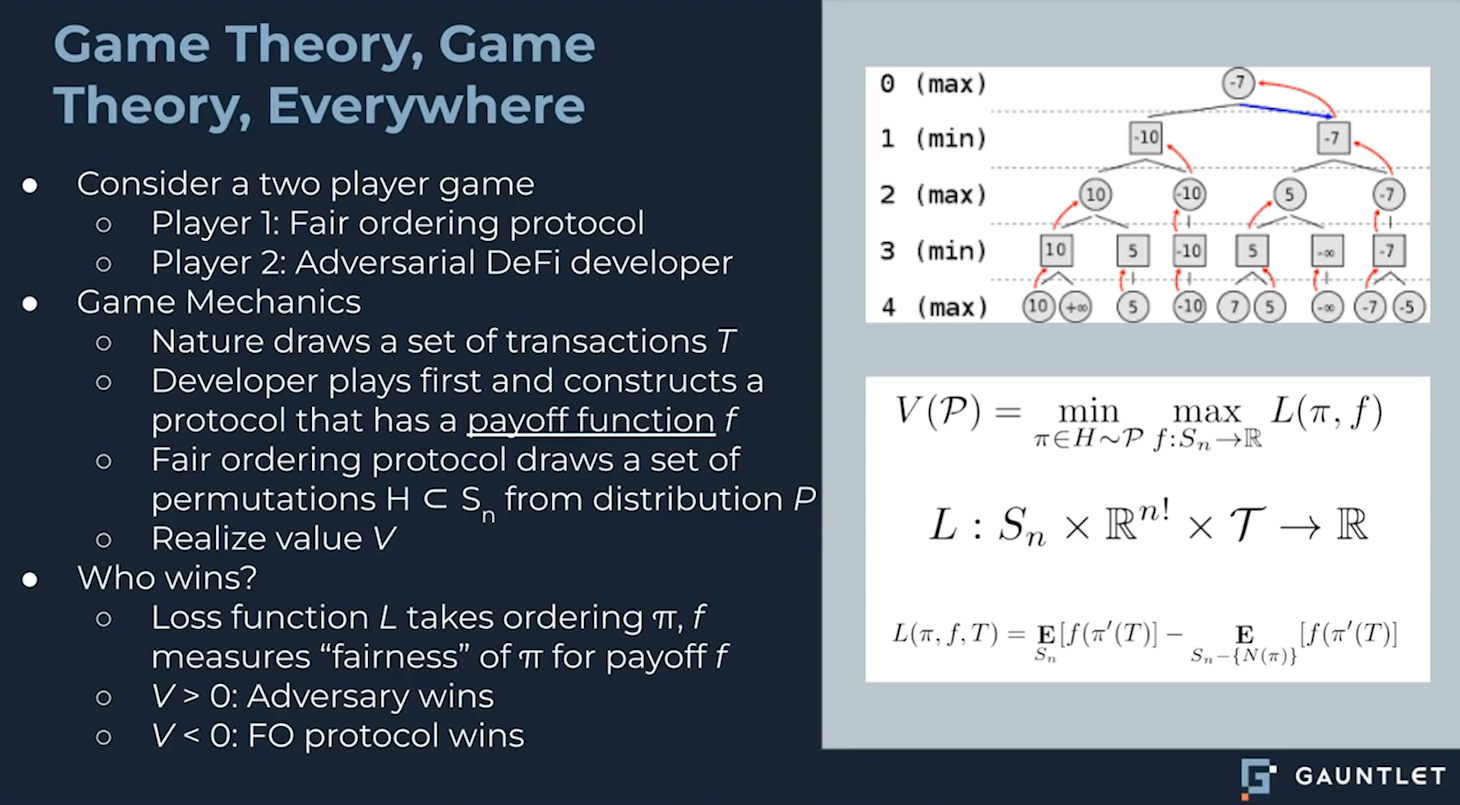

Game Theory (6:00)

Let's consider a two-player game :

- The fair ordering protocol that constrains which transaction orderings are allowed

- An adversarial DeFi developer trying to design a protocol that exploits the fair ordering

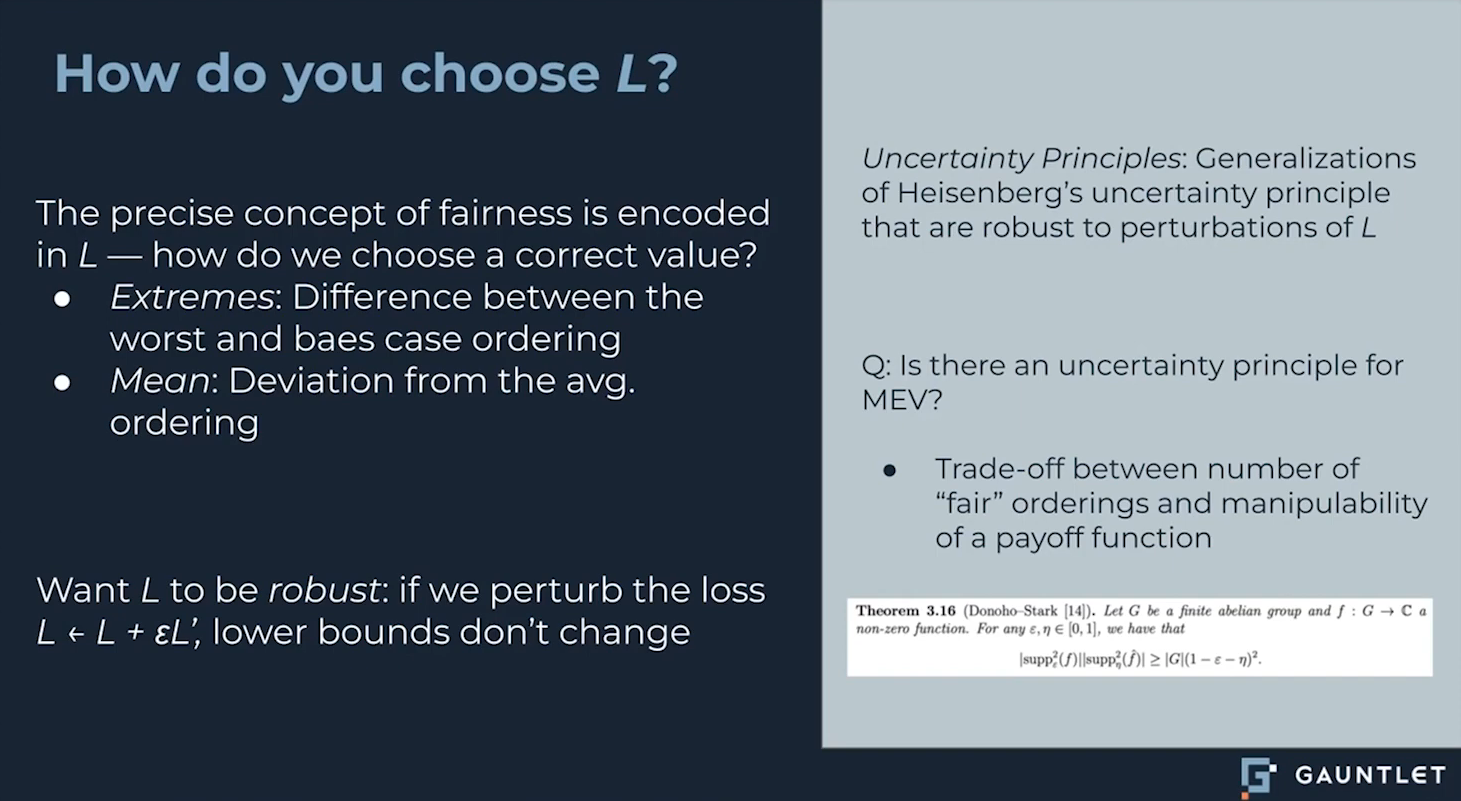

Following the game mechanics (described above), we analyze this game using a min-max loss function that aims to measure fairness. The choice of loss function affects notions of fairness.

- If the game value is positive, the developer was able to exploit the fair ordering, i.e. build an app that the allowed orderings systematically disadvantage

- If negative, the fair ordering limited exploitation.

The more constrained the set of allowed orderings, the more power the fair ordering protocol has to win.

Why does this game represent Fair Ordering protocols ? (8:45)

Fair ordering protocols permit a large set of transaction orderings, making it easy for adversarial developers to find ways to exploit the protocol. On the other hand, restricting the set can limit exploitation.

Combinatorial constraints from the allowed ordering set bound the game value.

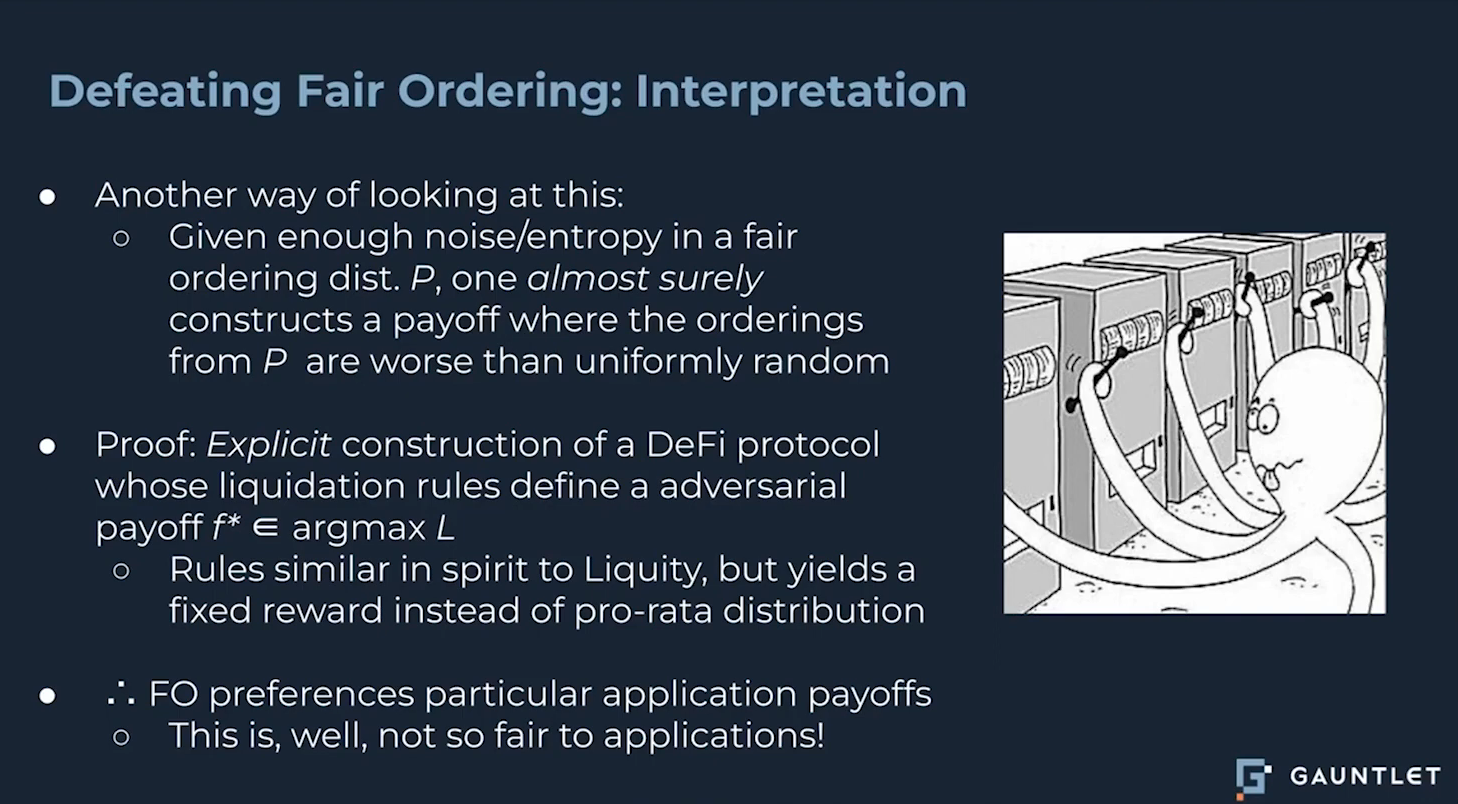

How does this game break Fair Ordering ? (9:30)

Given sufficient randomness in orderings, adversarial payoff functions can be constructed to exploit fair ordering.

A DeFi protocol whose liquidation rules perform poorly on fair ordering's allowed permutations shows that Fair Ordering implicitly picks winners and losers by favoring some apps over others.

And favoring some apps is, well... not fair

About Payoffs

Payoffs represent economic value to users from transactions. Some examples :

- AMM payoffs : The economic value gained by users of an automated market maker protocol based on the transaction order.

- Liquidation payoffs : The economic value from liquidations being triggered based on asset price thresholds and the transaction order

In general, payoffs aim to quantify the profits, rents, or economic welfare generated for users by the execution of transactions in some order
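Both payoff examples can be made concrete with a small Python sketch. It assumes a fee-less constant-product AMM (x * y = k) with made-up reserves, and a hypothetical lending position with made-up collateral, debt, prices and liquidation bonus; none of these numbers come from the talk.

```python
from typing import Dict, List

def amm_payoffs(order: List[str], trades: Dict[str, float],
                reserve_x: float = 1000.0, reserve_y: float = 1000.0) -> Dict[str, float]:
    """Token Y received by each user when their X->Y swaps run in `order`
    on a fee-less constant-product pool."""
    received = {}
    for user in order:
        dx = trades[user]
        dy = reserve_y * dx / (reserve_x + dx)  # keeps reserve_x * reserve_y constant
        reserve_x += dx
        reserve_y -= dy
        received[user] = dy
    return received

def liquidation_payoff(order: List[str], collateral: float = 10.0, debt: float = 15.0,
                       old_price: float = 2.0, new_price: float = 1.4,
                       bonus_rate: float = 0.05) -> float:
    """Liquidator's reward: the liquidation only succeeds if it runs after the
    oracle update that pushes the position under water."""
    price = old_price
    for tx in order:
        if tx == "oracle_update":
            price = new_price
        elif tx == "liquidate" and collateral * price < debt:
            return bonus_rate * debt
    return 0.0

trades = {"alice": 100.0, "bob": 100.0}
print(amm_payoffs(["alice", "bob"], trades))  # alice gets ~90.9, bob ~75.8
print(amm_payoffs(["bob", "alice"], trades))  # alice gets ~75.8, bob ~90.9
print(liquidation_payoff(["oracle_update", "liquidate"]))  # ~0.75
print(liquidation_payoff(["liquidate", "oracle_update"]))  # 0.0
```

In the AMM case the total amount of Y paid out (about 166.7) is the same in both orders; ordering only decides who gets the better price. In the liquidation case, ordering decides whether there is any payoff at all.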

The tradeoff which discriminates (12:15)

The more constrained the set of allowed orderings, the less flexibility adversarial developers have to construct payoff functions that exploit certain orderings.

However, overly restricting orderings makes it difficult for fair ordering protocols to accommodate randomness from things like network latency. This can violate assumptions and lead to unintended consequences.

These constraints create a tradeoff between set size and manipulability. Shrinking the set reduces manipulation but makes the protocol too rigid. On the other hand, expanding the set increases adaptability but enables more manipulation.

If the Fair Ordering protocol allows a sufficiently large set of orderings, the min-max game value is positive, meaning the adversarially designed DeFi protocol does worse. Hence, Fair Ordering is discriminatory.
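The tradeoff can be illustrated with a toy Python model; the model is our own simplification, not the formulation from the talk. Transactions 0..n-1 are sent at times 0..n-1, and a hypothetical fair ordering rule only accepts orderings in which each transaction sits at most `w` positions from its send position. "Manipulability" is the share of transaction pairs whose relative order the allowed set leaves open (pairs an adversarial payoff function could key on), and "robustness" is the share of latency-jittered orderings (Gaussian noise, an assumption) that the rule can accept.

```python
import random
from itertools import combinations, permutations
from typing import List, Sequence, Set, Tuple

Ordering = Tuple[int, ...]

def allowed_set(n: int, w: int) -> Set[Ordering]:
    """Orderings of txs 0..n-1 in which every tx is at most w positions
    away from its send position (a hypothetical fair ordering rule)."""
    return {p for p in permutations(range(n))
            if all(abs(p.index(tx) - tx) <= w for tx in range(n))}

def manipulability(S: Set[Ordering], n: int) -> float:
    """Share of tx pairs whose relative order is not fixed by S."""
    pairs = list(combinations(range(n), 2))
    open_pairs = sum(len({p.index(a) < p.index(b) for p in S}) == 2 for a, b in pairs)
    return open_pairs / len(pairs)

def observed_orders(n: int, jitter: float, samples: int, seed: int = 0) -> List[Ordering]:
    """Orderings seen when tx i is sent at time i and latency adds
    Gaussian noise with standard deviation `jitter`."""
    rng = random.Random(seed)
    orders = []
    for _ in range(samples):
        arrival = [i + rng.gauss(0.0, jitter) for i in range(n)]
        orders.append(tuple(sorted(range(n), key=lambda i: arrival[i])))
    return orders

def robustness(S: Set[Ordering], observed: Sequence[Ordering]) -> float:
    """Share of latency-perturbed orderings that fall inside S."""
    return sum(o in S for o in observed) / len(observed)

N = 4
obs = observed_orders(N, jitter=1.0, samples=5_000)
for w in range(N):
    S = allowed_set(N, w)
    print(f"w={w} |S|={len(S):2d} "
          f"manipulability={manipulability(S, N):.2f} robustness={robustness(S, obs):.2f}")
```

As `w` grows, both numbers go up : a wider window tolerates more latency noise but leaves more pairs open to manipulation, which is the tradeoff between set size and manipulability described above.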

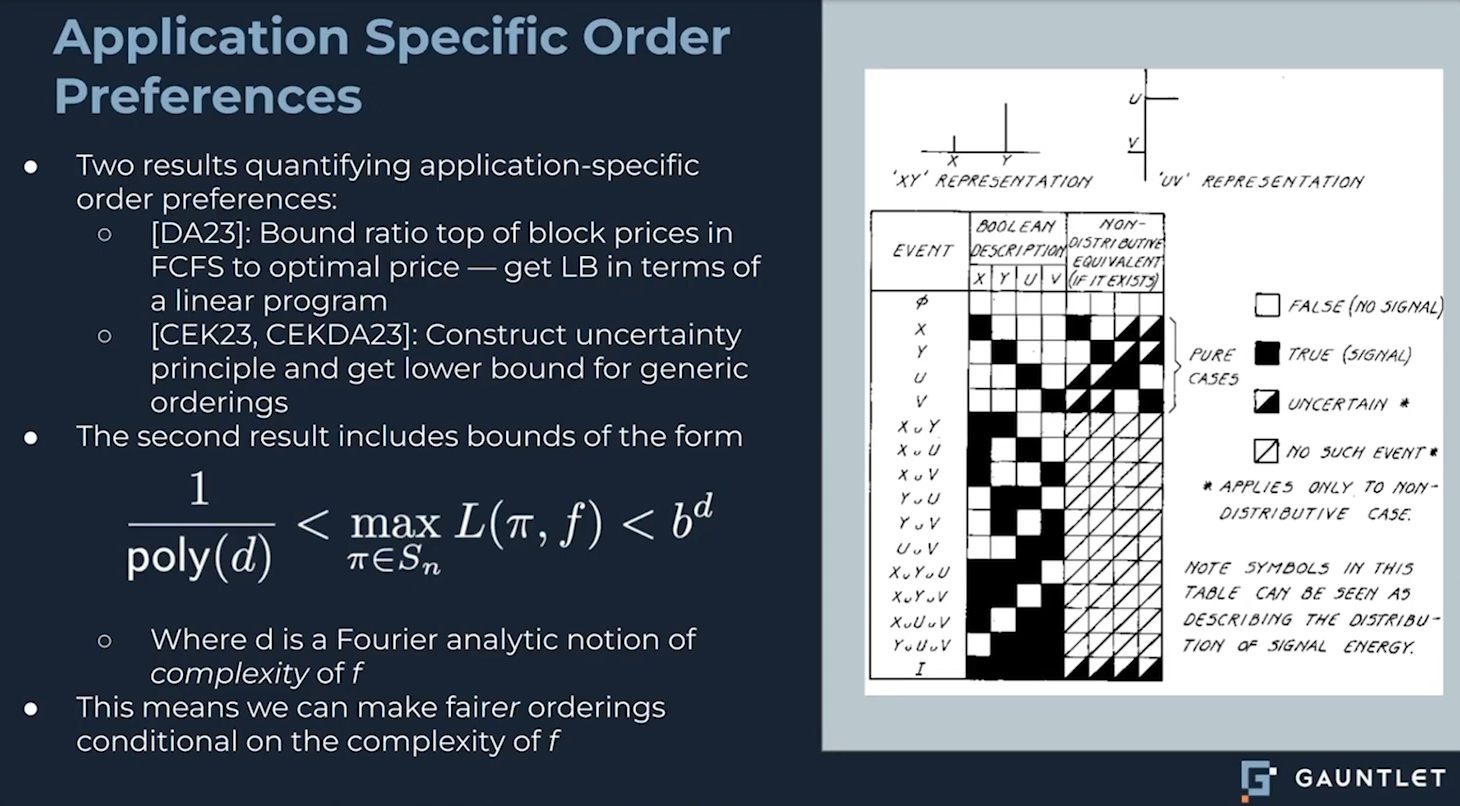

Let's be optimistic (16:30)

Rather than one universal approach, order preferences tailored to specific applications could be more effective, for 2 reasons :

- One study looked at first-come-first-serve ordering (Arbitrum style) applied only to the top transaction and formally proved it is always suboptimal compared to the best ordering

- The lowest possible manipulation depends on how complex the payoff function is. In other words, simpler smart contracts can be fairer

Instead of seeking perfect universal fairness, we can analyze specific simple policies or simplify smart contracts.

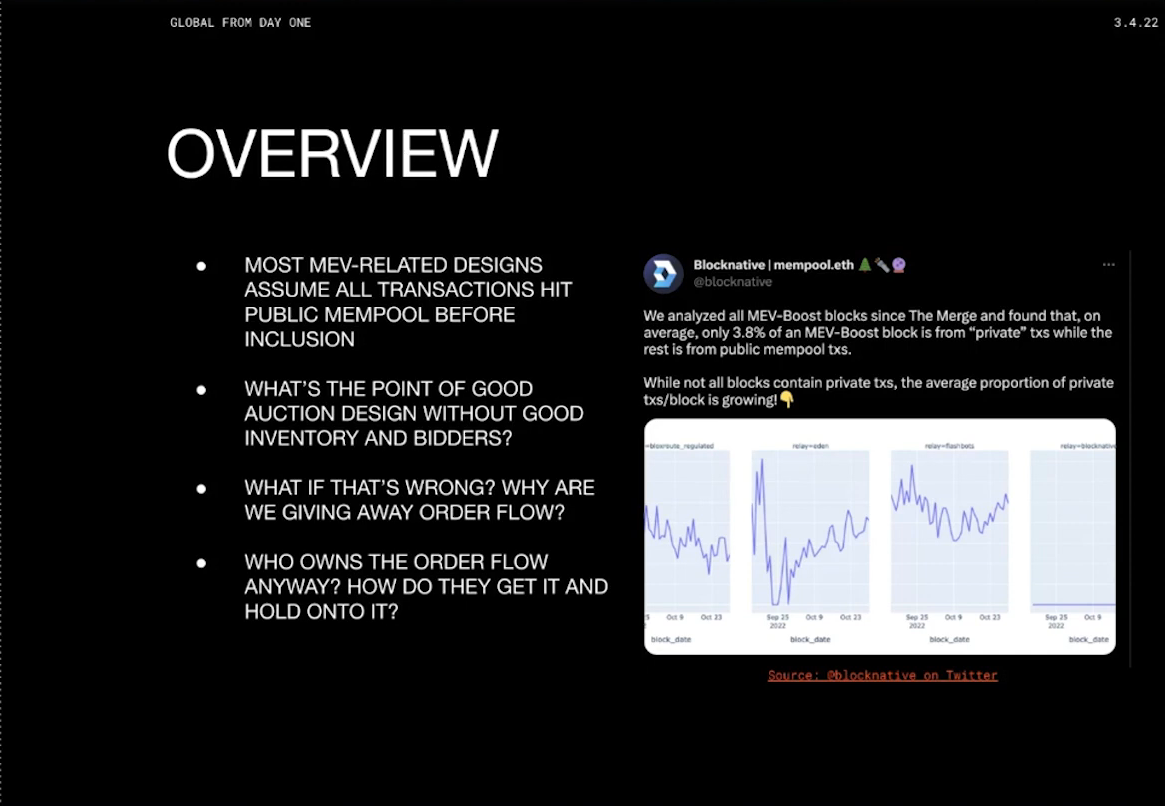

Overview (1:30)

Most MEV research has focused on the current system, where users sign transactions that get broadcast to the public mempool. However, there are signs this model is shifting, with more transactions going through private mempools. Analysis by Blocknative showed that 3.8% of transactions don't hit the public mempool, and the percentage is likely higher now.

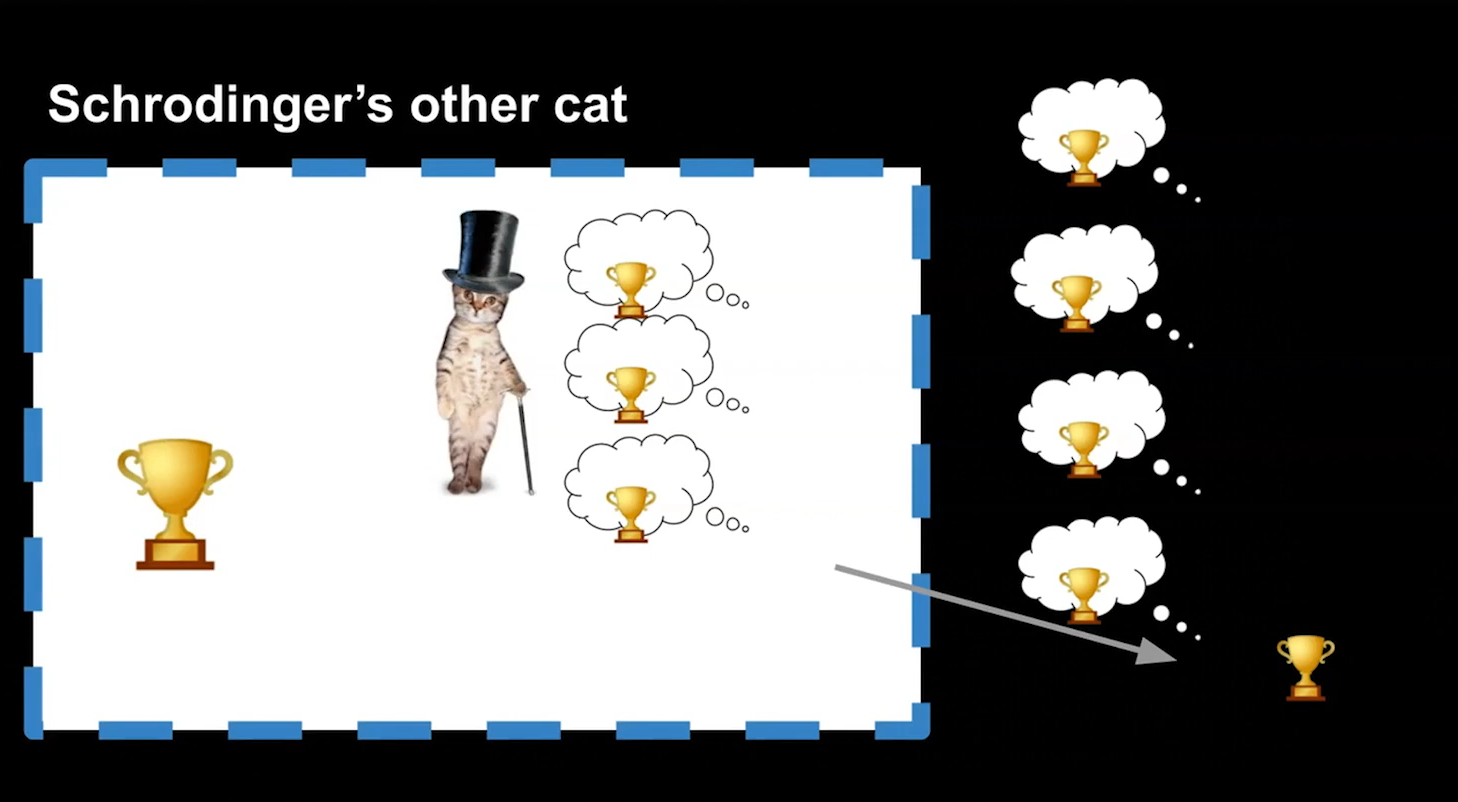

The standard MEV supply chain relies on searchers seeing transactions in the public mempool, but more private mempools mean searchers can't access that transaction data. So assumptions about how MEV works are becoming outdated.

Researchers have proposed better MEV auction designs to make things fairer, but these designs only work if transactions are public. Without incentives for people to participate in the auctions, these designs end up going to waste.

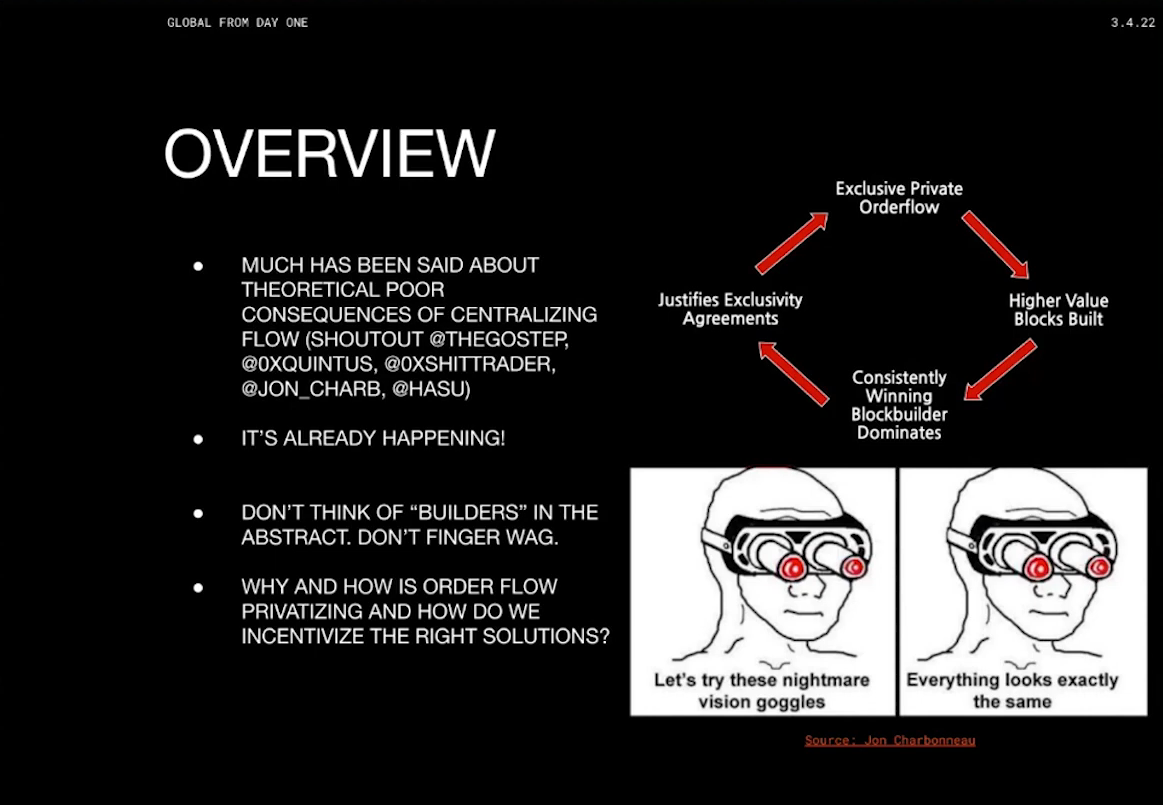

Most discussions just complain that privatization of order flow is harmful, as it leads to centralization of block building with a few big players and creates a flywheel effect (described above)

But instead of criticizing, we need to understand why and how order flow gets privatized. From there, we can work out how to incentivize decentralized participation.

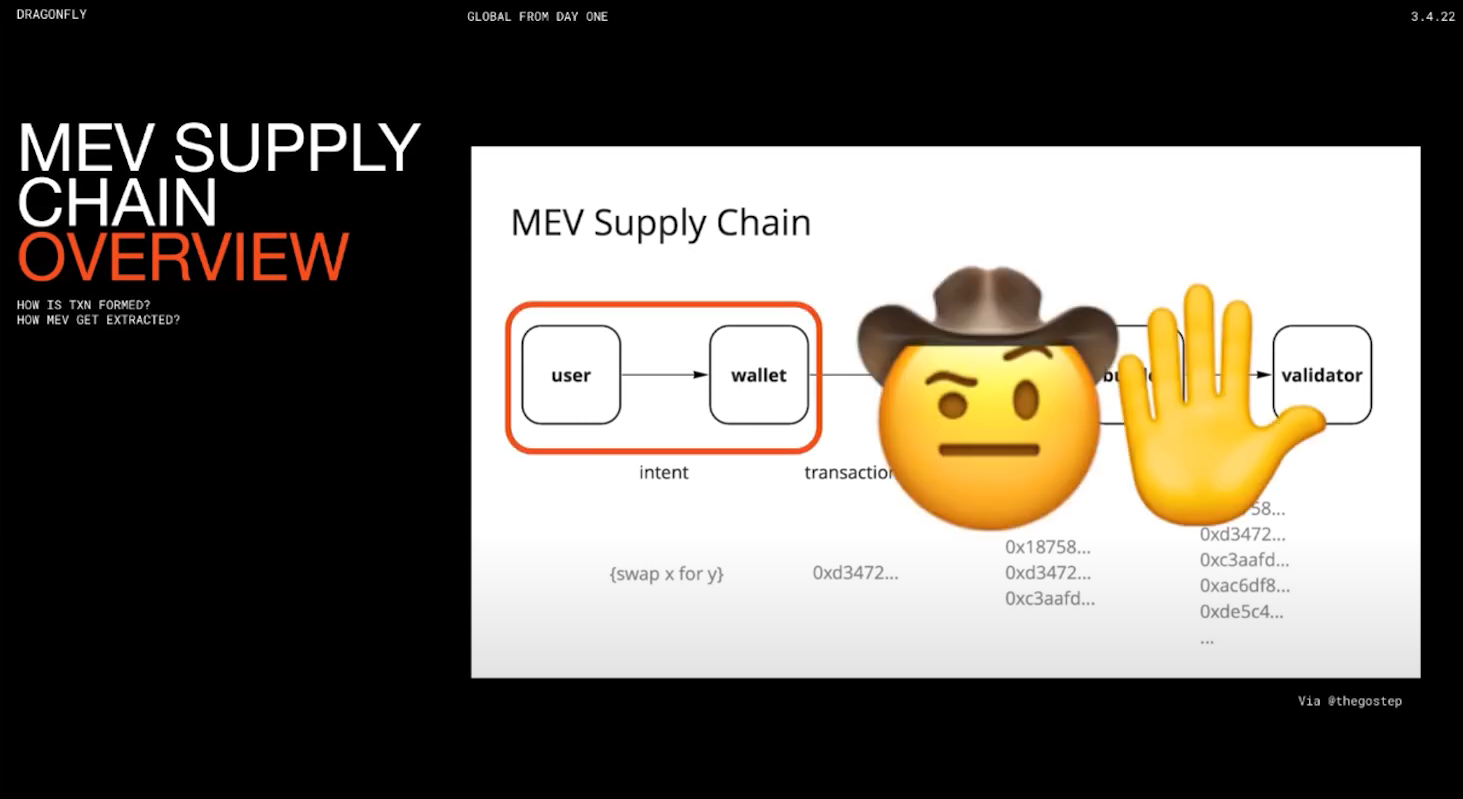

MEV Supply Chain overview (3:30)

The standard MEV supply chain is user -> wallet -> searcher -> builder -> validator, but this view is too simplistic. The wallet-to-searcher step is where there is the most leverage and negotiating power.

Being further upstream in the supply chain confers more leverage. For example, wallets have leverage over searchers in terms of which transactions get passed on.

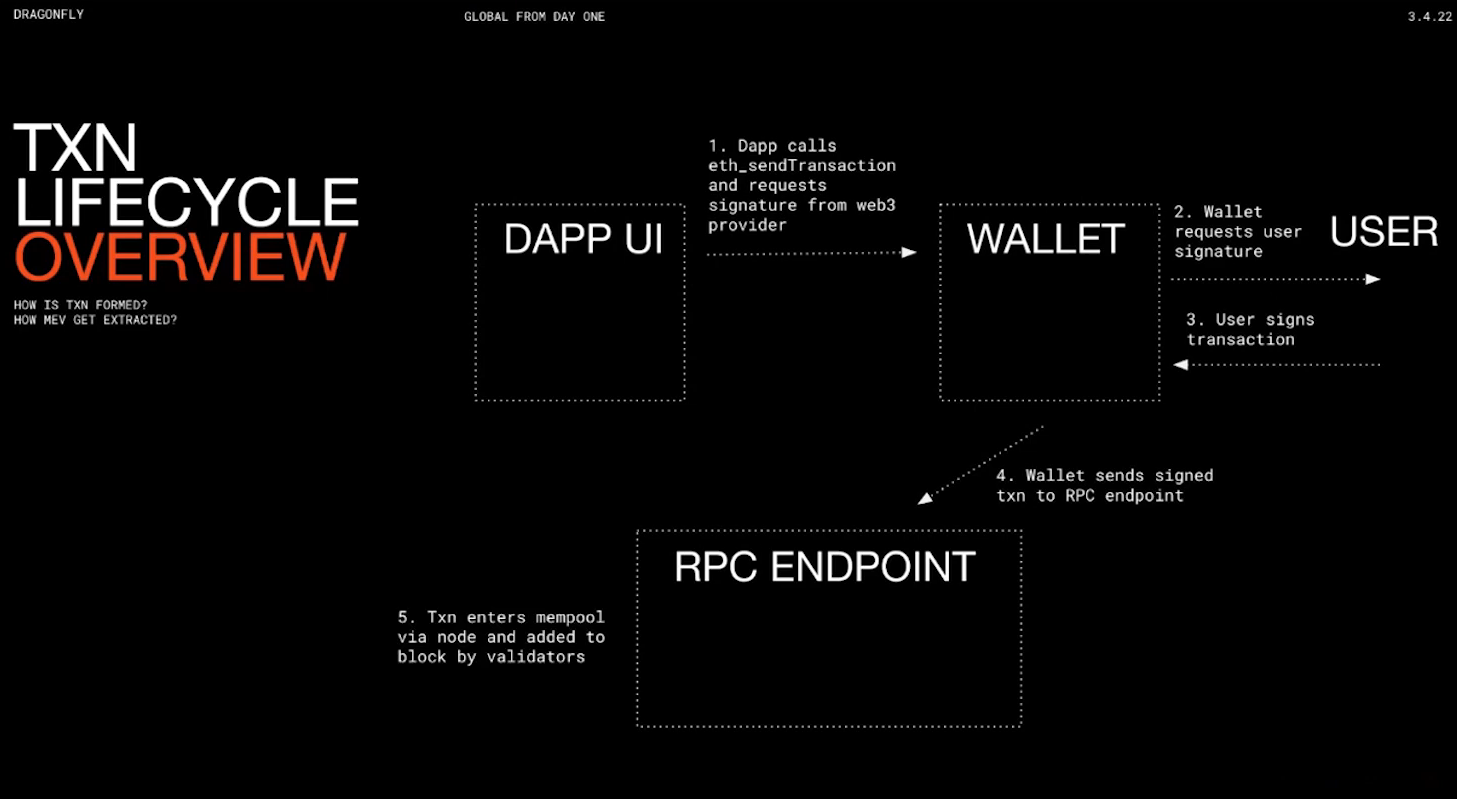

So this is how transactions get formed :

- UI sends signature request to wallet

- Wallet asks user to sign

- User sends signed transaction to wallet

- Wallet sends transaction to RPC endpoint

- Enters public mempool
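The step where the wallet hands the signed transaction to an RPC endpoint is a standard Ethereum JSON-RPC call, `eth_sendRawTransaction`. This Python sketch only builds the request body. The transaction bytes are a placeholder, and whichever endpoint the wallet POSTs this body to is exactly the routing decision that gives wallets their leverage.

```python
import json
from itertools import count

_ids = count(1)

def send_raw_transaction_body(signed_tx_hex: str) -> str:
    """JSON-RPC 2.0 body a wallet POSTs to its RPC endpoint to broadcast an
    already-signed transaction."""
    if not signed_tx_hex.startswith("0x"):
        raise ValueError("expected 0x-prefixed hex")
    return json.dumps({
        "jsonrpc": "2.0",
        "id": next(_ids),
        "method": "eth_sendRawTransaction",
        "params": [signed_tx_hex],
    })

# Placeholder bytes: a real wallet would pass the full signed, RLP-encoded tx.
body = send_raw_transaction_body("0xdeadbeef")
print(body)
```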

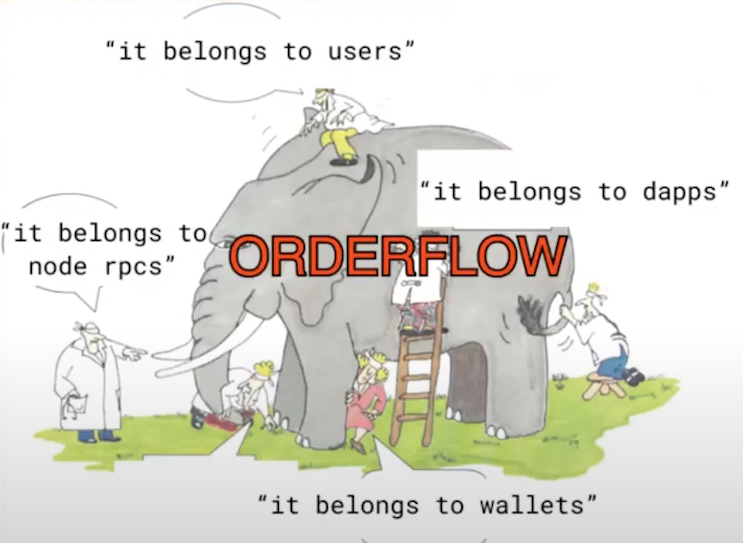

Who the fuck does Order Flow belong to ?

All parties in the transaction lifecycle want to capture more of it for themselves, and this flow has started to break down because of that.

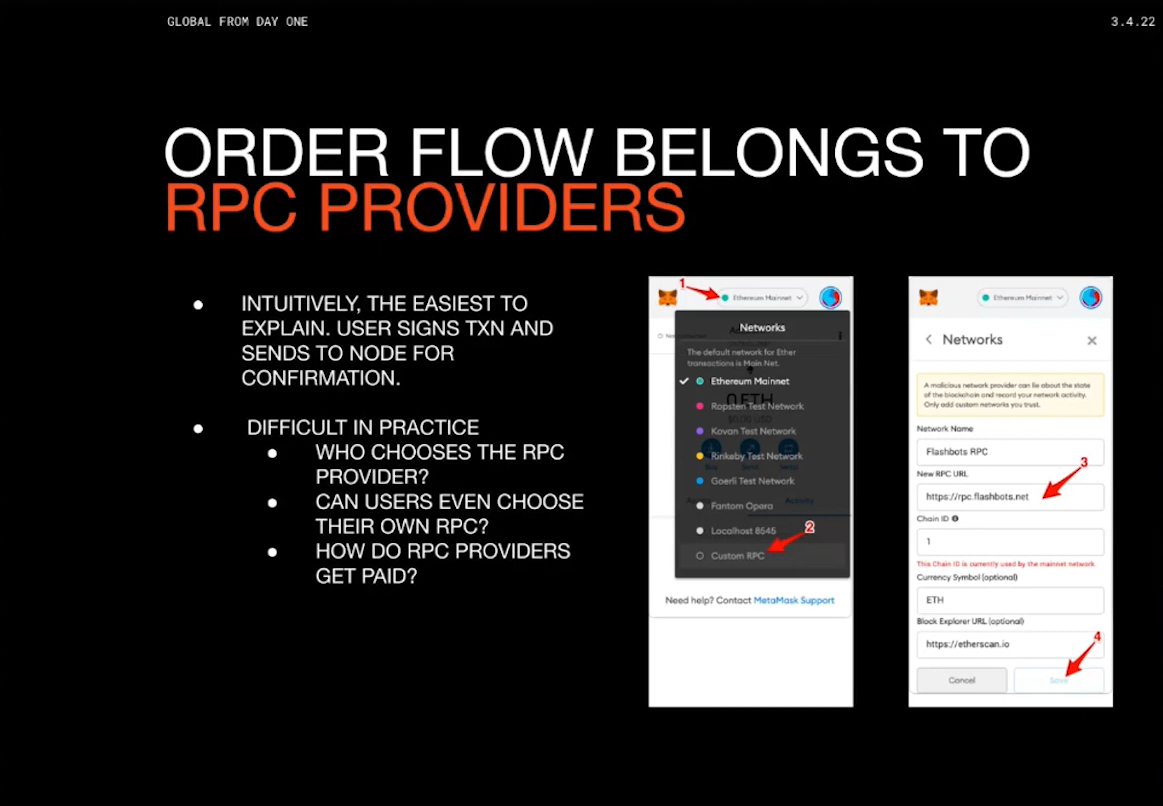

RPC Providers (5:15)

Common perception is RPC providers control order flow, but custom RPCs face challenges :

- Building custom RPCs has tough barriers to entry for new projects

- Many wallets don't allow easily changing default RPC provider

- It's unclear how custom RPC providers can monetize or get paid

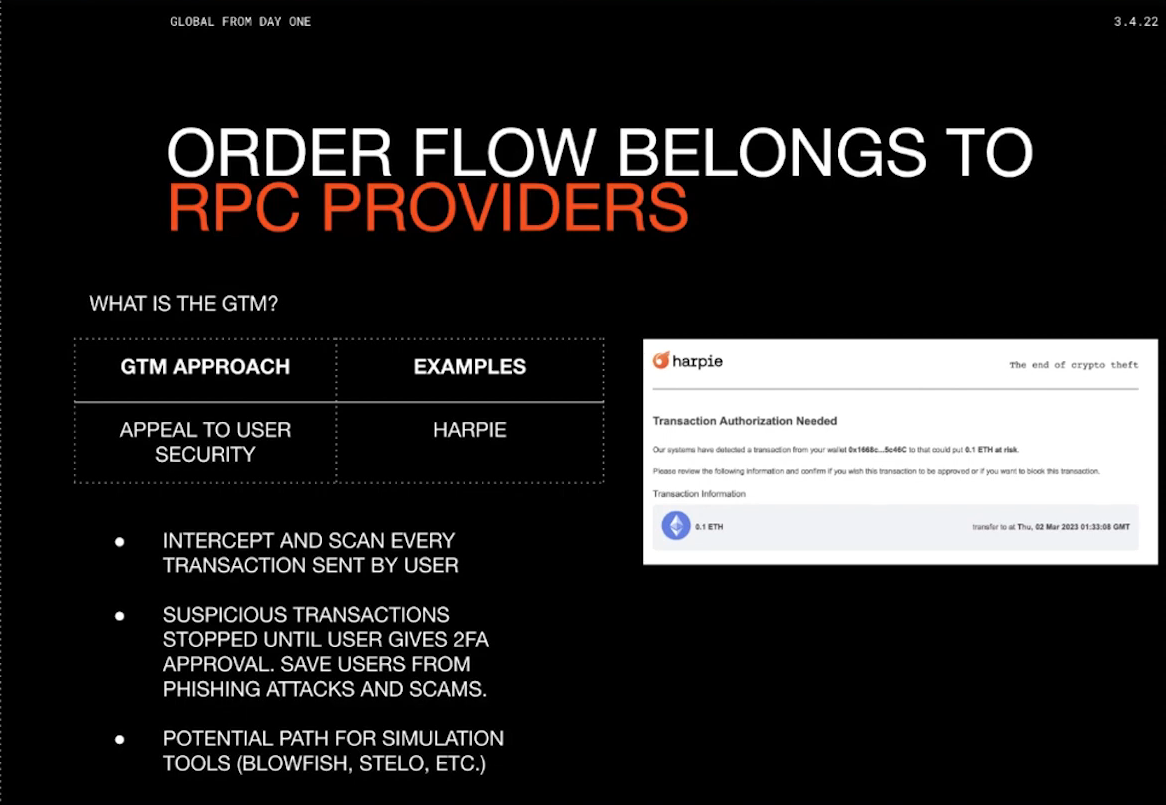

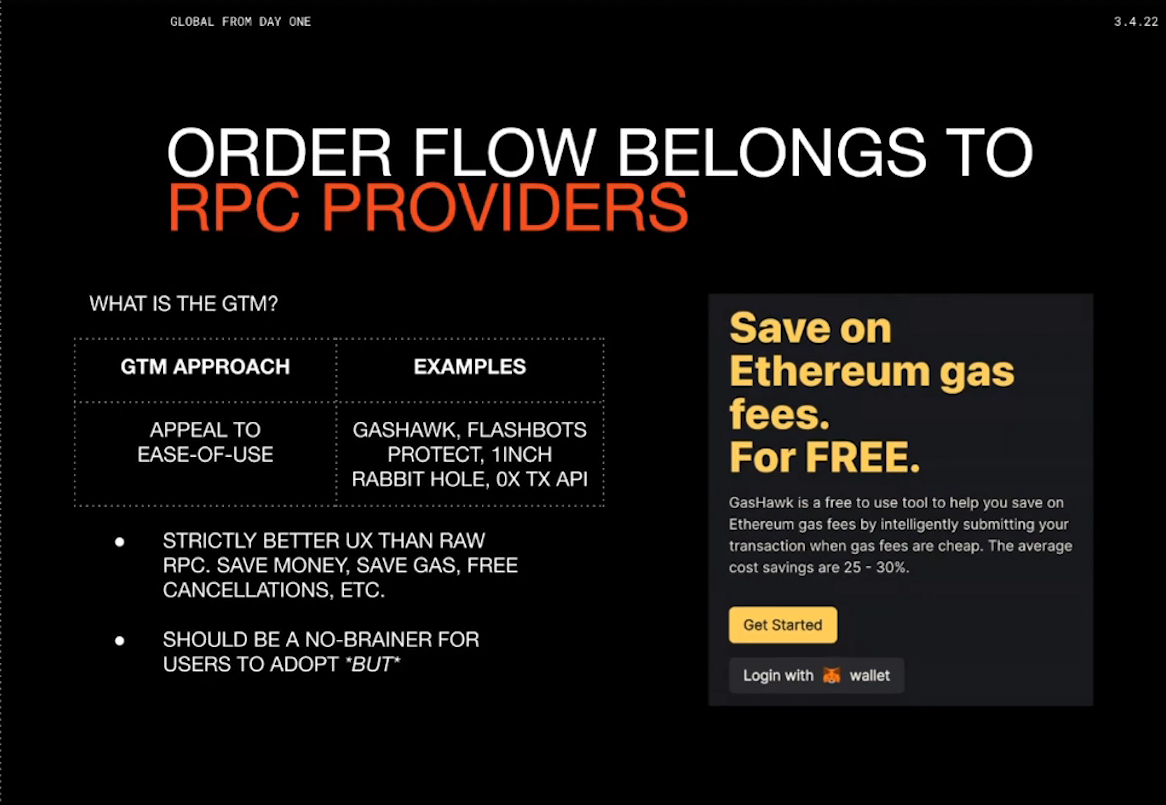

One option is security-focused RPCs : instead of just blasting txs into the public mempool, they can intercept and scan each transaction for risks (Harpie) and offer options like 2FA approval for high-risk transactions.
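A security-focused RPC sits between the wallet and the mempool and decides what to do with each transaction. This Python sketch is hypothetical (it is not how Harpie works). It flags a denylisted recipient, a large transfer, or an unlimited ERC-20 `approve` (selector `0x095ea7b3` with an amount of 2**256 - 1), then blocks, asks for 2FA, or forwards. The thresholds, addresses and denylist are assumptions.

```python
from dataclasses import dataclass
from typing import List, Set

APPROVE_SELECTOR = "0x095ea7b3"   # ERC-20 approve(address,uint256)
MAX_UINT256_WORD = "f" * 64       # 2**256 - 1 as a 32-byte hex word

@dataclass
class Tx:
    to: str
    value_eth: float
    data: str = "0x"

def risk_flags(tx: Tx, denylist: Set[str], large_value_eth: float = 10.0) -> List[str]:
    """Hypothetical checks a security-focused RPC might run before broadcasting."""
    flags = []
    data = tx.data.lower()
    if tx.to.lower() in denylist:
        flags.append("denylisted recipient")
    if tx.value_eth >= large_value_eth:
        flags.append("large transfer")
    if data.startswith(APPROVE_SELECTOR) and data.endswith(MAX_UINT256_WORD):
        flags.append("unlimited token approval")
    return flags

def route(tx: Tx, denylist: Set[str]) -> str:
    """Block, hold for 2FA, or forward to the public mempool."""
    flags = risk_flags(tx, denylist)
    if "denylisted recipient" in flags:
        return "block"
    if flags:
        return "require_2fa"
    return "forward"

drainer = "0x" + "ab" * 20                 # hypothetical malicious address
spender = "00" * 12 + "cd" * 20            # 32-byte padded spender address
unlimited_approve = APPROVE_SELECTOR + spender + MAX_UINT256_WORD

print(route(Tx(drainer, 0.5), {drainer}))                              # block
print(route(Tx("0x" + "11" * 20, 0.0, unlimited_approve), {drainer}))  # require_2fa
print(route(Tx("0x" + "11" * 20, 0.1), {drainer}))                     # forward
```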

Another option is RPCs focused on user experience : they improve ease of use compared to existing providers (Infura, public RPCs...). For example, they can optimize gas prices, avoid failed txs, or wait for price quotes.
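For gas optimization, one widely used default (an assumption here, not something the talk prescribes) is to set EIP-1559's `maxFeePerGas` to twice the current base fee plus the priority tip. Since the base fee can rise by at most 1/8 (12.5%) per full block, this Python sketch counts how many consecutive full blocks such a transaction stays valid for.

```python
GWEI = 10**9

def suggest_fees(base_fee_wei: int, tip_wei: int = 2 * GWEI) -> dict:
    """Heuristic EIP-1559 fee fields: maxFeePerGas = 2 * baseFee + tip."""
    return {"maxPriorityFeePerGas": tip_wei,
            "maxFeePerGas": 2 * base_fee_wei + tip_wei}

def full_blocks_tolerated(base_fee_wei: int, max_fee_wei: int) -> int:
    """Consecutive full blocks after which maxFeePerGas still covers the base
    fee, if the base fee rises by the maximum step (1/8, at least 1 wei) each block."""
    blocks, fee = 0, base_fee_wei
    while True:
        fee += max(fee // 8, 1)
        if fee > max_fee_wei:
            return blocks
        blocks += 1

fees = suggest_fees(30 * GWEI)
print(fees["maxFeePerGas"] // GWEI)                            # 62
print(full_blocks_tolerated(30 * GWEI, fees["maxFeePerGas"]))  # 6
```

Doubling the base fee buys roughly six full blocks of headroom (1.125^6 is about 2.03), which is why the heuristic rarely leaves transactions stuck while the tip stays what the user chose.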

Both options should be superior to existing RPCs, but it is difficult to get users to change their default provider. So instead of having users switch their RPC over, we can sell directly to wallets :

- Wallets have more leverage, new monetization options

- Can outsource mev extraction to others

- Users may get some mev share, improving wallet stickiness

- New revenue stream for wallets beyond swap fees

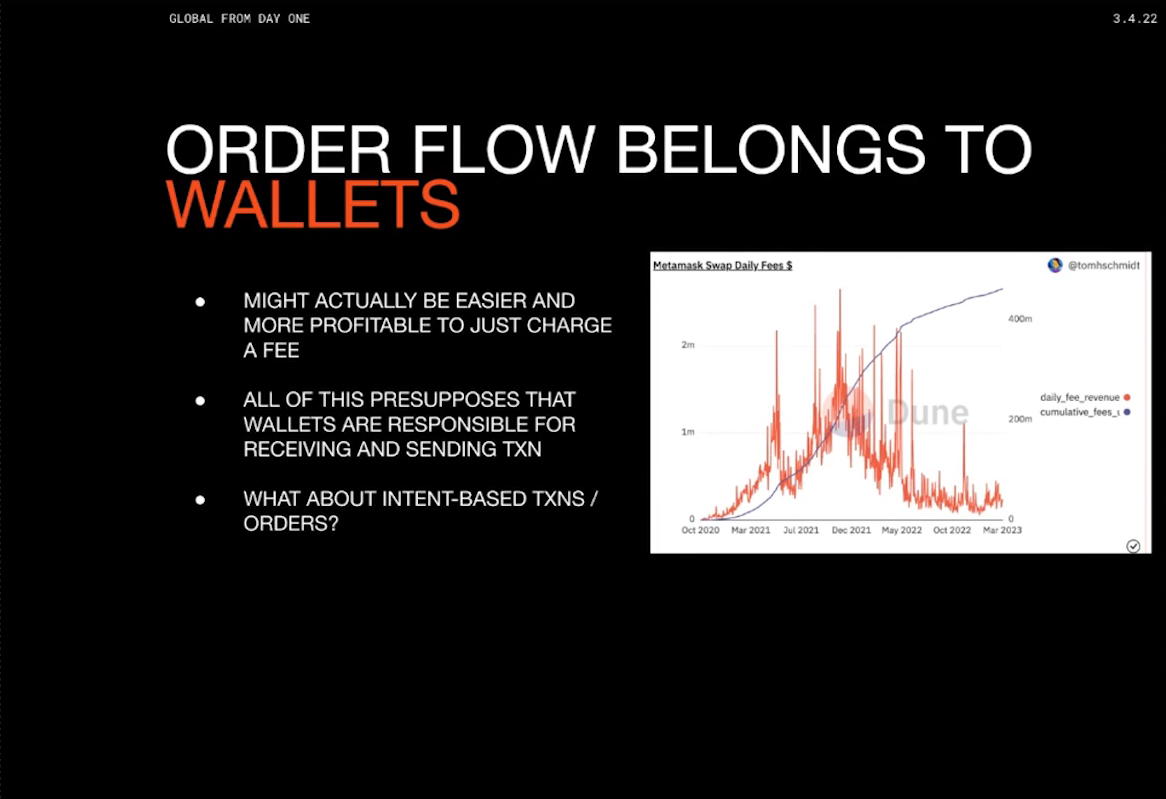

Wallets (9:00)

Wallets have a lot of leverage : they control where signed transactions get sent, and they decide which RPCs get access to the order flow. Furthermore, they need to find alternative sources of revenue (not every wallet has something like MetaMask Swaps)

Order flow is a relevant source of revenue because not all order flow is created equal : order flow from bots/arbs has little extractable MEV, while less price-sensitive users generate more MEV.

Therefore, MetaMask is swallowing up the RPC providers by putting its own layer on top of them, where it can provide many of these UX and security benefits but also potentially extract MEV

But having its own layer on top of RPC providers is centralized. The question is, why should wallets open up MEV extraction instead of doing it themselves ? Spoiler alert : we don't need answers right now

Wallet swap fees are more lucrative than MEV extraction for now, so wallets aren't prioritizing MEV monetization. The discussion assumes users send txs to the wallet and the wallet controls the flow... for now

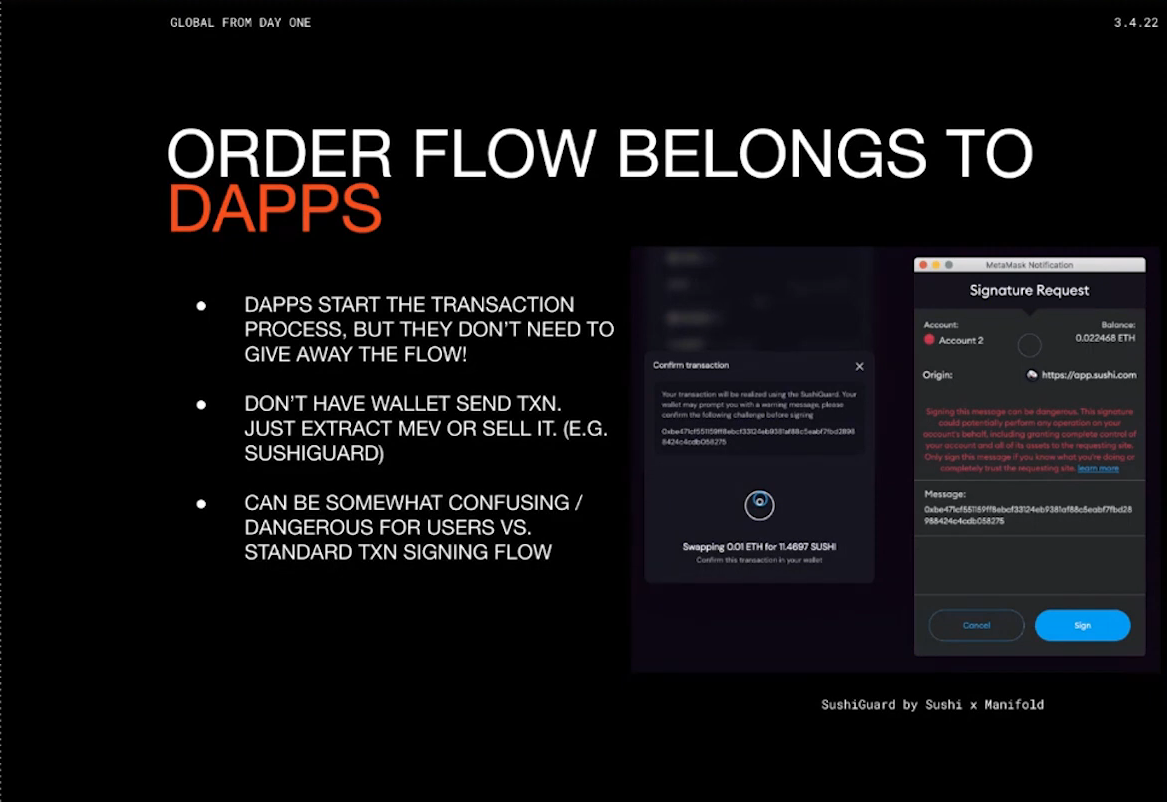

Dapps (11:45)

Dapps can initiate signing without a wallet transaction : the user signs a hash representing an intent, which is not an actual transaction. One example is Sushi with "Sushi Guard", where the user signs an intent and Sushi Guard extracts MEV. The wallet never sees this transaction in this flow, so the RPC providers don't see it either

According to Tom, this is not optimal :

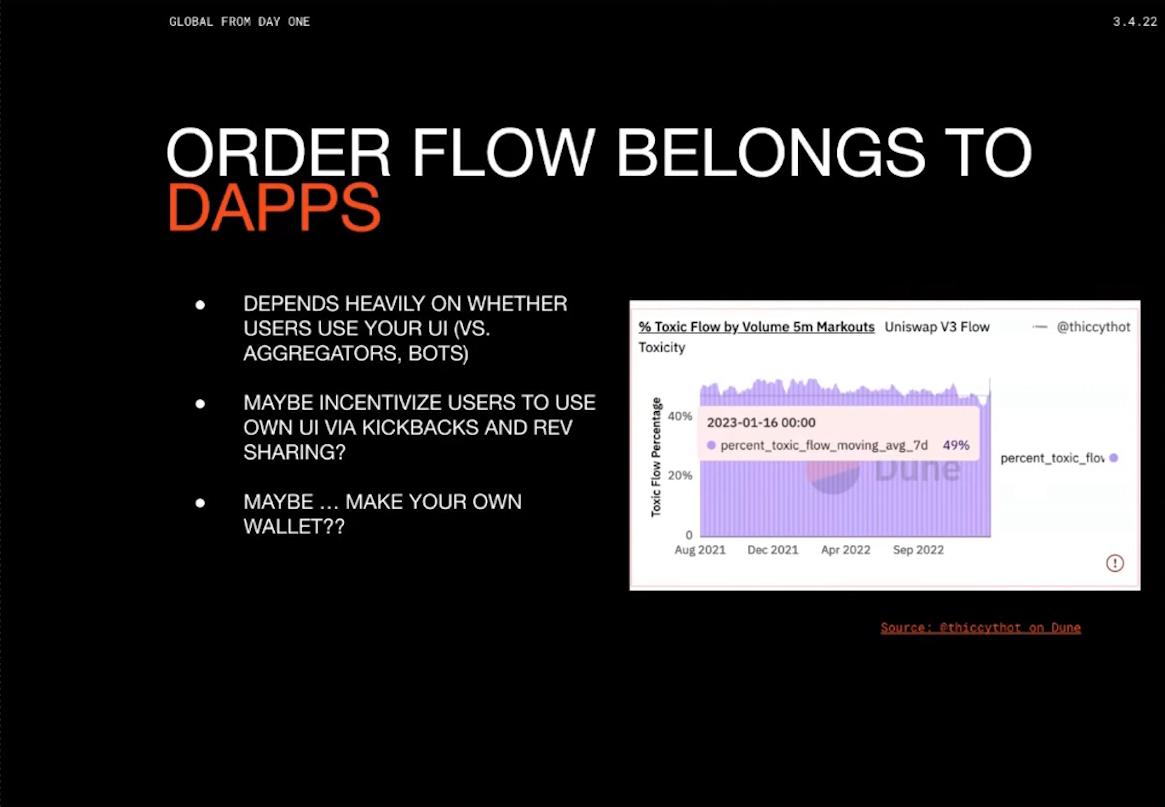

- A message can, given certain conditions, be malicious. It's risky for users to sign things they don't understand.

- We don't control transactions that much if the majority of volume is going through a third-party (aggregator, bot...)

Takeaways (14:15)

- The upstream part of the supply chain has more power, which is why everyone is trying to move upstream. Actors are behaving rationally, not maliciously

- Current MEV systems assume transactions are public, but this assumption is decreasingly correct

- Early data suggests private mempools are a growing problem

- We don't really want a world where centralized entities control more of the stack (MetaMask does self extraction with swaps + becomes a builder)

- We need to make open/fair MEV auctions to avoid centralized private pools, but we can't rely on benevolence

- More research needed to quantify public vs private mempool issues (we're in a similar spot as before MEV Explorer existed)

Open Questions

- Where does extracted MEV ultimately flow (users, wallets, builders) ?

- Do incentives exist for actors to provide open order flow ?

- Would competition lead to sharing MEV with users ?

Intro

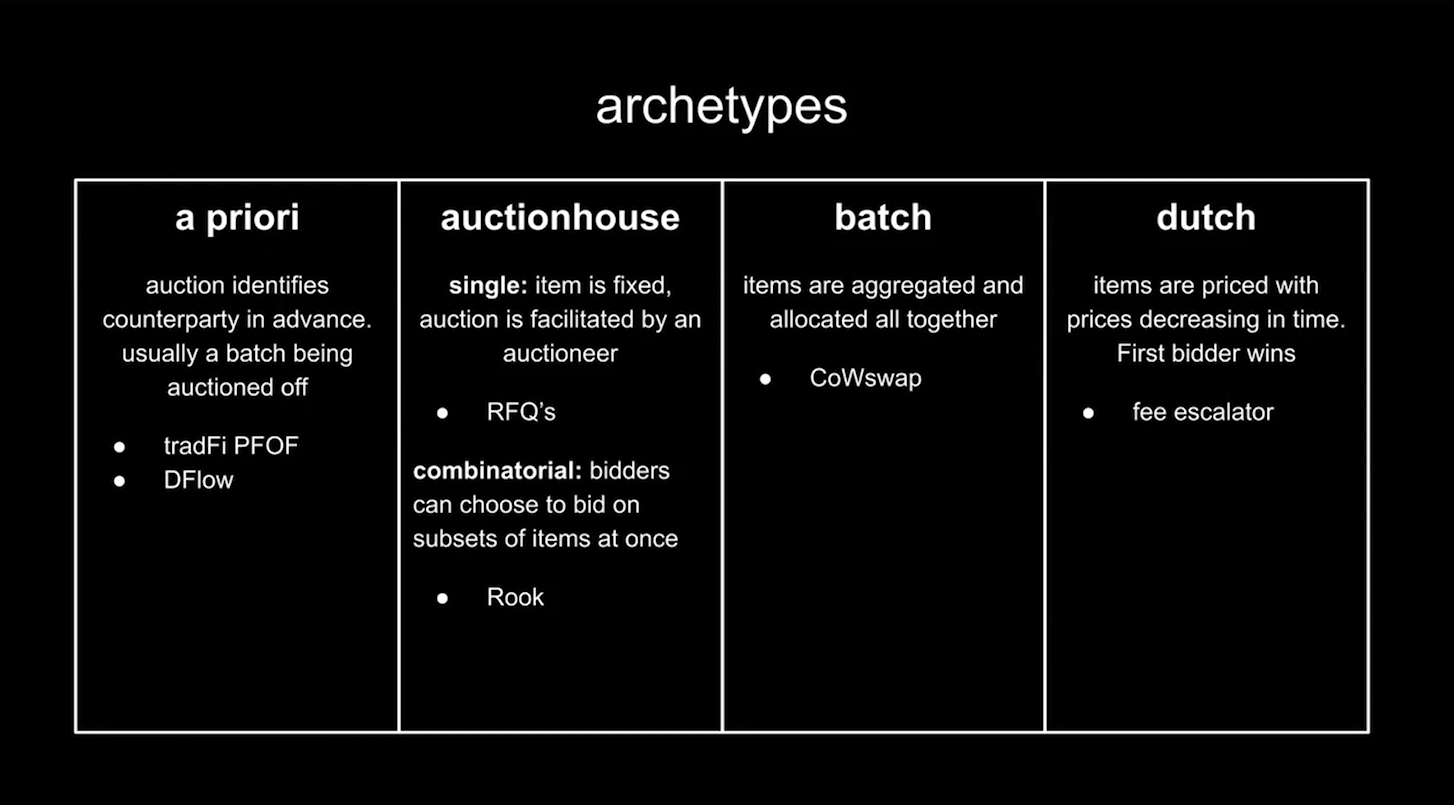

An "auction" can be defined more broadly than just price discovery. It can be a mechanism for discovering the best way to interact with blockchains through competition along dimensions other than price.

Quintus wants to give an overview and direction for exploring a broader design space of auction mechanisms beyond what people have discussed

Auctions (1:40)

Reverse auctions are an example where participants compete on dimensions like speed or reliability rather than price. Auctions are useful here because we don't know the best way to accomplish users' goals
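A reverse auction that competes on more than price can be sketched as scoring each solver's quote. In this hypothetical Python example, each quote has an output amount, a historical fill rate and an expected latency; the score is expected output minus a per-second waiting cost. The scoring rule, solver names and every number are assumptions chosen for illustration.

```python
from dataclasses import dataclass
from typing import List

@dataclass
class Quote:
    solver: str
    output_amount: float  # tokens the user receives if the quote fills
    fill_rate: float      # hypothetical historical probability of filling (0..1)
    latency_s: float      # expected time to inclusion, in seconds

def score(q: Quote, latency_cost_per_s: float) -> float:
    """Expected output minus a penalty for waiting (weights are assumptions)."""
    return q.output_amount * q.fill_rate - latency_cost_per_s * q.latency_s

def reverse_auction(quotes: List[Quote], latency_cost_per_s: float = 0.5) -> Quote:
    """Solvers compete to serve the user; the best-scoring quote wins."""
    return max(quotes, key=lambda q: score(q, latency_cost_per_s))

quotes = [
    Quote("solver_fast", output_amount=99.0, fill_rate=0.99, latency_s=2.0),
    Quote("solver_cheap", output_amount=101.0, fill_rate=0.98, latency_s=12.0),
]
print(reverse_auction(quotes).solver)                          # solver_fast
print(reverse_auction(quotes, latency_cost_per_s=0.0).solver)  # solver_cheap
```

With a waiting cost of 0.5 per second the fast solver wins despite quoting a worse price; with no waiting cost the better-priced quote wins. Choosing the scoring rule is where the broader design space opens up.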

The ideal end state would be having an auction mechanism between users and blockchains that helps users accomplish their goals in an optimized way

In this talk, we will not talk about the entire MEV system, but focus on Order Flow Auctions specifically (the green bar)

Taxonomy (3:15)

- Sealed-bid auctions collect all bids privately in advance and determine the winner in one shot; they resemble traditional finance methods

- Auction houses have variations where people bid on single items. Items often relate to what users are trying to execute on-chain (combinatorial auctions allow bidding on subsets of items)

- Batch auctions are similar to combinatorial auctions but batch composition is fixed

- Dutch auctions start at a high price that decreases over time until someone accepts it
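Two of these formats are easy to contrast in a few lines of Python. The sketch below assumes naive bidders who accept a Dutch auction as soon as the price reaches their valuation, and sealed bids equal to valuations; real bidders shade their bids, and the names and numbers are hypothetical.

```python
from typing import Dict, Optional, Tuple

def dutch_auction(valuations: Dict[str, float], start: float, step: float,
                  floor: float) -> Optional[Tuple[str, float]]:
    """Price falls by `step` each round; the first bidder whose valuation
    reaches the price wins at that price (highest valuation breaks ties)."""
    price = start
    while price >= floor:
        takers = [name for name, v in valuations.items() if v >= price]
        if takers:
            return max(takers, key=lambda name: valuations[name]), price
        price -= step
    return None

def sealed_bid(bids: Dict[str, float], second_price: bool = False) -> Tuple[str, float]:
    """All bids are submitted privately at once; the highest bid wins and pays
    its own bid (first-price) or the runner-up's bid (second-price)."""
    ranked = sorted(bids.items(), key=lambda kv: kv[1], reverse=True)
    winner, top = ranked[0]
    if second_price and len(ranked) > 1:
        return winner, ranked[1][1]
    return winner, top

vals = {"searcher_a": 72.5, "searcher_b": 80.0}
print(dutch_auction(vals, start=100.0, step=5.0, floor=0.0))  # ('searcher_b', 80.0)
print(sealed_bid(vals))                                       # ('searcher_b', 80.0)
print(sealed_bid(vals, second_price=True))                    # ('searcher_b', 72.5)
```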