MEV Workshop at SBC 23 Part 4

Summary of the MEV Workshop at the Science of Blockchain Conference (SBC) 2023. The videos covered here are about research problems for the next generation of MEV solutions.

Intro

TCB stands for "Trusted Computing Base." It refers to the parts of a secure system that are responsible for maintaining security. Recovery means restoring security after a failure or vulnerability.

So TCB Recovery is the process of restoring the security of a system after its TCB has been compromised in some way.

Intel SGX (Software Guard Extensions) provides trusted hardware enclaves on Intel CPUs. Enclaves let sensitive computations run in a protected environment isolated from the main operating system.

But SGX has suffered recent vulnerabilities, such as the Downfall attack. These issues motivate a discussion of how TCB Recovery applies in this context.

This talk explains TCB Recovery in detail as it relates to SGX, because the speaker believes the concept is very important yet poorly understood.

Recap

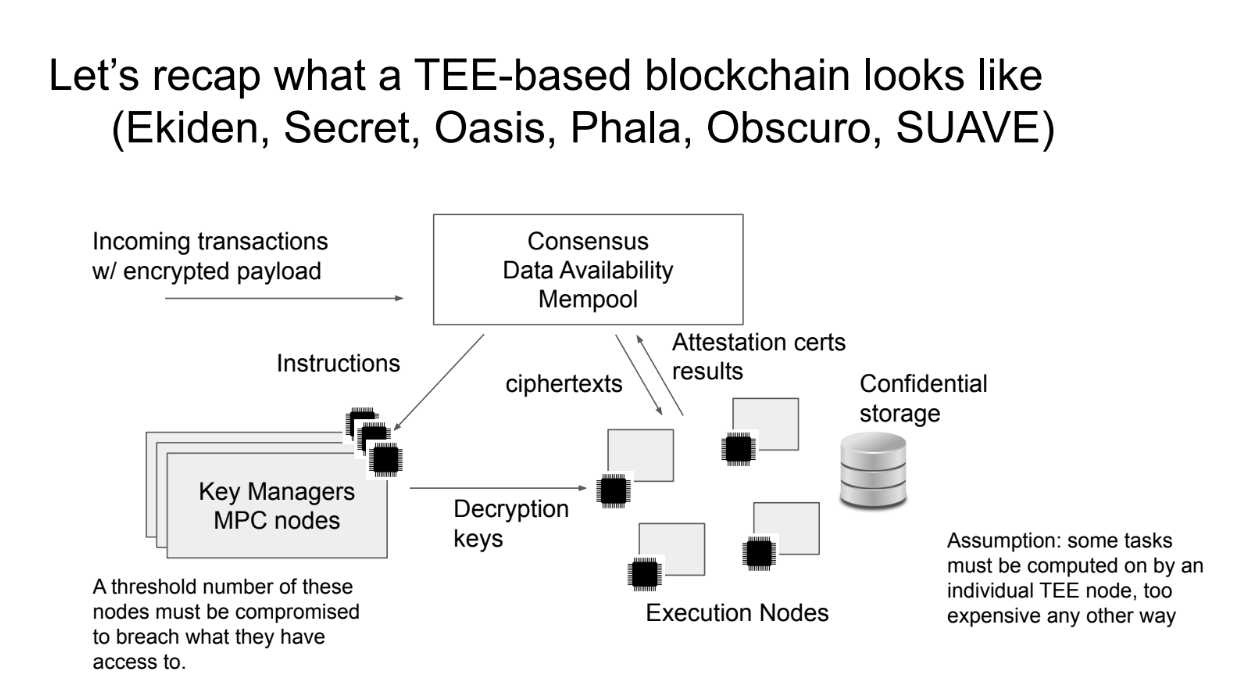

What does a Trusted Execution Environment (TEE) system look like? (1:40)

A blockchain architecture that uses trusted execution environments (TEEs) like Intel SGX typically has these main components:

- Public blockchain for consensus: nodes run a consensus protocol like proof-of-stake to validate transactions. TEEs are not required for consensus.

- TEE nodes for private data: these are nodes with Intel SGX or another TEE. They can store and compute on sensitive data without exposing it.

There are two main ways to use enclaves: threshold multiparty computation across several enclave nodes for key management, which is more secure because an attacker would need to compromise multiple nodes; or individual enclave nodes for performance when computing on private data.

The general flow is:

- Consensus protocol handles policies, node registration, transactions

- Key manager TEEs hold root keys and secrets

- Execution TEEs run computations on private data
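The threshold option for key management can be made concrete with Shamir secret sharing: a root key is split across enclave nodes so that any `threshold` of them can reconstruct it, while fewer shares reveal nothing about it. This is a minimal sketch under stated assumptions: the prime field, the share counts, and the function names are illustrative, and a real key-manager committee would rely on a vetted threshold-cryptography library rather than code like this.

```python
import secrets

# Illustrative field: the Mersenne prime 2^127 - 1 (an assumption, not a spec value).
PRIME = 2**127 - 1


def split_secret(secret: int, threshold: int, num_shares: int) -> list[tuple[int, int]]:
    """Split `secret` into points on a random polynomial of degree threshold - 1."""
    if not 0 <= secret < PRIME:
        raise ValueError("secret must be an element of the field")
    # coefficients[0] is the secret; the higher coefficients are random.
    coefficients = [secret] + [secrets.randbelow(PRIME) for _ in range(threshold - 1)]
    shares = []
    for x in range(1, num_shares + 1):
        y = 0
        # Horner's rule: evaluate the polynomial at x.
        for coeff in reversed(coefficients):
            y = (y * x + coeff) % PRIME
        shares.append((x, y))  # one share per enclave node
    return shares


def recover_secret(shares: list[tuple[int, int]]) -> int:
    """Lagrange interpolation at x = 0 recovers the constant term (the secret)."""
    secret = 0
    for i, (x_i, y_i) in enumerate(shares):
        numerator, denominator = 1, 1
        for j, (x_j, _) in enumerate(shares):
            if i != j:
                numerator = (numerator * -x_j) % PRIME
                denominator = (denominator * (x_i - x_j)) % PRIME
        secret = (secret + y_i * numerator * pow(denominator, -1, PRIME)) % PRIME
    return secret
```

With `threshold=2` and three enclave nodes, any two shares recover the root key, while a single compromised enclave's share on its own says nothing about it.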

The problem with SGX (3:50)

SGX enclaves are appealing for performance, collusion resistance, etc., but SGX has a history of major security failures that break its security goals:

- CacheOut

- SGAxe

- AEPIC leak

- Downfall...

So architects need to design systems that can recover security after SGX failures.

WTF is TCB recovery?

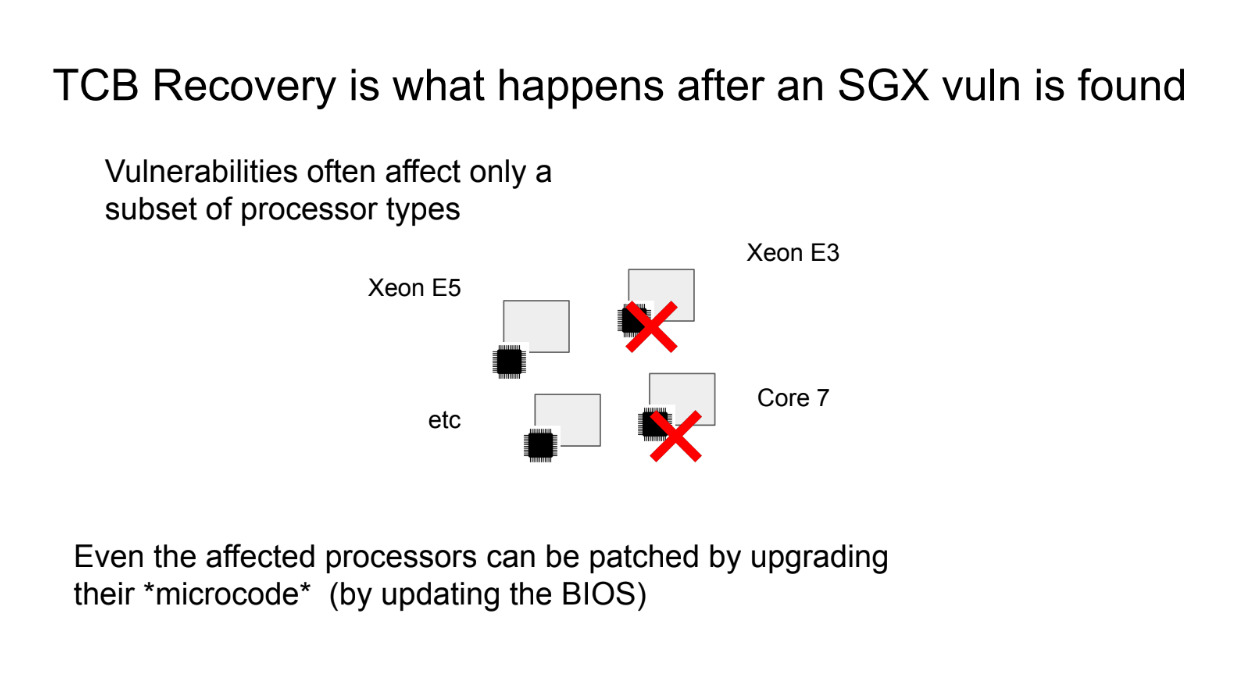

What happens after an SGX vuln is found (4:35)

The concept of TCB Recovery relates to how blockchain systems that use Intel SGX or other trusted execution environments (TEEs) can recover security when new vulnerabilities are discovered in the TEEs.

Some more detail (5:45)

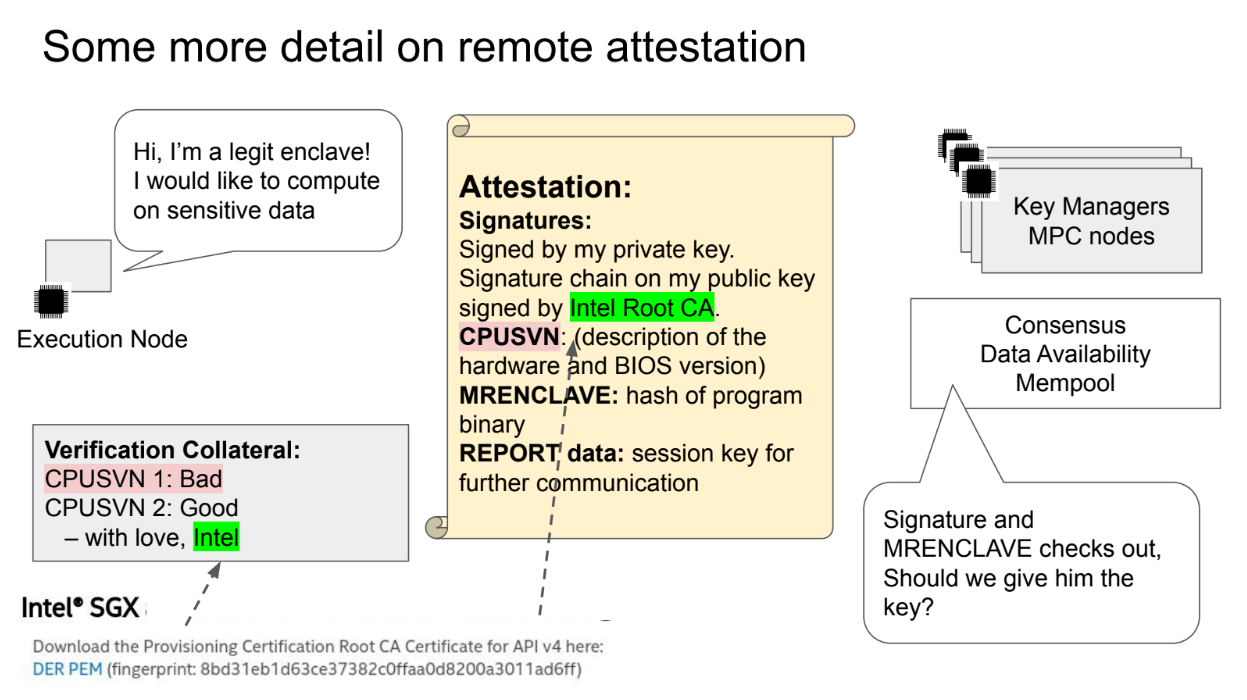

When an SGX enclave node wants to join the network, it generates an attestation report to prove it is running valid software in a real enclave.

What the report contains:

- A certificate chain going back to Intel's root keys

- The enclave's public key

- The CPU security version number (details on hardware/firmware)

- The MR_ENCLAVE measurement (hash of the enclave software)

- Custom report data like the node's public key

Existing nodes in the network verify the attestation report:

- Check the certificate chain is valid back to Intel

- Check the CPU security version is not known vulnerable

- Check the MR_ENCLAVE matches expected software

- Verify custom data like the public key

If the report is valid, they approve the new node and provision secrets/data to it.
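The verification steps above can be sketched as a small policy check. This is a simplified model, not Intel's actual DCAP verification flow: the `AttestationReport` and `Policy` fields, the single-integer security version, and `verify_report` are hypothetical, and the certificate-chain check is reduced to a boolean that a real verifier would compute from Intel's signed collateral.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AttestationReport:
    # Hypothetical, simplified view of an SGX attestation report.
    cert_chain_valid: bool  # stands in for validating the chain back to Intel's root key
    cpu_svn: int            # CPU security version number (hardware/firmware level)
    mr_enclave: str         # hash of the enclave software
    report_data: bytes      # custom data, e.g. the node's public key


@dataclass(frozen=True)
class Policy:
    min_cpu_svn: int          # versions below this are treated as known-vulnerable
    expected_mr_enclave: str  # measurement of the approved enclave build


def verify_report(report: AttestationReport, policy: Policy, node_pubkey: bytes) -> list[str]:
    """Return the failed checks; an empty list means the node can be admitted."""
    failures = []
    if not report.cert_chain_valid:
        failures.append("certificate chain does not lead back to Intel")
    if report.cpu_svn < policy.min_cpu_svn:
        failures.append("CPU security version is known to be vulnerable")
    if report.mr_enclave != policy.expected_mr_enclave:
        failures.append("MR_ENCLAVE does not match the expected software")
    if report.report_data != node_pubkey:
        failures.append("report data does not bind the node's public key")
    return failures
```

Only when `verify_report` returns an empty list would existing nodes provision secrets to the newcomer; returning the list of failures rather than a bare boolean makes rejections easier to audit.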

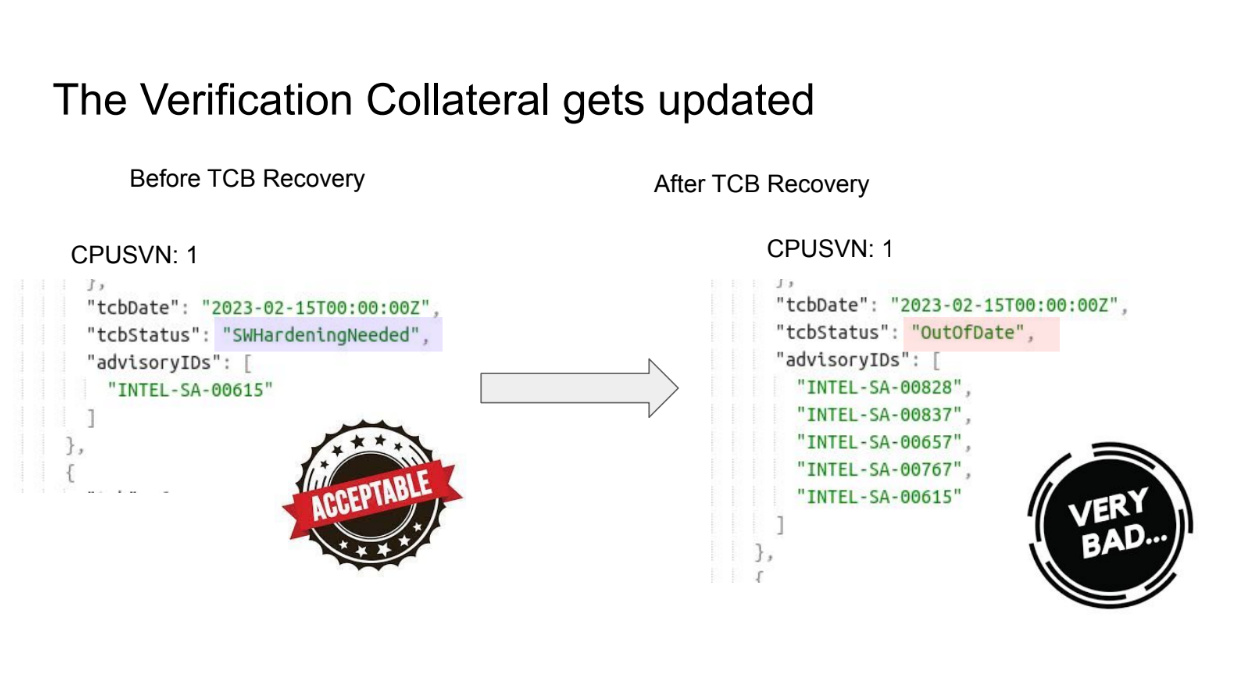

The verification collateral gets updated (8:00)

When an SGX vulnerability is disclosed, Intel provides microcode patches and updates the CPU security version, and blockchain nodes update their policies to reject old vulnerable versions and allow new patched ones.

This allows restoring trust in SGX enclaves after patches are applied through updated attestation policies.
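The policy update can be modelled as raising the minimum accepted CPU security version and re-checking every registered node. This is a hypothetical sketch: the node ids, the integer versions, and `apply_tcb_recovery` are illustrative, and real SGX TCB levels are compared component by component rather than as a single number.

```python
def apply_tcb_recovery(nodes: dict[str, int], new_min_svn: int) -> tuple[list[str], list[str]]:
    """Split nodes into (still_trusted, must_patch) after raising the minimum version.

    `nodes` maps a hypothetical node id to the CPU security version it last attested with.
    """
    still_trusted = sorted(node for node, svn in nodes.items() if svn >= new_min_svn)
    must_patch = sorted(node for node, svn in nodes.items() if svn < new_min_svn)
    return still_trusted, must_patch
```

Nodes in `must_patch` would need to apply Intel's microcode update and re-attest before receiving any new secrets.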

What to do when an SGX vulnerability is found? (9:00)

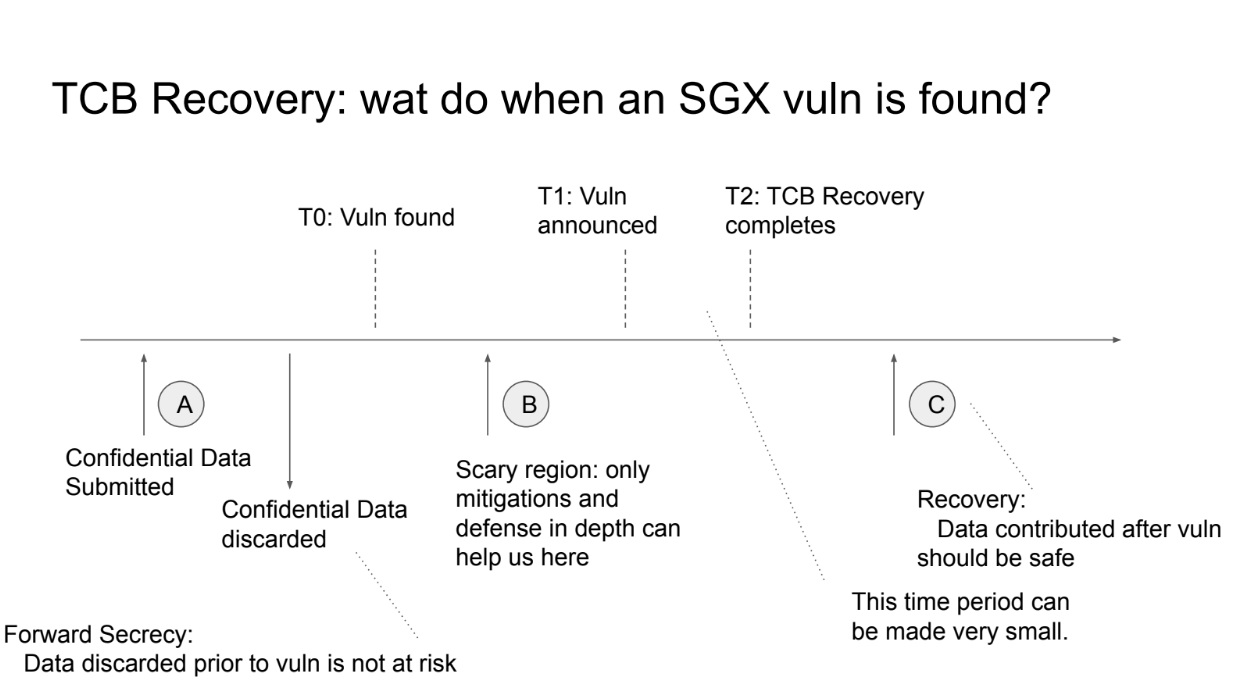

- At time A, sensitive data is submitted to the system

- At time T0, a vulnerability is found by attackers but not public yet

- Forward secrecy: enclaves delete the keys for data once it is discarded, so data deleted before T0 stays protected

- Data between T0 and public disclosure is at risk since attackers know the vulnerability

- After public disclosure, Intel provides patches and updates to block vulnerable configurations

- After TCB Recovery completes at T2, new data under new keys should be secure again

Blockchain systems also need to update policies to reject old versions and allow new patched versions. This should happen quickly after disclosure to minimize the risky window.
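The timeline reasoning can be written as a small classifier for a single piece of data. The parameter names (`submitted_at`, `deleted_at`, `t0`, `t2`) are assumptions mapping onto the timeline above, with `deleted_at=None` meaning the enclave still holds the key; times are plain numbers for illustration, and the whole window from T0 until recovery at T2 is treated as risky.

```python
from typing import Optional


def classify_exposure(submitted_at: float, deleted_at: Optional[float], t0: float, t2: float) -> str:
    """Classify data against the vulnerability timeline.

    t0: attackers privately learn of the vulnerability.
    t2: TCB recovery completes and fresh keys are in use.
    deleted_at: when the enclave deleted the data's key, or None if never.
    """
    if submitted_at >= t2:
        return "secure"  # encrypted under post-recovery keys
    if deleted_at is not None and deleted_at < t0:
        return "forward-secret"  # key was gone before attackers could use the flaw
    return "at risk"  # key was still live at some point in [t0, t2)
```

The classifier makes the point of the slide explicit: forward secrecy only helps data whose keys were deleted before T0, so shortening the gap between disclosure and T2 is what limits exposure for everything else.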

Observations from Downfall (11:05)

Intel quickly made available the information needed to identify vulnerable configurations, but bugs (such as case-sensitivity issues) made it inconsistent. Not all systems were quick to block vulnerable configurations.

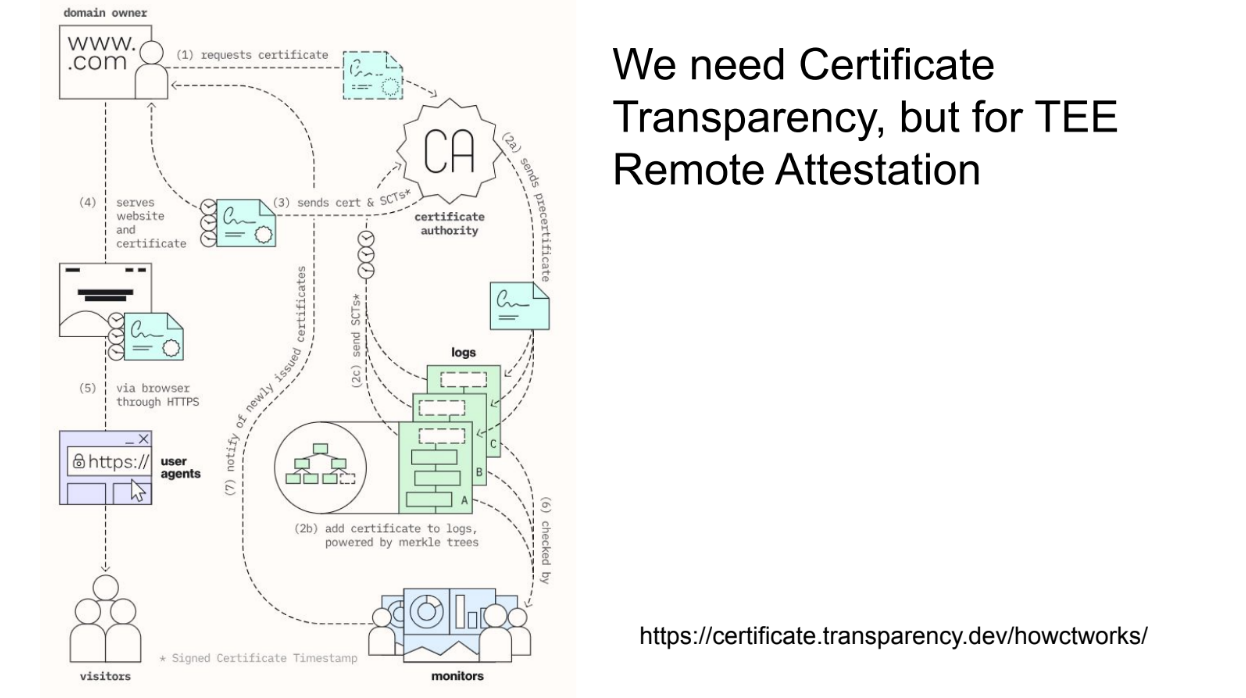

Need for Certificate Transparency (12:10)

Andrew therefore suggests a public mirror/repository for Intel's attestation collateral packets. This is like Certificate Transparency, where browsers check that certificates have been logged before trusting a website. It creates accountability for certificate authorities (CAs), since invalid certificates get recorded.

Similarly, a public log of Intel's attestation packets would:

- Provide a consistent source of info on vulnerabilities

- Catch any errors or misinformation from Intel

- Enable accountability if Intel incorrectly flags configurations

- Allow blockchain systems to make informed decisions on what to trust
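A minimal sketch of such a log, assuming a simple hash chain rather than the Merkle trees that real Certificate Transparency logs (RFC 6962) use. `CollateralLog`, `replay_head`, and the packet contents are hypothetical; the point is that anyone holding an earlier head hash can detect if a logged packet is later altered or removed.

```python
import hashlib


class CollateralLog:
    """Append-only, hash-chained log of attestation collateral packets (illustrative)."""

    def __init__(self) -> None:
        self.entries: list[bytes] = []
        self.heads: list[str] = []  # heads[i] commits to entries[0..i]

    def append(self, packet: bytes) -> str:
        previous = self.heads[-1] if self.heads else ""
        head = hashlib.sha256(previous.encode() + packet).hexdigest()
        self.entries.append(packet)
        self.heads.append(head)
        return head

    def contains(self, packet: bytes) -> bool:
        # A verifier would refuse collateral that was never published in the log.
        return packet in self.entries


def replay_head(entries: list[bytes]) -> str:
    """Recompute the head from raw entries; a mismatch with a saved head reveals tampering."""
    head = ""
    for packet in entries:
        head = hashlib.sha256(head.encode() + packet).hexdigest()
    return head
```

A blockchain node could store the head it last saw and, on each update, replay the log to confirm that Intel's earlier packets were not silently rewritten.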

We are missing best practices (14:00)

- Mitigate: use threshold cryptography where possible, so that an attacker must break multiple enclaves, not just one. Restrict physical access to enclaves by locating them in reputable cloud data centers. Require frequent remote attestation from enclaves to maintain trust.

- Compartmentalization: instead of giving someone the keys to the entire house, only give them the key to the room they need. Even if they misuse it, they can't access the entire house.

- Key Ratcheting: rotate cryptographic keys immediately after patching vulnerabilities. Also employ forward secrecy by having enclaves prove they deleted old keys.

- Key Rotation: like changing the locks on your doors regularly, so that even someone holding an old key can no longer use it. But sometimes changing these "locks" or "keys" requires a major system update.

- Transparency: these systems should be clear about how they operate and let users know about their security practices.
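The key-ratcheting idea can be sketched with a one-way hash ratchet: each new key is derived from the previous one with HMAC-SHA256 and the old key is overwritten, so compromising today's key does not reveal yesterday's. This generic forward-secrecy construction is chosen for illustration only; the talk does not specify a scheme, and `RatchetingKey` is a hypothetical name. Proving deletion to other nodes, as the talk suggests, would additionally require attestation.

```python
import hashlib
import hmac


class RatchetingKey:
    """Holds only the current key; advancing the ratchet discards the previous one."""

    def __init__(self, seed: bytes) -> None:
        self._key = seed
        self.epoch = 0

    def current(self) -> bytes:
        return self._key

    def advance(self) -> bytes:
        # One-way step: the new key is derivable from the old one, never the reverse.
        # Python cannot guarantee the old bytes are wiped from memory; an enclave
        # implementation would zeroize them explicitly.
        self._key = hmac.new(self._key, b"ratchet", hashlib.sha256).digest()
        self.epoch += 1
        return self._key
```

Calling `advance()` right after a patch is applied corresponds to the "rotate immediately after patching" advice: data sealed under earlier epochs stays safe as long as those keys were really discarded.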

Open Questions (17:30)

One specific area of interest is combining the best parts of two technology areas:

- Hardware enclaves, which are a type of secure area inside a computer where data can be safely processed.

- Fully homomorphic encryption (FHE) and zero-knowledge proofs (ZKPs), which are advanced techniques for ensuring data privacy and security

Andrew is concerned about relying solely on big tech companies like Intel and AMD for these solutions. Instead, blockchain communities could design their own custom hardware for smart contract execution and tamper resistance.

There are still open design problems in managing threshold vs non-threshold enclaves and key management.

Even if technologies like SGX function correctly, attackers might gather information through other means (side channels). Andrew likes "oblivious algorithms," which are designed so that their behavior, such as memory access patterns, gives away no hints about the data being processed.

Conclusion (19:20)

TEEs are like secure rooms in a computer where data can be processed safely. They're not easy to use, but sometimes they're the only option when you need very high security, especially for applications where many users interact with shared data without knowing its actual values.

Even though SGX had some issues, Andrew believes it's still worthwhile to build systems around it. There's more work to do in ensuring these systems are safe, and those who design decentralized systems (systems where control is spread out rather than in one place) play a key role in this.

Q&A

You mentioned that during the Downfall disclosure, new information was released at the same time the vulnerability was made public. Can you explain that further? (21:00)

When the AEPIC leak vulnerability was made public, the necessary information to use certain functionalities securely wasn't made available immediately. Typically, there's a wait time after a vulnerability is disclosed before you can safely use certain features.

However, for Downfall the info was available immediately, but it wasn't consistent. Furthermore, there was a 12-month period before the public knew about this vulnerability, longer than the 9-month period for the AEPIC leak.

What are some ways to prevent physical tampering in hardware designs? (22:30)

While there are many secure hardware options out there, like the secure element in iPhones, they don't always have "remote attestation" (a way of verifying the hardware's trustworthiness over a network), which is crucial for certain applications.

It's a challenge to combine networking and remote attestation effectively.

How can we trust the manufacturing process of these secure hardware devices, ensuring they're made according to the open source designs? (23:30)

Open source designs tell us what the hardware should look like, but they don't guarantee that the actual hardware follows those designs.

One idea is to design hardware that can be analyzed (even if it destroys it) to ensure it matches the design.

To counteract the loss from destruction, many units can be made, and a random selection can be destructively analyzed. If most pass, it boosts confidence in the rest. However, this idea is just a starting point and needs more development.
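The sampling idea rests on a simple probability. Assuming `defective` of `total` units deviate from the design and `sample` units are picked uniformly at random for destructive analysis, the chance that every sampled unit passes is hypergeometric. The function name and numbers are illustrative, and the model simplifies "most pass" to "all pass".

```python
from math import comb


def prob_all_samples_pass(total: int, defective: int, sample: int) -> float:
    """Probability that a uniformly random sample contains no defective unit."""
    if sample > total:
        raise ValueError("sample cannot exceed total")
    # Ways to pick only good units, divided by all ways to pick the sample.
    return comb(total - defective, sample) / comb(total, sample)
```

For example, `prob_all_samples_pass(1000, 100, 30)` is below 5%, so if 30 randomly chosen units all match the design, a 10% tampering rate in the batch becomes unlikely.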

Do we always have to trust Intel as the root of trust for secure systems? (25:45)

For certain systems like SGX, yes. But in the future, using multiple trusted entities, like Intel and Microsoft, together might be a better approach to boost trust and security.

Intro

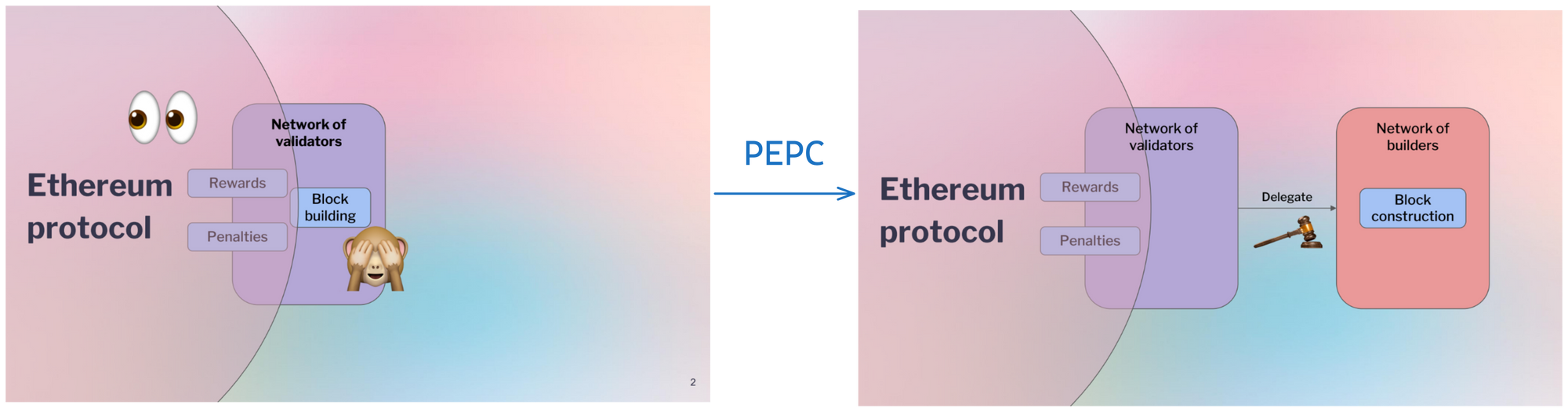

What is PEPC (Protocol-Enforced Proposer Commitments)? (0:20)

Currently, Ethereum has some control over validators. However, Ethereum doesn't see everything validators do, especially when they build new blocks of transactions to add to the chain.

This has led validators to separate their roles. There are "proposers" who are validators staked in Ethereum, and "builders" who actually construct the blocks on behalf of proposers. Builders do this because block building requires sophistication to optimize profits.

The Topic (1:25)

Barnabé worked on this and felt there could be dangers with validators outsourcing block building.

The Robust Incentives Group (RIG) doesn't think Ethereum should simply accept this separation of roles and enshrine it in the protocol. Doing so would force acceptance of builders and of the specific way they interact with proposers.

Barnabé worries that bundling all block building into a single builder could centralize it too much. Before Devcon, he wrote a long post explaining his concerns about the bundled auction approach, but not many people read it and the reaction was muted.

Today's talk (2:50)

PEPC Intro

Disclaimers (3:15)

PEPC is not a real system yet. It is still just an idea the researchers are thinking about.

PEPC cannot exist until the enshrined Proposer-Builder Separation (ePBS) is real, and ePBS is not real yet either. Many people are still working on making ePBS happen.

The good news is PEPC could be tested without making official protocol changes. The speaker calls these "diet PEPC" versions. They allow trying out PEPC flexibility without invasive protocol changes.

PEPC is still an active research topic. It is not on the Ethereum roadmap or set to definitely happen. There could be good ideas from PEPC research that are eventually implemented, but nothing is certain yet.

Coke is better anyways (the drinkable one) :)

Intents of PEPC (4:55)

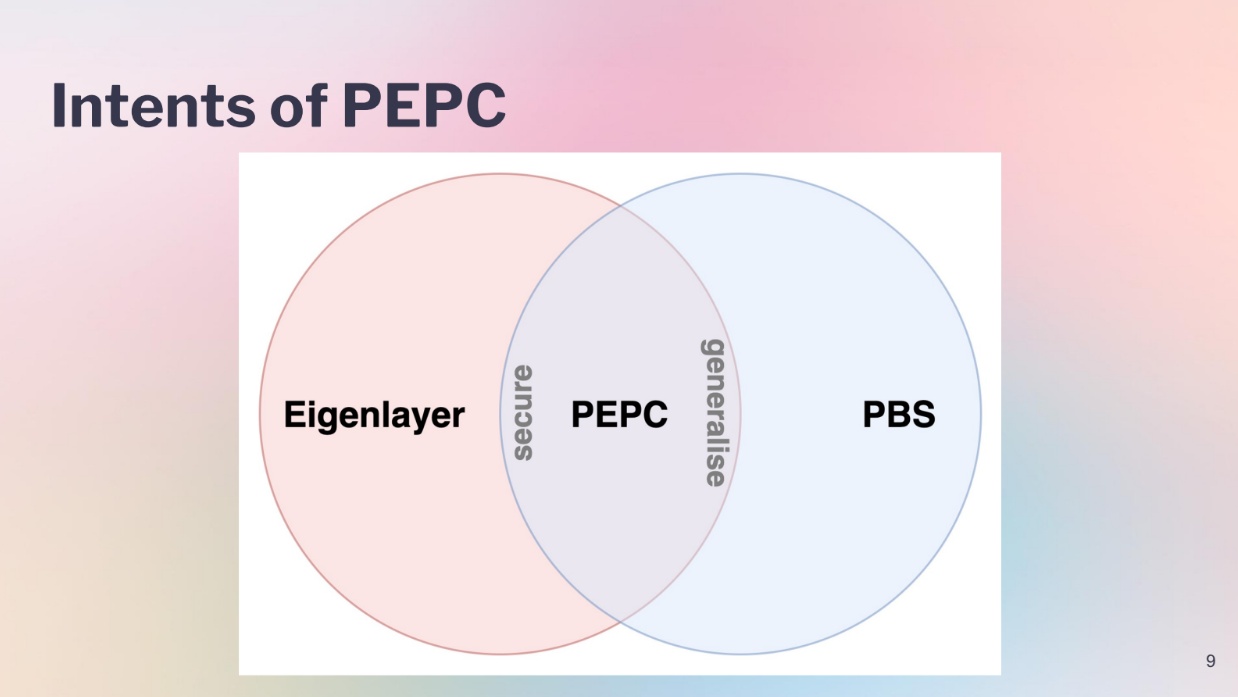

In general, PEPC aims to :

- Generalize ePBS by allowing more flexible proposer-builder interactions, like having multiple builders work on parts of a block.

- Improve security for some use cases (like Eigenlayer) by preventing invalid block proposals, not just slashing after.

PEPC sits in between fully generalizing ePBS and fully replacing Eigenlayer. It takes some ideas from each.

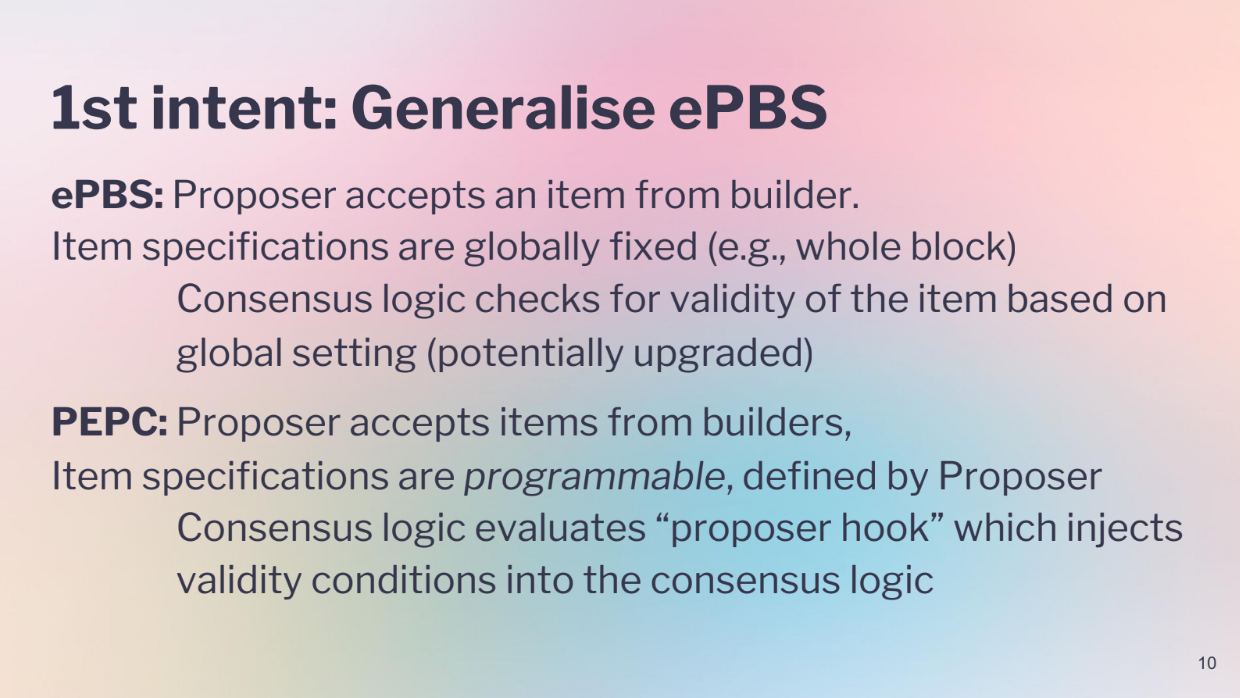

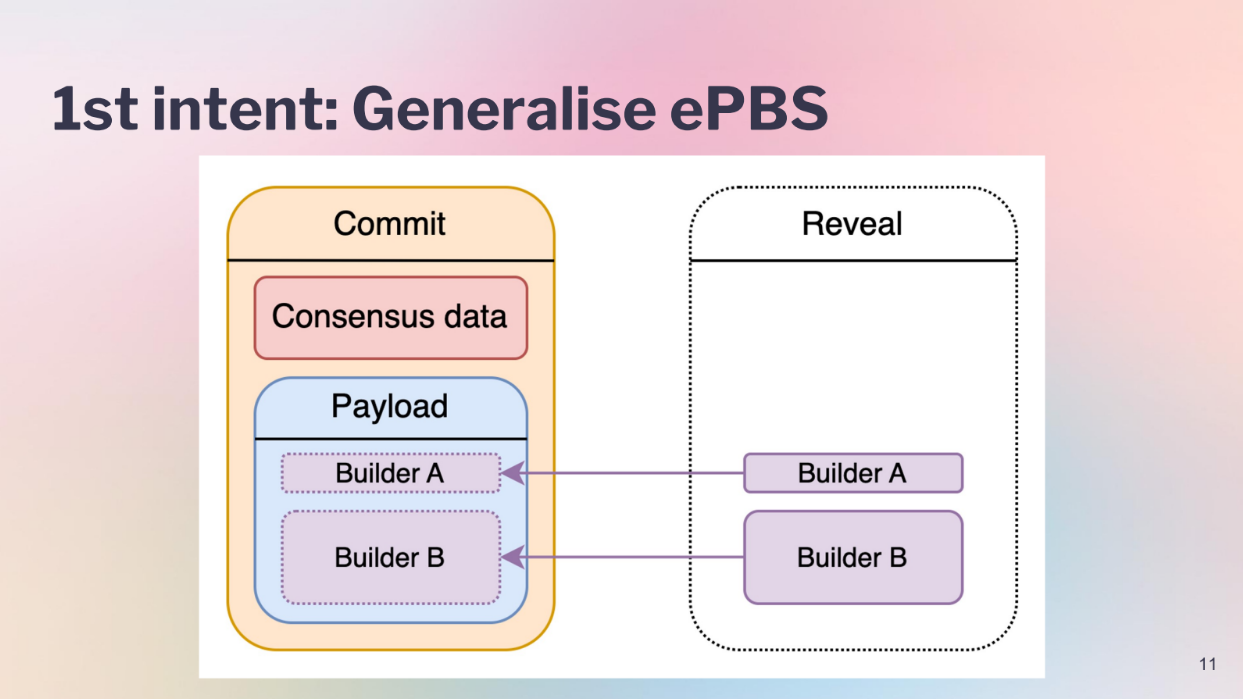

Generalize ePBS (7:45)

In the current ePBS design, the consensus logic expects full blocks from builders, and this rule is set globally for all proposers.

PEPC proposes instead having a generic evaluation function. This would allow the proposer to specify conditions for a valid block at creation time. If those conditions aren't met, the block is ignored.

Barnabé calls this a "proposer hook": a way for proposers to inject some programmability into Ethereum's state transition function.
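A rough model of the proposer hook: block validity becomes the usual protocol checks AND whatever predicates the proposer committed to. Everything here (`Block`, `base_protocol_checks`, `is_valid`, `includes_tx`) is hypothetical; in the talk this is an idea, not a specified interface.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Block:
    builder: str
    transactions: list[str] = field(default_factory=list)


# A commitment is modelled as any predicate over a candidate block (an assumption).
Commitment = Callable[[Block], bool]


def base_protocol_checks(block: Block) -> bool:
    # Placeholder for the ordinary consensus and execution validity rules.
    return len(block.transactions) > 0


def is_valid(block: Block, commitments: list[Commitment]) -> bool:
    """PEPC-style validity: protocol rules plus every proposer commitment must hold."""
    return base_protocol_checks(block) and all(c(block) for c in commitments)


def includes_tx(tx: str) -> Commitment:
    """Example commitment: the block must contain a specific transaction."""
    return lambda block: tx in block.transactions
```

Because a block that breaks a commitment is simply invalid, there is nothing to slash afterwards; this is the "pessimistic" enforcement the talk contrasts with Eigenlayer.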

Let's give an example of a two-slot PBS design:

- In slot 1, the proposer makes a "commit block" that contains consensus data and a template payload, committing to use parts from Builder A and B.

- In slot 2, the builders reveal their parts, which are matched to the template.
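The two-slot flow is essentially commit-reveal. In this sketch, whose names and hashing scheme are assumptions rather than part of any spec, the slot-1 template records which builders' parts appear, in what order, and the hash each builder committed to; slot 2 accepts the revealed parts only if they match those hashes.

```python
import hashlib


def digest(part: bytes) -> str:
    return hashlib.sha256(part).hexdigest()


def make_template(order: list[str], commitments: dict[str, str]) -> list[tuple[str, str]]:
    """Slot 1: fix which builders' parts appear, in what order, and their committed hashes."""
    return [(builder, commitments[builder]) for builder in order]


def assemble_payload(template: list[tuple[str, str]], reveals: dict[str, bytes]) -> bytes:
    """Slot 2: stitch revealed parts together, rejecting any that do not match the template."""
    payload = b""
    for builder, expected in template:
        part = reveals.get(builder)
        if part is None or digest(part) != expected:
            raise ValueError(f"builder {builder} did not reveal the committed part")
        payload += part
    return payload
```

Builders only hand over hashes in slot 1, so their contents stay private until the proposer has already committed to using them.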

From Optimism to Pessimism (9:50)

Barnabé then discusses Eigenlayer, which lets validators opt into commitments and slashes them if they don't follow them. He sees three Eigenlayer use cases:

- Economic security from high value slashing

- Decentralization from multiple independent entities

- Proposers committing to block contents

PEPC aims to improve the third use case, proposers committing to block contents, by making proposer commitments unbreakable: blocks that violate a commitment are disallowed, rather than the proposer being slashed after the fact.

PEPC news

Some ways to test PEPC concepts without full protocol changes:

PEPC-Boost (11:25)

- Use the existing MEV-Boost relay infrastructure

- Have relays check validity of proposer commitments

- Motivated by the fact that the top of the block is especially valuable for MEV, such as sandwich attacks

- Could divide blocks into a top section auctioned for MEV, and a generic rest built by any builder

- This might make auctions more competitive and force unbundling of builder functions
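A sketch of the check a PEPC-Boost relay might run under the split described above: the block must begin with exactly the transactions won in the top-of-block auction, and whatever follows can come from any builder. The function name and the list-of-transaction-ids block model are assumptions for illustration.

```python
def relay_accepts(block_txs: list[str], top_of_block: list[str]) -> bool:
    """Accept only if the block starts with the auctioned top-of-block section, unchanged."""
    return block_txs[: len(top_of_block)] == top_of_block
```

The check is a cheap prefix comparison, so in this simplified model it adds little work on top of the validation relays already perform.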

PEPC-DVT (13:00)

- Use Distributed Validator Technology (DVT) nodes to encode commitments

- DVT lets validators have multi-sig control over their keys

- The DVT network signs blocks only if commitments are met

- Prevents proposers making invalid blocks
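PEPC-DVT can be pictured as a k-of-n signing rule in which each operator first checks the commitment. This sketch does no real cryptography (DVT clusters in practice combine threshold BLS signature shares); operators are plain entries in a dict, and `threshold` and the honesty flags are assumed parameters.

```python
from typing import Callable

Block = list[str]  # a block modelled as a list of transaction ids (simplification)


def collect_shares(block: Block, commitment: Callable[[Block], bool], operators: dict[str, bool]) -> list[str]:
    """Operators that would contribute a signature share.

    `operators` maps a hypothetical operator id to whether it honestly enforces the
    commitment; a dishonest operator signs anything.
    """
    return [op for op, honest in operators.items() if not honest or commitment(block)]


def block_signed(block: Block, commitment: Callable[[Block], bool], operators: dict[str, bool], threshold: int) -> bool:
    """The validator's signature exists only if at least `threshold` shares are collected."""
    return len(collect_shares(block, commitment, operators)) >= threshold
```

As long as fewer than `threshold` operators are dishonest, a block that breaks the commitment can never collect enough shares, which is how the DVT network prevents invalid proposals.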

PEPC-Capture (14:00)

- Uses "parallel proposer" idea from Dankrad for commit blocks

- Multiple proposers make commit blocks and bid on their expected value

- Attesters pick highest value commit block to finalize

- Provides credible signal of block value to enable MEV burn
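The selection step can be sketched as follows, assuming each commit block carries a bid and attesters simply pick the highest one. The `CommitBlock` type, the tie-breaking rule, and treating the winning bid as the amount to burn are simplifications for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CommitBlock:
    proposer: str
    bid: int  # proposer's claimed value of the block (units are an assumption)


def select_commit_block(candidates: list[CommitBlock]) -> tuple[CommitBlock, int]:
    """Attesters pick the highest bid; that bid serves as the credible value signal to burn."""
    # Ties are broken by proposer id so every attester picks the same block (an assumption).
    winner = max(candidates, key=lambda c: (c.bid, c.proposer))
    return winner, winner.bid
```

Because parallel proposers compete on their bids, the winning bid approximates the block's true value, which is what makes it usable for MEV burn.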

PEPCU (15:45)

PEPC is coming up with many different "flavors", just like the Marvel Cinematic Universe has many different movies:

- Prof. PEPC - Coming November 2023

- The PE and the PC - Coming February 2024 (Plays on Proof of Work vs Proof of Stake)

- PEPC 2: Electric Boogaloo - August 2024 (Referencing an old meme sequel title)

- PEPCFS (First Come First Served Cold) - March 2025 (A blockchain ordering mechanism)

- PEPSpspsps - September 2025

Therefore, should we call Barnabé "PEPC Man"?

Barnabé encourages people to check out the team's work and get in touch if they want to help figure out and expand on the PEPC idea.

Q&A

About Domothy's idea of having different commitment proposals for the blockchain: it sounds like a market of commitments, which is cool. But doesn't this also make things complex for others using the blockchain? (16:45)

Yes, it can get complex for everyone using the blockchain, not just the proposers. Right now, software called MEV-Boost makes this process simpler for proposers.

If we add too many different commitments, it could get confusing, but people would soon figure out which commitments work best. The idea is to avoid making huge changes to the blockchain every time we want a different commitment, but it can be complex.

That's why we're taking it step by step. We're testing smaller changes like PEPC-Boost first to see how they work.

So, about this PEPC-Boost thing: it seems to go against the trend of making the blockchain more efficient. We want to decrease delays and improve speed, right? But PEPC-Boost seems to add more validation checks. Also, how will it work with other relay systems? (19:00)

Good question. The idea behind optimistic relays is they trust blocks without validating them. If there's an error, there's a penalty system.

PEPC-Boost, on the other hand, is more cautious and checks blocks. There might be a way to make PEPC-Boost optimistic too, which means it could be just as fast as other relays. We're still researching that.
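The difference between the two relay styles can be sketched as follows. The `Builder` collateral, the `validate` callback, and the refund rule are hypothetical; the point is that an optimistic relay skips validation on the latency-critical path and settles afterwards from the builder's collateral.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Builder:
    name: str
    collateral: int


def validating_relay(block: str, validate: Callable[[str], bool]) -> bool:
    # Checks before forwarding: slower, but invalid blocks never reach the proposer.
    return validate(block)


def optimistic_relay(block: str, builder: Builder, bid: int, validate: Callable[[str], bool]) -> tuple[bool, int]:
    """Forward immediately; if the later check fails, refund the proposer from collateral.

    Returns (forwarded, refund_to_proposer).
    """
    forwarded = True  # no validation before forwarding
    refund = 0
    if not validate(block):  # in practice this check runs after the block is forwarded
        refund = min(bid, builder.collateral)
        builder.collateral -= refund
    return forwarded, refund
```

An optimistic PEPC-Boost would follow the same pattern, checking commitments after the fact and penalizing builders whose blocks violated them.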

I'm trying to understand how these commitments work in the blockchain. Is there a way to limit what they can do? And how do you ensure they don't slow everything down? (21:10)

Think of commitments as a set of rules that need to be followed. The "hook" checks whether these rules are followed, and the rules could be written using Ethereum's own execution environment (the EVM).

The challenge is making sure they don't take up too much computer power, so everything remains fast. We need to protect against any commitment that might slow down or harm the system.
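One way to keep commitments from slowing the chain down is to meter them, much as the EVM meters gas. In this hypothetical sketch each rule declares a cost, and the hook rejects the commitment set once a fixed budget is exceeded; the `Rule` shape, the costs, and the budget are made-up values, not part of any PEPC design.

```python
from typing import Callable

Rule = tuple[int, Callable[[list[str]], bool]]  # (cost, check over the block's transactions)


def evaluate_commitments(block: list[str], rules: list[Rule], budget: int) -> bool:
    """Run rules in order, charging each rule's cost; fail if over budget or a rule fails."""
    spent = 0
    for cost, check in rules:
        spent += cost
        if spent > budget:
            return False  # out of "gas": too expensive to evaluate
        if not check(block):
            return False
    return True
```

Charging before running each rule bounds the work the hook can do per block, regardless of how the commitment was written.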

As someone who studies how blockchain consensus works: if these commitments can be anything, how do I ensure the system remains safe and efficient? (23:10)

The safety of the system still relies on established protocols. The commitments, like PEPC-Boost, are more about the interaction between applications and the blockchain's core system. The main safety and efficiency protocols remain unchanged.

Examples of interaction between industry and academia (2:00)

Microsoft Research and Google Research hired academics to come work for them for a couple of years. This helped bring new ideas into industry research. Some researchers stayed on long-term.

Amazon has a scholar program where academics can come work directly with teams at Amazon, while still being affiliated with their university. This gives them ownership of real products, unlike some other industry-academia collaborations.

Much research has emerged from industry or open source communities rather than academia. This is different from many other fields, and it allows high-quality research to happen outside of universities.

But there are downsides. It may contribute to less solid theoretical foundations in the field.

Alejo feels industry is passing responsibility to academia to formalize problems like MEV. But academia needs more concrete problems formulated by industry to work on.

Who is responsible for formalizing problems? (5:30)

Andrew thinks formalization has traditionally been done more by academics. In cryptography, most innovation used to come from academia rather than industry, although that has changed somewhat recently with ZKPs taking off.

Industry underestimates how easy it is to invite yourself to show up and give a talk at any university.

According to Mallesh, it's the academics' responsibility to show interest in engaging with these problems. But industry can't expect academics to have already dreamed up perfect solutions. Formalizing problems takes time and work.

Industry needs to be involved in an ongoing process of conversations and collaborations with academics to bridge the gap between real world problems and formal academic research.

Neha comes from a systems background, where people build systems without much math, so formal academic research doesn't always mean math, theorems, and proofs.

But it's still formal research because it involves carefully framing problems, describing solutions, understanding related work, having a methodology, and showing that your solution works. The key is having a hypothesis and showing it's correct, so others can verify it. She doesn't see industry research as being as rigorous as academic research.

The Pitfalls (11:00)

There are two extreme pitfalls to avoid in academia-industry collaborations:

- Hiring a brilliant academic, giving them money, and expecting a solution in 6 months. This "lone genius" approach often doesn't work.

- Hoping the academic will rubber stamp or validate your existing solution. This underutilizes their expertise.

Successful collaborations are more of a "give and take". The results may not directly solve the company's problem. But academic papers can help add shape and structure to the problem space. Non-formal solutions that "just work" may emerge too.

Open problems in crypto/blockchain research (12:50)

MEV is a good use case for privacy technologies. It shows how leaked information can be exploited economically, motivating privacy solutions like zero knowledge proofs.

Fast fully homomorphic encryption that can run in a distributed way would enable more effective MEV prevention architectures.

Incentive analysis, mechanism design, and game theory are key to getting decentralized actors to behave properly in crypto systems. More research is needed on crypto-economic incentives.

Research into scalable, low latency system architectures is still needed.

MEV implications for blockchain architecture (18:15)

Crypto has opened up new research questions around combining mechanisms, game theory, and cryptography that weren't considered legitimate before. MEV creates practical problems that require these solutions.

There needs to be a variety of models for academia-industry engagement, since different academics and companies have different goals. Short-term visiting positions in both directions help alignment.

Academia has an issue where people "lick the cookie but don't eat it": they solve a problem just enough to publish but don't fully address it. Going deeper on partly solved problems is challenging but impactful.

Industry and academia define a "solved problem" differently. Industry needs real implementations, while academia needs novelty. Overall, there are still open questions around aligning academic and industry incentives.

What motivates and incentivizes academics? (23:05)

Money, reputation, and status matter, but the panelists point out some differences between academics and industry. Academics have slower feedback cycles, through the publication process rather than direct financial gains.

Crypto/blockchain has unique appeals that drive academics :

- Impact: academics are driven by a desire to make an impact and improve the world based on their own theories and values.

- Intellectual freedom: interest in tackling complex, meaningful problems to which anyone can contribute

- Seeing ideas come to life: the ability to rapidly test and implement new mechanisms and ideas, rather than just theorize about them

Improve academic incentives with crypto (27:15)

Incentives in academia are broken:

- Slow research cycles due to the publication process

- Academics doing unpaid work for journals

- Replication crisis and questionable research practices

- Researchers favoring incremental work over high-risk, disruptive work to protect careers

What crypto can do:

- Blockchain data immutability could provide provenance and credibility to research data.

- A "crypto science foundation" could fund projects focused on innovation and democratization, correcting issues with existing funding bodies

New crypto-native institutions could compete with and provide an alternative to existing academic power structures. The discussants believe there is momentum and opportunity for cryptocurrency to transform incentives in the broader scientific world.

How to get more academics working in crypto/blockchain? (31:20)

The mentality is very Manichean: most academics are either not interested at all, or spend almost all their time on it.

Academics tend to follow trends and peer interests, so crypto is not yet mainstream in most fields. Furthermore, the public narrative about crypto focuses on scams, theft, and hype rather than the interesting technical problems.

But academics go through phases of interest, so crypto could gain more attention once progress is made on technical problems.

Overall, the challenges are changing the public narrative to highlight opportunities, and demonstrating technical progress to gain more academic interest over time.

Bridging the communication gap (37:00)

There is a communication gap between crypto practitioners and academics who could help solve problems:

- Concepts and ideas in crypto are often poorly explained or documented, mostly living in places like Twitter threads. This makes it hard for academics to build on existing work.

- Crypto has developed its own language which sometimes diverges from academic usage, causing confusion. More systematic writeups are needed to translate ideas into academic language.

- Academics need clear, structured explanations to understand and contribute, not just informal discussions.

This is a common issue when new technical communities emerge. Both sides need to work to build mutual understanding given the divergent languages.

Making detailed writeups (41:50)

Academics need more than just Twitter threads to understand crypto concepts, but full academic papers also have high overhead. Blog posts or detailed Twitter threads can work if:

- Key terms are clearly defined

- Comparisons are made to related work

- Tradeoffs and limitations are explained, not just benefits

PhD students spend lots of time deeply understanding previous work before contributing. Explaining this context helps others learn.

Final message (44:30)

Communication and assumptions have gotten better on both sides compared to the past. Academics now have a stronger grasp of the core principles and constraints in crypto systems.

There is growing interest from academics to contribute, but it takes time and effort on both sides to find productive collaborations and convey problems/solutions across disciplines.

Crypto practitioners are very busy and can't individually mentor all interested academics. But writing clear explanatory documents and being patient to find good matches can pay off long-term.