MEV Workshop at SBC 23 Part 3

Summary of the MEV Workshop at the Science of Blockchain Conference 2023. The videos covered here are about MEV across the stack.

Quintus wants to provide a new perspective on how to think about "MEV" or "maximum extractable value" in blockchain systems like Ethereum.

To understand potential solutions, we first need to understand the core source of the unfairness and inefficiency.

This is an Ethereum-centric view, but Quintus believes the points apply to other blockchain systems too.

The issues of MEV (1:10)

- Power asymmetry : Miners/validators earn a lot of money from MEV activities while regular users don't. This is seen by some as unfair.

- Economic inefficiency : Some MEV activities impose extra "taxes" on users, making trading more expensive.

- Technical inefficiency : MEV can cause things like failed transactions, extra protocol messages, and uneven validator yields. This leads to technical problems like incentives to reorg and centralization.

- Revenue sharing : Applications want to capture some of the MEV value they create, which relates to the fairness issue.

Quintus wants to focus mostly on power asymmetry and economic inefficiency issues in this talk.

Informational asymmetry

Computation and information (2:30)

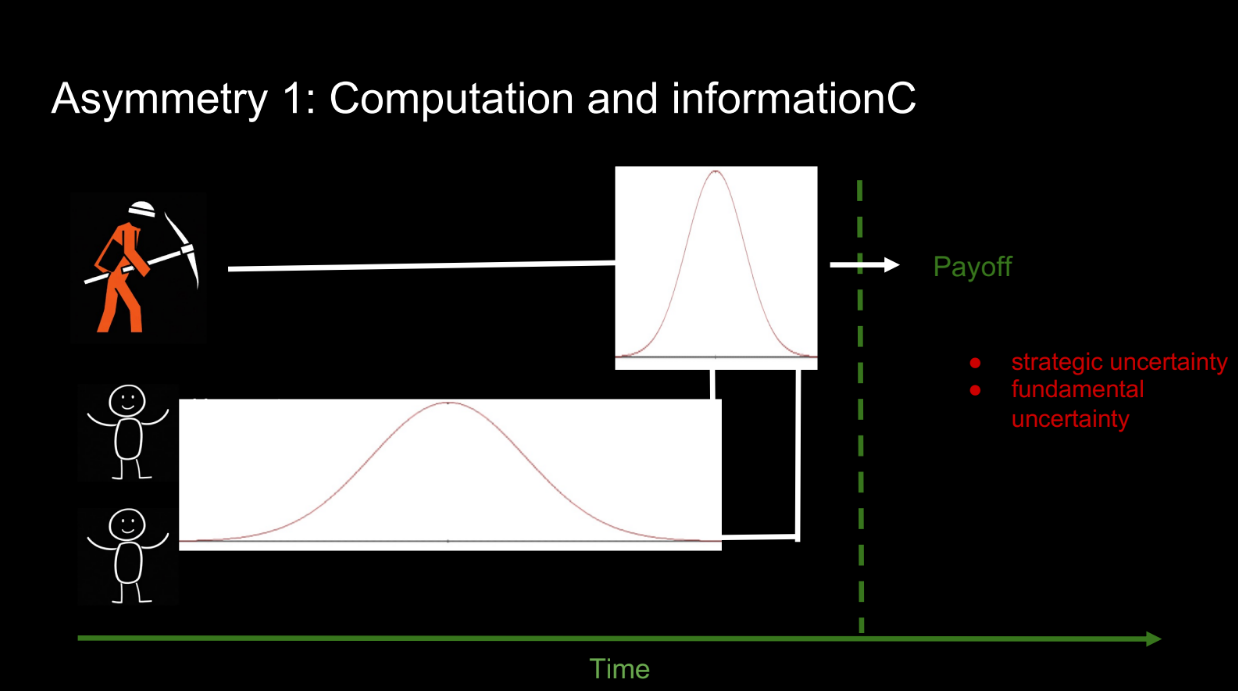

Quintus wants to explain the core issue behind MEV unfairness and inefficiency. Let's use a simple model with 2 actors:

- The miner (stands for any block producer)

- Users

Both the miner and users want to maximize their profits when the next block is produced. Their profits depend on:

- The block contents

- External state (like exchange prices)

There are 2 stages:

- Users submit transactions

- Miner orders transactions and produces the block

This shows an asymmetry:

- Users face lots of uncertainty about other transactions and external state

- The miner has much less uncertainty since they see all transactions and produce the block later

There are two types of uncertainty:

- Strategic - What other people are doing

- Fundamental - External state like prices

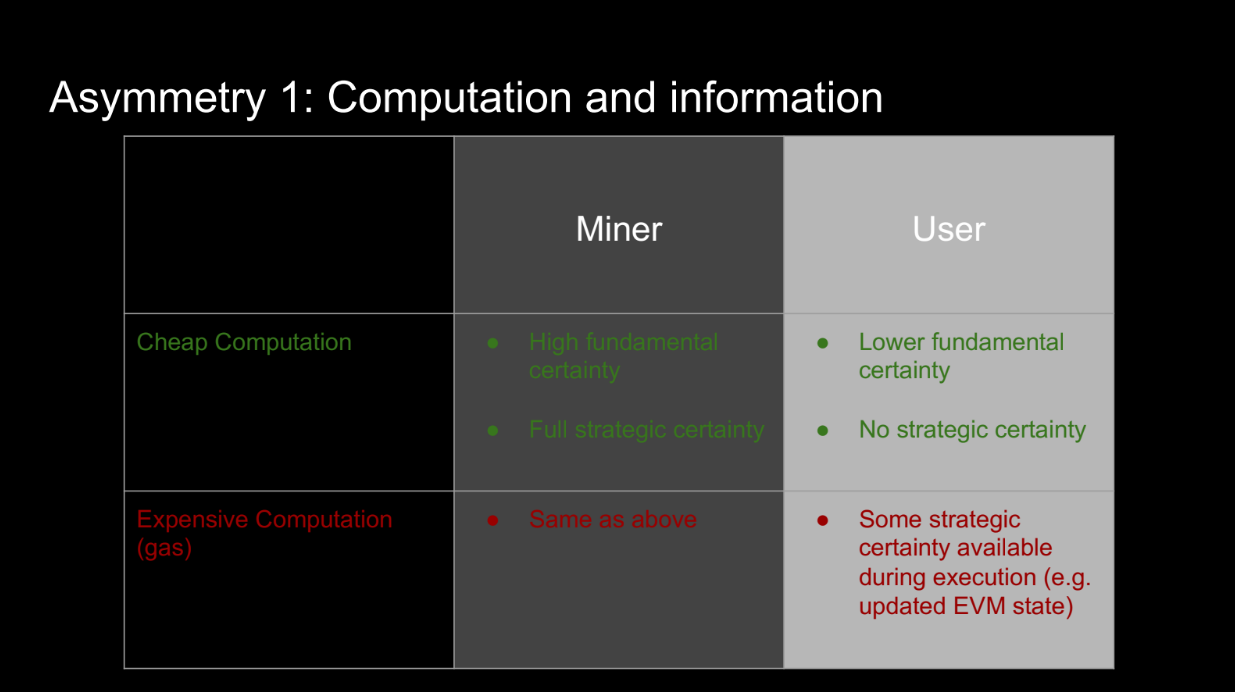

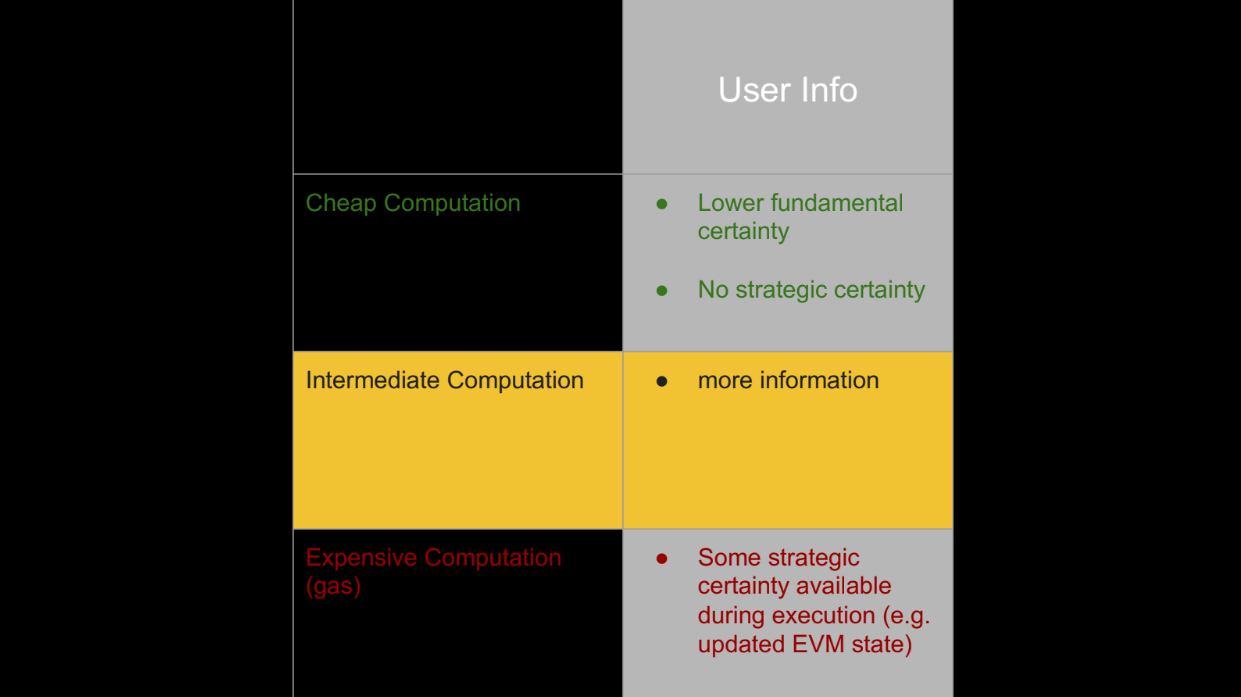

Users have more of both than the miner, and this information asymmetry advantages the miner. Additionally, the miner can do computations locally and cheaply with full information, while users must pay gas for on-chain computations made with less information.

Even though users can do computations on-chain, this is expensive. The miner's ability to do cheap local computations with full information adds to their advantage.

Information asymmetry

Deciding Outcomes (5:50)

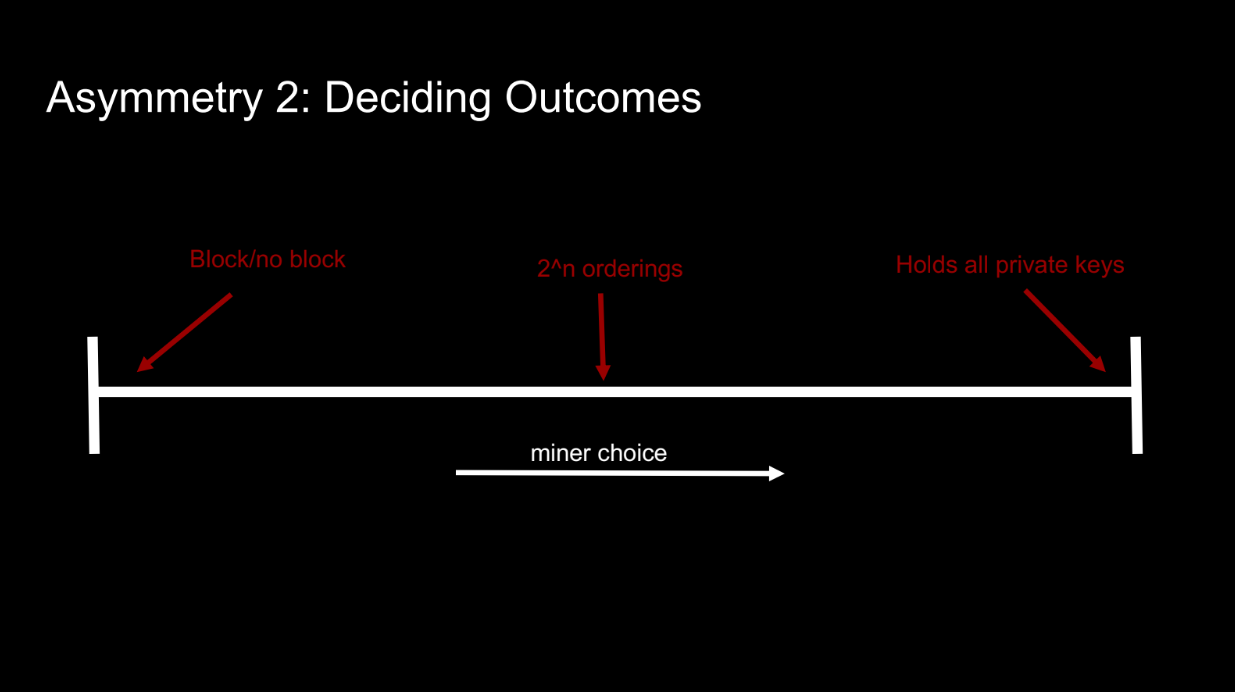

Another asymmetry is the miner's ability to choose between different block outcomes. For example:

- Producing empty VS full blocks

- Ordering transactions differently

- Controlling all private keys (like an exchange)

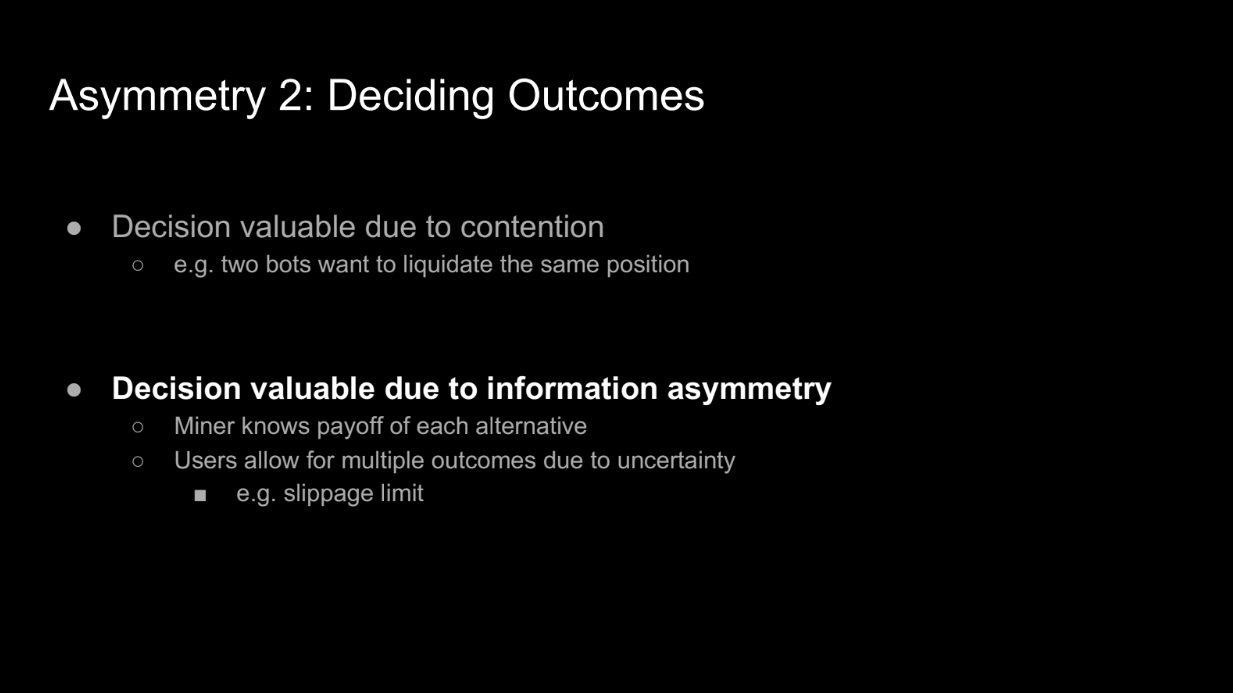

Two main factors affect the value of the miner's decision making ability:

- Fundamental contention - situations like arbitrage opportunities that users compete for access to. The miner can decide the winner.

- Information asymmetry - The miner knows the value of each outcome. But more importantly, users lack information so they entertain multiple outcomes via things like slippage limits. This gives the miner many options to choose from.

So information asymmetry enables the miner's valuable decision making ability. Without it, there would be fewer options to choose between.
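A minimal sketch of how a slippage limit widens the miner's set of profitable options. It assumes a fee-free constant-product pool (x * y = k) and a miner who wraps the user's trade with a buy and a sell; the pool sizes, trade size, and function names are illustrative assumptions, not taken from the talk.

```python
def swap(reserve_in, reserve_out, amount_in):
    """Fee-free constant-product swap. Returns (new_reserve_in, new_reserve_out, amount_out)."""
    k = reserve_in * reserve_out
    new_in = reserve_in + amount_in
    new_out = k / new_in
    return new_in, new_out, reserve_out - new_out


def sandwich_profit(x, y, user_dx, min_out, front_dx):
    """Attacker's profit in token X from wrapping the user's trade, or None if
    the user's slippage limit would make the user's transaction revert."""
    x, y, attacker_y = swap(x, y, front_dx)    # attacker buys Y before the user
    x, y, user_out = swap(x, y, user_dx)       # user trades at a worse price
    if user_out < min_out:
        return None
    y, x, attacker_x = swap(y, x, attacker_y)  # attacker sells Y back after the user
    return attacker_x - front_dx


def best_sandwich(tolerance, x=1000.0, y=1000.0, user_dx=10.0):
    """Best attacker profit over a grid of front-run sizes (hypothetical grid: 0 to 200 in steps of 0.5)."""
    quoted = swap(x, y, user_dx)[2]            # output the user expects with no interference
    min_out = quoted * (1.0 - tolerance)       # user's slippage limit
    profits = []
    for i in range(401):
        profit = sandwich_profit(x, y, user_dx, min_out, i * 0.5)
        if profit is not None:
            profits.append(profit)
    return max(profits, default=0.0)
```

With zero tolerance the only admissible front-run size is zero, so the miner has nothing to choose between. Each extra percent of tolerance adds admissible outcomes, and in this toy model the best profit grows with it.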

Classes of solution (8:15)

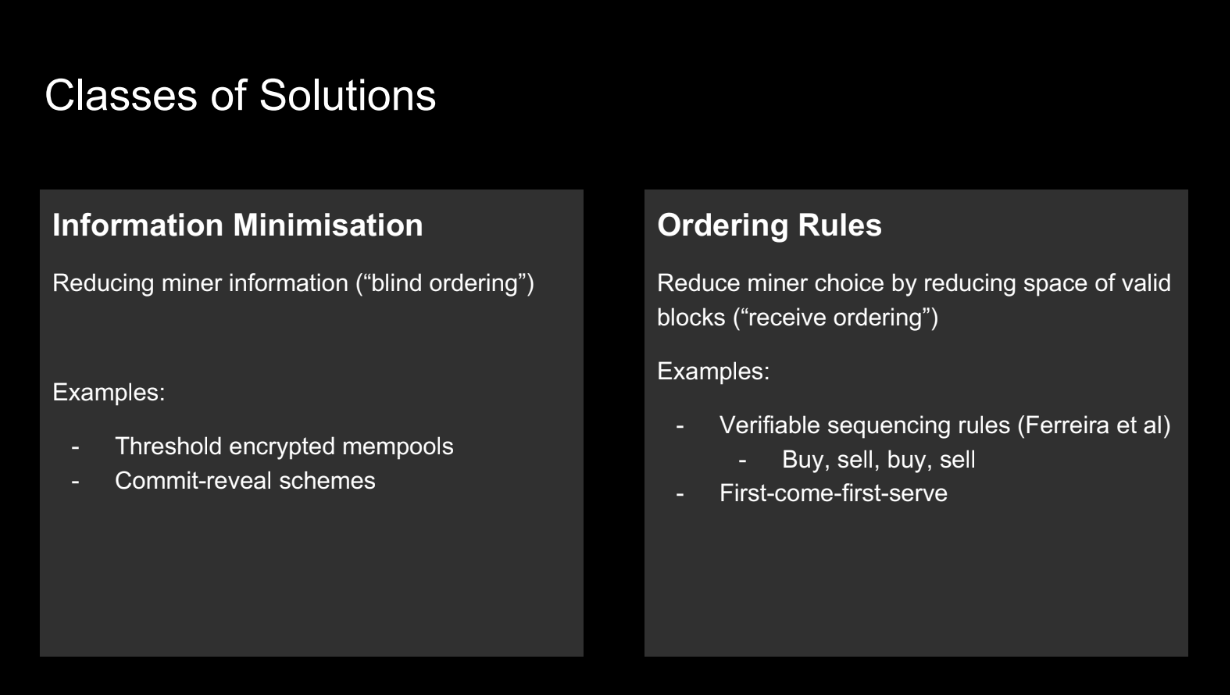

Past solutions to this asymmetry focused on:

- Minimizing miner information - techniques like threshold encryption and commit-reveal schemes.

- Reducing miner choice - ordering rules like first-come-first-serve that limit valid block space.

So the main approaches were minimizing the information miners have and limiting the choices miners can make. This aimed to address the unfair asymmetry.

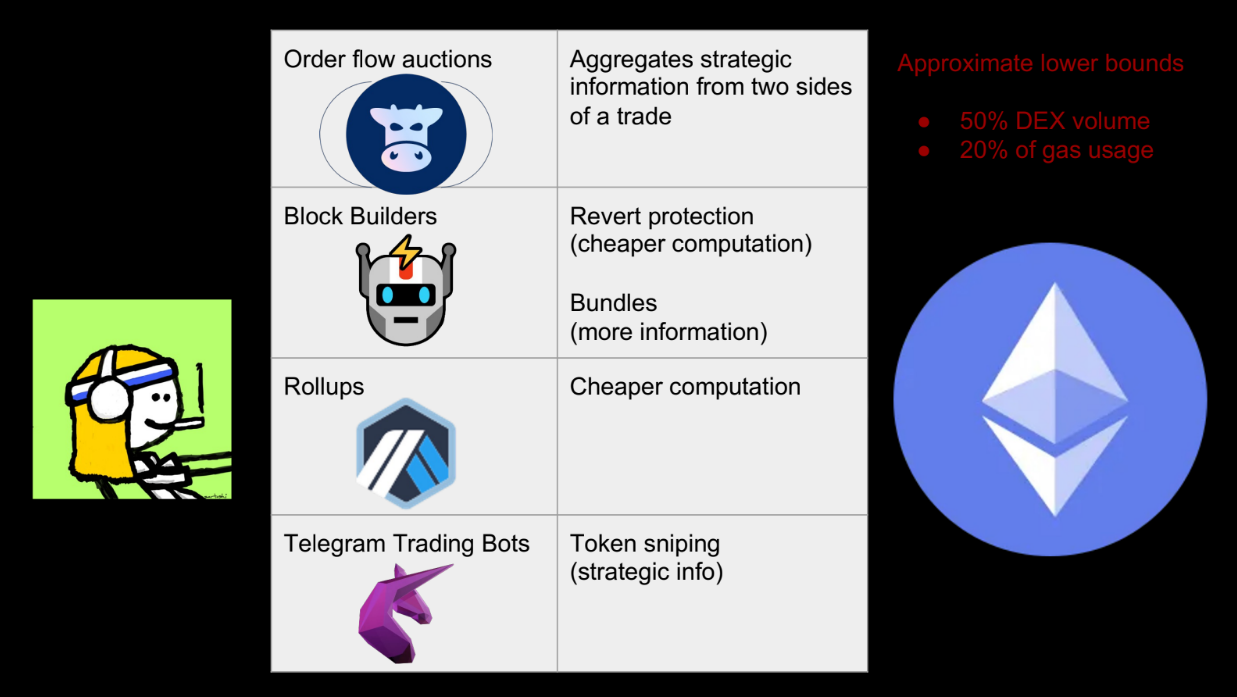

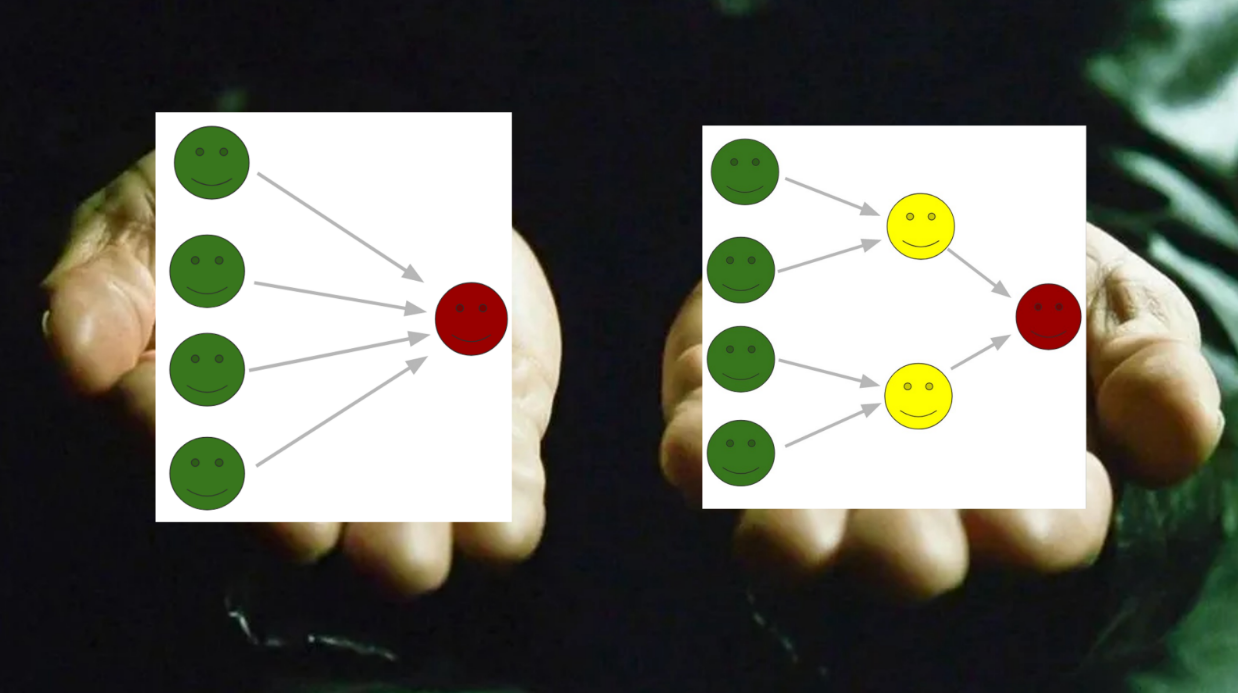

But these past solutions don't reflect what's happening in practice on blockchains like Ethereum. Instead, we see lots of intermediate services emerging that give users more information and computation.

Intermediate computation

So a different approach is needed, improving user capabilities rather than just limiting miners. This allows users and miners to better compete on a level playing field.

Instead of reducing the miner's information/actions, a new approach is emerging : improving users' information and making computation cheaper via "intermediate" services.

Some examples (9:45)

- Order flow auctions (like CowSwap) : aggregate orders off-chain for better pricing

- Blockbuilders : provide revert protection so users can try multiple trades

- Bundles : sequence transactions so later ones can use info from earlier

- Rollups : cheaper way to find optimal trades

- Telegram bots : aggregate user info for trades/snipes

These give users more info and cheaper computation. Over 50% of DEX volume goes through these services

New intermediaries coming (12:40)

The old view of only miners and users directly interacting is limiting. Adding intermediaries to our mental models will allow better reasoning about real-world blockchains.

The key mental shift is accepting that intermediaries are emerging between miners and users. We can't just think of two actors anymore. This better reflects reality and how blockchains operate in practice.

New problems

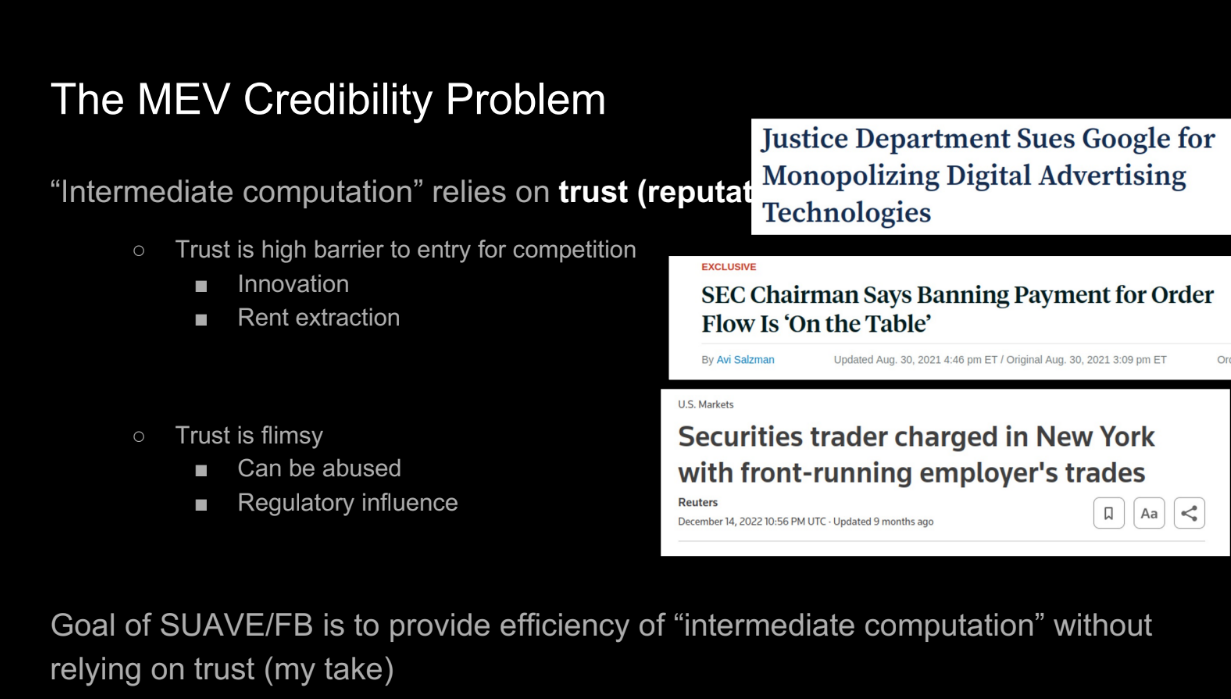

The MEV credibility problem (13:10)

These intermediate services solve some MEV problems, but they rely on trust and reputation, not cryptography. This causes :

- High barrier to entry. New services need to build trust and reputation. Less competition means potential for monopolies, rent extraction, and stagnation.

- Trust is fragile. It can be abused or lost suddenly. We want systems where properties are guaranteed by design, not by actors' decisions.

- Trusted intermediaries can still abuse power or be manipulated. We want "can't be evil" not "don't be evil."

Examples like traditional finance show trusted intermediaries aren't enough to prevent abuse. The goal is efficiency through intermediate services, but with properties guaranteed cryptographically, not via trust.

Cryptographic guarantees of this kind avoid the issues of reputation, fragility, and centralized power that purely trusted solutions have.

Open questions (16:25)

In summary, there are three key issues to think about going forward:

- The credibility/trust problem : How can we get the benefits of intermediate services without relying on trust and reputation ?

- Studying the asymmetries formally : The speaker discussed information and computation asymmetries loosely. How can we study these formally and rigorously?

- Implications for blockchain design : If we assume intermediaries will emerge, how does this change desired properties of things like ordering policies? Do they still achieve their intended goals?

The speaker hopes this talk inspired people to work on these problems and provided a useful perspective on intermediaries and MEV.

Q&A

When intermediaries compete in PBS auctions, how do we prevent them from pushing all the revenue to the block proposer (validator) by competing against each other ? (18:00)

The speaker clarifies that "all the revenue" going to the proposer provides value accessible to everyone in the stack. If miners built the block directly they could also access that value.

The Cost of MEV

Quantifying Economic (un)fairness in the Decentralized World

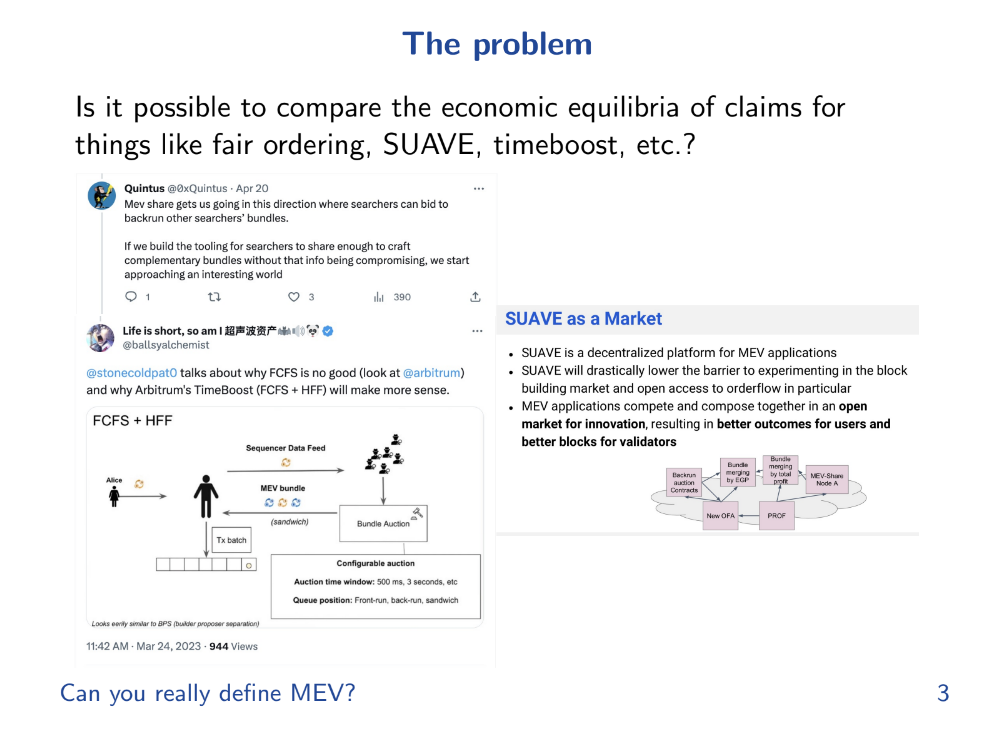

Can you really define MEV ?

There have been many talks discussing qualitative and quantitative aspects of MEV, but this talk will take a more formal approach.

There’s a challenge in defining MEV correctly since many different definitions and formalisms have been proposed, each with its own set of assumptions and outcomes, making it hard to compare them or reach a common understanding.

What do we really want ? (1:40)

Some fair ordering methods (like FCFS - First Come First Serve) haven’t lived up to expectations. Tarun also mentions wanting to explore and compare new concepts like Suave and Time Boost rigorously.

A key question is how much "fairer" one set of orderings is compared to another in terms of the payments made to validators. We define "fair" as meaning the worst case validator payment is not too far from the average case.

The talk aims to explore fairness among different ordering mechanisms, especially in terms of payments to validators

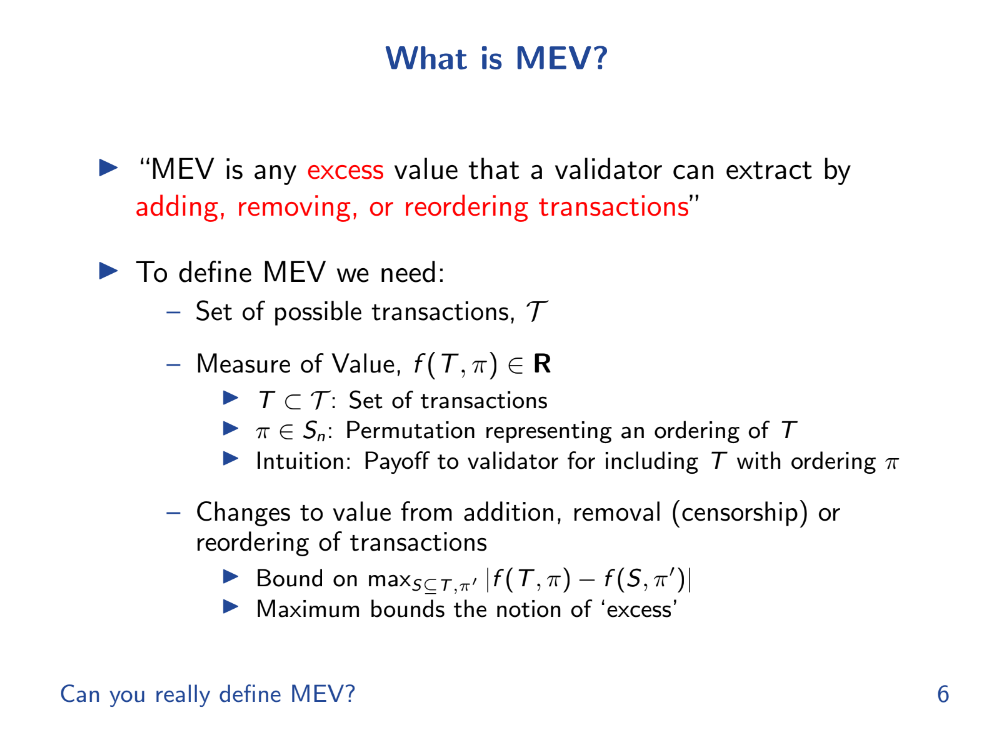

What is MEV (formally) ? (2:50)

MEV is any excess value that a validator can extract by adding, removing or reordering transactions.

To outline a formal mathematical framework for defining and analyzing MEV concretely, 4 things are needed :

- A set of possible transactions named T

- A payoff function f that assigns a value to a subset of transactions T and an ordering π. This represents the payoff to validators.

- Analyzing how f changes over T and π to define "excess" MEV.

- Ways to add, remove, or reorder transactions that validators can exploit.

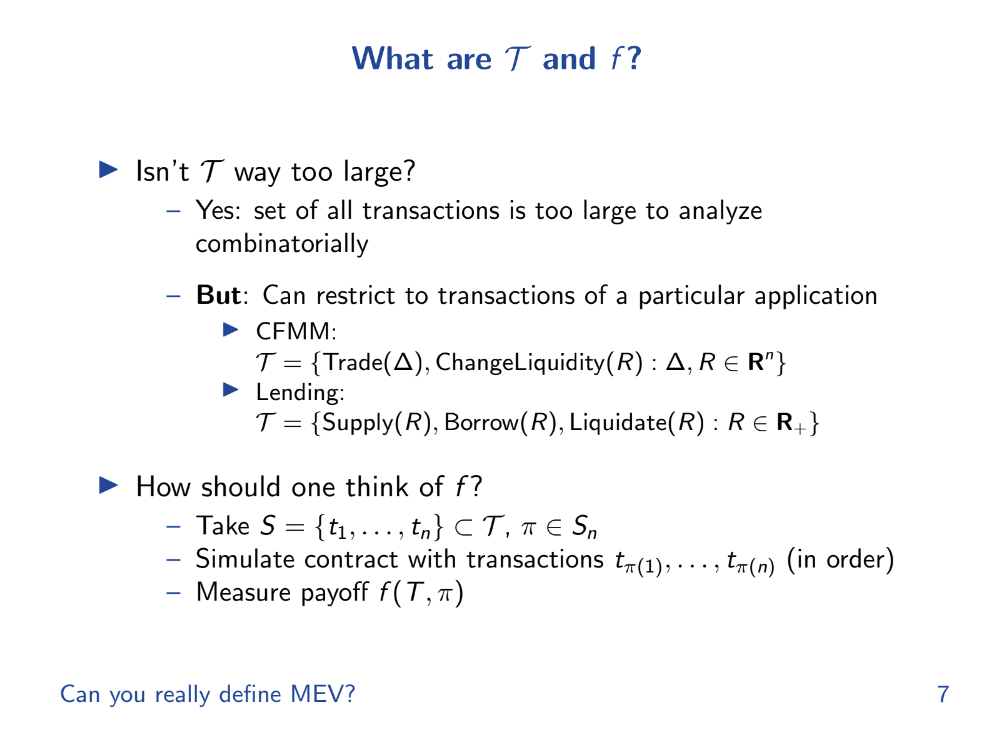

T could be extremely large, making analysis difficult. But for specific use cases, T may be restricted to make analysis feasible.
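For a very small T the framework can be evaluated by brute force. The sketch below enumerates every ordered subset of T (removal and reordering; an inserted transaction can be modeled as one more element of T) and measures the excess over a baseline block. The transaction names, tips, and bonus rule are hypothetical and only illustrate the shape of f.

```python
from itertools import combinations, permutations

TIPS = {"swap": 1, "arb": 1, "transfer": 1}  # hypothetical per-transaction tips


def toy_payoff(block):
    """f(block): tips collected, plus a bonus of 5 when 'arb' lands right after 'swap'."""
    total = sum(TIPS[tx] for tx in block)
    for first, second in zip(block, block[1:]):
        if (first, second) == ("swap", "arb"):
            total += 5
    return total


def all_blocks(T):
    """Yield every ordered subset of T, i.e. every block reachable by removing or reordering."""
    for size in range(len(T) + 1):
        for subset in combinations(T, size):
            yield from permutations(subset)


def mev(T, f, baseline):
    """Excess payoff of the best possible block over the baseline block."""
    return max(f(block) for block in all_blocks(T)) - f(baseline)


T = ("swap", "arb", "transfer")
baseline = ("arb", "swap", "transfer")   # e.g. the order in which transactions arrived
excess = mev(T, toy_payoff, baseline)    # best block pays 8, baseline pays 3
```

Even with three transactions there are 16 candidate blocks. The count grows faster than n!, which is why restricting T matters in practice.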

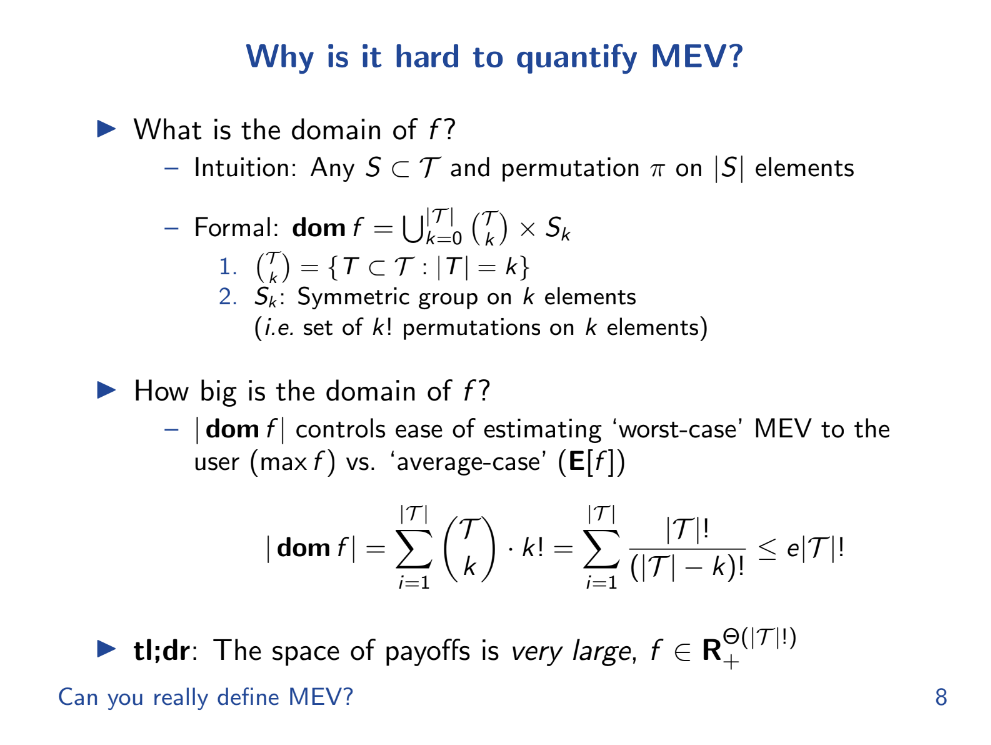

Why is it hard to quantify MEV ? (5:40)

Several reasons why it is hard to quantify MEV :

- Very large transaction space. MEV depends on the set of all possible transactions (T) that could be included in a block. This set is extremely large, especially as the number of blockchain users grows. Quantifying MEV requires looking at all possible subsets and orderings of T.

- Complex valuation. MEV also depends on the valuation (payoff) function f that assigns a value to each transaction subset and ordering. This function can be very complex and determined by market conditions. It's difficult to precisely model.

- Private information. Validators have private information about pending transactions and the valuation of transaction orders that external observers don't have.

- Changing conditions. f depends on dynamic market conditions. In other words, the valuation of transactions is constantly changing as market conditions change. We must quantify a moving target

- New transaction types. As new smart contracts and transaction types are introduced, it expands the transaction space and complicates valuation.

Fairness

We're using the term "payoff" to represent the benefit or outcome someone gets from a certain arrangement of things (like the order of transactions).

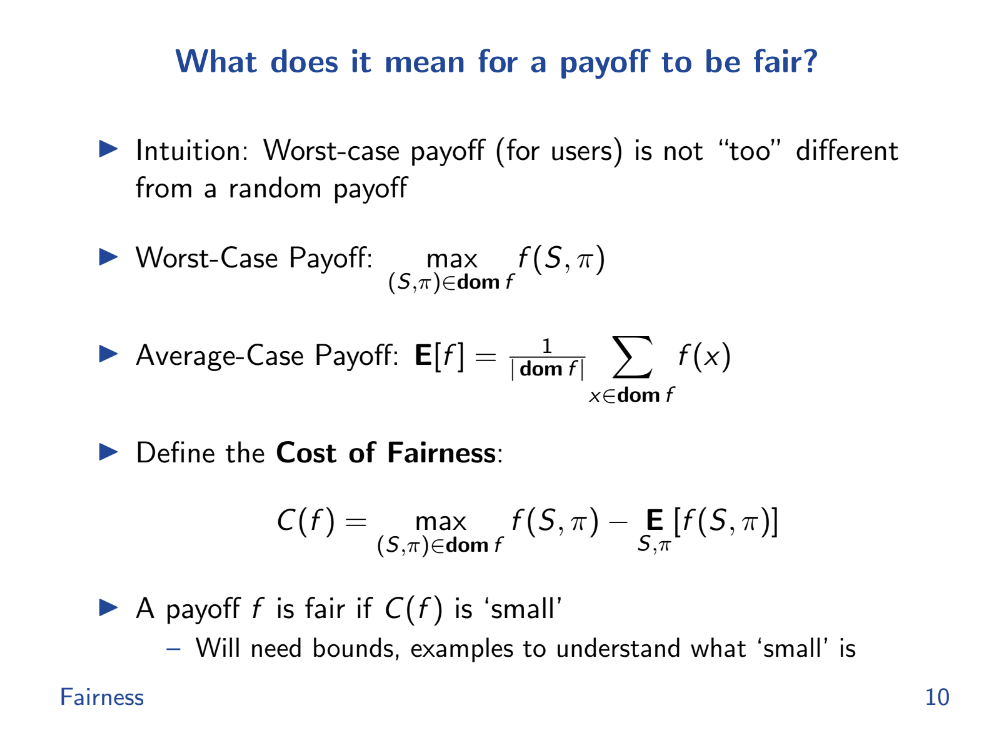

What does it mean for a payoff to be fair ? (6:40)

According to Tarun, "fairness" is where the worst case payoff is not too far from the average random payoff. This represents a validator's inability to censor or reorder transactions.

- Worst Case Payoff: Imagine if someone cheats and arranges the deck to their advantage; this scenario gives them the highest payoff possible. It's the best they can do by cheating.

- Average Case Payoff: This is what typically happens when no one cheats; it's the average outcome over many games.

- Cost of Fairness: It's like a measure of how much someone can gain by cheating. It's the difference between the worst case and the average case payoffs.

A situation is more "fair" if this cost of fairness is small, meaning there's little to gain from cheating
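The definition can be sketched directly for a handful of transactions by enumerating every ordering. The two payoff functions below are illustrative extremes, not examples from the talk.

```python
from itertools import permutations
from statistics import fmean


def cost_of_fairness(txs, payoff):
    """Worst-case (maximum) payoff minus the average payoff over all orderings of txs."""
    payoffs = [payoff(order) for order in permutations(txs)]
    return max(payoffs) - fmean(payoffs)


txs = ("a", "b", "c")

# Same payoff for every ordering: nothing to gain from reordering.
perfectly_fair = cost_of_fairness(txs, lambda order: 1.0)

# Payoff for exactly one ordering: reordering captures almost everything.
perfectly_unfair = cost_of_fairness(
    txs, lambda order: 1.0 if order == ("a", "b", "c") else 0.0
)
```

For three transactions the second case gives 1 - 1/6, since only one of the six orderings pays.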

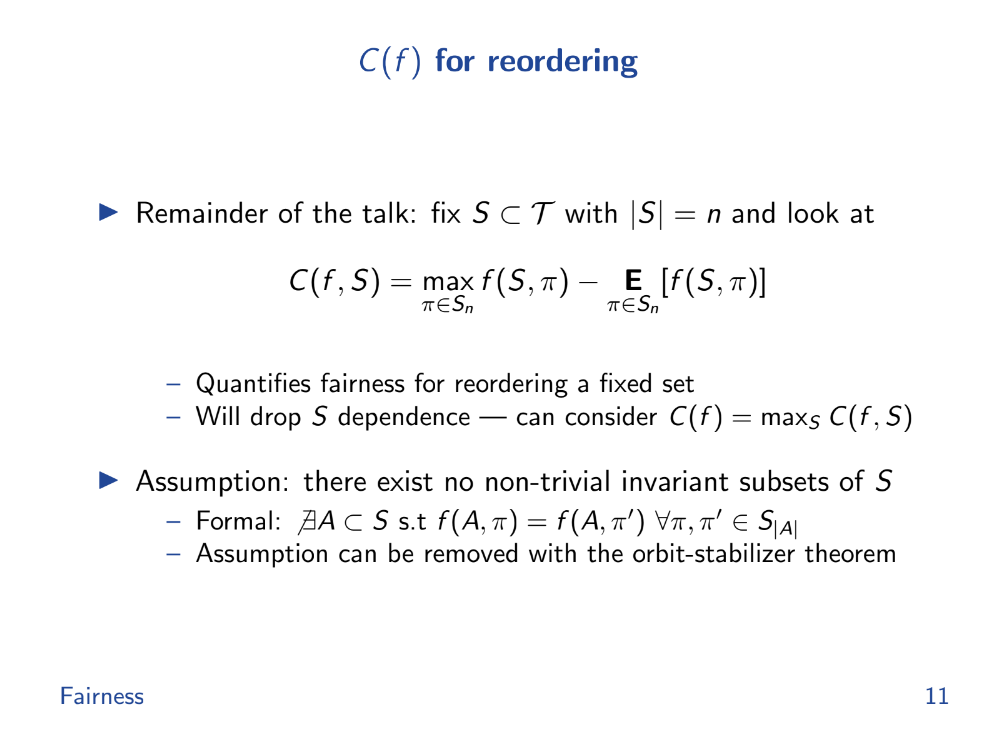

Cost of Fairness for reordering (7:50)

We want to quantify how much extra value a validator can extract through reordering. The assumptions are as follows :

- Fixed set of possible transactions

- Focus only on reordering for now

- No permutation subgroups that leave payoff invariant

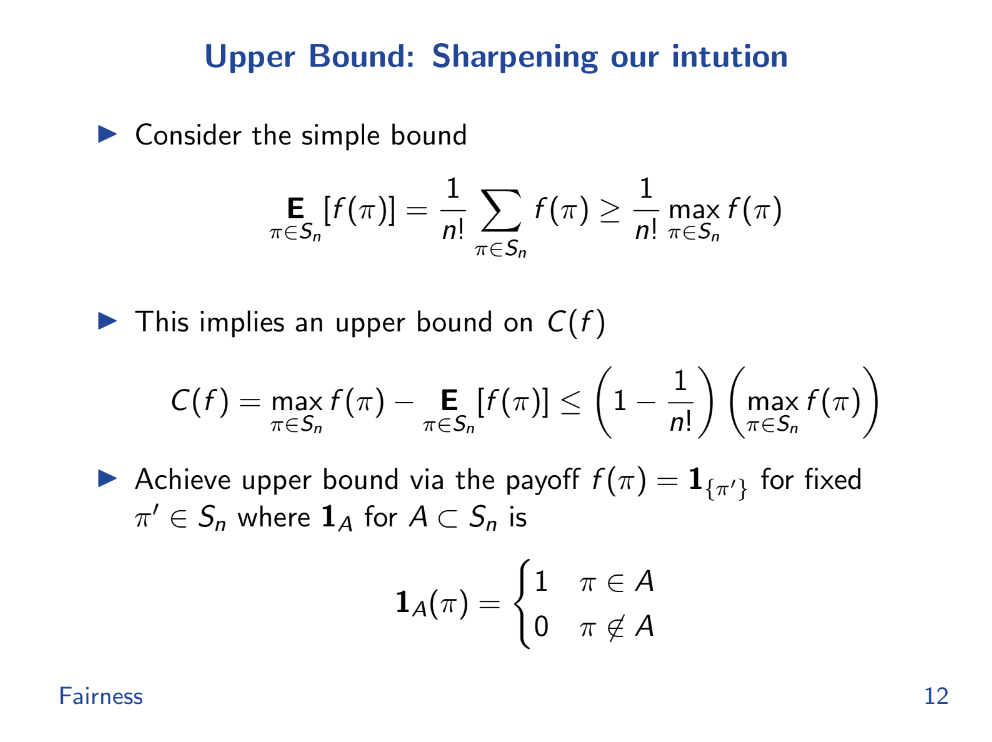

Upper Bound (8:45)

With those assumptions :

- The max possible unfairness happens when payoff is 1 for one order, 0 for all others.

- In general, for non-negative payoffs, unfairness is bounded by : (Max payoff - Average payoff) ≤ Max payoff * (1 - 1/n!), where n is the number of transactions. The one-order payoff above attains this bound.
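A short derivation of the bound, under the added assumption that payoffs are non-negative. The symbols M and \bar f are introduced here for illustration.

```latex
% Assumption: f(\pi) \ge 0 for every ordering \pi of the n transactions.
\[
M = \max_{\pi \in S_n} f(\pi), \qquad
\bar f = \frac{1}{n!} \sum_{\pi \in S_n} f(\pi) \;\ge\; \frac{M}{n!}
\]
% The maximizing ordering contributes M to the sum and every other term is at least 0.
\[
M - \bar f \;\le\; M \left( 1 - \frac{1}{n!} \right)
\]
% Equality holds exactly when every non-maximizing ordering has payoff 0.
```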

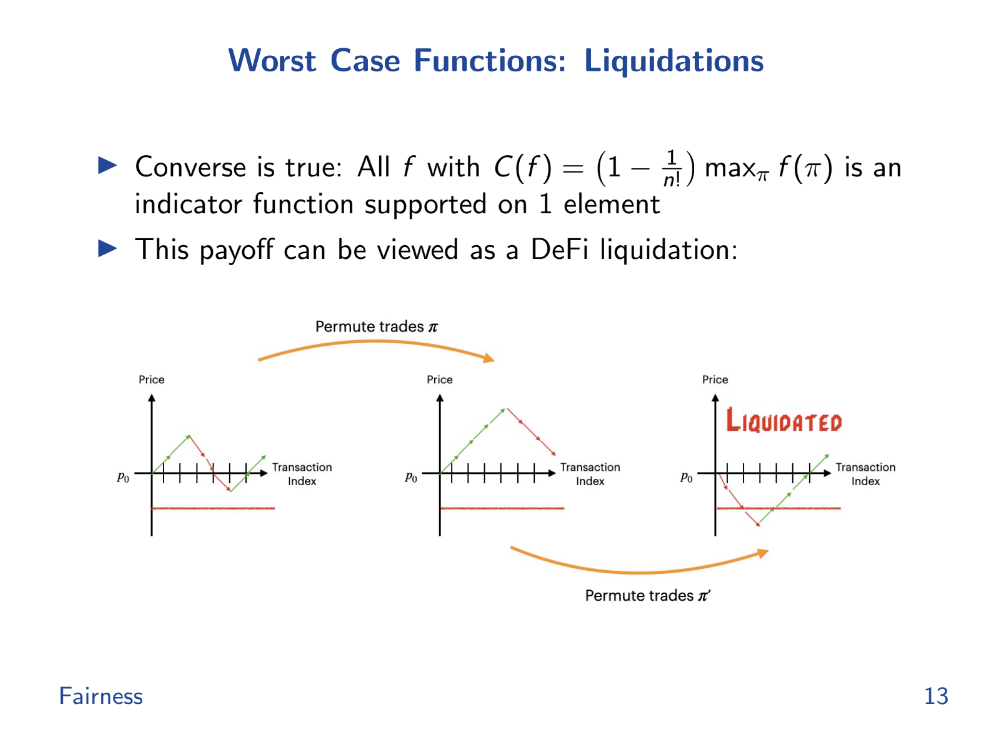

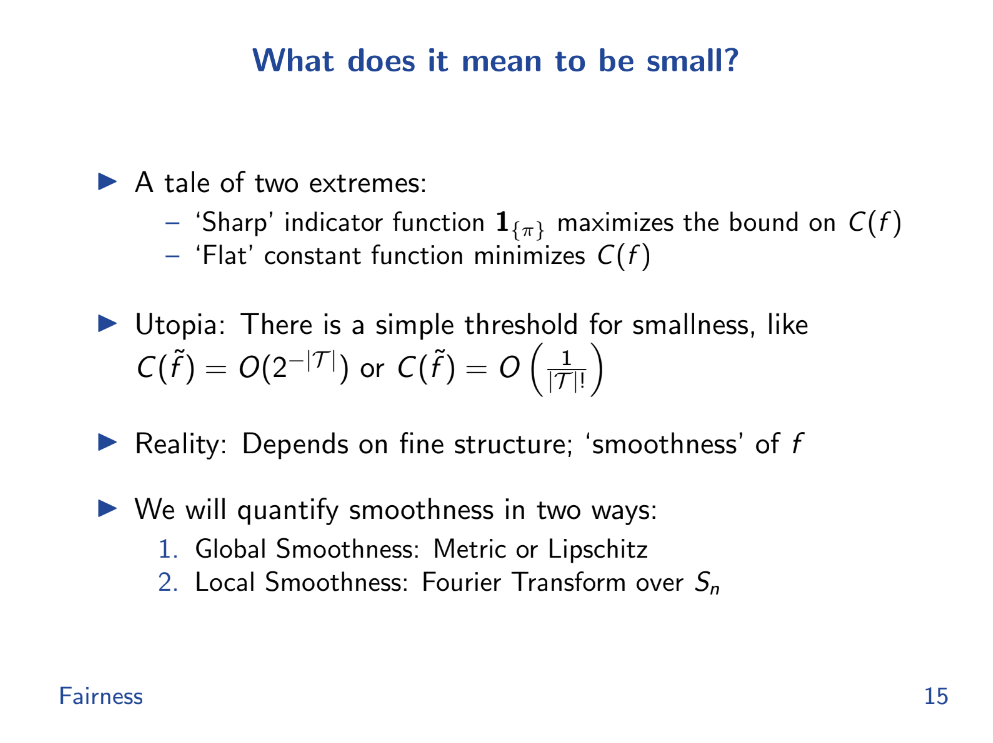

Worst case functions (9:30)

DeFi liquidations represent a kind of "basis" set of payoff functions that generate high costs of fairness.

The order you execute these trades can affect whether you lose a lot of money at once (get liquidated) or not. Different orders or "permutations" of trades have different outcomes, and some might hit a point where you get liquidated, while others won't.
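A toy sketch of such a payoff function, assuming each trade moves a price by a fixed amount and the validator earns a bonus if the price ever dips below a liquidation threshold. All numbers and names are hypothetical.

```python
from itertools import permutations


def liquidation_payoff(order, start_price=100.0, threshold=90.0, bonus=1.0):
    """Pay `bonus` if the running price ever falls below `threshold`, else 0."""
    price = start_price
    for delta in order:
        price += delta
        if price < threshold:
            return bonus
    return 0.0


trades = (-7.0, -5.0, 6.0, 8.0)          # same trades, same final price in every ordering
orders = list(permutations(trades))
liquidating = [o for o in orders if liquidation_payoff(o) > 0]
```

Every ordering ends at the same price, yet only the orderings that execute both sells first cross the threshold. The payoff is a step function of the ordering, which is what makes its cost of fairness high.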

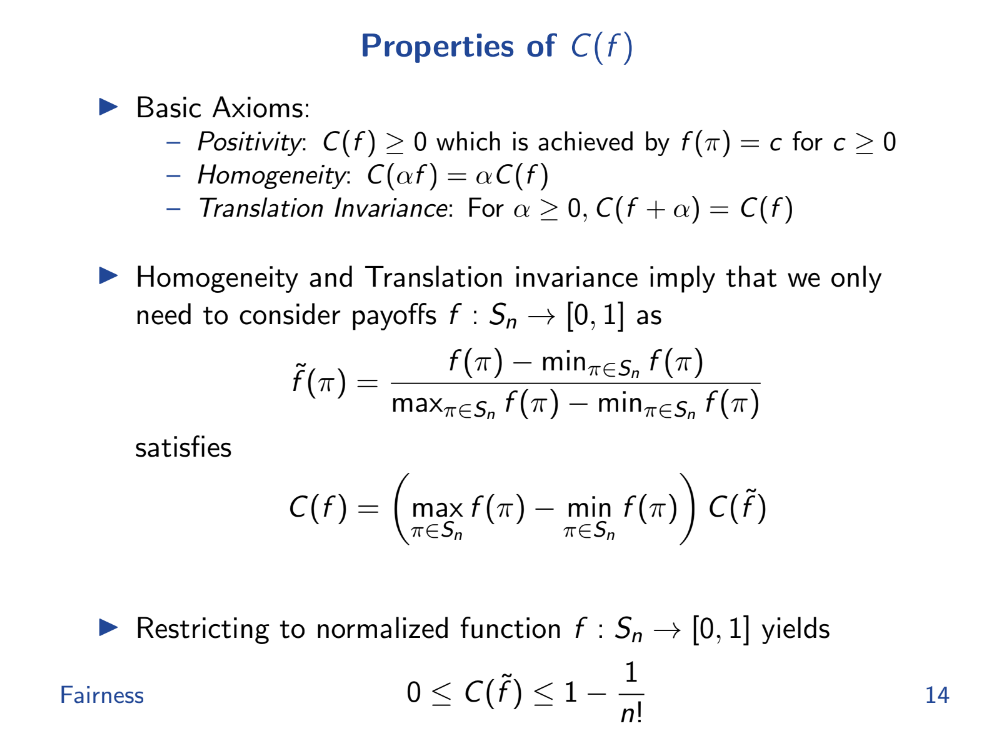

Properties of Cost of Fairness (10:25)

- The cost of fairness is defined as the difference between worst case and average payoffs.

- Perfectly fair means same payoff for any order. Perfectly unfair means payoff only for one order.

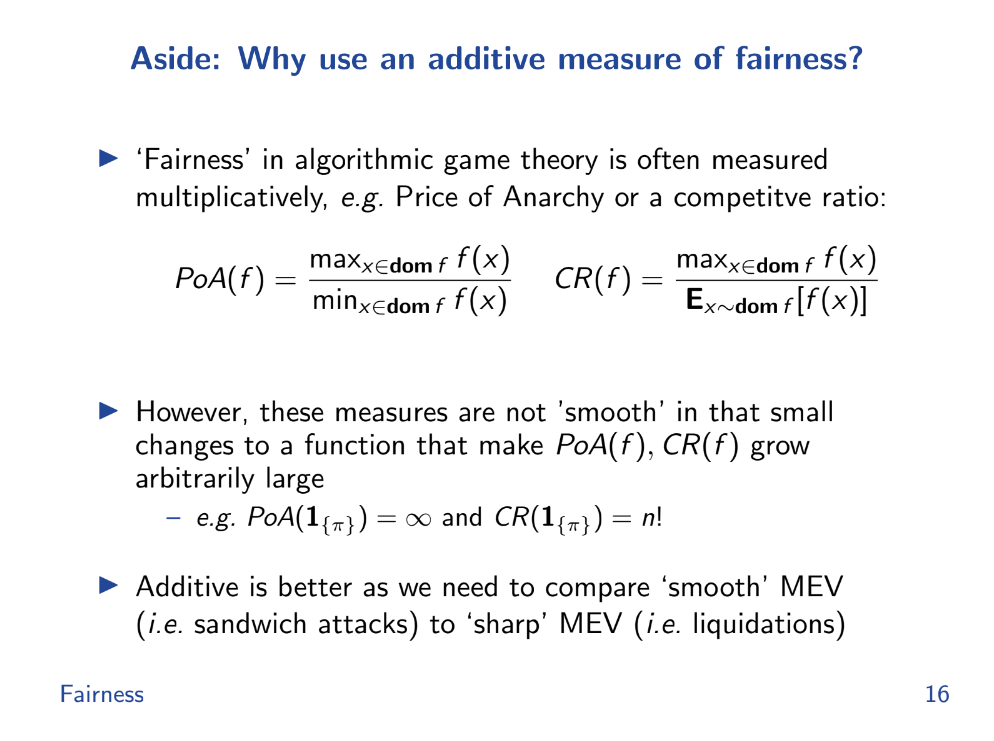

Why use an additive measure of fairness ? (12:20)

The problem is that ratio measures like max/min or max/average can become exaggerated or unbounded for extreme payoff functions like liquidations. So we need additive measures of fairness that quantify how much the payoff changes between different orderings.

Additive means difference between max and average. By using an additive measure, it's easier to analyze and understand the variations in payoff and thus, the level of fairness in the system, making it a more reliable method in such contexts.
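A quick numerical illustration, assuming the extreme payoff that pays only for a single ordering of n transactions. The function name is hypothetical.

```python
from math import factorial


def single_order_stats(n, max_payoff=1.0):
    """Additive and ratio fairness measures when only one of the n! orderings pays."""
    average = max_payoff / factorial(n)
    return {
        "additive": max_payoff - average,   # stays below max_payoff for every n
        "ratio": max_payoff / average,      # equals n!, so it grows without bound
    }


small = single_order_stats(5)
large = single_order_stats(10)
```

The max/min ratio is not even defined here because the minimum payoff is zero, while the additive gap remains bounded by the maximum payoff.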

Smooth Functions

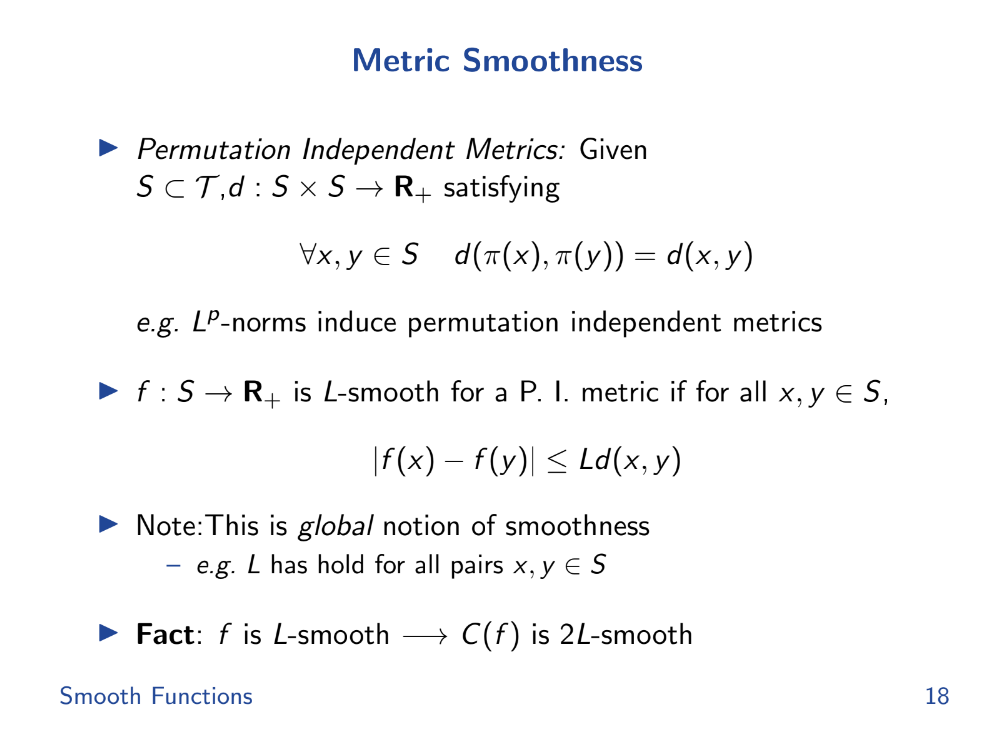

Metric smoothness (13:20)

In calculus, there's a concept called Lipschitz smoothness, which measures how predictable a function is. If a function is Lipschitz smooth, the amount its value can change is limited by how much its input changes, making it more predictable.

We can define similar "smoothness" metrics for AMMs based on how much their prices change in response to trades.

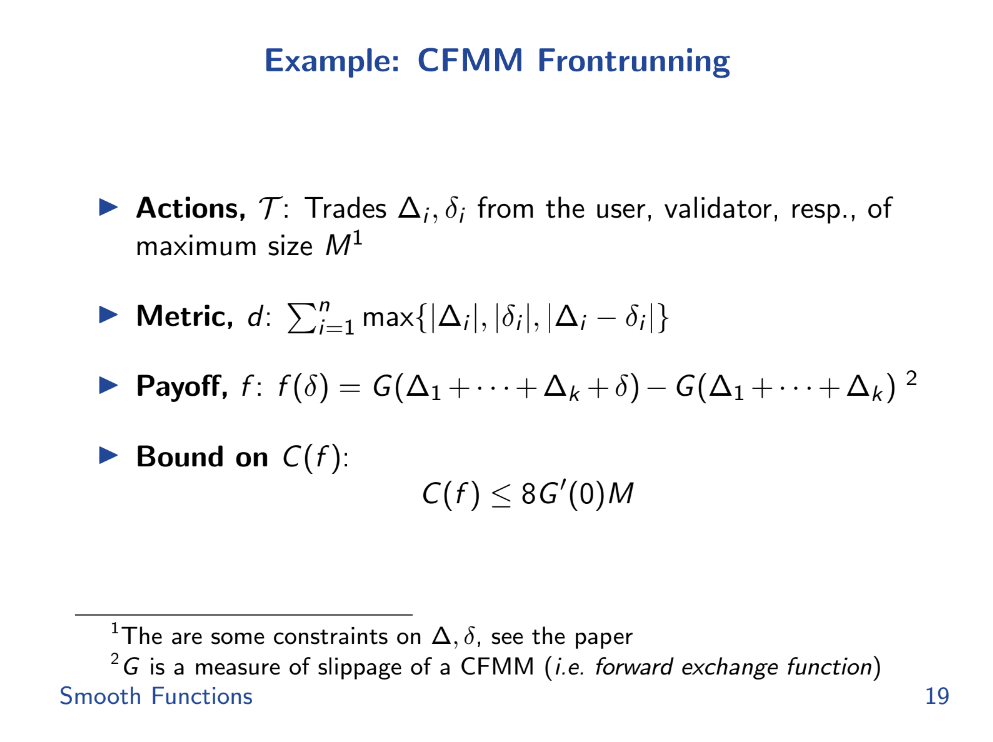

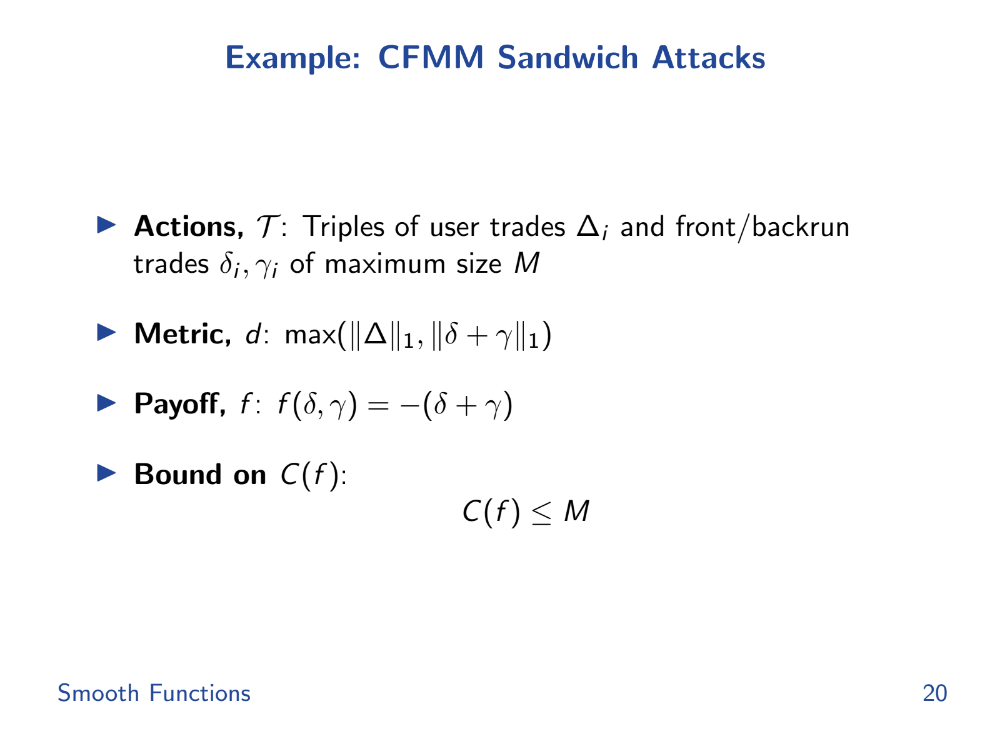

Examples (14:15)

Intuitively, an AMM with smoother price changes (lower gradient) should be less vulnerable to attacks like front-running that exploit rapid price swings. We can mathematically upper bound the potential unfairness or manipulation in an AMM by its smoothness metric.

For front-running, the smoothness of the AMM's pricing function directly controls the max-min fairness gap. But surprisingly, sandwich attacks actually have better (lower) bounds than worst-case front-running in some AMMs like Uniswap.
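A minimal sketch of the intuition, assuming a fee-free constant-product pool with equal reserves. It compares what a user receives when trading first versus right after another trade of the same direction; deeper liquidity makes the price curve flatter and shrinks the gap. The pool sizes and function names are illustrative assumptions.

```python
def amount_out(x, y, dx):
    """Output of token Y for selling dx of token X into a fee-free x * y = k pool."""
    return y - (x * y) / (x + dx)


def ordering_gap(liquidity, user_dx, other_dx):
    """How much less the user receives when another trade is ordered ahead of theirs."""
    goes_first = amount_out(liquidity, liquidity, user_dx)
    x_after = liquidity + other_dx
    y_after = liquidity * liquidity / x_after
    goes_second = amount_out(x_after, y_after, user_dx)
    return goes_first - goes_second


shallow_pool_gap = ordering_gap(1_000.0, 10.0, 10.0)
deep_pool_gap = ordering_gap(100_000.0, 10.0, 10.0)
```

The gap between the two orderings is the quantity a smoothness bound controls: the smaller the price response to a trade, the less any reordering can change the payoff.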

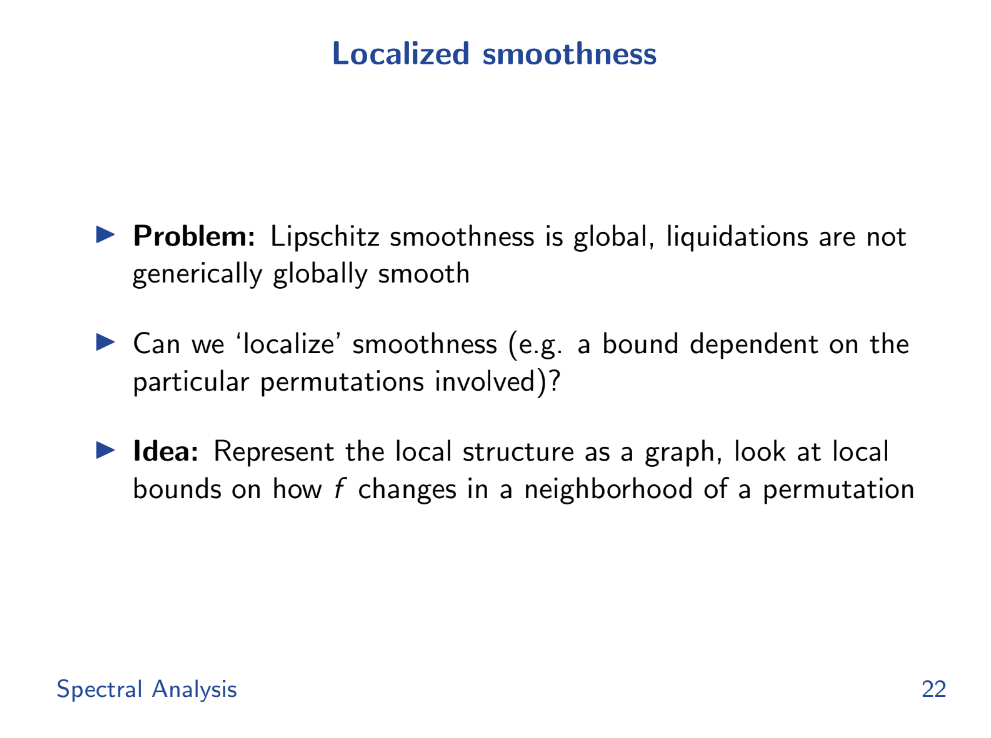

Spectral Analysis

Localized smoothness (15:35)

We'll shift from global "smoothness" metrics to more local ones. Global metrics compare all possible orderings of transactions, but local metrics only look at small changes.

This matters because in real systems like blockchain lanes or state channels, you often only care about local fairness - like between two trades in a lane, not all trades globally.

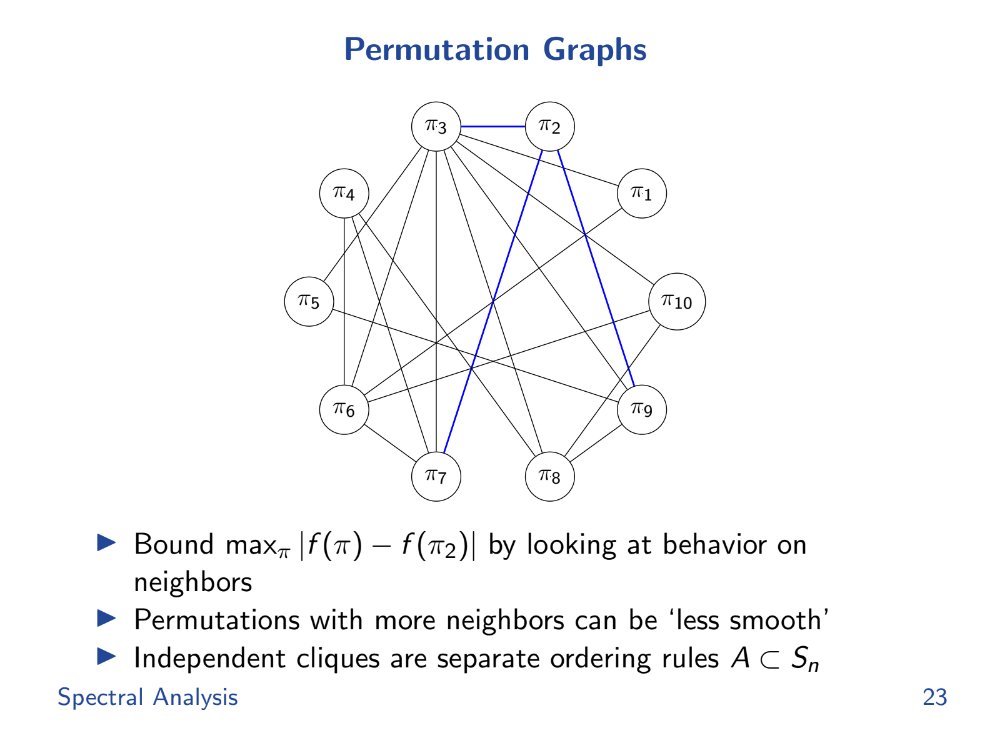

Permutation Graphs (16:30)

To help study this local smoothness, Tarun brings in a field of math called spectral graph theory. It envisions the valid transaction orderings as a graph, with edges for allowed transitions between orderings.

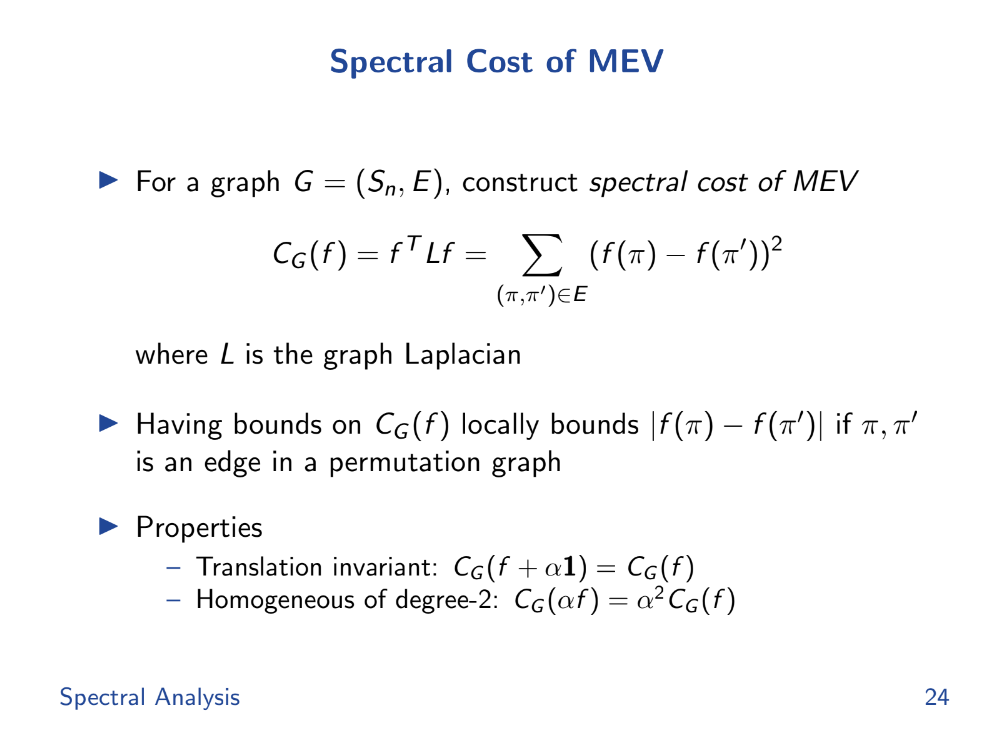

Spectral cost of MEV (17:20)

It defines a "spectral cost of MEV" as the difference in payoff between connected nodes. This measures local smoothness, not all alternatives globally.

Tarun introduces a function Cg and uses some mathematical tools like eigenvalues and the Fourier transform to analyze and bound the deviation in outcomes.

These tools help measure how "smooth" or predictable the system is with different orderings.

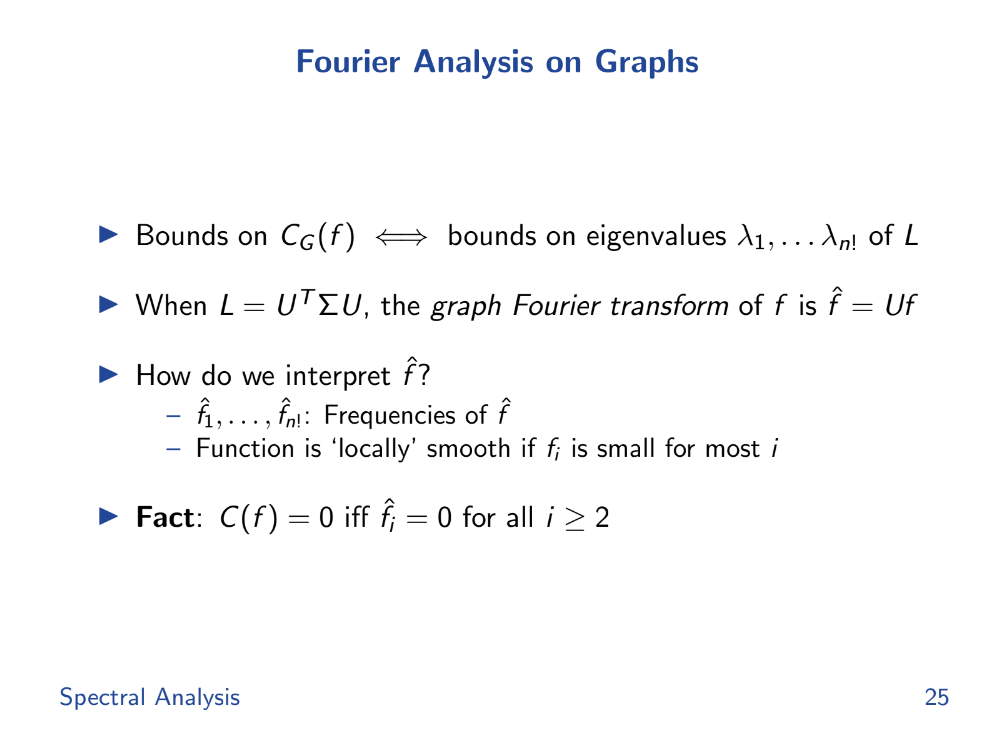

Fourier Analysis (17:50)

If the high frequency Fourier coefficients are zero, the payoff function is constant, and therefore perfectly smooth and fair. The spectral mean of the eigenvalues bounds the unfairness cost.

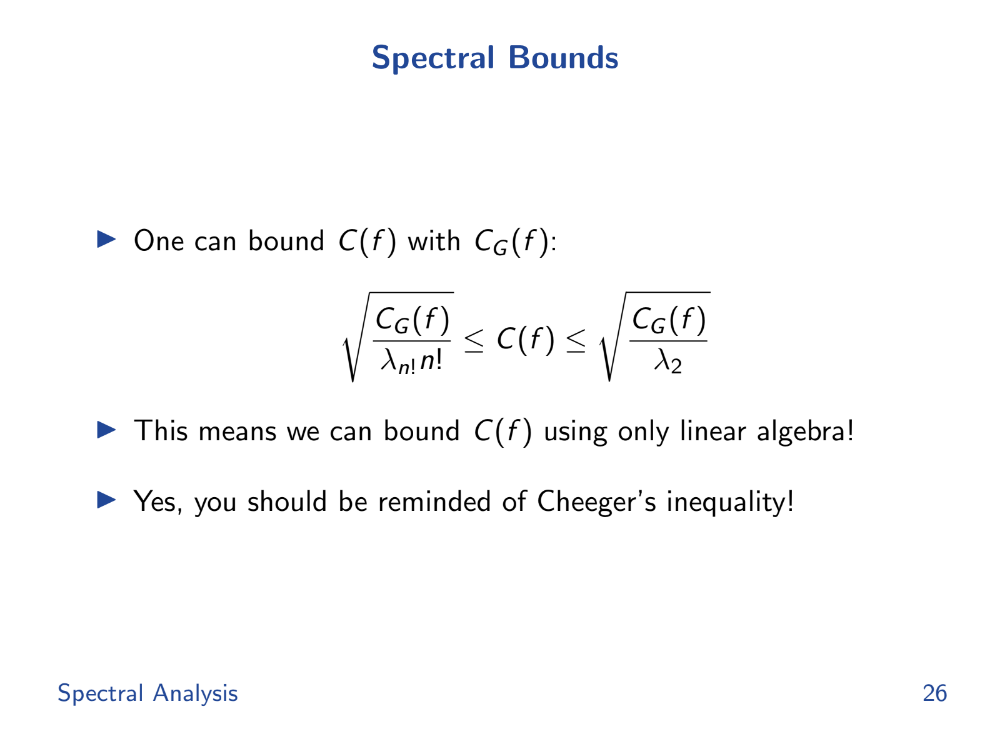

Spectral bounds (18:30)

The spectral bounds, derived from the eigenvalues, help in bounding the local cost. By bounding the local cost, they can ensure that the deviation in outcomes remains within acceptable limits, thus ensuring fairness.

So if we can analyze the spectrum of the graph Laplacian L, we can bound payoff differences between local orderings. This gives a "certificate of fairness".
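A small sketch of a spectral-style certificate, under stated assumptions: orderings are connected when they differ by one swap of adjacent transactions, and the bound used is the generic fact that the Laplacian of a d-regular graph has eigenvalues at most 2d. This is a standard Rayleigh-quotient bound chosen for illustration, not necessarily the exact bound from the talk, and the payoff function is hypothetical.

```python
from itertools import permutations
from math import sqrt


def adjacent_swaps(order):
    """Neighbors of an ordering: swap one pair of adjacent transactions."""
    for i in range(len(order) - 1):
        swapped = list(order)
        swapped[i], swapped[i + 1] = swapped[i + 1], swapped[i]
        yield tuple(swapped)


def local_cost_and_bound(txs, payoff):
    """Return (local_cost, energy, bound) on the adjacent-swap graph.

    txs must be distinct and contain at least two transactions.
    energy is the Laplacian quadratic form: sum over edges of (f(u) - f(v))^2.
    """
    nodes = list(permutations(txs))
    f = {node: payoff(node) for node in nodes}
    mean = sum(f.values()) / len(nodes)
    degree = len(txs) - 1                       # the graph is (n - 1)-regular
    diffs = [f[u] - f[v] for u in nodes for v in adjacent_swaps(u)]
    local_cost = max(abs(d) for d in diffs)
    energy = sum(d * d for d in diffs) / 2      # each edge was visited twice
    spread = sum((value - mean) ** 2 for value in f.values())
    bound = sqrt(2 * degree * spread)           # local_cost^2 <= energy <= 2d * spread
    return local_cost, energy, bound


# Hypothetical payoff: later positions weigh a transaction's value more heavily.
txs = (1, 2, 3, 4)
local_cost, energy, bound = local_cost_and_bound(
    txs, lambda order: sum(i * v for i, v in enumerate(order))
)
```

The bound is loose here, which matches the caveat that spectral bounds often need tightening before they are useful in practice.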

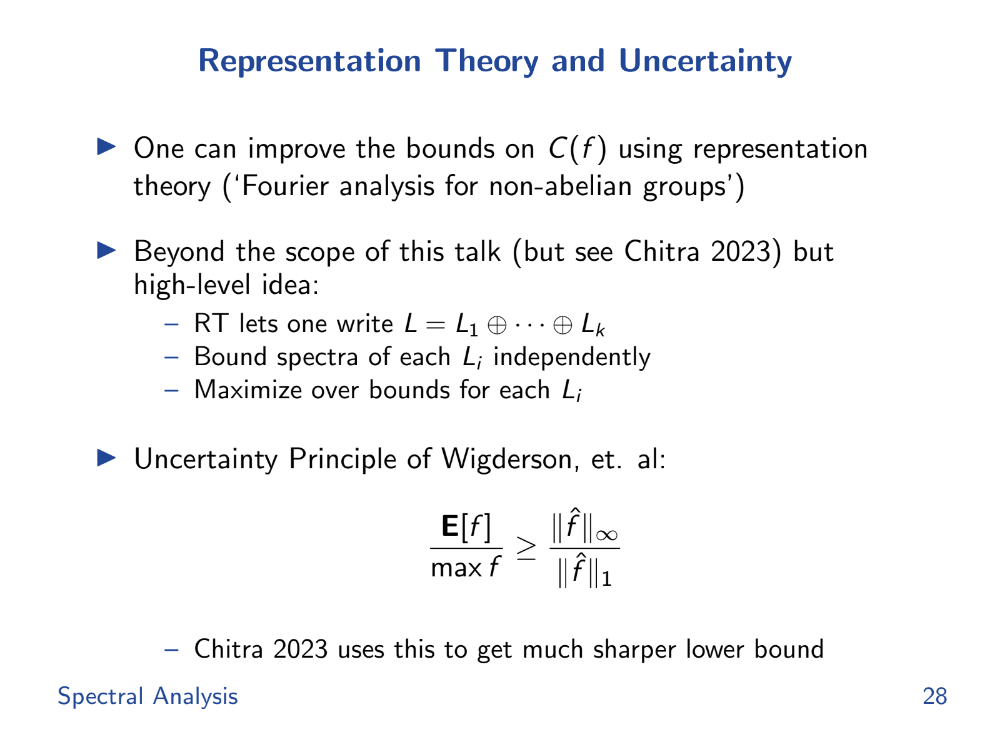

Representation Theory and Uncertainty (20:00)

There are still challenges :

- The graph can be huge, making eigenvalue analysis costly. But for sparse graphs there are shortcuts.

- The bounds are loose. But there are ways to decompose L and tighten the bounds.

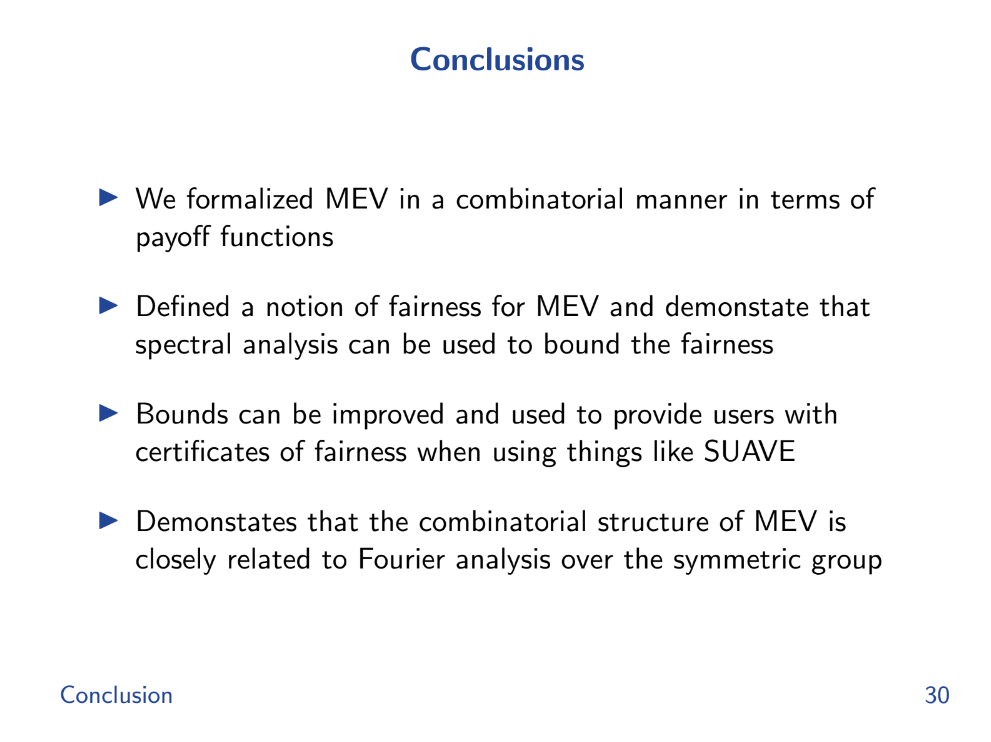

Conclusion (20:20)

Formalized MEV (miner extractable value) using combinatorics rather than programs. This makes the analysis cleaner.

Defined "fairness" as the difference between the worst case and average case payoff over transaction orderings.

We showed how spectral graph theory bounds can limit the smoothness of payoff functions. This helps bound the maximum unfairness.

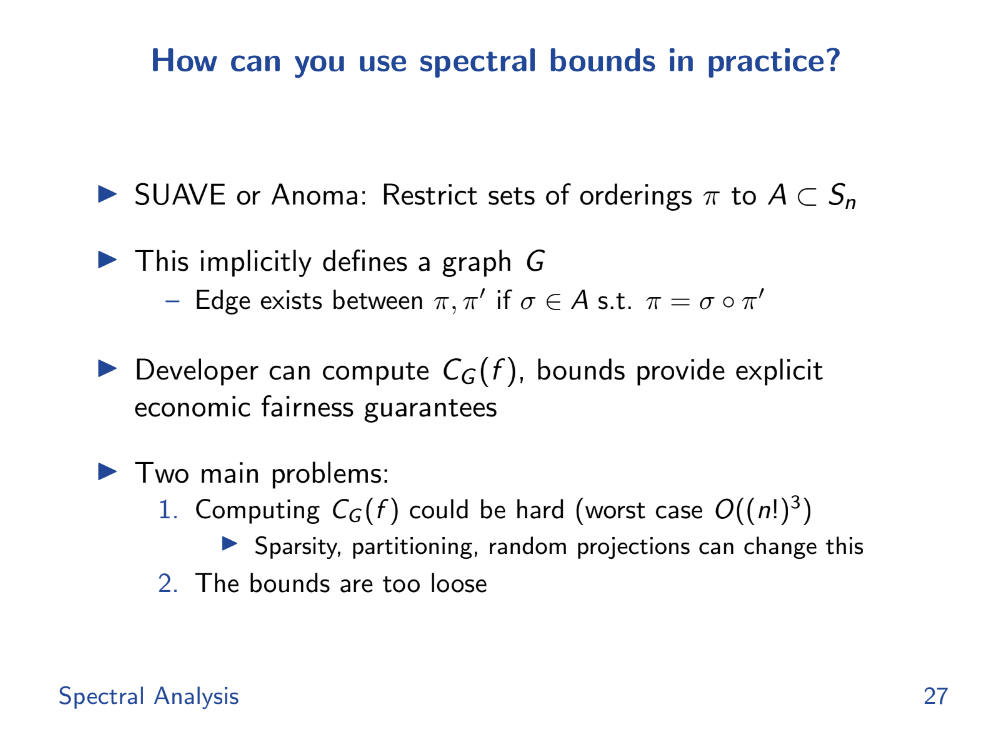

So by doing some linear algebra on the graph of valid orderings, you can get "certificates of fairness" for protocols like Suave or Anoma.

Fourier analysis on the symmetric group of orderings is important for this graph analysis.

Q&A

In Ethereum's decentralized finance (DeFi) ecosystem, liquidations are a big source of MEV. But many DeFi apps now use Chainlink for price feeds, avoiding on-chain price manipulation. So in practice, how much MEV from liquidations still exists ? (21:20)

MEV extraction can be modeled mathematically for any transaction ordering scheme. We can represent any payout scheme (like an auction or lending platform) as a "liquidation basis."

Even if apps use Chainlink to avoid direct manipulation, the ordering of transactions can still impact profits.

Can mechanisms like PBS or PEPC restrict the set of valid transaction orderings, reducing MEV ? (22:45)

Tarun isn't sure about PBS, but he agrees. Solutions like Anoma and SUAVE restrict transaction ordering, which helps bound/reduce MEV.

The key is formally analyzing how much a given constraint improves "fairness" by restricting orderings.

Could you use this MEV analysis to set bounds on a Dutch auction, as an alternative to order-bundled auctions ? (24:00)

Yes, Dutch auctions could potentially be modeled to bound MEV exposure. The core idea is representing payout schemes to reason about transaction ordering effects.

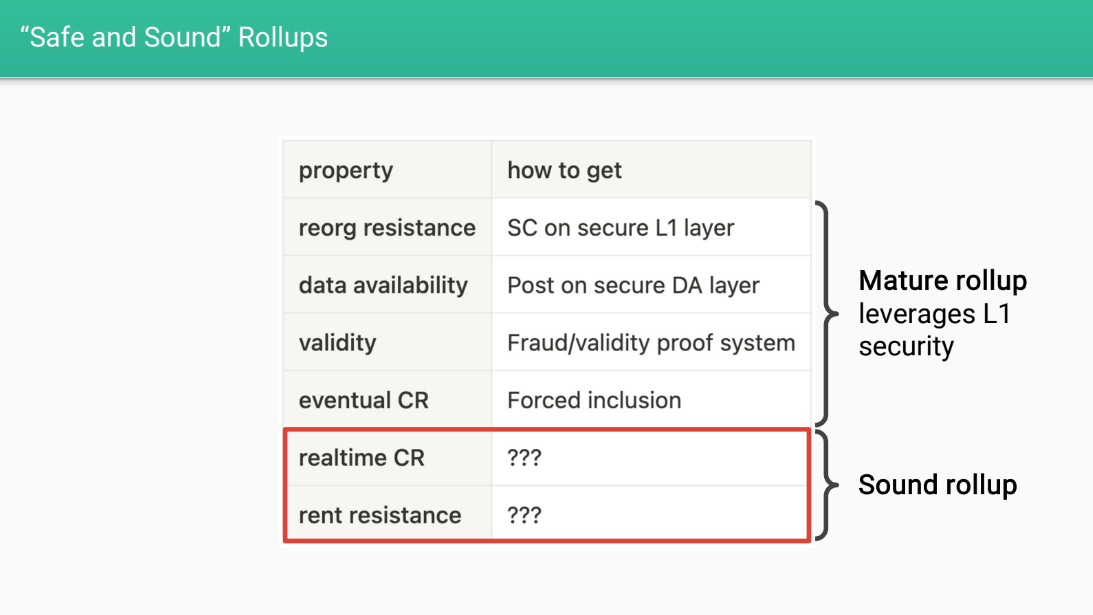

Properties of Mature & Sound Rollups

There are two main types of MEV possible on layer two:

- Macro MEV : where the rollup operator could delay or censor transactions to profit.

- Micro MEV : where validators extract unnecessary fees from transactions, like on layer 1.

Safe and Sound Rollups (1:00)

Davide wants layer 2 rollups to have certain properties to make them "safe and sound".

Firstly, rollups should have security properties inherited from layer 1 Ethereum :

- Reorg resistance : transactions can't be reversed

- Data availability : transaction data is available

- Validity : transactions follow protocol rules

- Eventual censorship resistance : transactions will eventually get confirmed

They should also have economic fairness properties described below

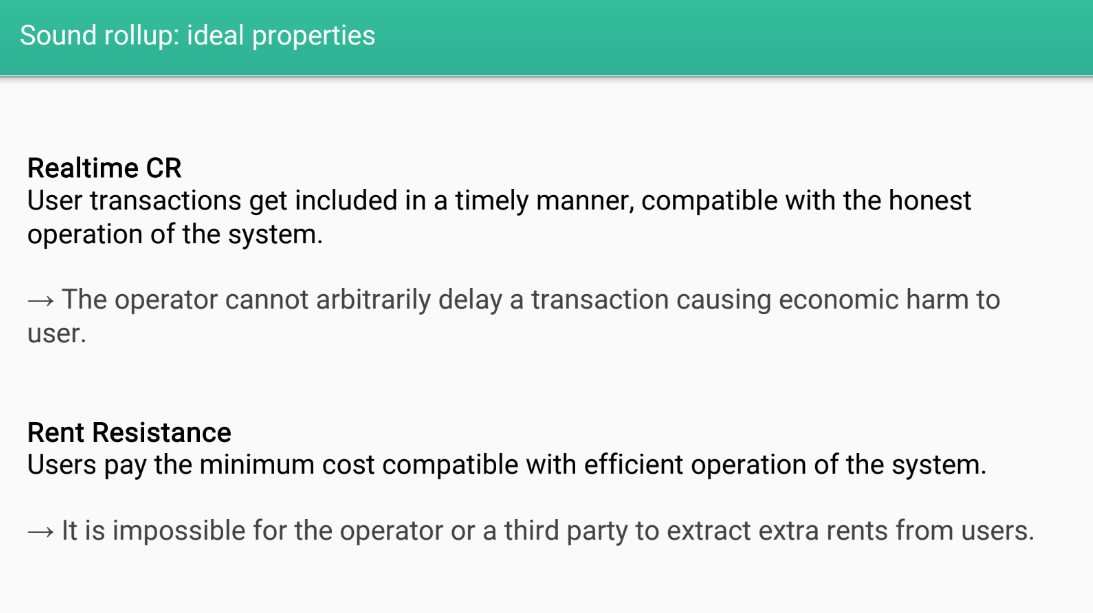

Ideal Properties (2:25)

- Real time censorship resistance : transactions get confirmed quickly without delays

- Rent resistance : users only pay minimum fees needed for operation, no unnecessary rents extracted

With real time CR and rent resistance, the rollup would be more fair and equitable for users

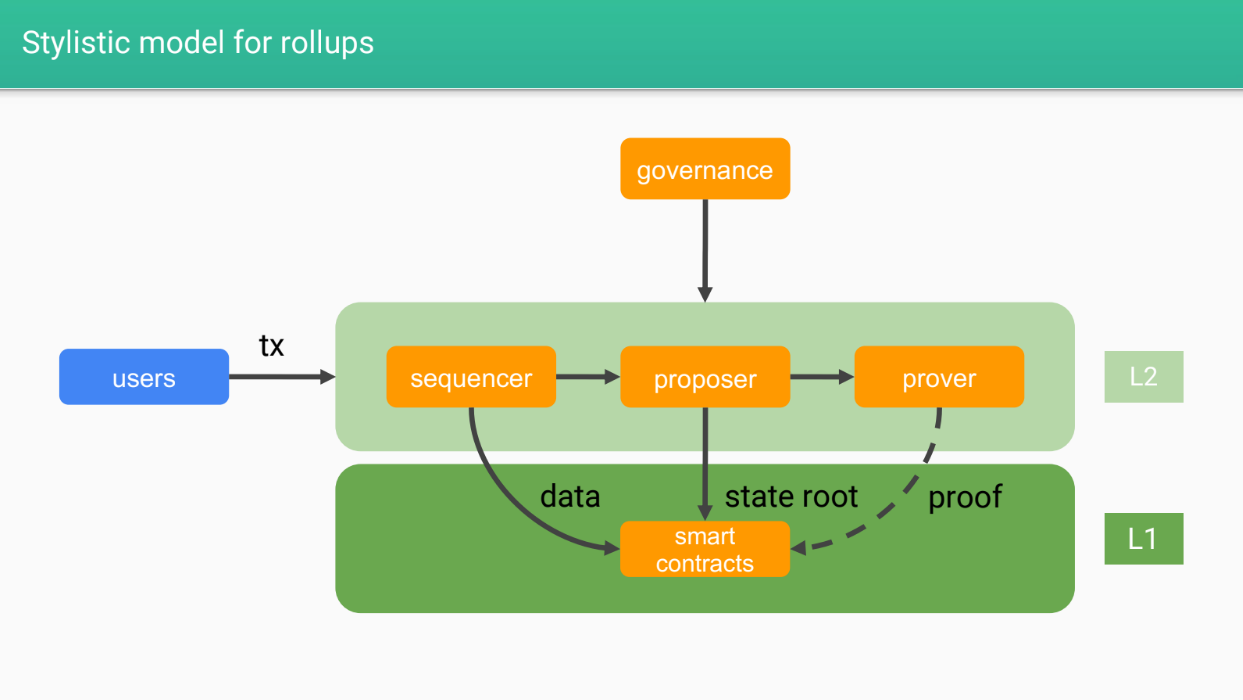

Stylistic model for rollups (3:05)

- Sequencers : Responsible for ordering transactions into blocks/batches and posting to layer 1

- Proposers : Execute transactions and update state on layer 1

- Provers : Check validity of state transitions (optional for some rollups)

- Smart contracts : The rollup logic on layer 1

- Governance : Designs and upgrades the rollup system

- Users : Interact with the rollup, secured by layer 1

The different roles in the rollup system relate to the potential for "macro MEV" and "micro MEV", which are discussed next. The goal is to design rollups to be fair and equitable for users.

Types of L2 MEV

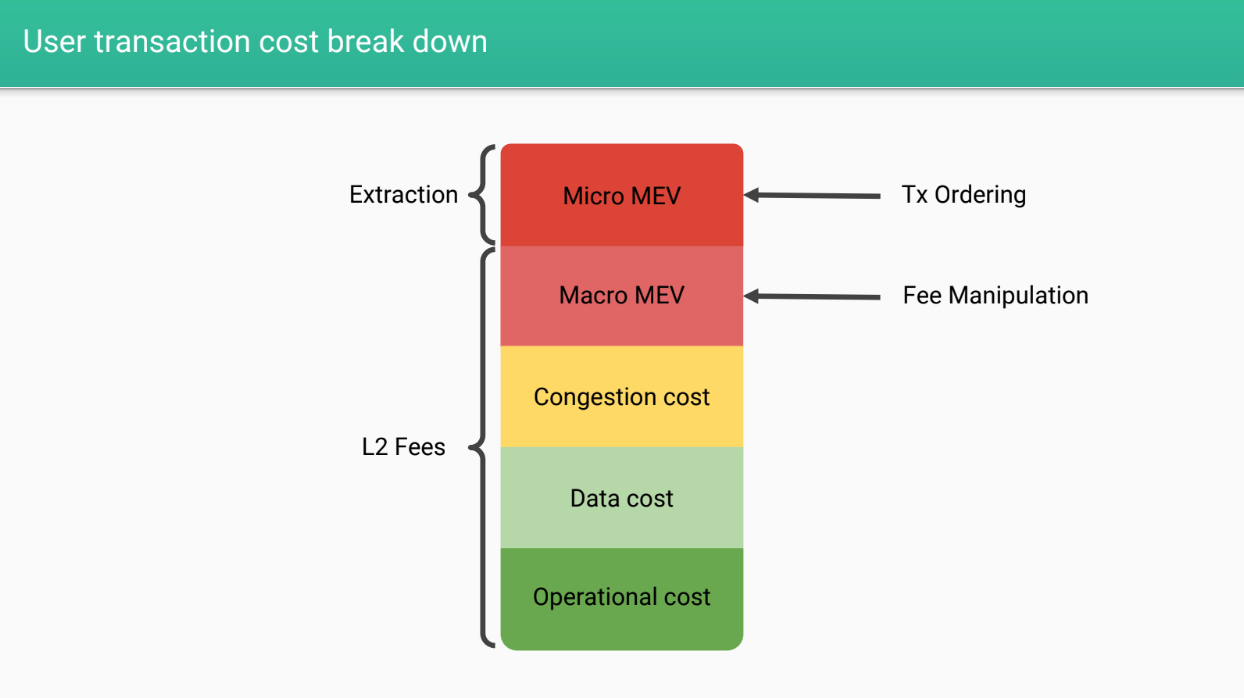

User transaction cost break down (4:30)

When a user sends a transaction on a rollup, they pay :

- Layer 2 fees for operation costs

- Data costs for posting data to layer 1

- Congestion fees for efficient resource allocation

- Potential "macro MEV" : fees extracted by the operator

- Potential "micro MEV" : transaction ordering extraction

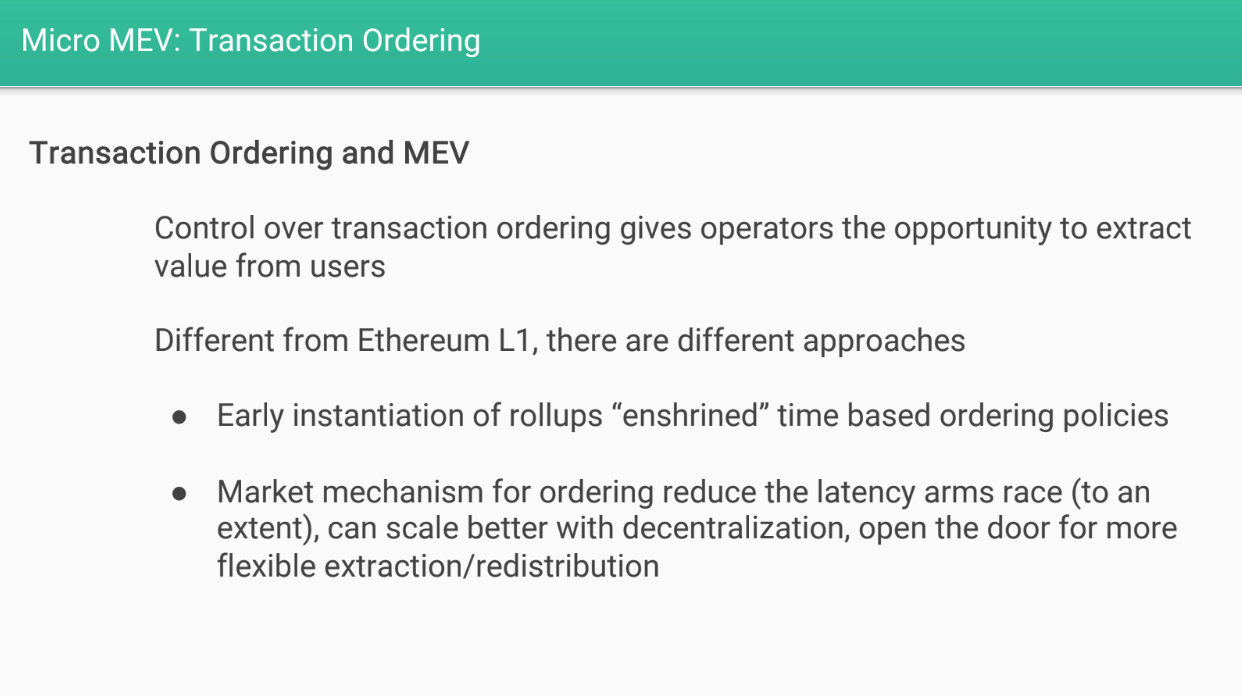

Micro MEV (6:05)

Micro MEV is transaction ordering extraction, like on layer 1. Others have discussed this, so we will focus on macro MEV by the operator.

Macro MEV

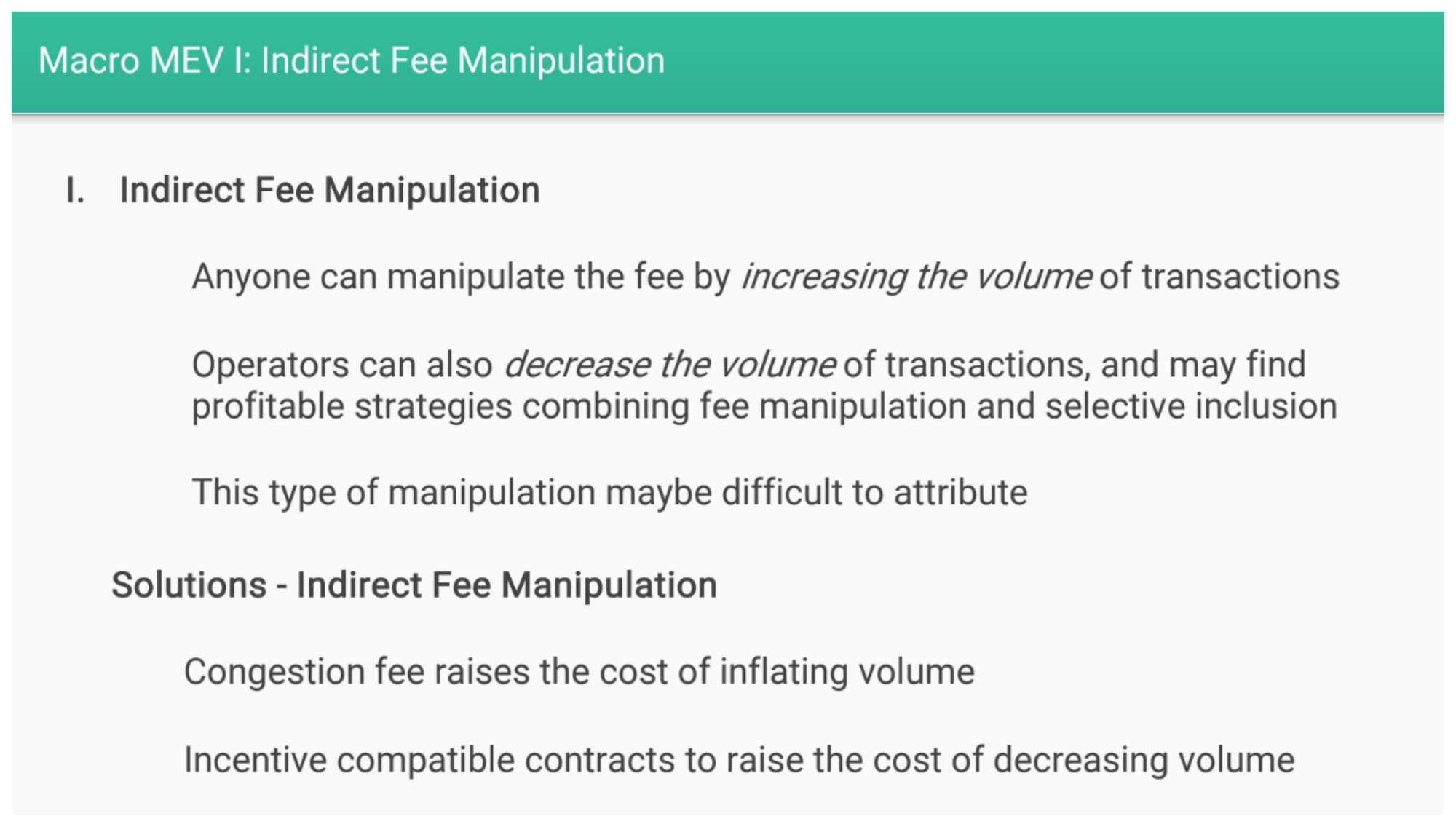

There are 3 main forms of macro MEV:

- Indirect fee manipulation : Increasing or decreasing transaction volume to manipulate fees

- Direct fee manipulation : Operator directly sets high fees

- Delaying transactions : Operator delays transactions to profit from fees

Indirect fee manipulation (7:40)

Anyone can manipulate fees by increasing/decreasing transaction volume. But we can't define precisely who is responsible for this kind of manipulation.

Potential solutions for indirect fee manipulation :

- Careful design of congestion fee and allocation

- Governance could create incentives to raise the cost of manipulating volume for the operator

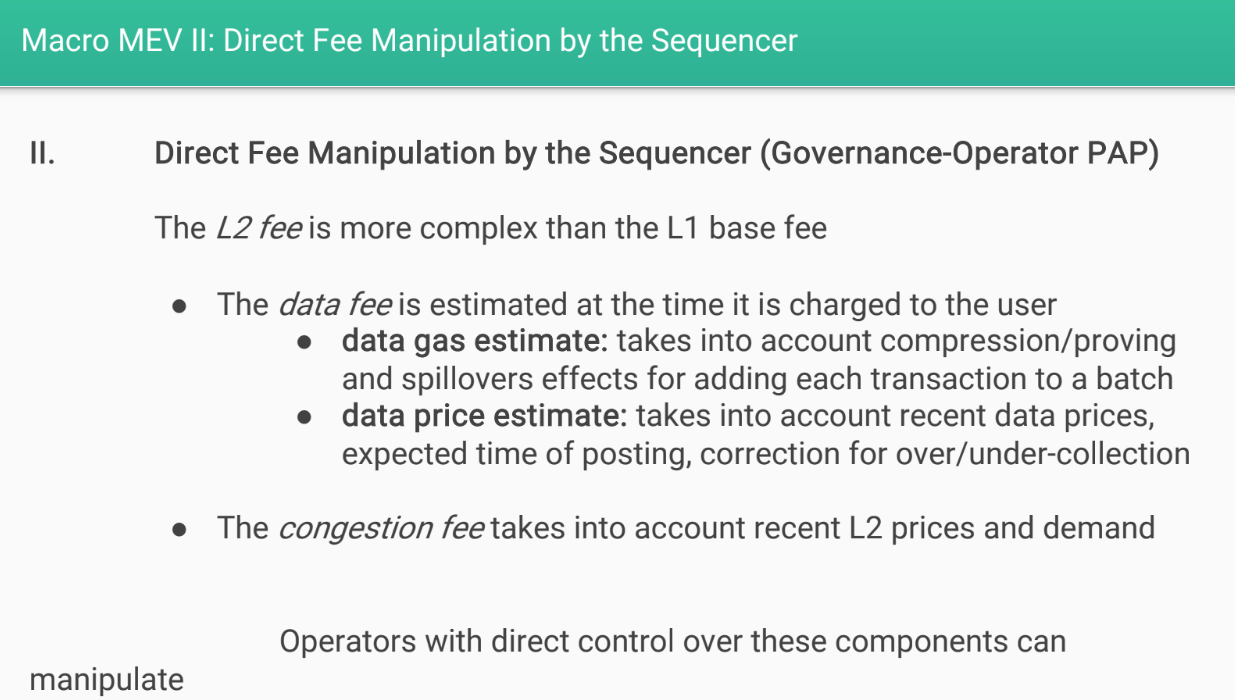

Direct fee manipulation by sequencer (8:45)

Layer 2 systems tend to be more centralized, so they have more control over transactions.

The sequencer controls estimating data fees in advance and setting congestion fees. This provides opportunities to manipulate fees overall, and to discriminate between users by charging them different fees.

Potential solutions for direct fee manipulation :

- Governance contracts to align incentives of sequencer

- Observability into fees and ability to remediate issues

- Decentralize the sequencer role more

- Proof-of-Stake incentives to prevent bad behavior (L2 tokens ?)

Fee market design manipulation (11:30)

The governance controls the parameters of the fee market. For example:

- With EIP-1559 style fees, governance controls the base fee and tip limits

- Governance could artificially limit capacity

- Or fail to increase capacity when demand increases

This could increase fees and rents extracted from users.
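To see how a capacity parameter feeds into fees, here is a sketch of the base fee update rule from Ethereum's EIP-1559. A rollup's fee market may use different rules and constants, and the fixed-demand simulation (demand that does not react to price, block limit of twice the target) is a simplifying assumption made here.

```python
BASE_FEE_MAX_CHANGE_DENOMINATOR = 8  # constant used by EIP-1559 on Ethereum L1


def next_base_fee(base_fee, gas_used, gas_target):
    """EIP-1559 base fee update, using integer arithmetic as in the specification."""
    if gas_used == gas_target:
        return base_fee
    if gas_used > gas_target:
        delta = max(
            base_fee * (gas_used - gas_target) // gas_target // BASE_FEE_MAX_CHANGE_DENOMINATOR,
            1,
        )
        return base_fee + delta
    delta = base_fee * (gas_target - gas_used) // gas_target // BASE_FEE_MAX_CHANGE_DENOMINATOR
    return base_fee - delta


def simulate(base_fee, demand_gas, gas_target, blocks):
    """Base fee after `blocks` blocks of constant demand (simplifying assumption)."""
    for _ in range(blocks):
        gas_used = min(demand_gas, 2 * gas_target)  # block gas limit is twice the target
        base_fee = next_base_fee(base_fee, gas_used, gas_target)
    return base_fee


unchanged_capacity = simulate(100, 15_000_000, 15_000_000, 10)
halved_capacity = simulate(100, 15_000_000, 7_500_000, 10)
```

With demand equal to the target the base fee stays put. Halving the target under the same demand makes every block full, and the base fee climbs by about 12.5% per block.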

Potential solutions for market design manipulation :

- Transparent and participatory governance

- Align governance incentives with users

- Flag fee market changes as high stakes decisions requiring more governance process

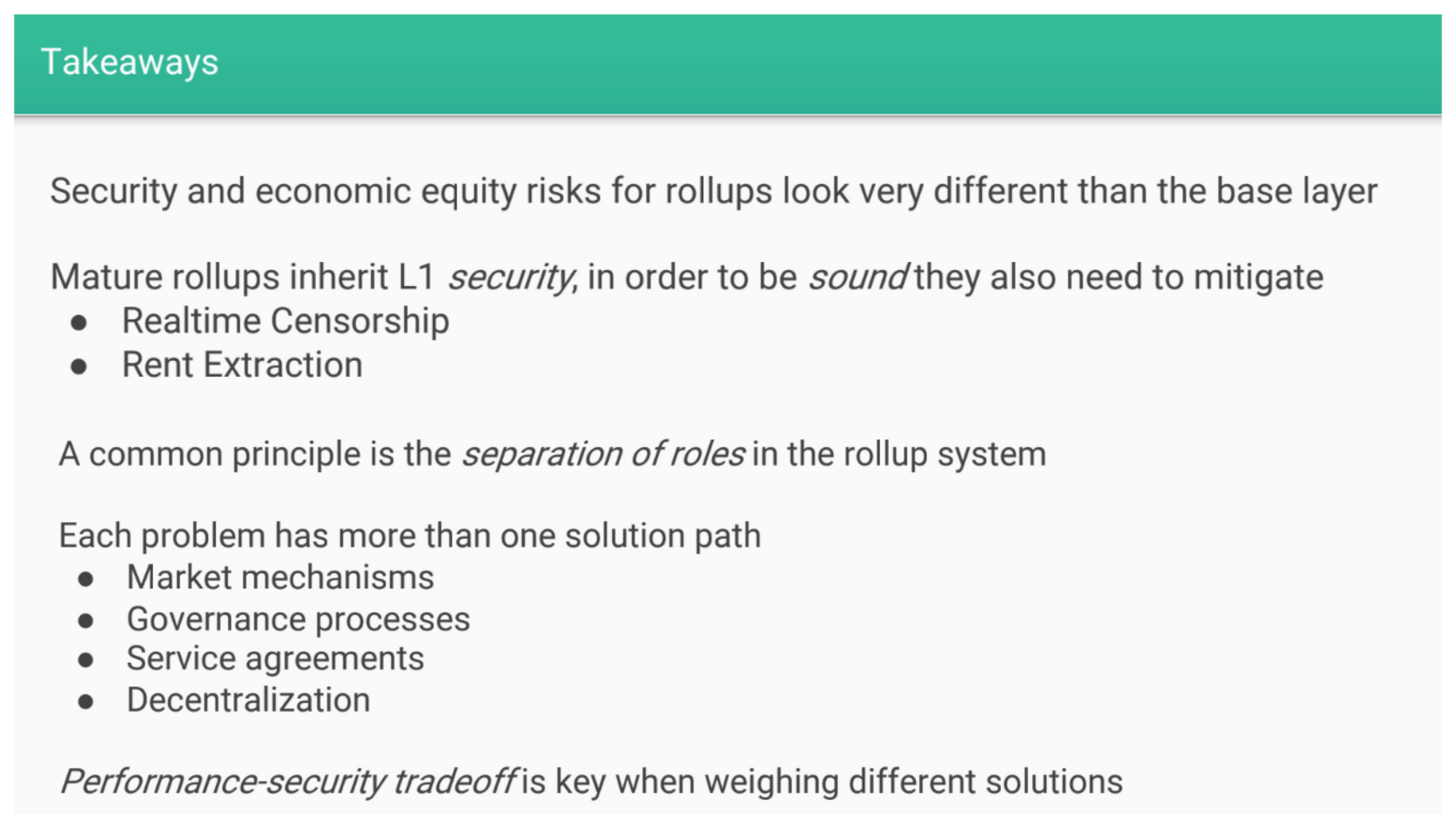

Takeaways (12:55)

Rollups are layer 2 solutions built on top of layer 1 blockchains like Ethereum. They inherit the security of the underlying layer 1, but need to mitigate other risks like censorship on their own.

Rollups need to separate different roles/duties to remain secure and decentralized. For example, keeping the role of sequencer separate from governance.

There are multiple potential solutions to challenges like preventing censorship on rollups. Each solution has trade-offs between performance and security/decentralization.

Rollup operators need to balance these trade-offs when designing their architectures.

They have more flexibility than layer 1 chains since they can utilize governance and agreements in addition to technical decentralization.

Q&A

Do you see a role for a rollup's own token in these attacks that you described ? (15:00)

If a small group controls a large share of the governance token, they could potentially manipulate votes for their own benefit. This is called a "governance attack."

Proper decentralized governance is still an open research problem.

Besides governance attacks, could governance activities enable MEV extraction ? (16:20)

This is a valid consideration : High stakes governance votes could lead to "MEV markets" for votes. It's another direction where MEV issues could arise related to on-chain governance processes.

Transaction Ordering

The View from L2 (and L3)

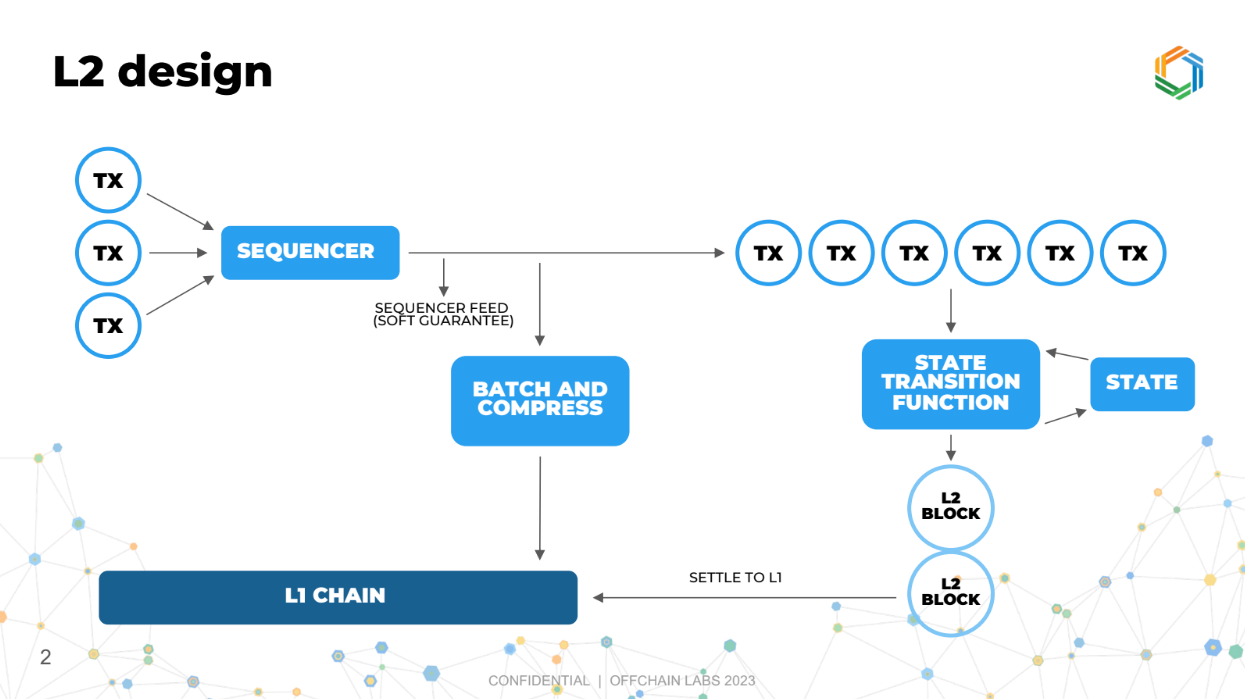

L2 Design (0:00)

The sequencer's job in a layer 2 system is to receive transactions from users and publish a canonical order of transactions. It does not execute or validate transactions.

Execution and state updates happen separately from sequencing in layer 2. The sequencer and execution logic are essentially two separate chains, one chain created by the sequencer to organize transactions, and another chain to process these transactions and update the blockchain

How L2 differs from L1 Ethereum (2:00)

On L2, the sequencing (ordering of transactions) is separate from execution (processing of transactions), which helps in faster processing. Arbitrum has 250 ms blocks, versus 12 seconds for Ethereum.

L2 typically has private transaction pools rather than a public pool. This provides more privacy.

Costs are lower on L2, and its design is more flexible than L1's because it is less mature and less widely used, and hence easier to modify or evolve.

But there is an issue related to how transactions are ordered, which is crucial for ensuring fairness and correctness in the system. We won't think of this problem as solving MEV, but as transaction ordering optimization.

Transaction ordering goals (3:15)

- Low latency : Preserve fast block times.

- Low cost : Minimize operational costs.

- Resist front-running : Don't allow unfair trade execution.

- Capture "MEV" ethically : Monetize some of the arbitrage/efficiency opportunities.

- Avoid centralization : Design a decentralized system.

- Independence of irrelevant transactions : Unrelated transactions should have independent strategies.

Independence of irrelevant transactions (4:25)

Transactions seeking arbitrage opportunities should be able to act independently of each other, if they are unrelated.

This independence makes the system simpler and fairer, avoiding a complex, entangled scenario where everyone's actions affect everyone else.

In an entangled system, having private information could give some participants an unfair advantage, leading to a few big players dominating (monopolies or oligopolies).

We want to prevent this by ensuring transactions are independent of each other, making the system more open and competitive.

New ordering policy

Proposed Policy (6:20)

For transaction ordering, Ed proposes using a "frequent ordering auction"

It involves three key attributes :

- Speed : Orders transactions frequently, like every fraction of a second, and repeats this fast process over and over again.

- Sealed bid auction : Participants submit confidential bids for their transactions.

- Priority gas auction : Bids are offers to pay extra per unit of gas used.

This approach is related to "frequent batch auctions" in economics literature, but called frequent ordering auction here to distinguish it. Frequent batch auctions were an inspiration for this proposed frequent ordering auction system.

Doing priority gas auctions rather than a single bid is important, as Ed will explain later.

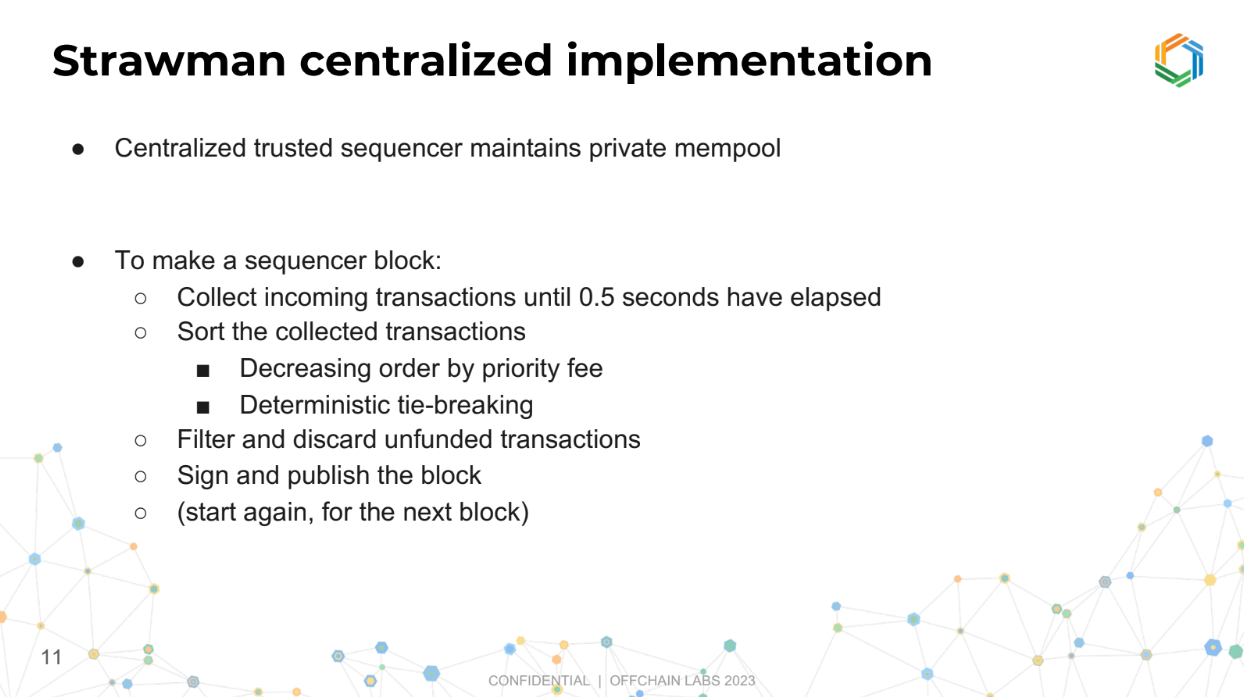

Strawman centralized implementation (7:45)

In this version, there's a single trusted entity called a sequencer that collects all incoming transactions over a short period (like 0.5 second).

Once the collection period is over, it organizes these transactions based on certain criteria (like who's willing to pay more for faster processing).

It also checks and removes any transactions from the list that are not funded properly (like if someone tries to make a transaction but doesn't have the money to cover it).

Then, it finalizes this organized list (called a block), makes it public, and starts the process over for the next set of transactions.
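The round described above can be sketched as follows. The `Tx` fields, the fee formula, and the sender-name tiebreak are hypothetical choices made for illustration; the talk only specifies collecting for a window, ordering by bid, dropping unfunded transactions, and publishing.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tx:
    sender: str
    gas_limit: int
    priority_bid: int   # extra payment offered per unit of gas
    arrival: float      # seconds since the start of the collection window


def build_block(pending, balances, base_fee, window_end):
    """One round of a centralized frequent ordering auction (illustrative sketch)."""
    # 1. Collect everything that arrived within the window.
    batch = [tx for tx in pending if tx.arrival <= window_end]
    # 2. Order by per-gas bid, highest first; arrival time inside the window is ignored.
    #    Ties are broken deterministically (here by sender name, an assumption).
    batch.sort(key=lambda tx: (-tx.priority_bid, tx.sender))
    # 3. Drop transactions whose sender cannot cover the maximum fee.
    remaining = dict(balances)
    block = []
    for tx in batch:
        cost = tx.gas_limit * (base_fee + tx.priority_bid)
        if remaining.get(tx.sender, 0) >= cost:
            remaining[tx.sender] -= cost
            block.append(tx)
    # 4. The caller publishes the block and starts the next window.
    return block


pending = [
    Tx("alice", 21_000, 2, 0.1),
    Tx("bob", 21_000, 5, 0.4),
    Tx("carol", 21_000, 9, 0.3),   # highest bid, but unfunded
    Tx("dave", 21_000, 1, 0.7),    # arrives after the 0.5 s window closes
]
balances = {"alice": 10**9, "bob": 10**9, "carol": 0, "dave": 10**9}
block = build_block(pending, balances, base_fee=10, window_end=0.5)
```

Because ordering depends only on the bid, arriving a few milliseconds earlier within the window buys nothing, which removes the latency race inside each round.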

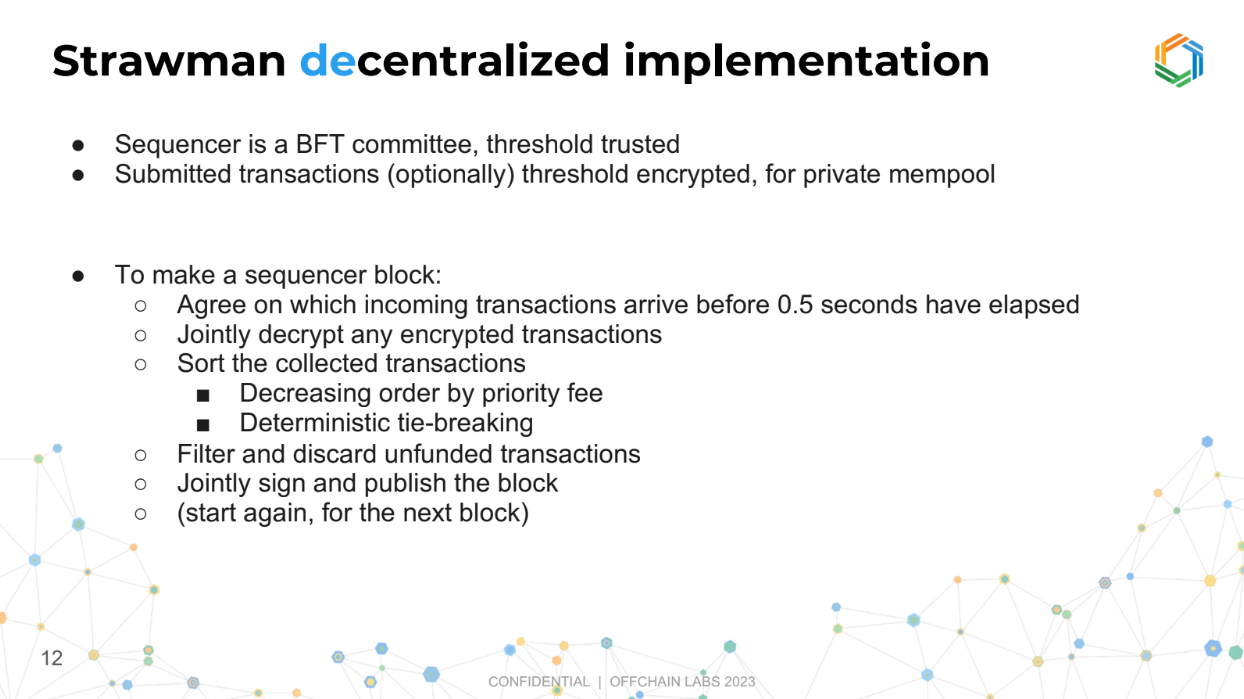

Strawman decentralized implementation (9:30)

Instead of a single trusted entity, a group of entities (a committee) works together to manage the transactions.

They collect all transactions, and once the collection period ends, they jointly organize and check them, similar to the centralized version.

This committee then collectively approves the organized block of transactions and makes it public before moving on to the next set.

Ultimately, governance will decide :

- Centralized for faster blocks and lower latency

- Decentralized for more trust but slower operation

Economics : examples (11:50)

Single arbitrage opportunity

Imagine there's one special opportunity and everyone wants to grab it. Whoever's transaction is ordered first gets the opportunity.

It’s like an auction where everyone submits their bids secretly (sealed bid), but unlike regular auctions, everyone has to pay, not just the winner (all pay).

This method is known to be fair and efficient, and the strategies for participants are well understood.

Two independent arbitrage opportunities

There are two separate opportunities (A and B). If you're going for opportunity A, you don’t care about those going for opportunity B, as they don’t affect your chances.

Each opportunity has its own mini auction, making the process straightforward and independent, which they find desirable.

Because bids are per unit of gas, combining opportunities A and B in one transaction means paying the bid on more total gas, so there is nothing to gain from entangling them. This makes the strategy easier to understand and follow, as you only focus on the opportunity you're interested in, without worrying about others.

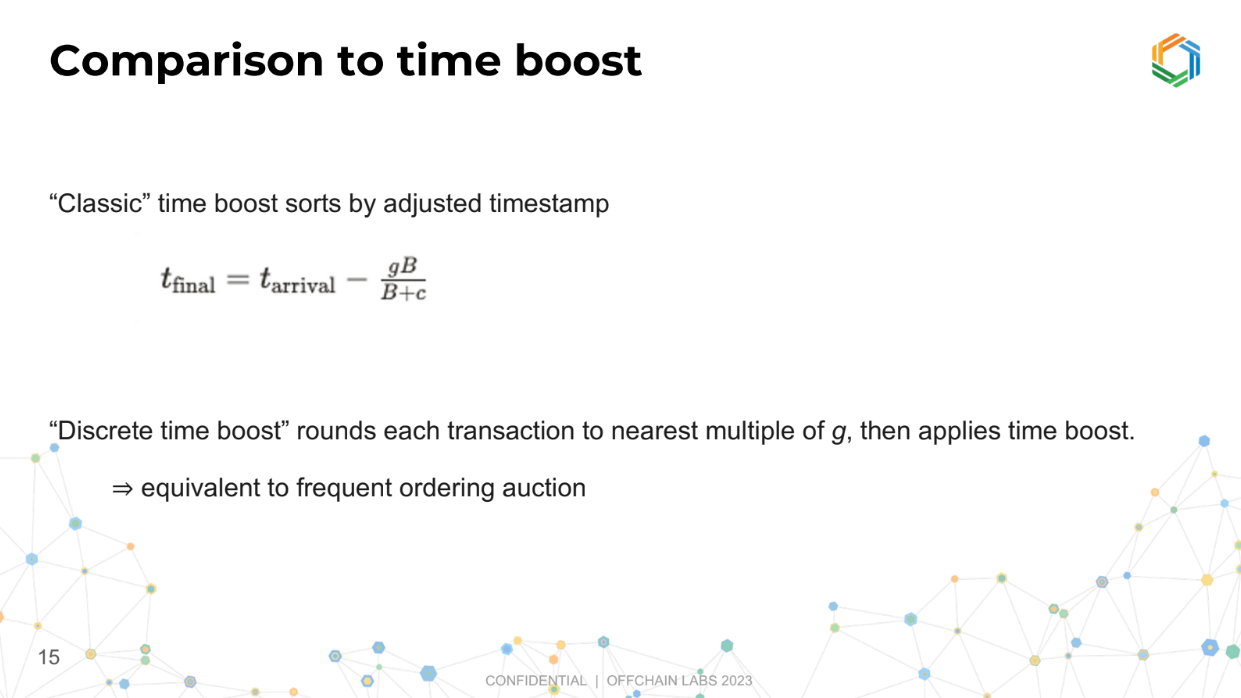

Comparison to Time Boost (14:30)

In Time Boost, the actual time a transaction arrives gets adjusted based on a formula. The formula considers your bid (how much you're willing to pay), a constant for normalization, and the maximum time advantage you can buy. Even if you bid a huge amount, the time advantage caps at a certain point.
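One functional form that matches this description is boost = g * bid / (bid + c), with g the maximum time advantage and c the normalization constant. Treat the exact form and the default values below as assumptions for illustration rather than a statement of the deployed formula.

```python
def time_boost(bid, max_advantage, c):
    """Time advantage bought by `bid`; approaches but never exceeds max_advantage."""
    return max_advantage * bid / (bid + c)


def adjusted_time(arrival, bid, max_advantage=0.5, c=1.0):
    """Arrival time used for ordering after subtracting the purchased advantage."""
    return arrival - time_boost(bid, max_advantage, c)


no_bid = time_boost(0.0, 0.5, 1.0)
modest_bid = time_boost(1.0, 0.5, 1.0)
huge_bid = time_boost(1e12, 0.5, 1.0)
```

A zero bid buys no advantage, a bid equal to c buys half of the maximum, and even an enormous bid stays under the cap, so latency still matters beyond the capped window.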

A variation called Discrete Time Boost rounds the transaction's time to a fixed interval and then applies the same formula.

We can think of the frequent ordering auction as a frequent batch auction (grouping transactions together for processing) applied to blockchain, or a modified version of Time Boost. This is up to us.

FOA with bundles (16:25)

This is a new idea about allowing people to submit bundles of transactions together. The sequencer (the entity organizing transactions) should accept these bundles.

Guarantees for bundles :

- All transactions in a bundle will be in the same sequencer block.

- Transactions in a bundle with the same bid will be processed consecutively in the order they are in the bundle.

This just requires a tweak to the deterministic tiebreak rules to make sure this happens, and Ed believes it's a straightforward adjustment.
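One way such a tiebreak could look, as a sketch: sort by bid first, then by a bundle identifier, then by position inside the bundle. The tuple layout and the use of a string identifier as the tiebreak are assumptions made here; a standalone transaction is treated as a bundle of one.

```python
def order_with_bundles(txs):
    """txs: list of (bid, bundle_id, position_in_bundle, name) tuples.

    Highest bid first. Among equal bids, transactions sharing a bundle_id stay
    consecutive and keep their bundle order.
    """
    return sorted(txs, key=lambda tx: (-tx[0], tx[1], tx[2]))


txs = [
    (5, "bundle-1", 1, "b1-second"),
    (7, "solo-x", 0, "x"),
    (5, "solo-y", 0, "y"),
    (5, "bundle-1", 0, "b1-first"),
]
ordered_names = [name for _, _, _, name in order_with_bundles(txs)]
```

Keeping every transaction of a bundle in the same sequencer block is a separate guarantee that the batch-collection step has to enforce; this sketch covers only the ordering within a block.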

Implementation notes (17:25)

The L2 chain should collect the extra fees (priority fees) from transactions since it's already equipped to do so. This way, there's no need to create a new system to handle these fees.

But currently Arbitrum doesn't collect priority fees for backwards compatibility. This was done because many people were accidentally submitting transactions with high priority fees which didn't provide any benefit, so to keep things simple, they ignored these fees.

The solution is to create a new transaction type that is identical but collects priority fees. It's also important to support bundles (groups of transactions processed together), and the plan is to include this feature.

Q&A

Transaction fees don't go to the rollup operator, but to a governance treasury. Doesn't this incentivize the operator to take off-chain payments to influence transaction order and profit (called "frontrunning") ? (20:00)

There are two ways the sequencer (rollup operator) could extract profits :

- Censoring transactions to delay them

- Injecting their own frontrunning transactions

For a centralized sequencer, governance relies on trust they won't frontrun. If suspected, they'd be fired.

For a decentralized sequencer committee, frontrunning is harder but could happen via collusion. Threshold encryption of transactions before ordering helps prevent this.

This ordering scheme resembles a sealed-bid auction, though transactions can still be gossiped before sequencing (22:45)

Ed agrees. For decentralized sequencers, gossip is reasonable but frontrunning is still a concern. Threshold encryption helps address this.

How does the encryption scheme work to prevent frontrunning ? (24:20)

- The committee agrees on a set of encrypted transactions in a time window.

- The committee publicly commits to this set.

- Then privately, the committee decrypts and sorts/filters transactions.

- Finally, they jointly sign the sorted transactions.

This prevents frontrunning because the contents stay encrypted until after the committee commits to the order.

But doesn't this require publishing all transactions for verification ? (26:00)

Ed disagrees. The committee knows the transactions, but outsiders don't see the contents. Some schemes allow later proving if a valid transaction was wrongly discarded.

What are the potential approaches for decentralized sequencing in rollups ? (27:00)

Both centralized and decentralized versions will be built. Users can choose which one to submit to. Whole chains decide which model to use based on governance.

Transitioning from centralized to decentralized sequencer requires paying the decentralized committee to incentivize proper behavior. The decentralized committee would be known, trusted entities to disincentivize bad behavior.

Custom ordering rules are possible for app chains if needed.