MEV Workshop at SBC 23 Part 2

Summary of the MEV Workshop at the Science of Blockchain Conference 2023. The videos covered are about robust decentralization and MEV.

Intro

Phil argues geographic decentralization is the most important property for cryptocurrencies and blockchain systems.

Cryptocurrencies aim to be "decentralized". But decentralization must be global to really disperse power. Protocols should be designed so nodes can be run from anywhere in the world, not just from a few concentrated regions.

Winning a Nobel Prize, jk (2:15)

Phil believes researching geographic decentralization techniques could be as impactful as winning a Nobel Prize. Even though there is no Nobel Prize for computer science, he jokes that you can "win" by advancing this research area.

For consensus researchers, this should be a top priority especially during the "bear market", which is a good time to focus on building technology, rather than just speculation.

Geographic decentralization > Consensus (3:00)

Rather than just studying abstract models like Byzantine Fault Tolerance, researchers should focus on geographic decentralization, because it is the main property that makes systems like Ethereum truly decentralized.

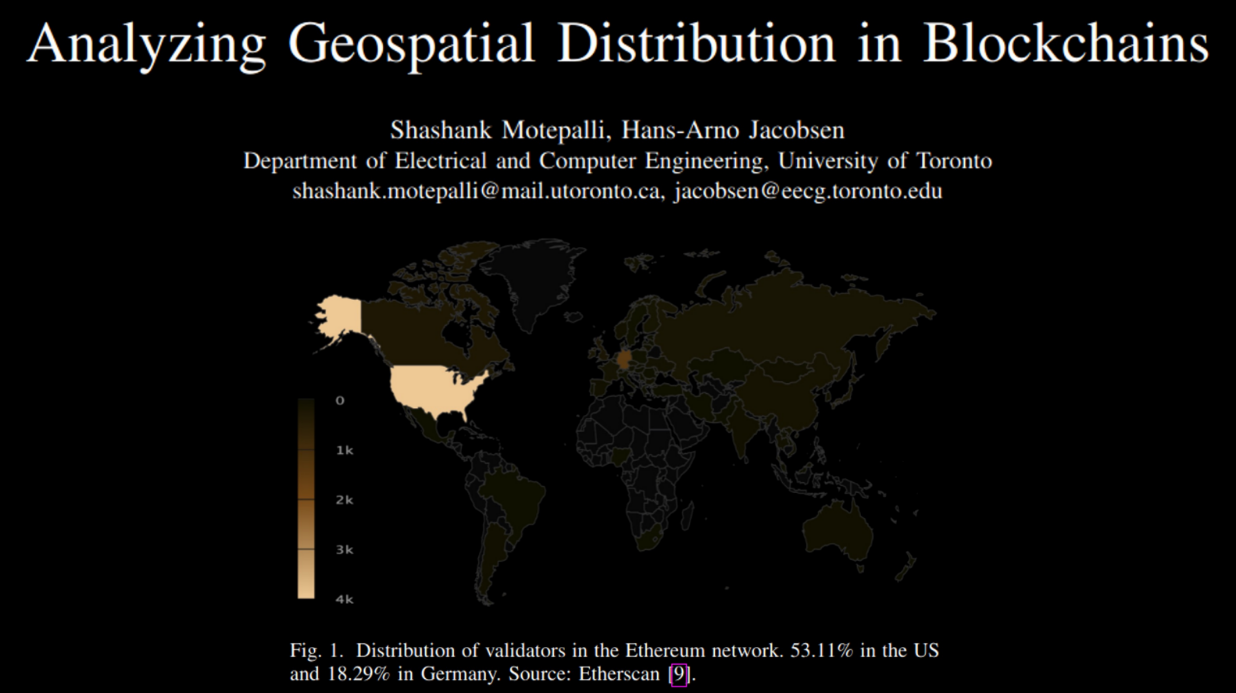

Geospatial Distribution (5:00)

Right now, 53.11% of Ethereum validators are located in the United States, and 18.29% in Germany. This means Ethereum is not as decentralized as people might think, since more than 70% of validators are concentrated in just two countries.

Having nodes clustered in a few regions makes the network more vulnerable - if there are internet or power outages in those areas, a large part of the network could go offline. Spreading the nodes out across different countries and continents improves the network's resilience and fault tolerance.

...But why do we care ? (6:55)

Global decentralization is important for many reasons, even if technically challenging :

- To comply with regulations in different countries. If all the computers running a cryptocurrency are in one country, it may not comply with regulations in other countries.

- To be "neutral" and avoid having to make tough ethical choices. There is an idea in tech that systems should be neutral and avoid ethical dilemmas, much as Switzerland is viewed as neutral.

- For use cases like cross-border payments that require geographic decentralization to work smoothly.

- To withstand disasters or conflicts. If all the computers are in one place, a disaster could destroy the system. Spreading them worldwide makes the system more resilient.

- For fairness. If all the computers are in one region, people elsewhere lose input and control. Spreading them globally allows more fair participation.

- For network effects and cooperation. A globally decentralized system allows more cooperation and growth of the network.

The recipe

What is geographical decentralization ? (11:00)

Phil believes the most important type of decentralization for crypto/blockchain systems is decentralization of power, specifically economic power.

Technical forms of decentralization (like number of validators) matter only insofar as they impact the decentralization of economic power.

A metric to study (12:25)

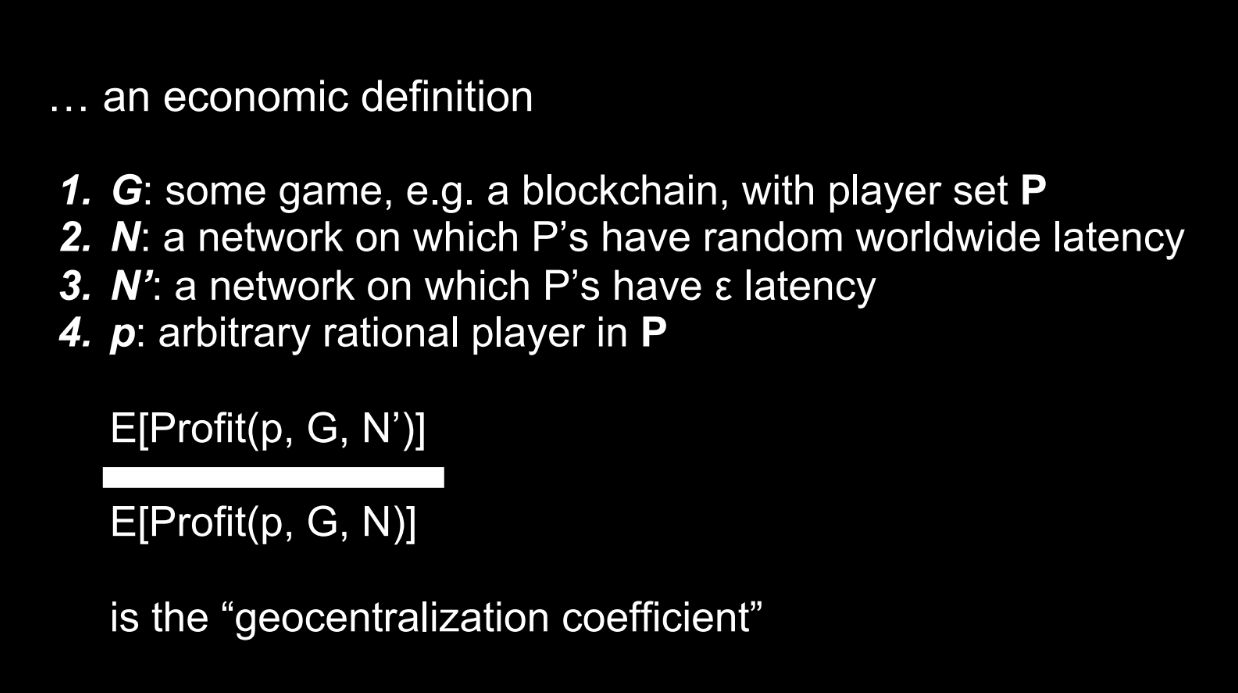

Some terminology :

- G = an economic game/mechanism

- P = the set of players in game G

- N = a network where players experience real-world latencies

- N' = a network where players have minimal latency to each other

- p = an arbitrary rational player in P

The "geocentralization coefficient" refers to the ratio of a player's expected profit in the minimal latency network N' versus the real-world latency network N.

A high coefficient means there is more incentive for players to be geographically co-located. The goal is to keep this coefficient low, to reduce incentives for geographic centralization.

Some examples

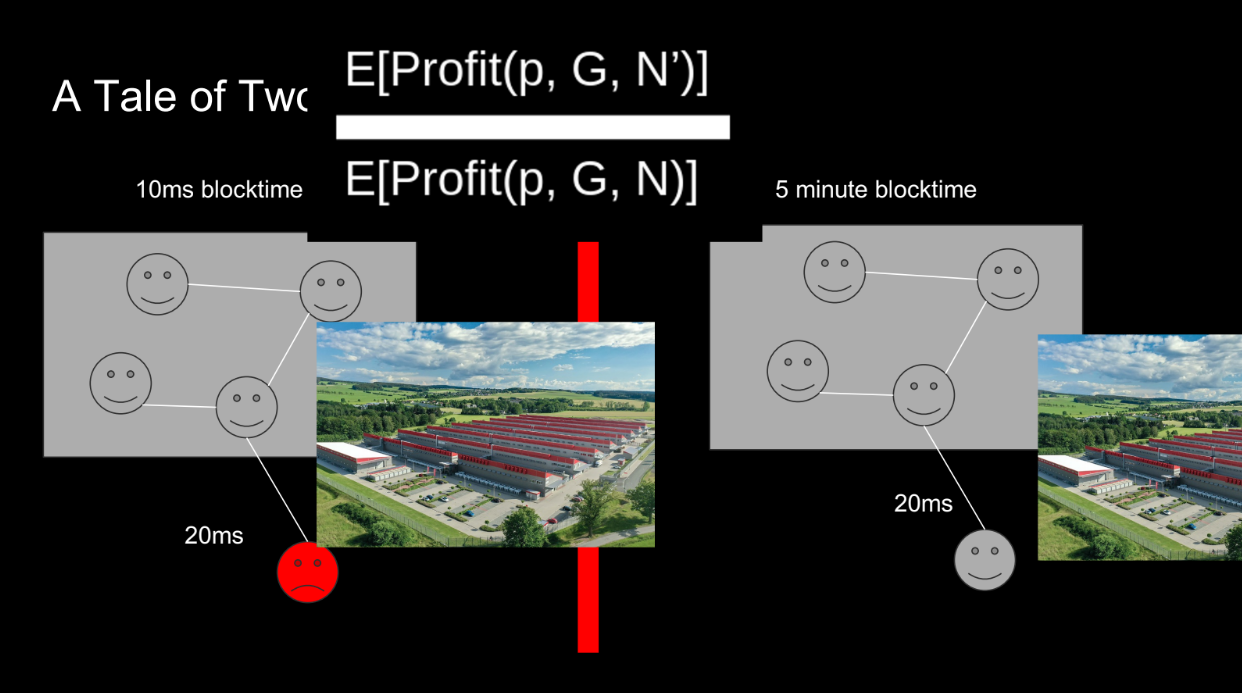

Fast blockchains have higher coefficient (14:00)

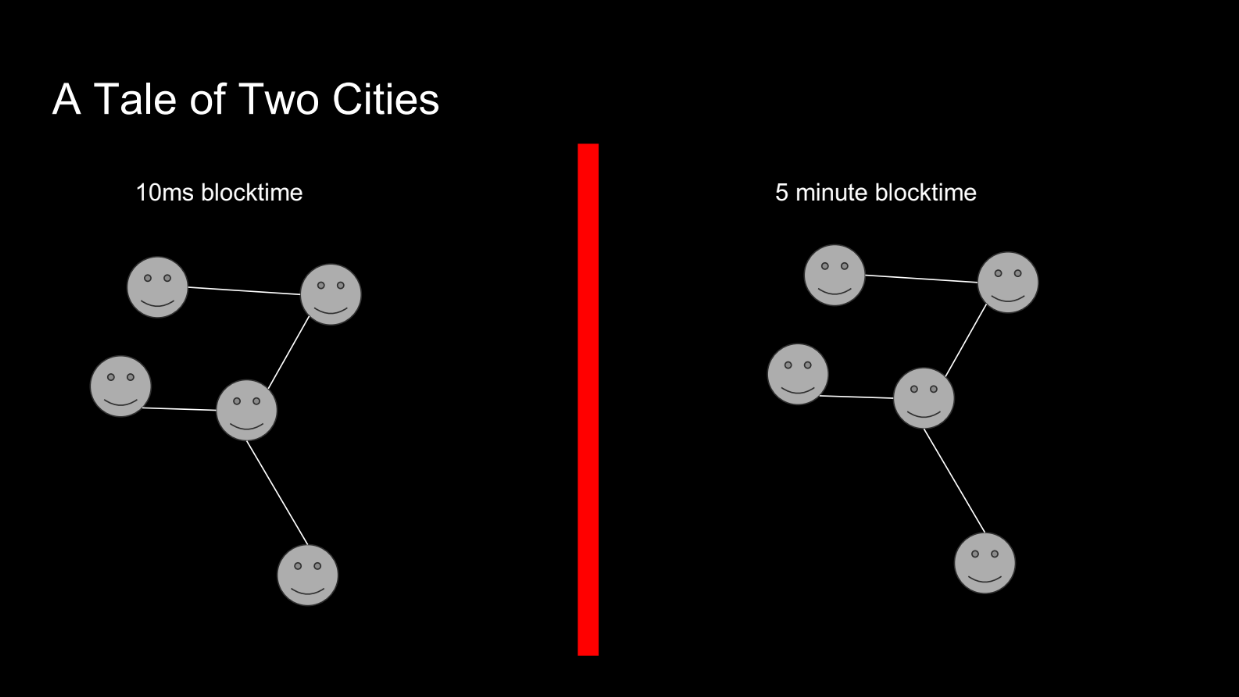

Let's give an example comparing 2 blockchains : one with fast 10ms blocks, one with 5 min blocks :

- In the fast blockchain, a node with just 20ms higher latency cannot participate, so its profit is zero.

- In the slower blockchain, 20ms doesn't matter much. The profits are similar.

Therefore, the fast blockchain has a higher "geocentralization coefficient" - it incentivizes nodes to be geographically closer. So a fast blockchain like Solana is less geographically decentralized than a slower one like Ethereum.
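The comparison can be sketched numerically. This is a minimal illustration, not a model from the talk: the profit function `expected_profit` (a reward scaled by the share of the block time left after latency) and the 0.1 ms co-located latency are assumptions made only for this example.

```python
import math

def expected_profit(reward, latency_s, block_time_s):
    """Hypothetical profit model (an assumption, not from the talk):
    the node earns the share of the reward matching the fraction of the
    block time left after its latency, and nothing once latency exceeds
    the block time."""
    return reward * max(0.0, 1.0 - latency_s / block_time_s)

def geocentralization_coefficient(profit_min_latency, profit_real_latency):
    """Ratio of expected profit in the minimal-latency network N' to
    expected profit in the real-latency network N."""
    if profit_real_latency == 0.0:
        # The player earns nothing in N: the ratio is unbounded.
        return math.inf if profit_min_latency > 0.0 else 1.0
    return profit_min_latency / profit_real_latency

COLOCATED_LATENCY_S = 0.0001  # assumed latency in N'
EXTRA_LATENCY_S = 0.020       # the 20 ms of extra latency from the example

def coefficient_for(block_time_s, reward=1.0):
    profit_n_prime = expected_profit(reward, COLOCATED_LATENCY_S, block_time_s)
    profit_n = expected_profit(
        reward, COLOCATED_LATENCY_S + EXTRA_LATENCY_S, block_time_s
    )
    return geocentralization_coefficient(profit_n_prime, profit_n)

fast_chain = coefficient_for(0.010)  # 10 ms blocks
slow_chain = coefficient_for(300.0)  # 5 min blocks
print("fast chain coefficient:", fast_chain)
print("slow chain coefficient:", slow_chain)
```

Under these assumptions the fast chain's coefficient is unbounded (the distant node earns nothing), while the slow chain's coefficient stays very close to 1.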

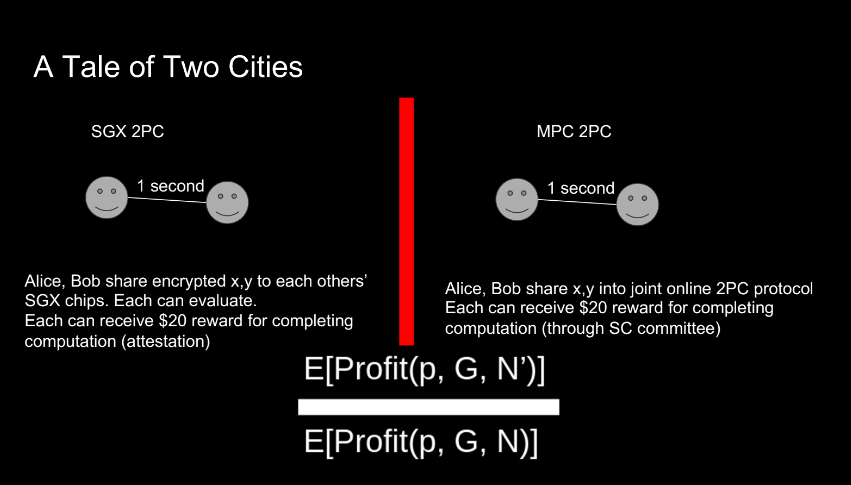

SGX and MPC (16:45)

Let's try another example with 2 parties trying to compute a result and get paid $20 each :

- MPC protocols require multiple rounds of communication between parties to compute a result securely. With higher network latency between parties, the time to complete the MPC protocol increases.

- Using SGX, they can encrypt inputs and get the output fast with 1 round of messaging. Latency doesn't matter much.

Adding MPC to blockchain systems can be useful, but protocols should be designed carefully to avoid increased geographic centralization pressures.
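A rough way to see why round count matters is to model completion time as the number of rounds times the round-trip latency. The sketch below is only illustrative: the round count for MPC (50), the latencies (1 ms co-located, 100 ms global) and the function name are hypothetical, and compute time is ignored.

```python
def completion_time_ms(rounds, one_way_latency_ms):
    """Time to finish a protocol, modelling each round as one round trip.
    Compute time is ignored (an assumption of this sketch)."""
    return rounds * 2 * one_way_latency_ms

MPC_ROUNDS = 50      # hypothetical multi-round MPC protocol
SGX_ROUNDS = 1       # single round of messaging, as described above
COLOCATED_MS = 1.0   # hypothetical latency between co-located parties
GLOBAL_MS = 100.0    # hypothetical latency between distant parties

# Extra delay each protocol suffers when the parties are far apart.
mpc_penalty_ms = (completion_time_ms(MPC_ROUNDS, GLOBAL_MS)
                  - completion_time_ms(MPC_ROUNDS, COLOCATED_MS))
sgx_penalty_ms = (completion_time_ms(SGX_ROUNDS, GLOBAL_MS)
                  - completion_time_ms(SGX_ROUNDS, COLOCATED_MS))

print("MPC extra delay (ms):", mpc_penalty_ms)
print("SGX extra delay (ms):", sgx_penalty_ms)
```

The latency penalty grows linearly with the number of rounds, which is why a multi-round protocol gives parties a stronger reason to co-locate than a single-round one.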

SGX FTW ? (19:00)

Phil concludes that SGX protocols can be more geographically decentralized than MPC protocols.

This is counterintuitive, since MPC is often seen as more decentralized. But because SGX has lower latency needs than multi-round MPC protocols, it has a lower "geocentralization coefficient", meaning less incentive for geographic centralization.

Q&A

How does the actual physical nature of the Internet inform this concept of geographical distribution ? (20:15)

The internet itself is not evenly distributed globally. This limits how decentralized cryptocurrencies built on top of it can be.

More research is needed into how the physical internet impacts decentralization.

Would proof of work be considered superior to proof of stake for geographic decentralization ? (22:15)

Proof-of-work (PoW) cryptocurrencies may be more geographically decentralized than proof-of-stake (PoS), because PoW miners have incentives to locate near cheap electricity.

However, PoW also has issues like mining monopolies. So it's not clear if PoW is ultimately better for geographic decentralization.

Would you agree that the shorter the block times, the more decentralization we can have in the builder market ? (24:00)

Shorter block times may help decentralize the "builder market", meaning more block builders could compete to construct blocks. But it's an open research question, not a definitive advantage.

Are you aware of any research done on how do you incentivize geographic decentralization? (26:30)

One very early experiment was an SGX-based geolocation protocol, with incentives possibly layered on top of it. But Phil doesn't have an answer here.

What do you think of the viability of improving the physical infrastructure network, such as providing decentralized databases or incentivizing solo stakers ? (29:00)

Improving physical infrastructure like decentralized databases could help decentralization.

Governments should consider subsidizing data centers to reduce compute costs. This makes it easier for decentralized networks to spread globally. This is important not only for blockchains but also emerging technologies like AI. Compute resources will be very valuable in the future.

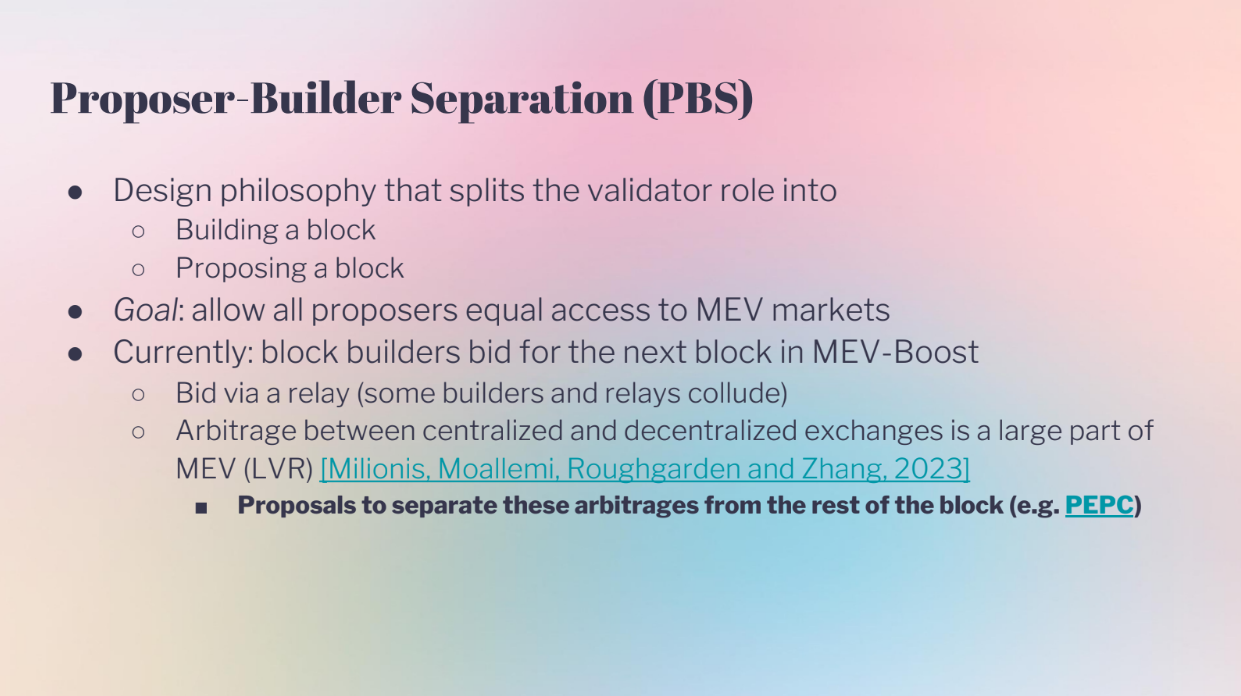

Proposer Builder Separation "PBS" (0:00)

PBS separates the role of validators into proposing blocks and building blocks. This helps decentralize Ethereum by allowing anyone to propose blocks, while leaving the complex block building to specialists. The goal is to allow equal access to MEV (maximal extractable value).

But currently, PBS auctions like MEV-Boost have issues :

- Bids happen through relays which could collude or have latency advantages.

- Arbitrage between exchanges is a big source of MEV

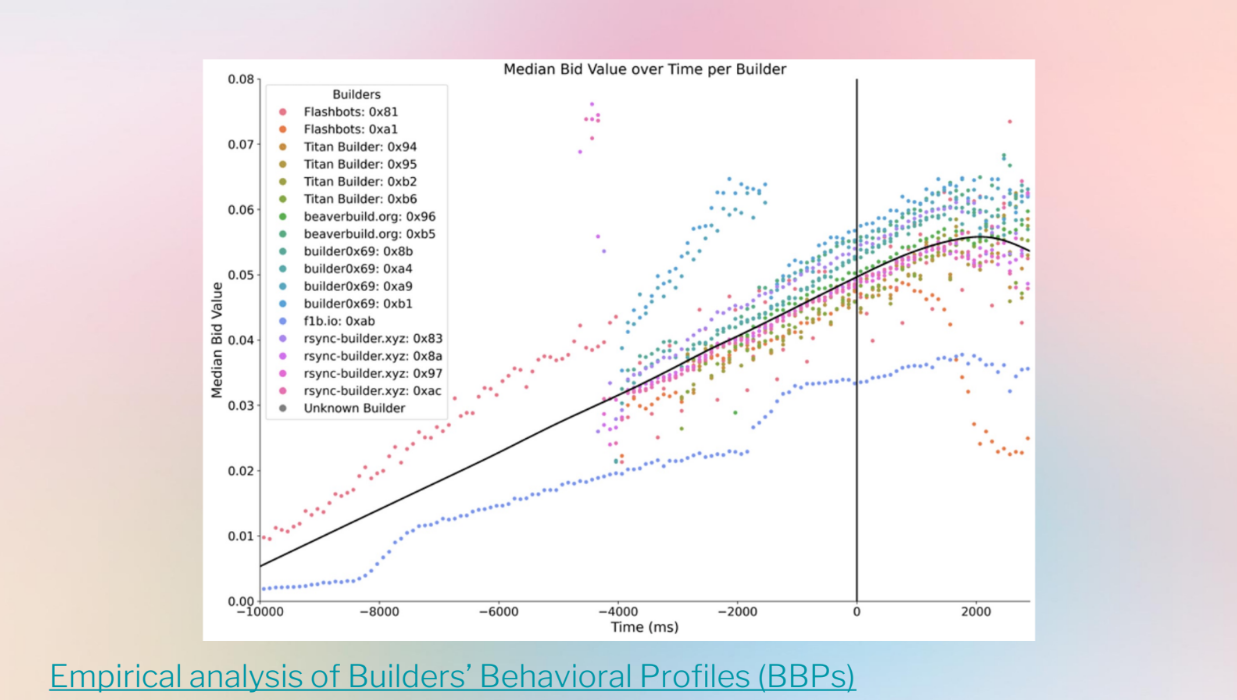

- The auction data shows messy and varying bid strategies between builders

Goals (2:30)

The Robust Incentives Group is researching how to model PBS theoretically, to understand long-term dynamics rather than just the current messy data. They look at :

- How bidder latency affects profits and auction revenue

- Bidder strategies without modeling centralization or inventory risks

The goal is to improve PBS auctions like MEV-Boost by modeling bidder behaviors dynamically. This could help make MEV access more equal and decentralized long-term.

Classic example (3:10)

The classic example is as follows :

- There is one item being auctioned.

- The item is worth the same to every bidder, but nobody knows the exact value.

- Each bidder gets a "signal" (guess) of what the value might be.

- Some bidders might overestimate or underestimate the actual value.

This models a PBS auction where builders bid for MEV in a block, as the actual MEV value is unknown during the bidding, and builders have different guesses on what a block will be worth.

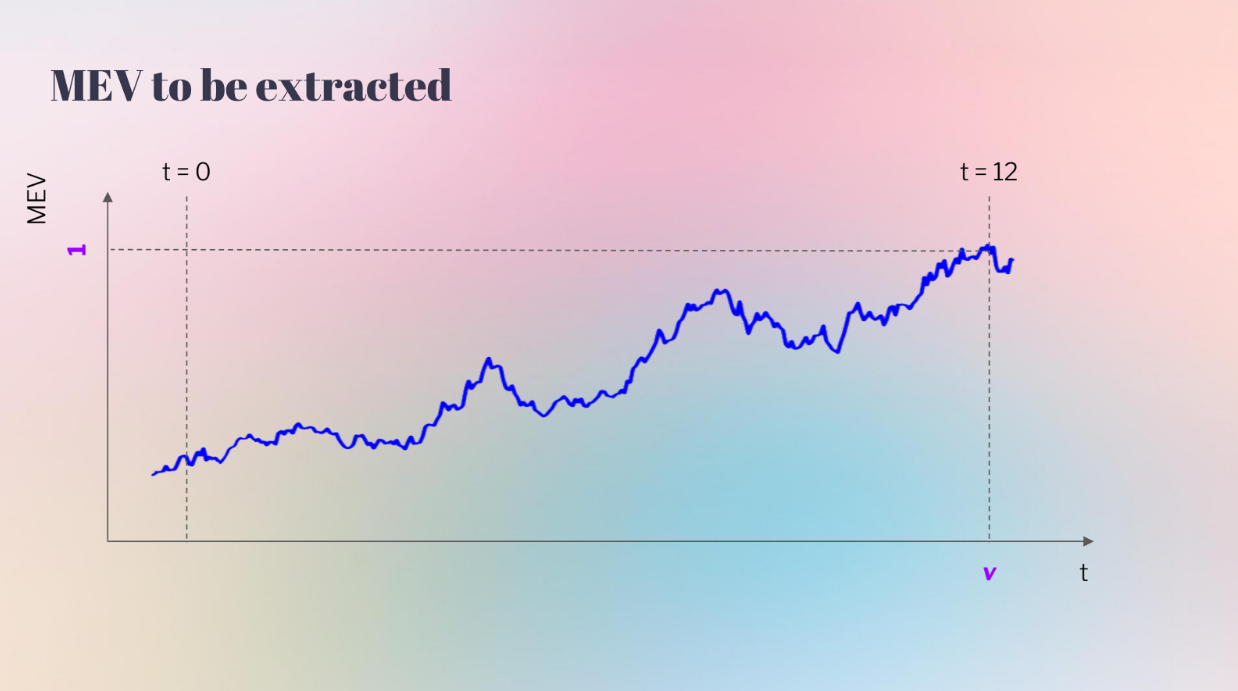

But this model doesn't include bidder latency. A more realistic model shows MEV value changing throughout the slot time :

- At the start (t0) the MEV value is uncertain

- By the end (t12) the actual value is known

- Faster bidders can wait longer to bid, closer to the real value

So bidder 2 who can bid later (lower latency) has an advantage over bidder 1 who must bid earlier with more uncertainty. This results in higher profits for lower latency bidders.

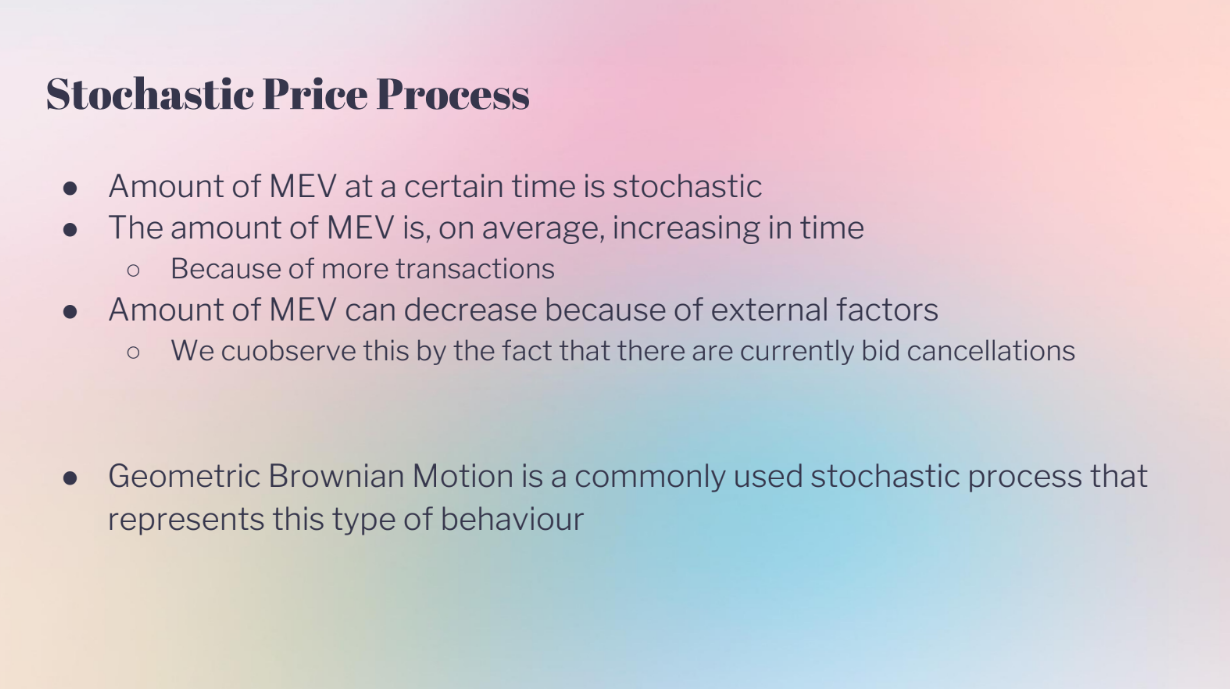

Stochastic Price Process (5:00)

The MEV value changes randomly over time, like a stochastic process. It can increase due to more transactions coming in, and can decrease due to external factors like changing prices.

This is different from a classic auction model, where the auctioned value is fixed and bidders get signals conditioned on that fixed value.
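To make the effect of observation time concrete, the sketch below simulates the MEV value as a driftless Gaussian random walk over a 12-second slot and compares how far the last observed price is from the final value for an early and a late observer. The random-walk form, the volatility and the observation times (8 s and 11 s) are assumptions of this example, not parameters from the talk.

```python
import random

TOTAL_STEPS = 120      # 12-second slot split into 0.1-second steps
STEP_SECONDS = 0.1
VOLATILITY = 1.0       # hypothetical std dev of value change per sqrt(second)
EARLY_STEP = 80        # high-latency bidder observes at 8 s (assumed)
LATE_STEP = 110        # low-latency bidder observes at 11 s (assumed)

def simulate_slot(rng):
    """Run one slot; return (price seen early, price seen late, final value)."""
    value = 0.0
    seen_early = seen_late = 0.0
    for step in range(1, TOTAL_STEPS + 1):
        value += rng.gauss(0.0, VOLATILITY * STEP_SECONDS ** 0.5)
        if step == EARLY_STEP:
            seen_early = value
        if step == LATE_STEP:
            seen_late = value
    return seen_early, seen_late, value

def mean_squared_errors(trials=5000, seed=1):
    """Average squared gap between each observed price and the final value."""
    rng = random.Random(seed)
    early_total = late_total = 0.0
    for _ in range(trials):
        seen_early, seen_late, final = simulate_slot(rng)
        early_total += (final - seen_early) ** 2
        late_total += (final - seen_late) ** 2
    return early_total / trials, late_total / trials

mse_early, mse_late = mean_squared_errors()
print("early observer MSE:", mse_early)
print("late observer MSE:", mse_late)
```

In this toy model the late observer's error is smaller because less of the random walk remains between its observation and the end of the slot.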

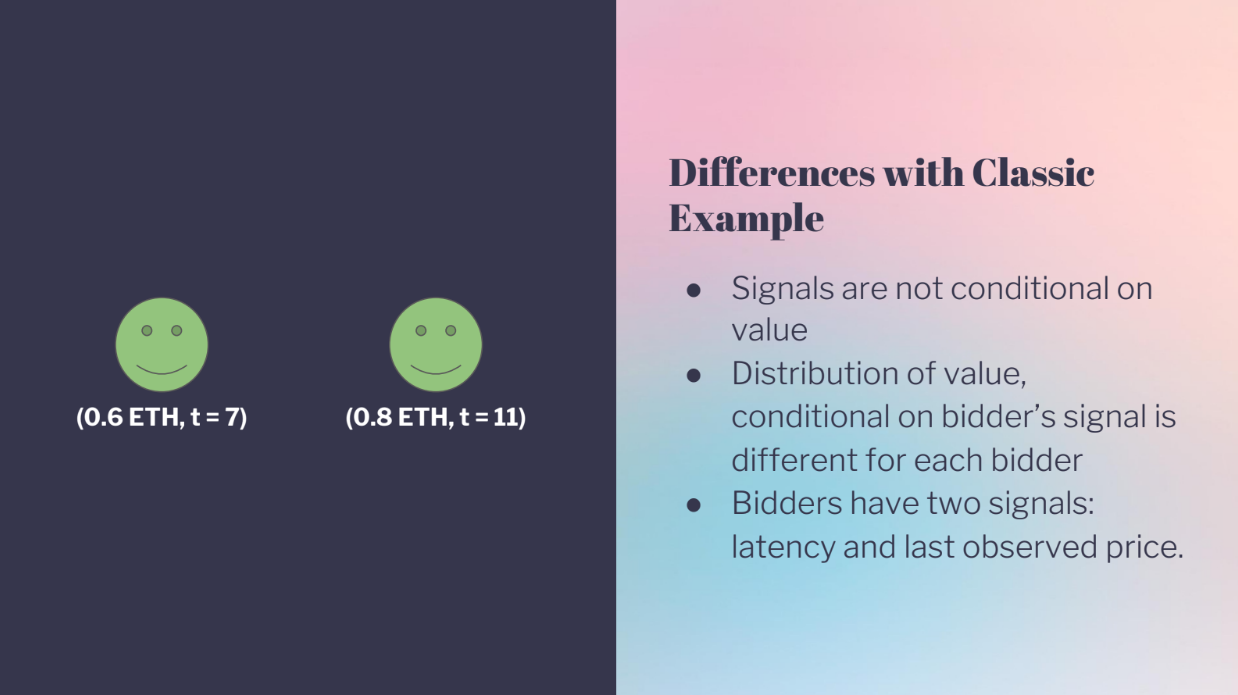

Differences with classic example (5:30)

- Signals are not conditional on value

- The distribution of the value depends on each bidder's signal. Lower latency bidders have more confidence in their signals, while higher latency bidders have wider confidence intervals

- Bidders get two signals : The last observed MEV price, and their own latency

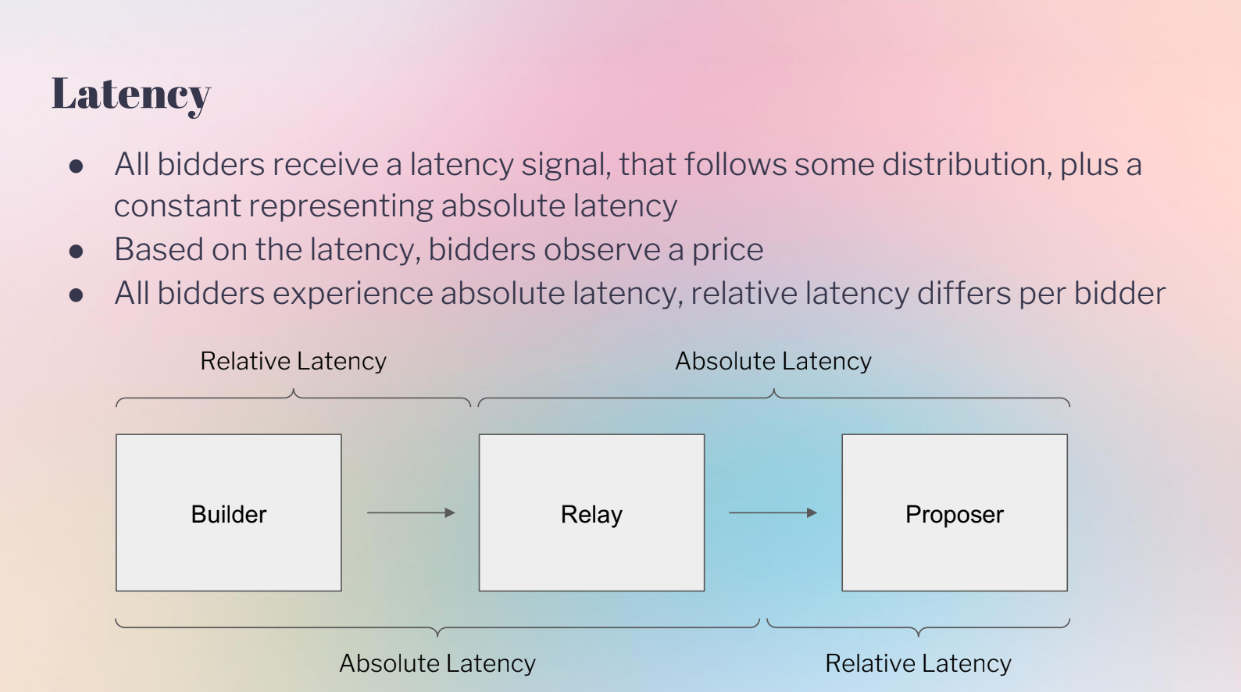

Latency (6:25)

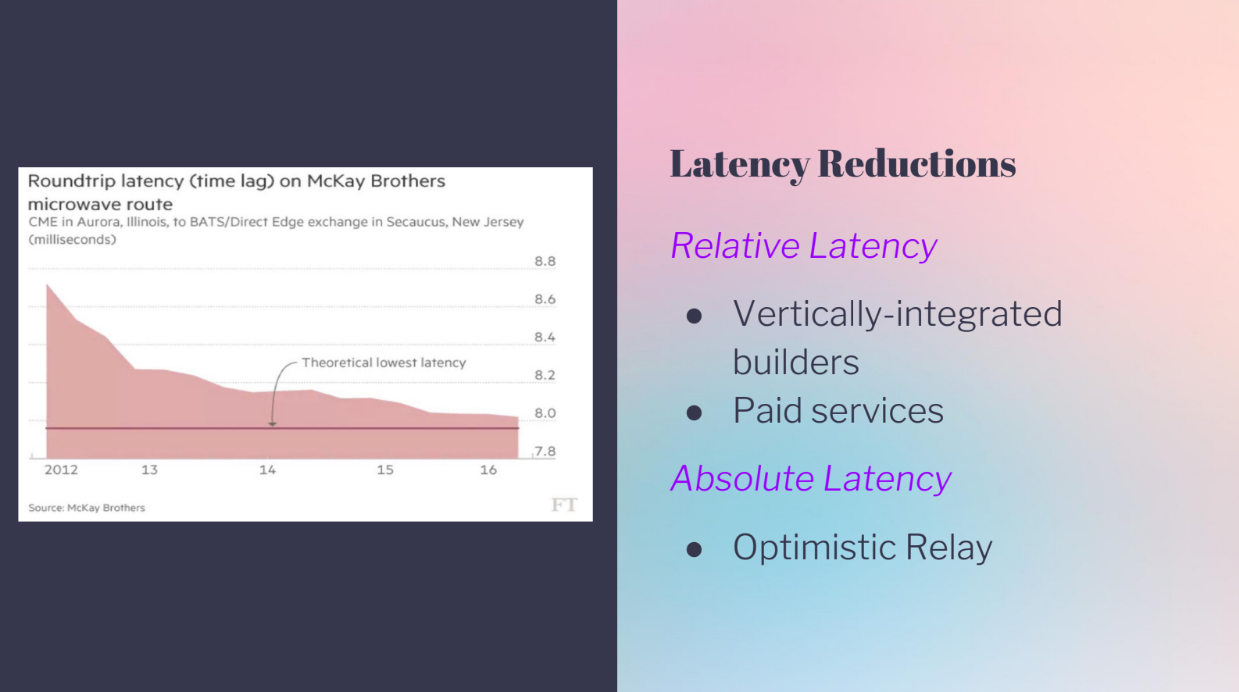

The model considers two types of latency :

- Absolute latency : Affects all bidders equally, like network speed.

- Relative latency : Advantages some bidders over others, like colocation.

Several recent developments affect latency :

- A relay network has more absolute latency.

- Integrated builders have relative latency advantages.

- Optimistic relay improves absolute latency for everyone.

In Ethereum PBS, even the absolute minimum latency could be improved a lot by better systems, and many latency reductions recently help some bidders over others. So modeling both absolute and relative latency is important to understand bidder advantages.

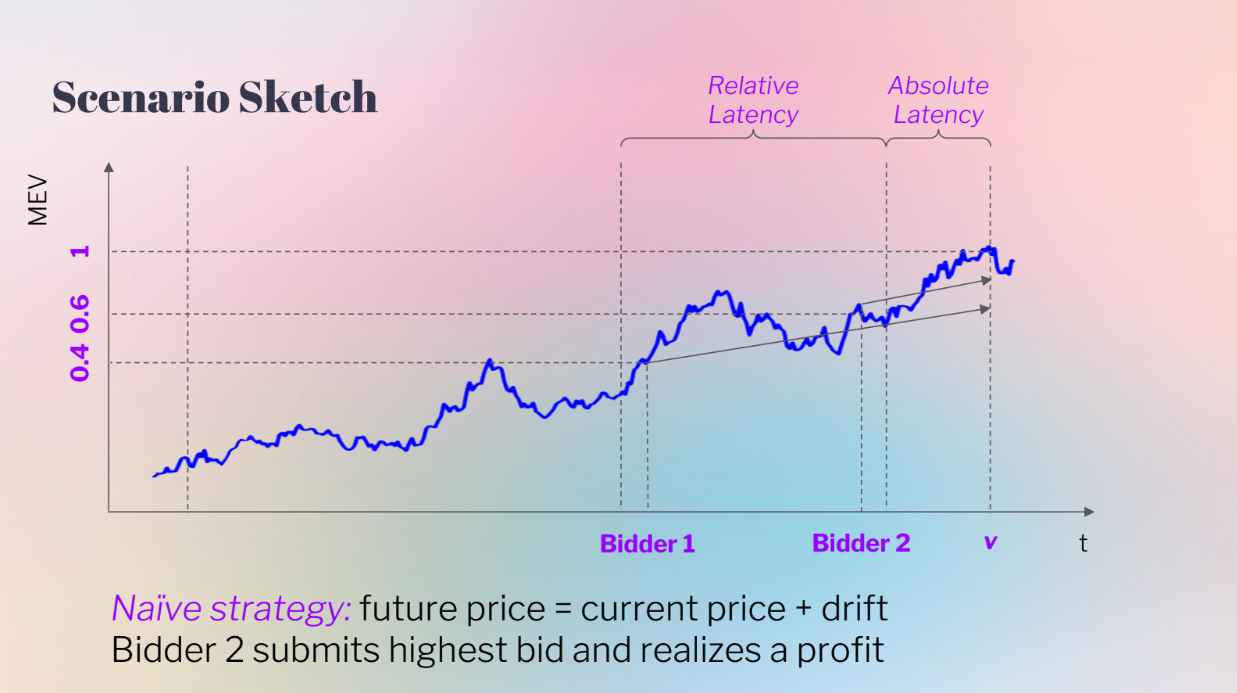

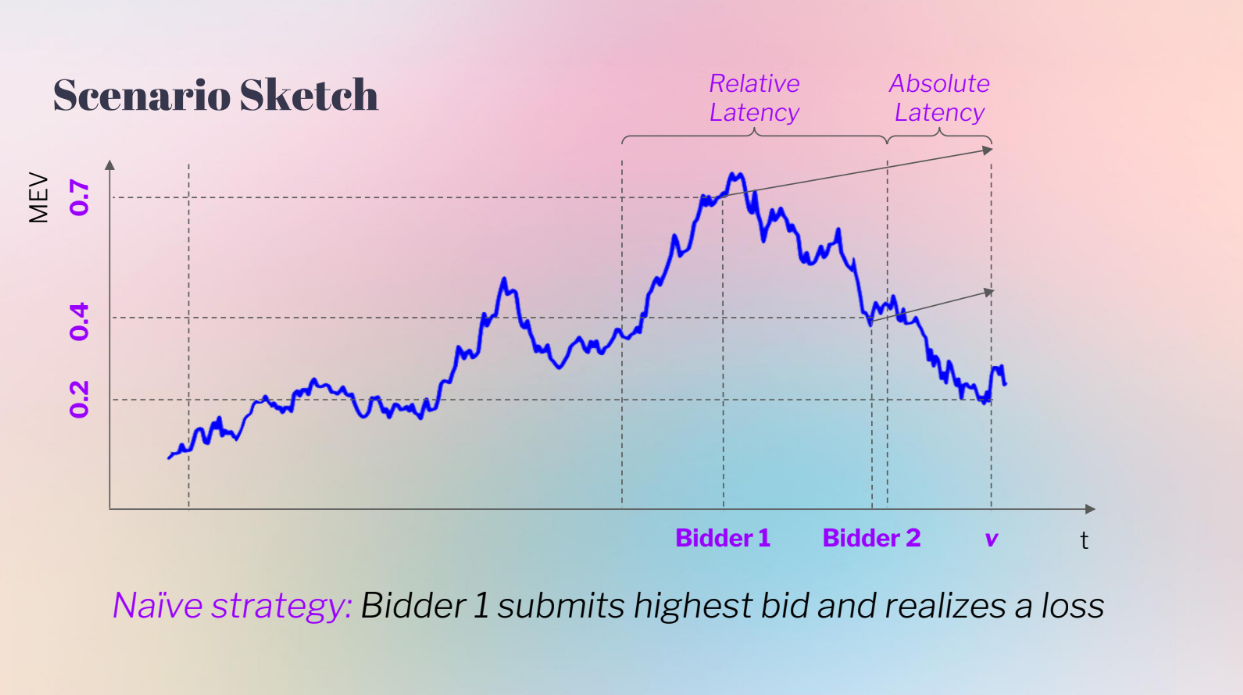

Bidder behavior, with absolute and relative latency (8:15)

The model considers absolute and relative latency :

- Absolute latency : Affects all bidders equally at the end.

- Relative latency : Earlier advantage for Bidder 2.

- The bidders use a naive bidding strategy : they observe the current MEV price, and bid = current price + expected change

In the left case, Bidder 2 sees a higher current price, so Bidder 2 bids higher and wins

In the right case, Bidder 1 sees a much higher current price. Bidder 1 bids very high based on its expectation, but the actual value ends up much lower. Bidder 2, whose latency advantage lets it observe the later, lower price, avoids overbidding.

In other words, Bidder 1's older information led it to overpay, while Bidder 2's fresher information let it bid lower and avoid the loss.

Can bidders do better ? (10:30)

Bidder 1 can improve their strategy by accounting for the "winner's curse" :

The winner's curse refers to the situation in auctions where winning the bid means you likely overpaid, because the losing bidders' lower bids suggest your estimate was too optimistic. Winners need to account for this in their bidding strategy :

- If you win, it means other bidders' information led them to bid lower.

- So winning means your estimate was likely too high.

- Bidder 1 can adjust their bid down to account for this.
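The adverse selection faced by the higher-latency bidder, and the effect of shading the bid, can be illustrated with a small simulation. Everything here is an assumption made for the sketch: the price moves as a driftless Gaussian walk, the winner pays its own bid, Bidder 2 always bids the later price it observes, and the numbers (price level, volatilities, a shade of 2) are hypothetical.

```python
import random

def average_profit_bidder1(shade, trials=20000, seed=0):
    """Average profit per auction for the high-latency Bidder 1.

    Bidder 1 bids the early price minus `shade`; Bidder 2 bids the later
    price it observes. The highest bid wins and pays its own bid.
    """
    rng = random.Random(seed)
    total = 0.0
    for _ in range(trials):
        price_early = rng.gauss(100.0, 5.0)              # seen by Bidder 1
        price_late = price_early + rng.gauss(0.0, 2.0)   # seen later by Bidder 2
        final_value = price_late + rng.gauss(0.0, 1.0)   # realized at slot end
        bid1 = price_early - shade
        bid2 = price_late
        if bid1 > bid2:
            # Bidder 1 only wins when the price fell after it looked.
            total += final_value - bid1
    return total / trials

naive_profit = average_profit_bidder1(shade=0.0)
shaded_profit = average_profit_bidder1(shade=2.0)
print("naive bidding:", naive_profit)
print("shaded bidding:", shaded_profit)
```

In this setup the naive strategy loses money on average, because Bidder 1 wins exactly when the price has dropped since its observation. Shading the bid reduces the loss, although against a better-informed rival it does not turn it into a profit.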

Implications for PBS (11:15)

More strategic bidding may be necessary in PBS auctions. Lower relative latency reduces these adverse selection costs, and lower absolute latency also reduces the costs.

Application to Order Flow Auctions (12:45)

Bidders compete for arbitrage opportunities over time, and they still face the winner's curse based on their latency.

Decentralized exchanges pay part of revenue to bidders to account for their adverse selection costs.

So modeling the winner's curse and latency advantages is important for understanding bidder strategies and revenues in MEV auctions.

Conclusion (13:30)

In PBS auctions, latency advantages affect bidder strategies and revenues like a double-edged sword : It helps you win bids, but winning with high latency means you likely overbid

Bidders need to balance latency optimizations with strategic bidding to account for the winner's curse. Furthermore, fair access to latency improvements can benefit the overall PBS auction.

Q&A

Besides arbitrage, what other external factors cause MEV bids to decrease ? (15:00)

For now arbitrage is the biggest factor. In the future, other types of MEV may become more important as PBS mechanisms evolve

How does the sealed bid bundle merge differ from the open PBS auction in terms of winner's curse implications ? (15:45)

The model looks at bidder valuations regardless of open or sealed bids. In both cases bidders need to account for the risk of others bidding after them.

Can you explain more about how lower latency leads to adverse selection ? (17:00)

If you bid early (high latency) and win, it means a later bidder saw info that made them bid lower. So winning means you likely overbid due to having less info.

The later bidder's advantage leads to adverse selection costs for the higher latency winner.

Intro (0:00)

Traditionally, censorship resistance meant that valid transactions eventually get added to the blockchain. But for some transactions like those from oracles or arbitrage traders, just eventual inclusion is not good enough - they need to get on chain quickly to be useful

Mallesh argues we need a new definition of censorship resistance : one that guarantees timely inclusion of transactions, not just eventual inclusion. This would allow new mechanisms like on-chain auctions. It could also help rollups and improve blockchain protocols overall.

Vitalik already talked about it in 2015, but Mallesh is advocating for the community to revisit it now, as timely transaction inclusion unlocks new capabilities.

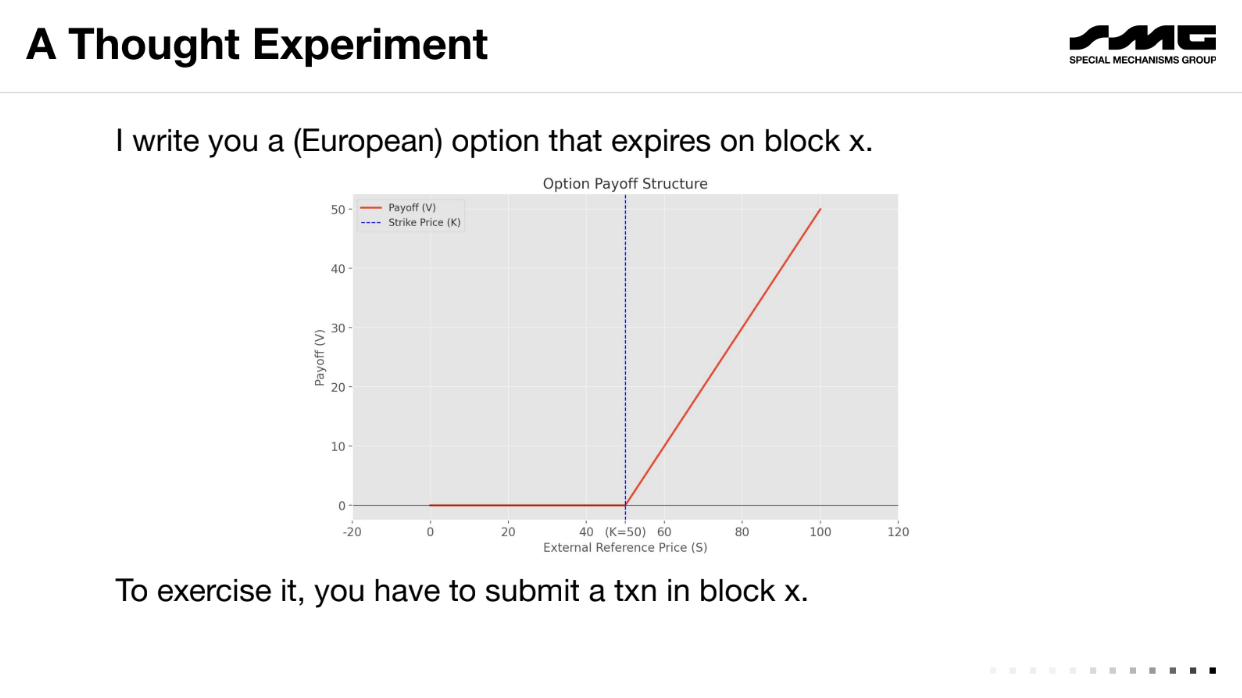

A thought experiment (2:30)

Let's take the example of a "European option", a financial contract where a user agrees to pay the difference between the market price of an asset at a future date (block X) and a reference price (say $50), if the market price is higher.

On block X, if the price is $60, the user owes the holder $10 (the difference between the price at block X and the reference price). The holder would be willing to pay up to $10 to get the transaction included on the blockchain to exercise the option. But the user also has an incentive to pay up to $10 to censor the transaction and avoid paying out.

On the expiration date, there is an incentive for both parties to pay high fees to either execute or censor the transaction. This creates an implicit auction for block space where the transaction will end up going to whoever is willing to pay more in fees.
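The thought experiment can be written down as a tiny model. The function names and the tie-breaking rule (inclusion wins a tie) are assumptions of this sketch; the prices are the ones from the example.

```python
def option_payoff(market_price, reference_price):
    """Amount the user owes the holder at block X (zero if out of the money)."""
    return max(market_price - reference_price, 0.0)

def inclusion_outcome(holder_fee, censor_fee, payoff):
    """Implicit auction for block space at expiry.

    Neither side rationally pays more than the payoff at stake, so both
    fees are capped at it. Ties go to inclusion (an assumption).
    """
    holder_fee = min(holder_fee, payoff)
    censor_fee = min(censor_fee, payoff)
    return "included" if holder_fee >= censor_fee else "censored"

payoff = option_payoff(market_price=60.0, reference_price=50.0)
print("payoff at stake:", payoff)
print(inclusion_outcome(holder_fee=4.0, censor_fee=7.0, payoff=payoff))
print(inclusion_outcome(holder_fee=9.0, censor_fee=7.0, payoff=payoff))
```

Whichever side wins, up to the full payoff can end up going to the block producer as fees rather than to the option's counterparties.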

The dark side of PBS (4:20)

The existing fee auction model enables censorship. By paying high fees, you can block valid transactions without others knowing

This is not just theoretical. Special Mechanisms Group demonstrated the problem a few weeks before the presentation by buying a block that contained only their own promotional transaction. The same approach could be used to censor silently, by including some but not all valid transactions.

The key problems :

- Options and other time-sensitive DeFi transactions create incentives for participants to engage in blocking/censorship bidding wars. This extracts value rather than creating it.

- The existing fee auction model allows value extraction through silent censorship by omitting transactions without it being visible.

Economic definition of censorship resistance (5:50)

Mallesh introduces the concept of a "public bulletin board" to abstractly model blockchain censorship resistance. This model has two operations :

- Read : Always succeeds, no cost. Lets you read board contents.

- Write : Takes data and a tip "t". Succeeds and costs t, or fails and costs nothing

Censorship resistance is defined as a fee function - the cost for a censor to make a write fail. Higher fees indicate more resistance.

Some examples (7:45)

- Single designated block : Censorship fee is around t. A censor can outbid the transaction tip to block it.

- EIP-1559 : Potentially worse resistance since the base fee is burnt. The producer only receives t-b, where b is the base fee, so the censor only needs to outbid t-b

The goal is to reach high censorship fees regardless of t, through better blockchain design, like multiple consecutive slots.

Better censorship resistance (9:15)

Having multiple consecutive slots with different producers increases censorship fees.

A censor must pay off each producer to block a transaction, costing k*t for k slots at tip t. But this also increases read latency - you must wait for k blocks.
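The censorship fees from these examples can be collected into simple functions. This is only a restatement of the approximate costs given in the text, with hypothetical function names and example numbers; real fees would depend on the details of each mechanism.

```python
def censorship_fee_single_block(tip):
    """Single designated block: the censor must outbid the tip t."""
    return tip

def censorship_fee_eip1559(tip, base_fee):
    """EIP-1559: the base fee b is burnt, so the producer only forgoes t - b."""
    return max(tip - base_fee, 0.0)

def censorship_fee_k_slots(tip, k):
    """k consecutive slots with different producers: each must be paid off."""
    return k * tip

TIP = 10.0       # hypothetical tip t
BASE_FEE = 6.0   # hypothetical base fee b

print("single block:", censorship_fee_single_block(TIP))
print("EIP-1559:", censorship_fee_eip1559(TIP, BASE_FEE))
print("5 slots:", censorship_fee_k_slots(TIP, 5))
```

The k-slot fee grows linearly in k, which is the trade-off described above: more slots raise the cost of censorship but also the time a reader must wait.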

On-Chain Auctions (10:45)

Low censorship resistance limits on-chain mechanisms like auctions. As an example, consider a second-price auction in 1 block with N+1 bidders :

- Bidders 1 to N are honest, submit bids b_i and tips t_i

- Bidder 0 is a censor. It waits for bids, offers the producer p to exclude some bids.

- Producer sees all bids/tips, and censor's offer p. It decides which transactions to include.

- Auction executes on included bids.

A censor can disrupt the auction by blocking bids. This exploits the low censorship resistance of a single block.

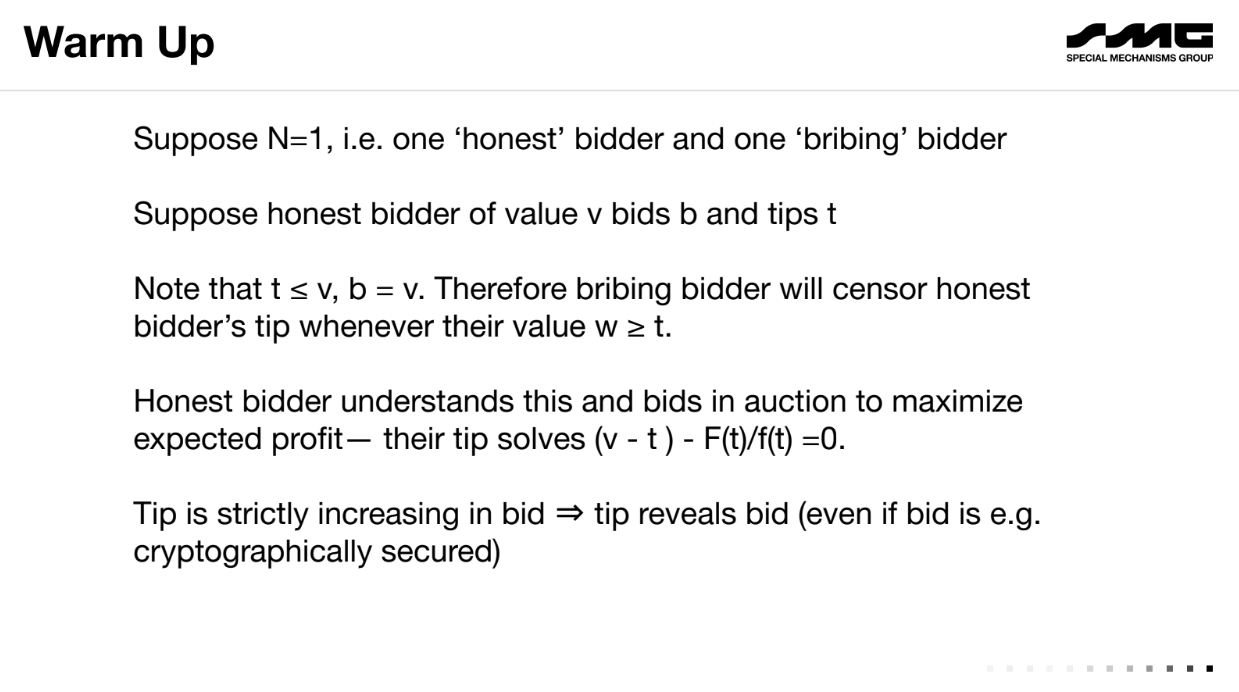

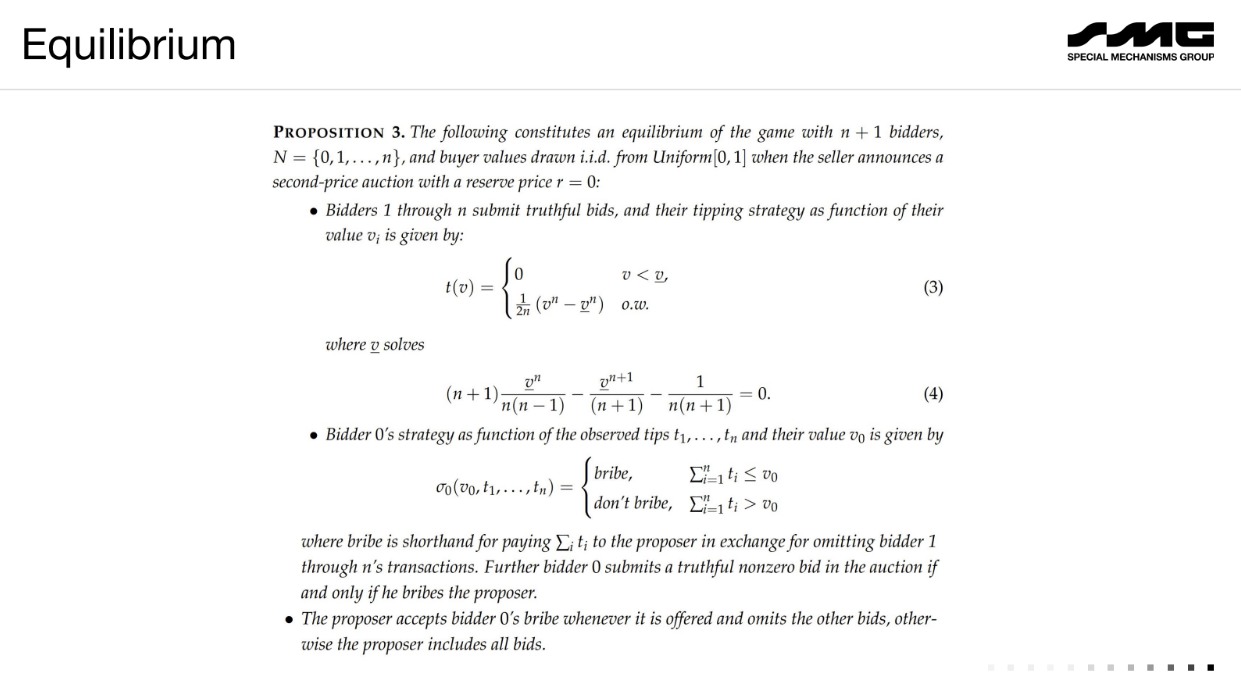

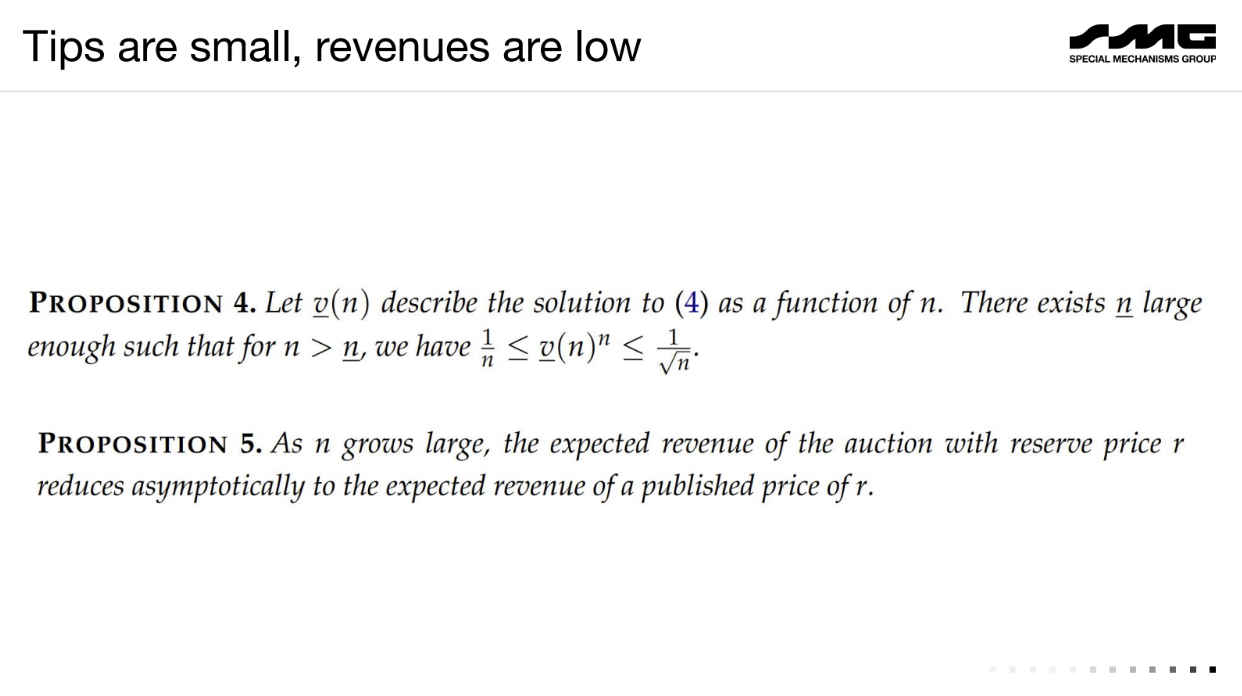

Warm up (13:50)

Suppose a second-price auction with just 1 honest bidder and 1 censor bidder :

- The honest bidder bids their value v. Their tip t must be < v, otherwise they overpay even if they win.

- The censor sees t is less than v, so it will censor whenever its value w > t. It pays the producer slightly more than t to exclude the honest bidder's bid.

- Knowing this, the honest bidder chooses t to maximize profit, resulting in a formula relating t to v.
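The formula from the talk is not reproduced here. As a worked illustration only, the sketch below assumes the censor's value w is uniformly distributed on [0, 1] and that the censor bids w when it does not censor. Under those assumptions the honest bidder is included only when w <= t, in which case it wins, pays the second price w and the tip t, so its expected utility is the integral of (v - w - t) over w in [0, t].

```python
def expected_utility(value, tip):
    """Honest bidder's expected utility, assuming w ~ Uniform[0, 1].

    If w <= tip the bid is included and wins: utility is value - w - tip.
    If w > tip the bid is censored: utility is 0.
    Integrating (value - w - tip) over w in [0, tip] gives the closed form.
    """
    return tip * (value - tip) - tip ** 2 / 2.0

def best_tip(value, steps=300):
    """Grid search over tips in [0, value) for the utility-maximizing tip."""
    candidates = [value * i / steps for i in range(steps)]
    return max(candidates, key=lambda tip: expected_utility(value, tip))

v = 0.9  # hypothetical value of the honest bidder
t_star = best_tip(v)
print("value:", v)
print("utility-maximizing tip:", t_star)
print("expected utility at that tip:", expected_utility(v, t_star))
```

With the uniform assumption the search lands at roughly one third of the value. That figure comes from this sketch's assumptions and should not be read as the result presented in the talk.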

With N+1 bidders :

Honest bidders bid their value but tip low due to censorship risk. The censor often finds it profitable to exclude all other bids and win for free.

As a result, the auction revenue collapses - the censor gets the item cheaply. If there are multiple censors, the producer extracts most of the value.

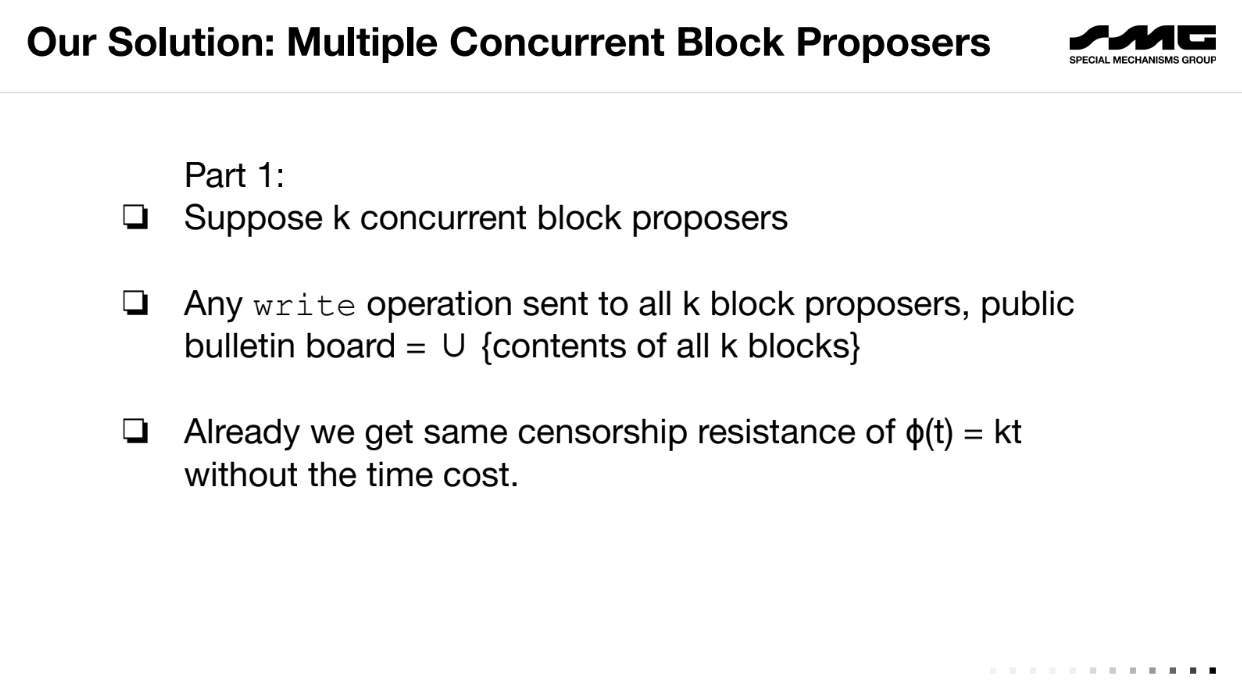

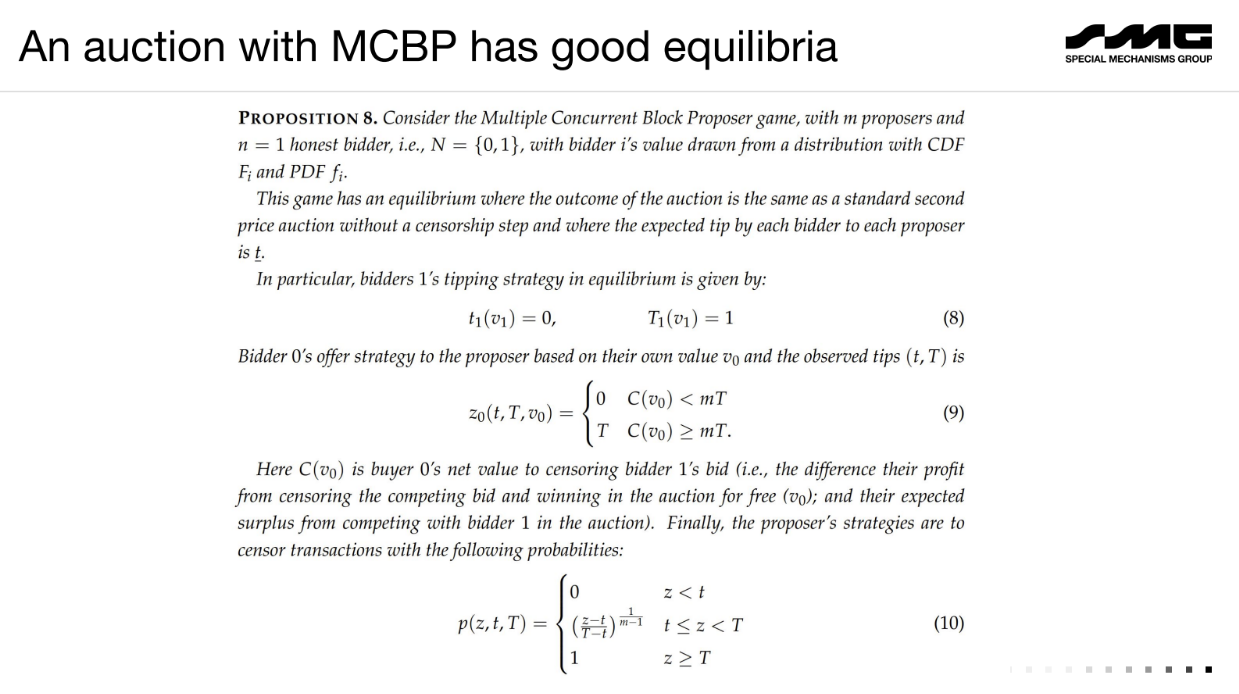

Proposed solution : Multiple Concurrent Block Proposers (18:00)

Mallesh proposes a solution to the blockchain censorship problem using multiple concurrent block producers rather than one monopolist producer.

Have k block producers who can all submit blocks concurrently. The blockchain contains the union of all blocks.

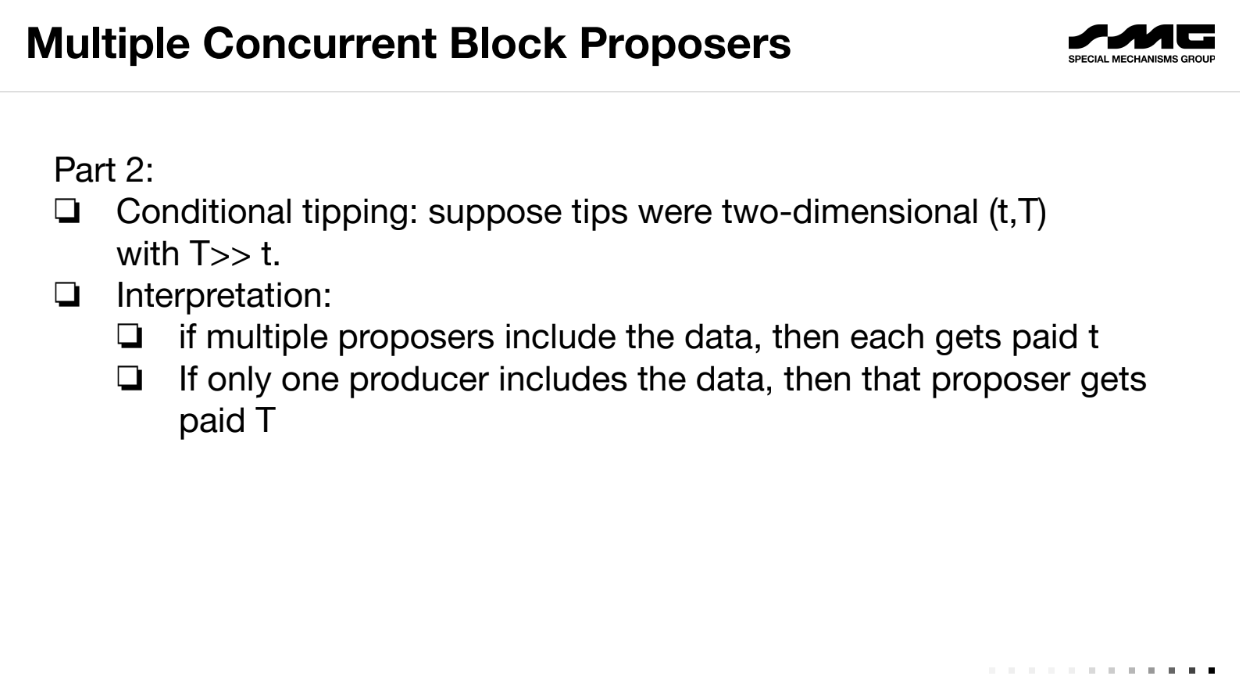

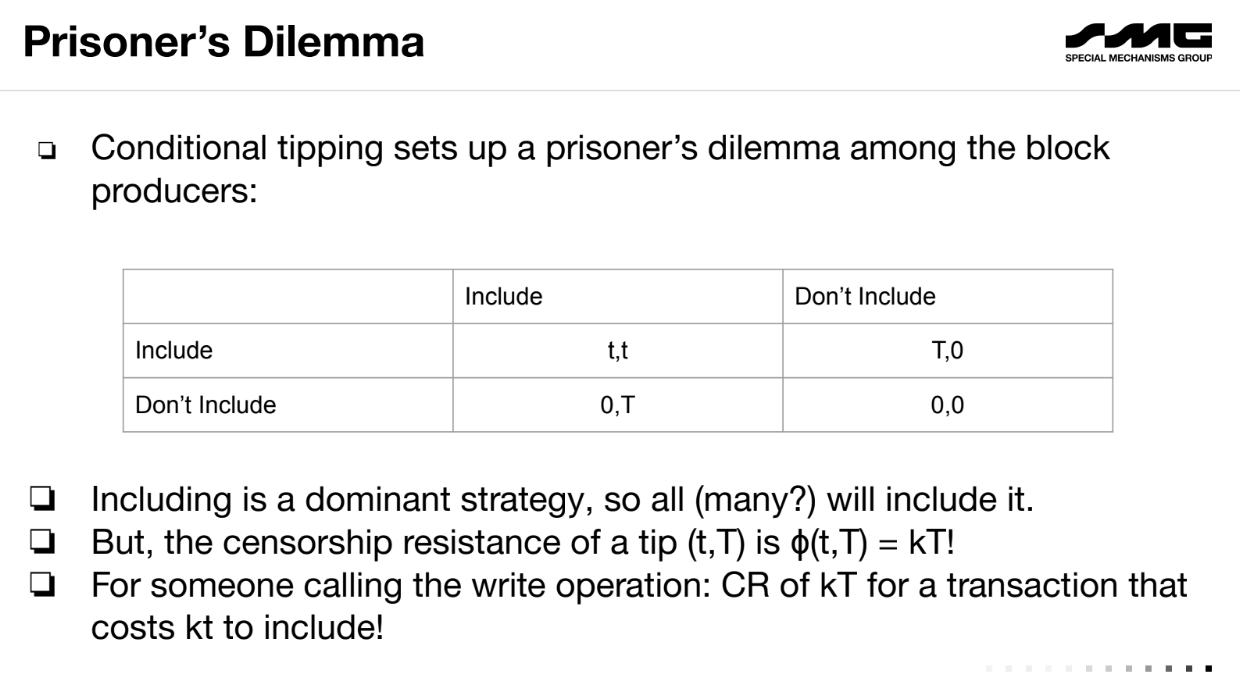

Tips are conditional : small tip t if included by multiple producers, large tip T if only included by one.

This creates a "Prisoner's Dilemma" for producers - including is a dominant strategy to get more tips.

Cost of inclusion is low (kt) but censorship resistance is high (kT) with the right t and T. Hence, a censor finds it too expensive to bribe all producers. Honest bidders can participate fairly.
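The conditional-tip incentive can be checked with a small payoff model. The function names and the numbers (k = 4, t = 1, T = 10) are hypothetical, and the sketch assumes k >= 2 and that a censor must compensate each producer for the large tip it would earn by being the only one to include.

```python
def producer_tip(includes, others_including, small_tip, large_tip):
    """Tip one producer earns for a transaction under conditional tips."""
    if not includes:
        return 0.0
    # Large tip T only if this producer is the sole includer.
    return large_tip if others_including == 0 else small_tip

def inclusion_is_dominant(k, small_tip, large_tip):
    """True if including beats excluding whatever the other k-1 producers do."""
    return all(
        producer_tip(True, n, small_tip, large_tip)
        > producer_tip(False, n, small_tip, large_tip)
        for n in range(k)
    )

def user_cost_all_include(k, small_tip):
    """Cost of inclusion when all k producers include (k >= 2): k * t."""
    return k * small_tip

def censor_cost(k, large_tip):
    """Each producer could earn T by defecting alone, so the bribe is about k * T."""
    return k * large_tip

K, SMALL_TIP, LARGE_TIP = 4, 1.0, 10.0
print("inclusion dominant:", inclusion_is_dominant(K, SMALL_TIP, LARGE_TIP))
print("user cost:", user_cost_all_include(K, SMALL_TIP))
print("censor cost:", censor_cost(K, LARGE_TIP))
```

With these numbers the user pays 4 in total while a censor would need about 40, which is the gap between kt and kT described above.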

Concluding thoughts (20:20)

Benefits :

- Removes producer monopoly power over censorship.

- Lowers tipping requirements for inclusion.

- Enables more on-chain mechanisms like auctions resistant to censorship attacks.

But the community needs to think about which of these notions to adopt/prioritize/optimize for.

Q&A

What if the multiple block producers collude off-chain to only have 1 include a transaction, and share the large tip ? (22:00)

This approach assumes producers follow dominant strategies, not collusion. Collusion is an issue even with single producers. Preventing collusion is challenging.

To do conditional tips, you need to see other proposals on-chain. Doesn't this create a censorship problem for that data ? (22:45)

Tips can be processed in the next block using the info from all proposal streams in the previous round. The proposals would be common knowledge on-chain.

Having multiple proposals loses transaction ordering efficiency. How do you address this ? (24:30)

This approach is best for order-insensitive mechanisms like auctions. More work is needed on ordering with multiple streams.

Can you really guarantee exercising an option on-chain given bounded blockchain resources ? (26:00)

There will still be baseline congestion limits. But economics change - transaction fees are based on value, not cost of block space. This improves but doesn't fully solve guarantees.