MEV Workshop : an 8-hour conference summarized in 68 minutes

Summary of MEV Workshop at the Science of Blockchain Conference 2023

Fair ordering is misguided

This talk was inspired by a number of events back in February this year, as well as by some work from others.

Many people have looked at ordering policies and at how to implement Fair Ordering, which usually means First In First Out (FIFO), also known as First Come, First Serve (FCFS), mechanisms.

FIFO/FCFS ordering processes transactions based solely on the time they arrive, without considering factors like transaction fees or priority.

Solana and Arbitrum use FIFO/FCFS in production, so we can look at what happens under this ordering policy.

Solana (1:15)

Solana without Jito effectively uses something like FIFO ordering, and fees are close to negligible. The catch is that being first is worth a lot, and only one transaction can be first.

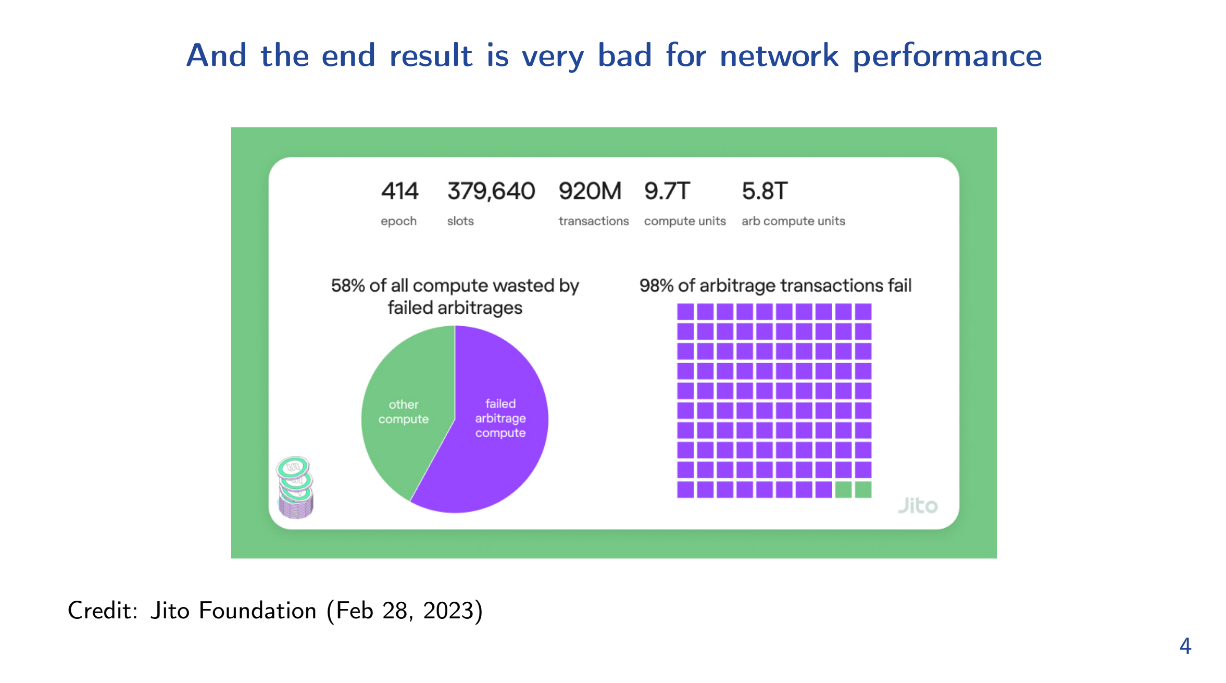

Therefore, Searchers end up spamming the network, each trying to land the first transaction. This hurts other users and validators: 58% of compute is wasted and 98% of Arbitrage transactions fail.

Arbitrum (2:30)

Arbitrum sequencer FIFO ordering also led to spam. The network ended up with 150,000 WebSocket connections and that was not sustainable.

Arbitrum proposed a solution called "hashcash", but that was controversial as it was Proof-of-Work. Now they are moving towards priority fees instead.

Conclusion (3:30)

FIFO ordering distorts the market for block space by forcing regular users to bear the costs of externalities like Arbitrage spam. We need a market to allocate block space efficiently.

We need a market to allocate block space

The Math model (3:45)

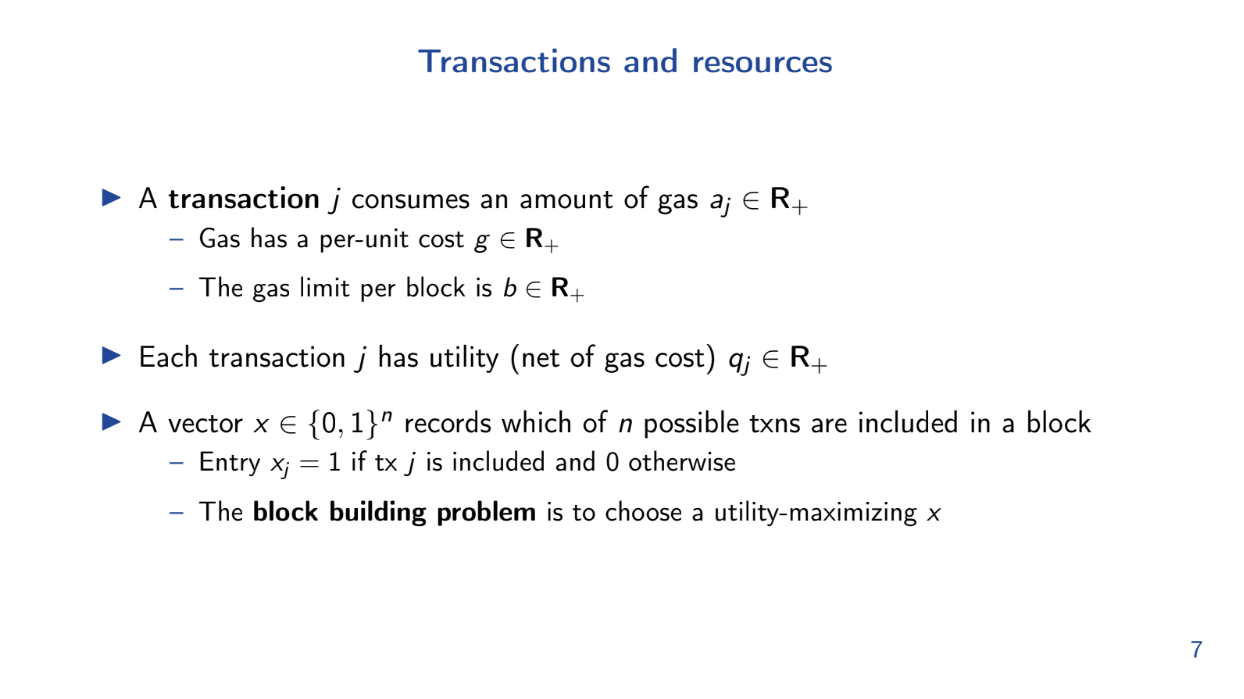

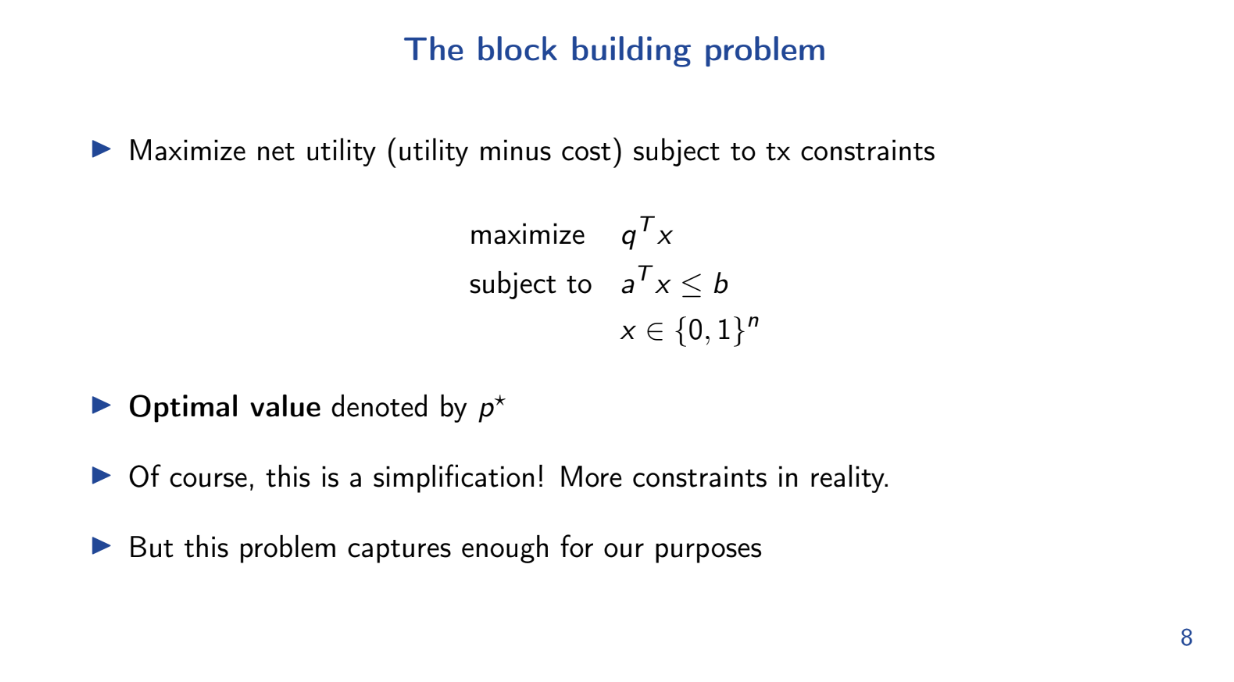

Theo introduces a mathematical formulation of the block building problem as an optimization model.

More specifically, this is a knapsack problem, where we must maximize net utility for our transactions with gas limit constraints
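A standard 0/1 knapsack formulation consistent with this description can be written as below. The symbols are assumptions for illustration and may differ from the slides: $u_i$ is the net utility of transaction $i$, $g_i$ its gas cost, $G$ the block gas limit, and $x_i$ indicates whether transaction $i$ is included.

```latex
\begin{aligned}
\text{maximize}\quad & \sum_{i=1}^{n} u_i x_i \\
\text{subject to}\quad & \sum_{i=1}^{n} g_i x_i \le G, \\
& x_i \in \{0, 1\}, \quad i = 1, \dots, n.
\end{aligned}
```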

This model is a simplification, but it captures enough for our purposes

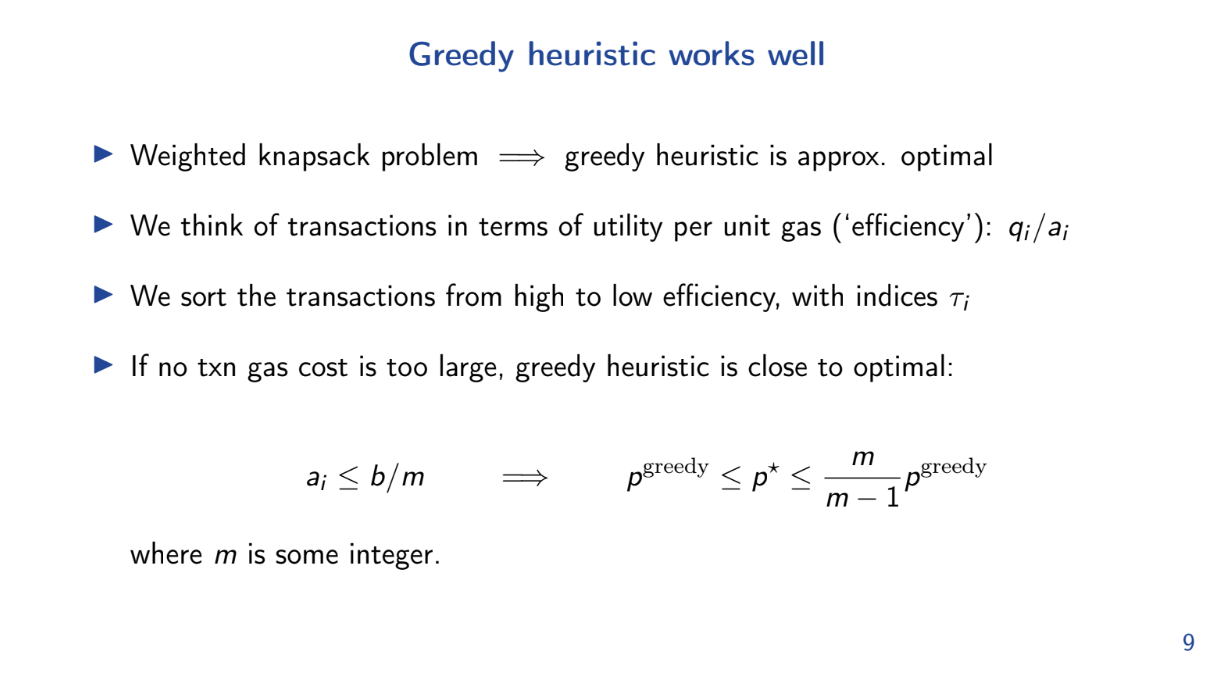

Greedy heuristic works well (6:00)

Roughly speaking, if the gas cost of each transaction is small relative to the block gas limit, we can fit a lot of transactions into the block.

The model shows that the greedy algorithm of taking highest utility/gas transactions first is approximately optimal for this problem.
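The greedy rule can be sketched in a few lines of Python. This is a minimal illustration, not the speaker's code: the `Tx` type, the transaction names and all numbers are made up.

```python
from typing import List, NamedTuple


class Tx(NamedTuple):
    name: str
    utility: float  # net utility of including the transaction (hypothetical units)
    gas: int        # gas consumed by the transaction


def greedy_block(txs: List[Tx], gas_limit: int) -> List[Tx]:
    """Fill a block by taking transactions in decreasing utility-per-gas order."""
    block, used = [], 0
    for tx in sorted(txs, key=lambda t: t.utility / t.gas, reverse=True):
        if used + tx.gas <= gas_limit:  # skip anything that no longer fits
            block.append(tx)
            used += tx.gas
    return block


# Hypothetical mempool, for illustration only.
mempool = [
    Tx("liquidation", utility=50.0, gas=5),
    Tx("swap", utility=6.0, gas=3),
    Tx("transfer", utility=1.0, gas=1),
    Tx("spam", utility=0.5, gas=5),
]
block = greedy_block(mempool, gas_limit=9)
print([tx.name for tx in block])
```

With a gas limit of 9, the three densest transactions fill the block and the low-value `spam` transaction is left out.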

Though not directly stated, the model demonstrates utility maximization outperforms simpler policies like FIFO for block building.

The FIFO Block (8:30)

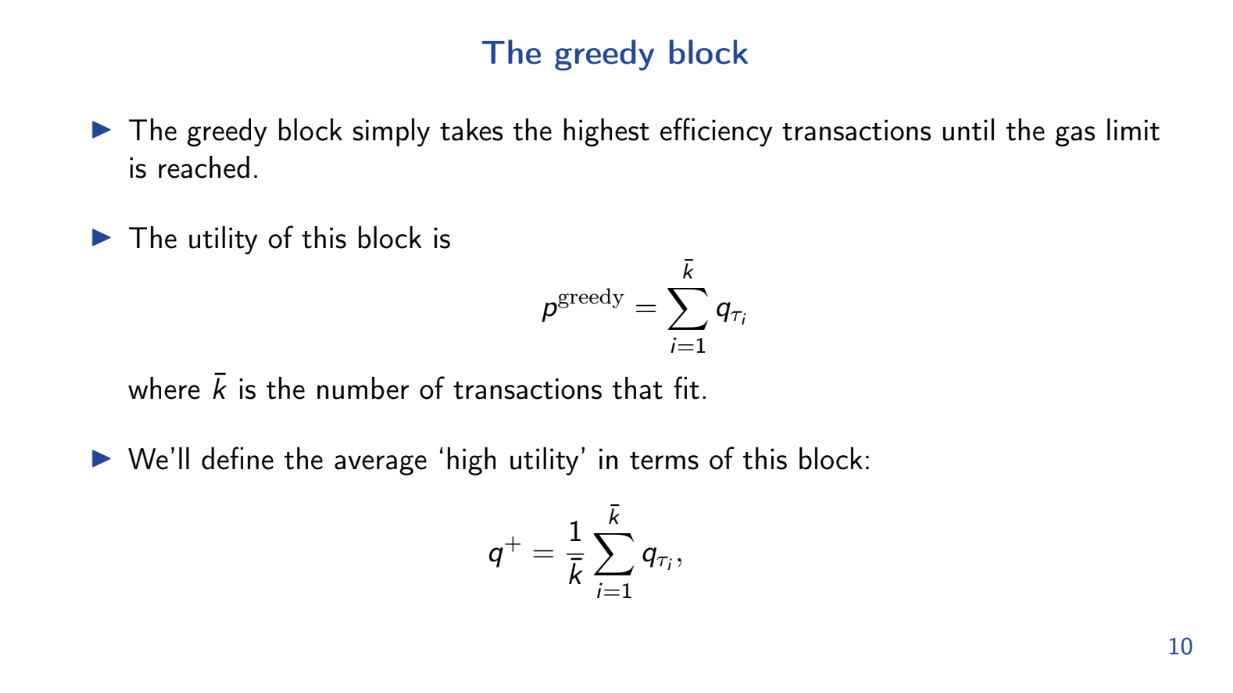

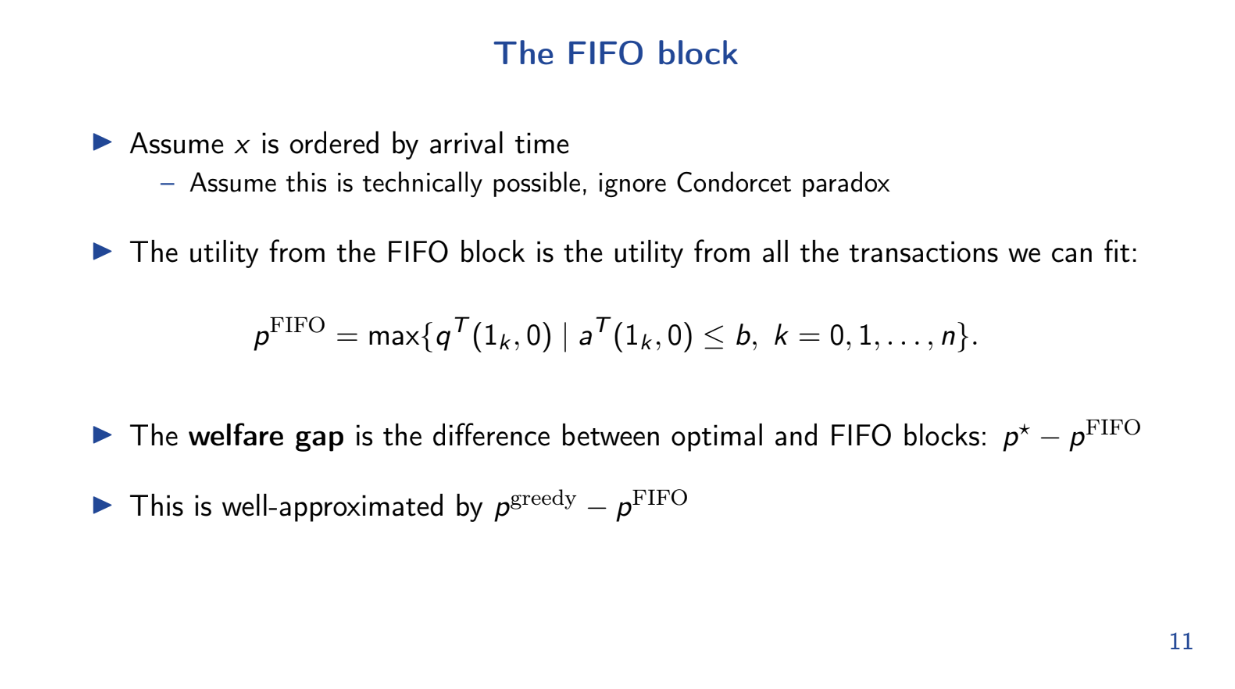

Theo analyzes how a First In First Out (FIFO) block performs compared to an optimal utility maximizing block.

The FIFO Block is pretty simple : transactions are ordered by arrival time and added until block is full. We assume random arrival order

Because of the random arrival assumption, it's easy to calculate an upper bound on the expected total utility of the FIFO block

Calculating the "Welfare Gap"

What's the gap ? (9:30)

The "welfare gap" is defined as the utility of the optimal block minus the expected utility of the FIFO block. The goal is to show this gap is large.
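The gap can be estimated numerically on a toy mempool. The sketch below uses the greedy block as a stand-in for the optimal block and averages FIFO over random arrival orders. The transaction values, the gas limit and the number of trials are all assumptions chosen for illustration.

```python
import random
from typing import List, Tuple

Tx = Tuple[float, int]  # (utility, gas); the values used below are made up


def greedy_utility(txs: List[Tx], gas_limit: int) -> float:
    """Utility of the block built in decreasing utility-per-gas order."""
    total, used = 0.0, 0
    for utility, gas in sorted(txs, key=lambda t: t[0] / t[1], reverse=True):
        if used + gas <= gas_limit:
            total += utility
            used += gas
    return total


def fifo_utility(txs: List[Tx], gas_limit: int) -> float:
    """Utility of the block filled in arrival order until the next tx does not fit."""
    total, used = 0.0, 0
    for utility, gas in txs:
        if used + gas > gas_limit:
            break
        total += utility
        used += gas
    return total


def estimated_welfare_gap(txs: List[Tx], gas_limit: int,
                          trials: int = 2000, seed: int = 0) -> float:
    """Greedy utility minus the average FIFO utility over random arrival orders."""
    rng = random.Random(seed)
    fifo_total = 0.0
    for _ in range(trials):
        order = txs[:]
        rng.shuffle(order)
        fifo_total += fifo_utility(order, gas_limit)
    return greedy_utility(txs, gas_limit) - fifo_total / trials


# One very valuable transaction among many low-value ones, all the same size.
txs = [(100.0, 1)] + [(1.0, 1)] * 19
gap = estimated_welfare_gap(txs, gas_limit=5)
print(f"estimated welfare gap: {gap:.1f}")
```

In this toy setup the greedy block always contains the high-value transaction, while a FIFO block only contains it when it happens to arrive among the first five.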

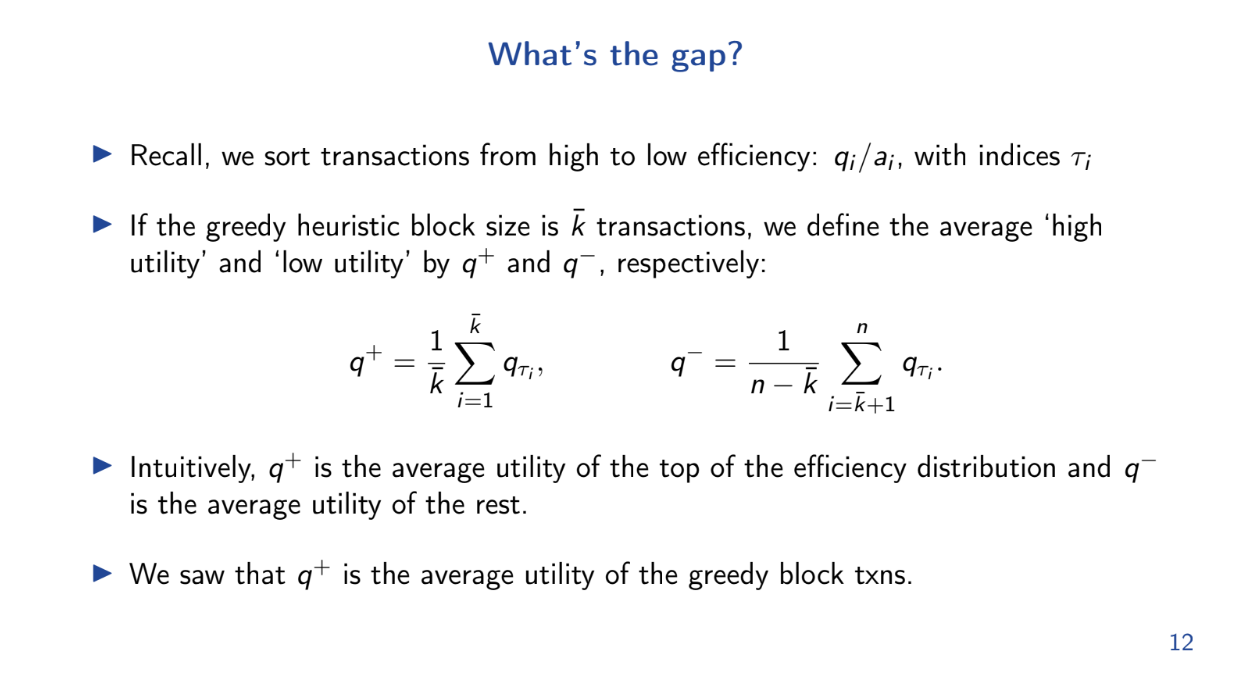

Using previous results, we can lower bound the utility of the optimal greedy block, and we just upper bounded the FIFO utility. Subtracting the two gives a lower bound on the welfare gap, expressed with two quantities :

- q+ is the average utility per unit of gas for transactions in the optimal greedy block.

- q- is the average for the remaining transactions.

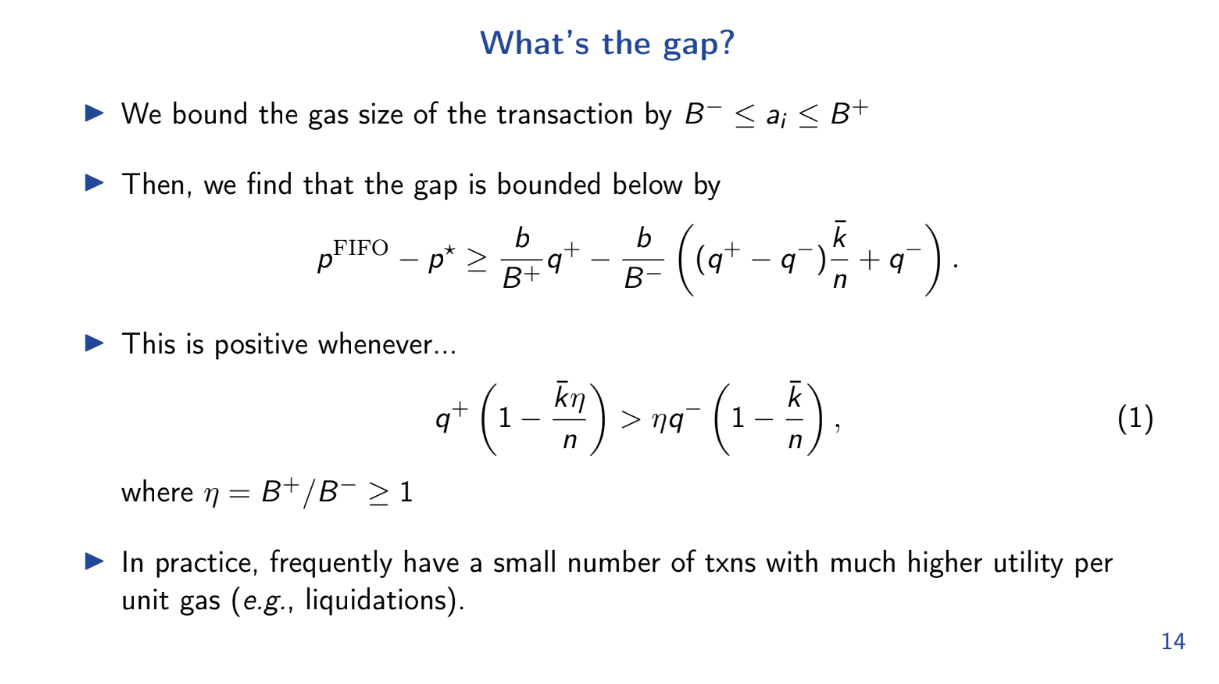

The gap is positive whenever q+ > q- by even a small amount. Intuitively, whenever there are some very high utility transactions, the gap will be positive and FIFO performs worse than optimal.

In practice, the gap is frequently positive because highest utility transactions (like liquidations) are picked first for the optimal block. As a result, FIFO is suboptimal

A simpler bound (11:45)

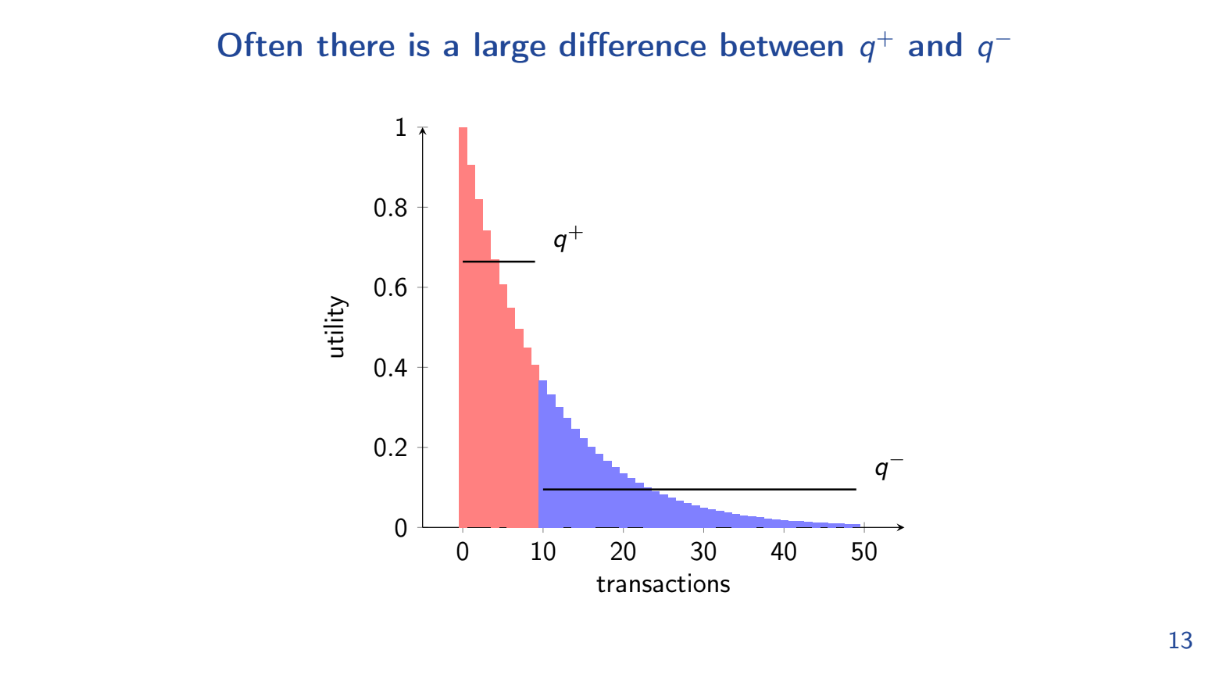

A simpler sufficient condition for the FIFO block to fall short of the optimal block is :

q+ > (B+ / B-) q-

- q+ is the average utility per gas for the optimal block

- B+ is the max transaction size

- B- is the min transaction size

- q- is the average utility per gas for the remaining transactions

So whenever the above inequality holds, the FIFO block performs worse than optimal.

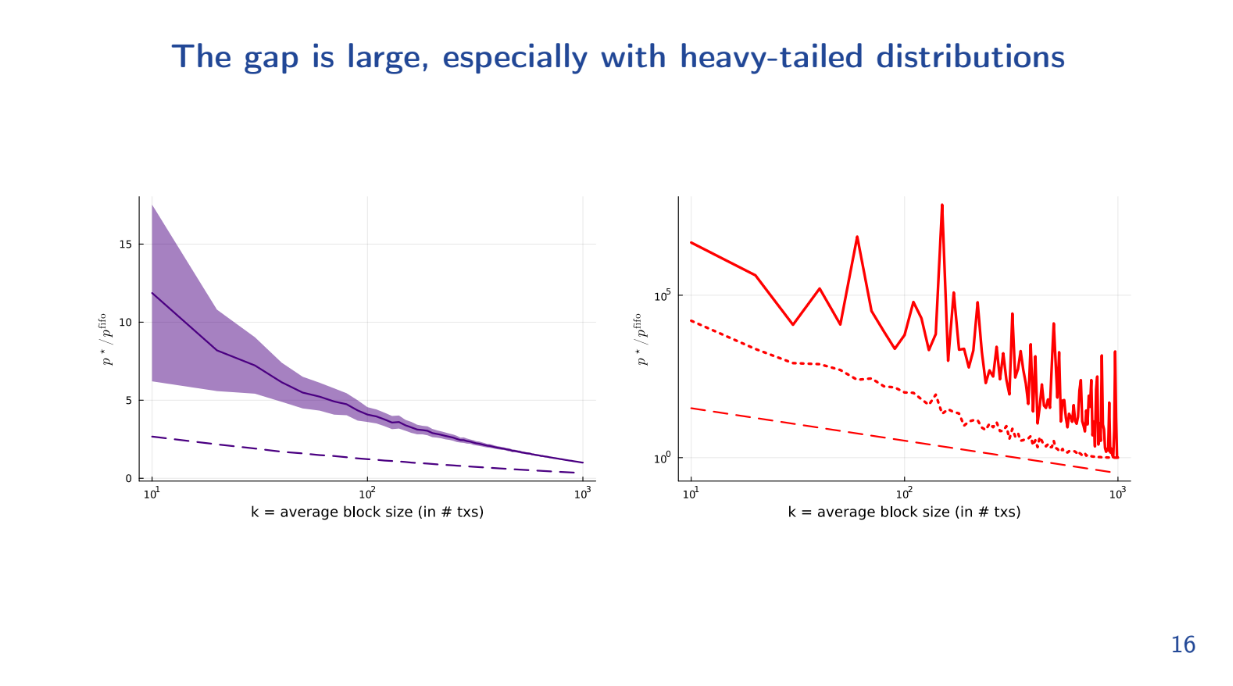

With a fast decaying distribution, the FIFO block has 2-3x lower utility than optimal, even for large blocks.

With a heavy tailed distribution (some very high utility txs), the gap is 10^5x - massive!

This causes spammers to flood the network trying to get those high utility transactions in first under FIFO, and this is not optimal

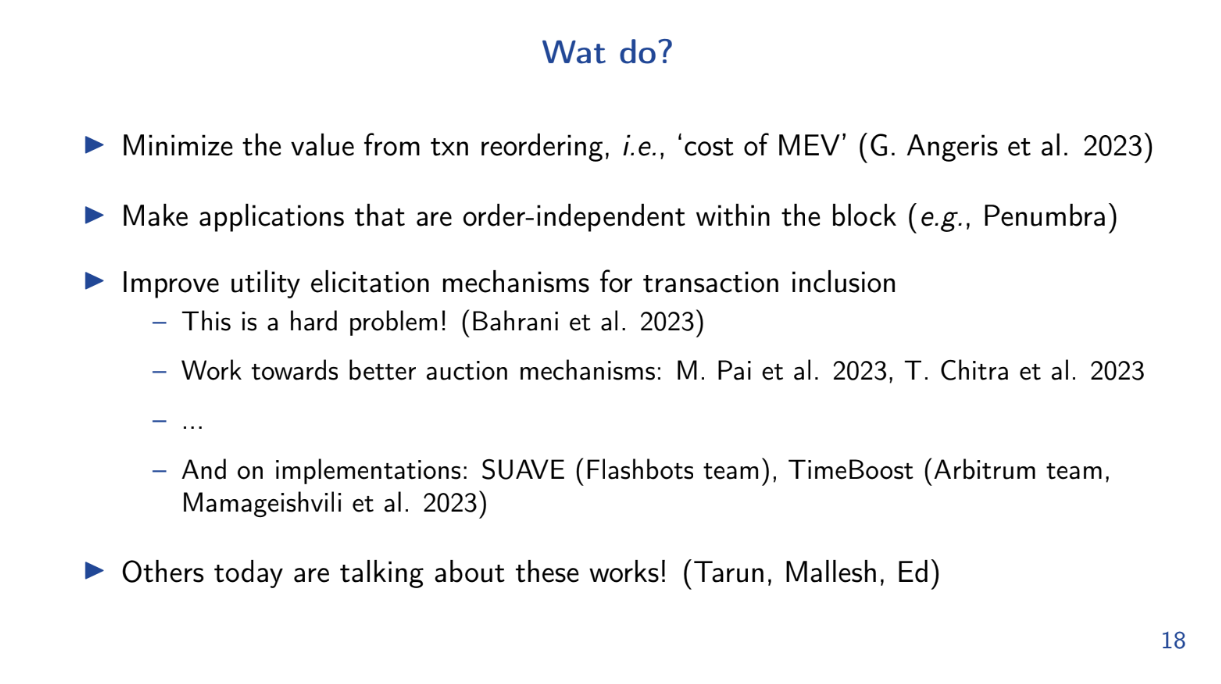

Wat do ? (14:30)

Even if you could implement FIFO type policies, there would still be a welfare gap, and it causes benign users to pay for externalities created by others.

Theo outlined the problems; solutions are still being explored :

- One approach is better characterizing the value of transaction reordering and designing mechanisms to minimize MEV costs

- Making applications order independent also helps, so transaction order in block doesn't matter

- Developing better on-chain auction mechanisms for block space is promising

Lots of people (Flashbots, Arbitrum...) are working towards implementing policies that have sensible markets for blockspace, and some talks are dedicated to them

Q&A

Based on the analysis showing issues with FIFO/short blocks, are longer blocktimes more efficient ? (16:45)

The model doesn't address optimal block time and can't conclude anything about it. But the nice thing is these results are independent of that.

The model was just intended to show FIFO is problematic from an optimization viewpoint, regardless of blocktime.

Given that Arbitrage utility decreases the longer a transaction waits, would accounting for changing transaction utility over time affect the analysis ? (19:00)

The model doesn't account for this currently. Accounting for dynamic transaction utilities is an interesting potential extension.

Order Policy Enforcement

Limitations and Circumvention

Reordering transactions

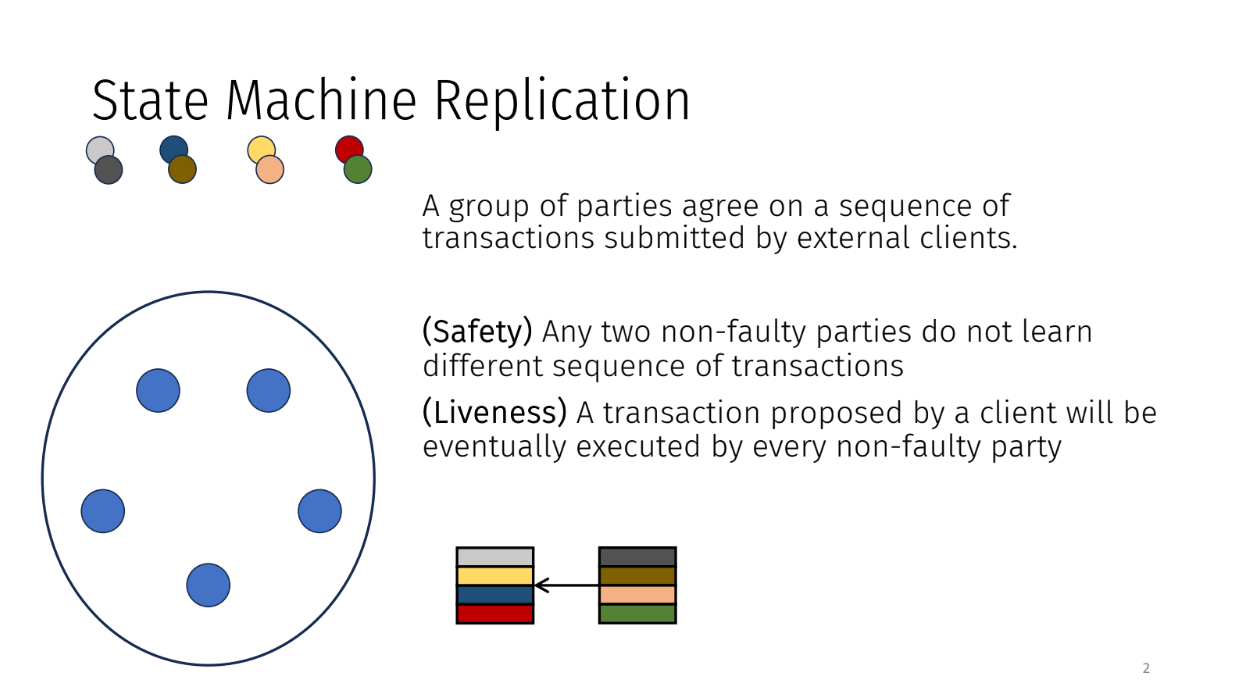

The problem of state machine replication (0:00)

The problem of state machine replication is one where a group of parties agree on a sequence of transactions submitted by external clients. There are two key properties that we're looking for :

- Safety : no disagreement on order

- Liveness : transactions eventually executed

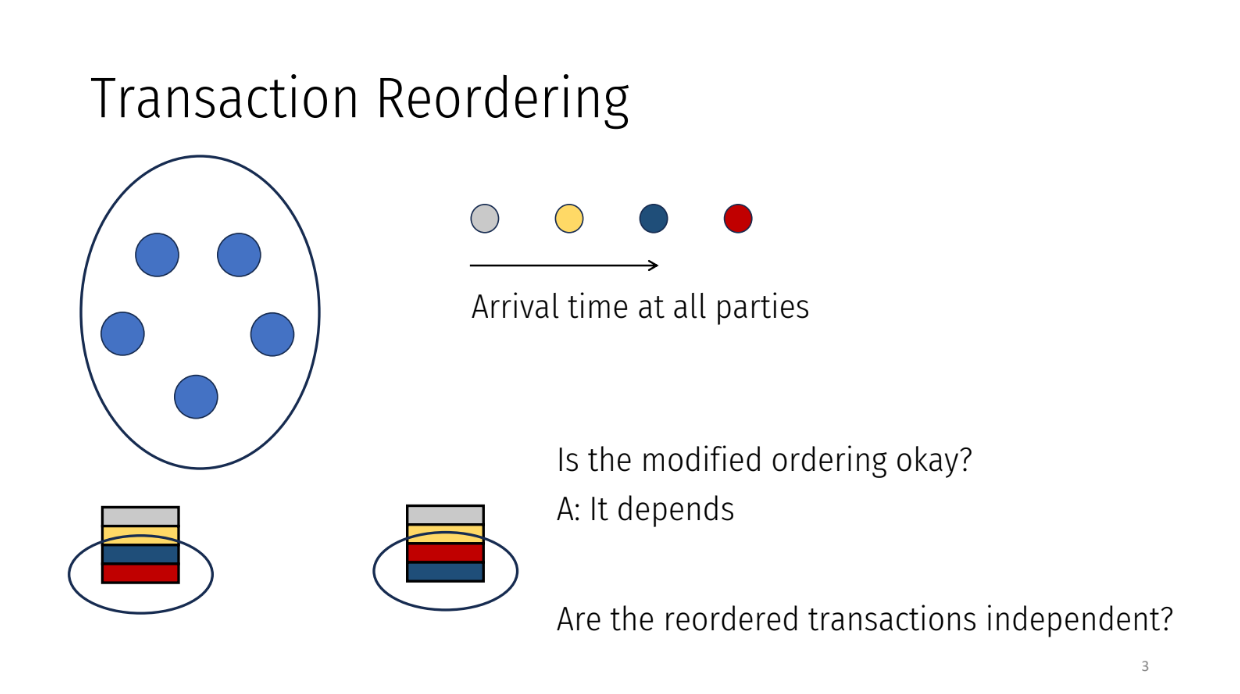

Transaction reordering (1:00)

The parties receive transactions one by one over time. They group transactions into blocks and agree on an order to process each block.

The order should match the order the transactions arrived in the system. For example, if a gray transaction arrived first, a yellow second, and a blue third, then the agreed order should be gray, yellow, blue.

However, the parties could decide to change the order for some reason. They may put a transaction that arrived later (like red) before an earlier one (like blue).

Whether this is okay or fair depends on if the transactions are related. If two transactions are completely independent, changing the order is probably fine. But if they are related, it causes problems.

Reordering transactions is a known issue with known attacks. But there are approaches to prevent cheating by enforcing orders that match when transactions arrived. The talk will discuss solutions to this problem.

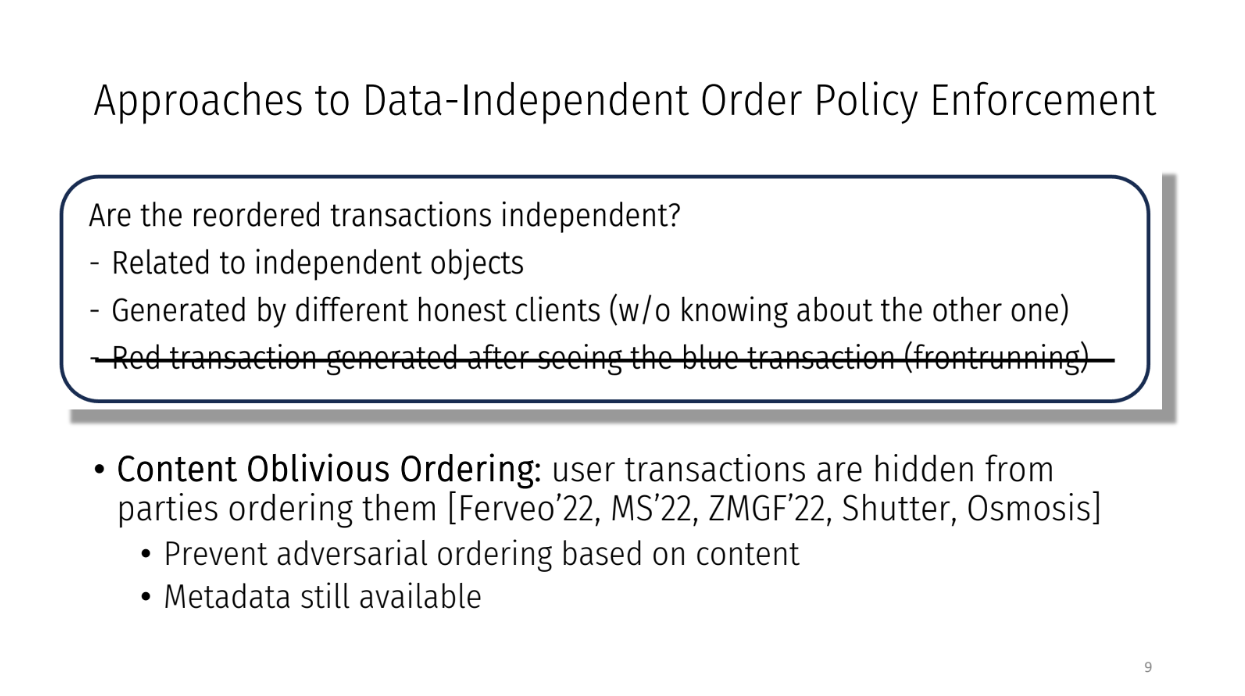

Approaches to Data-Independent Order Policy Enforcement (3:15)

Researchers have tried 2 approaches to make sure transactions are ordered fairly :

- "Order Fairness" : This orders transactions based only on when they arrive to the system. So if Transaction A arrives before Transaction B at all the parties, then A will be ordered before B. This prevents transactions from being reordered unfairly.

- "Content Oblivious Ordering" : The content of each transaction is hidden from the parties ordering them. The goal is to prevent cheating based on transaction contents. Parties can only use metadata like arrival time to order transactions.

Both try to enforce fair ordering of transactions. But do they fully prevent unfair reordering attacks ?

The concern is that both approaches rely on most parties being honest. But the reason for unfair reordering is parties want to cheat for personal gain. There is a mismatch between the threat model these protocols assume, and the real-world motivations for attacks.

Today's talk (6:00)

The key question is whether these protocols are secure when all parties are rational actors trying to maximize personal profits.

In this talk, Kartik will focus on answering this question first by analyzing existing order fairness protocols when all parties are rational, then exploring a new approach called Rational Ordering that secures ordering against rational adversaries

Create the framework

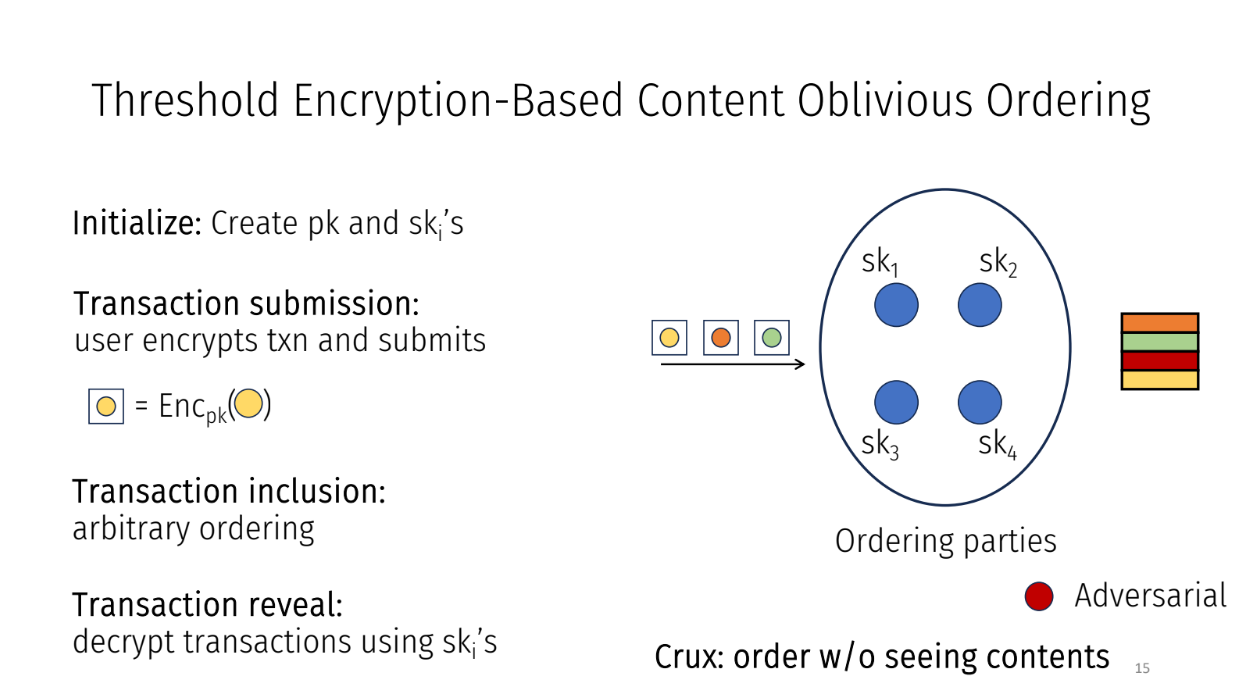

Kartik explains a framework that captures different protocols for fairly ordering transactions in a system, using a specific protocol called "Threshold Encryption Content Oblivious Ordering" as a running example.

The framework works as follows :

- Initialization : Generate a public key and secret keys for each party in the system

- Transaction Submission : Users encrypt their transactions using the public key and submit them

- Ordering : Parties order the encrypted transactions however they want since they can't see the contents

- Revealing : Once ordered, parties decrypt the transactions using their secret keys

The idea is parties can't unfairly order transactions since they don't know what's inside them when ordering. Even if a bad transaction came earlier, it doesn't matter since contents were hidden.
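The four steps can be sketched with a toy stand-in. Note the substitutions: instead of real threshold encryption under one public key, the sketch uses a fresh symmetric key per transaction, split into XOR shares that all parties must pool (n-of-n, not a true threshold). It is only meant to show the order-then-reveal flow and is not secure enough for real use.

```python
import hashlib
import secrets
from typing import List


def xor_bytes(a: bytes, b: bytes) -> bytes:
    return bytes(x ^ y for x, y in zip(a, b))


def keystream(key: bytes, length: int) -> bytes:
    """Expand a key into `length` pseudo-random bytes using SHA-256 in counter mode."""
    out = b""
    counter = 0
    while len(out) < length:
        out += hashlib.sha256(key + counter.to_bytes(4, "big")).digest()
        counter += 1
    return out[:length]


def split_key(key: bytes, n_parties: int) -> List[bytes]:
    """Split `key` into n XOR shares; all n are needed to rebuild it."""
    shares = [secrets.token_bytes(len(key)) for _ in range(n_parties - 1)]
    last = key
    for share in shares:
        last = xor_bytes(last, share)
    return shares + [last]


def combine_shares(shares: List[bytes]) -> bytes:
    key = bytes(len(shares[0]))  # all-zero start value
    for share in shares:
        key = xor_bytes(key, share)
    return key


def encrypt(key: bytes, message: bytes) -> bytes:
    return xor_bytes(message, keystream(key, len(message)))


decrypt = encrypt  # XOR with the same keystream undoes the encryption

# Submission: the user encrypts under a fresh key and hands one share to each party.
tx = b"buy 50 tokens"
key = secrets.token_bytes(32)
ciphertext = encrypt(key, tx)
shares = split_key(key, n_parties=4)

# Ordering: parties see only ciphertexts, so they can only order by metadata.
ordered = [ciphertext]

# Revealing: once the order is final, parties pool their shares and decrypt.
revealed = [decrypt(combine_shares(shares), c) for c in ordered]
print(revealed[0])
```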

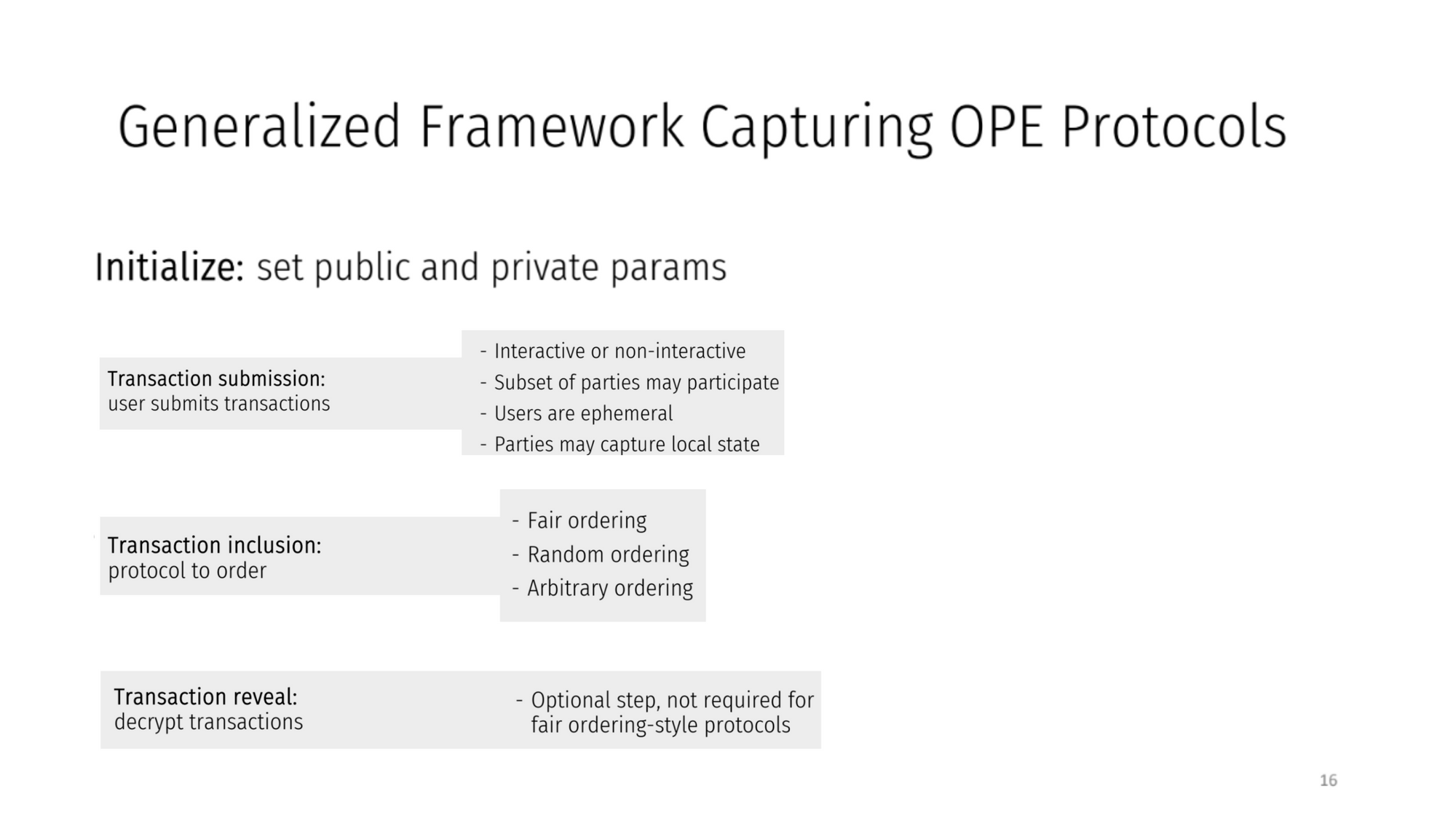

Generalized Framework Capturing OPE protocols (8:00)

In general, protocols in this framework have :

- Initialization with public/private parameters

- Users submitting transactions

- Parties capturing metadata like transaction arrival times

- Transactions ordered based on metadata

- Optional revealing of contents after ordering

- A binding property which says that a transaction executed by the protocol is the one that is submitted to it

- We assume that between ordering and revealing, the state of the transaction does not change.

Impossibility for Order Policy Enforcement

Explanation (10:30)

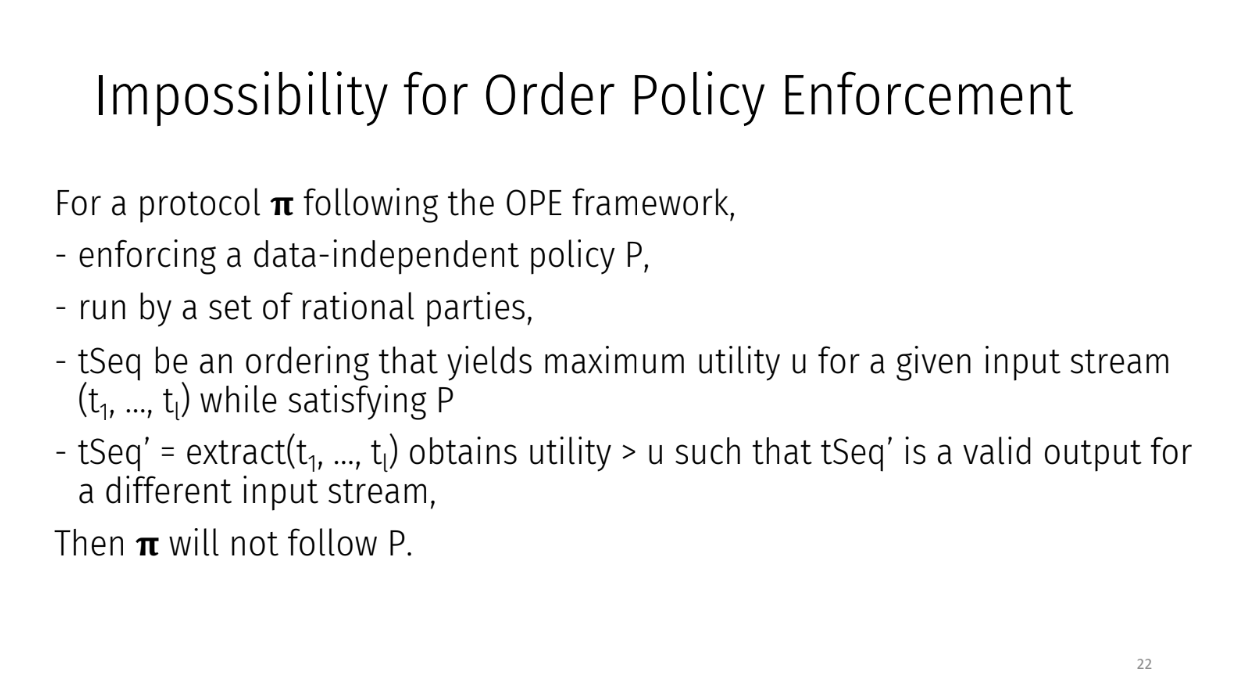

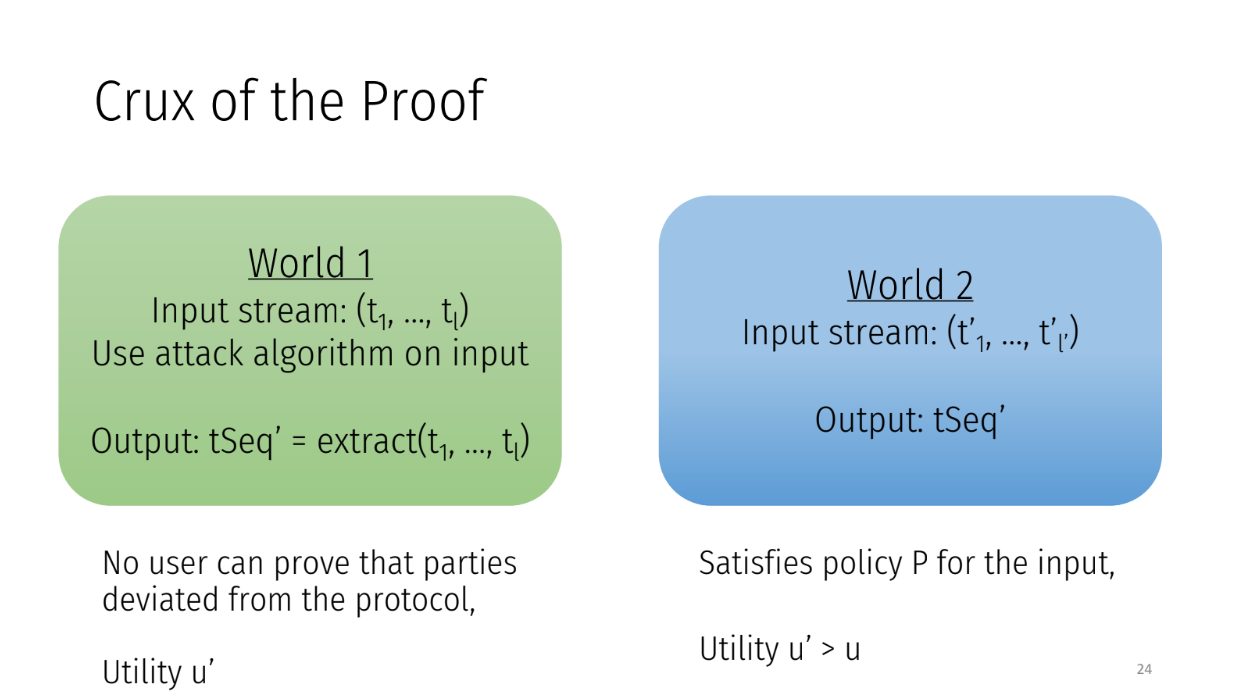

For protocols in this framework, if there is a way for parties to reorder transactions and earn more profit, they will do it.

Let's say the original order of transactions is t1, t2, ..., tl. And for this order, the sequence tSeq gives parties the maximum profit u.

Then parties will output tSeq instead of following the protocol policy P. They will manipulate the order if they can profit more.

The attack works like:

- Parties reveal all transactions

- Run extraction functions to maximize profit

- Replay transactions in a different order tSeq

- Publish new state with higher profit

This makes it look like a different input stream was submitted. Hence, no one can prove the attack happened. So rational parties will attack protocols in this framework if a profitable reorder exists.
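The search for a profitable reordering can be sketched as a brute-force search over permutations. The profit function below is a hypothetical stand-in for the extraction functions mentioned in the talk, and the transaction names are made up.

```python
from itertools import permutations
from typing import Callable, Sequence, Tuple


def best_reordering(
    txs: Sequence[str], profit: Callable[[Sequence[str]], float]
) -> Tuple[Tuple[str, ...], float]:
    """Brute-force the ordering with the highest profit (fine for tiny inputs only)."""
    best = max(permutations(txs), key=profit)
    return best, profit(best)


def toy_profit(order: Sequence[str]) -> float:
    """Hypothetical extraction function: the coalition earns 10 if its own
    transaction "attacker_buy" executes before "user_buy", otherwise 0."""
    return 10.0 if order.index("attacker_buy") < order.index("user_buy") else 0.0


arrival_order = ("user_buy", "transfer", "attacker_buy")  # what policy P would output
t_seq, u = best_reordering(arrival_order, toy_profit)

# Rational parties publish t_seq whenever it beats the honest order.
published = t_seq if u > toy_profit(arrival_order) else arrival_order
print(published, u)
```

Because the published sequence looks like a valid input stream, nothing in the output itself distinguishes it from an honest run.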

How can order fairness be achieved when parties are rational ? That is the next part of the talk

Circumventing the impossibility

The two approaches (13:30)

- Require clients to stay online until their transaction is committed. This prevents attacks since parties can't see contents until after ordering.

- Relax the binding property : allow the executed transaction to differ from the submitted one, as long as rational parties still follow correct behavior.

In reality, keeping clients online is cumbersome. A better approach is relaxing binding

For example, say a client submits a "buy 50 tokens" transaction. The executed transaction could be "sell 50 tokens" instead. This is problematic, but if rational parties still follow correct behavior, binding only needs to hold for them. This is "rational binding"

From this, we can create a protocol using rational binding that prevents "sandwich attacks" in certain applications like automated market makers (AMMs).

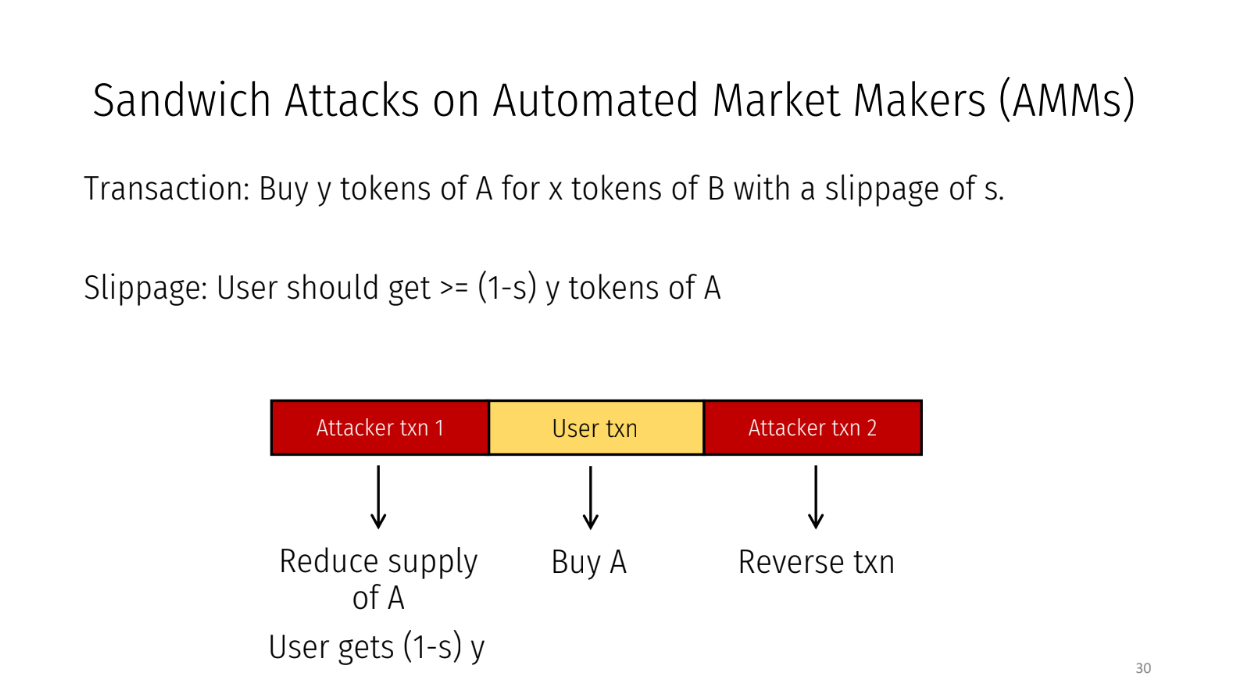

Sandwich attacks on AMMs (16:00)

In an AMM, we trade one token for another:

- The exchange rate depends on token availability

- A user submits a transaction like "Buy Y tokens of A for X tokens of B"

- There is a slippage parameter S to allow for rate changes

Attackers exploit this by placing one transaction before and one after the user's transaction. The first reduces A's availability, so the user gets a worse rate and may receive as little as (1 - S) × Y tokens. The attacker profits.

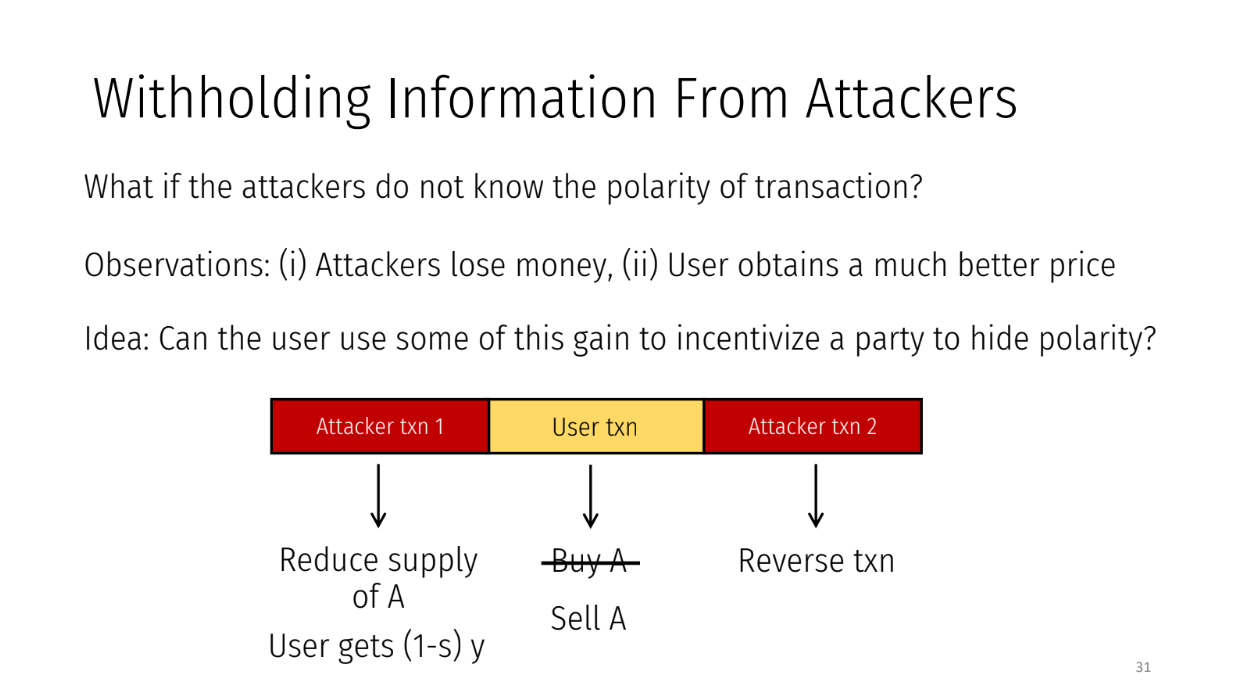

But how do attackers exploit slippage if transaction types (buy/sell) are hidden from them ?

If an attacker believes the target is a buy order when it is actually a sell order, the attacker loses money by performing the attack, and the user obtains a much better price than initially anticipated.
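Both cases can be checked on a toy pool. The sketch assumes a constant-product AMM with no fees, which the talk does not specify, and it does not model the slippage parameter S. Reserves and trade sizes are made up.

```python
from typing import Tuple


class Pool:
    """Constant-product AMM (reserve_a * reserve_b stays constant), no fees."""

    def __init__(self, reserve_a: float, reserve_b: float) -> None:
        self.a = reserve_a
        self.b = reserve_b

    def buy_a(self, b_in: float) -> float:
        """Pay b_in of token B, receive token A."""
        k = self.a * self.b
        self.b += b_in
        a_out = self.a - k / self.b
        self.a -= a_out
        return a_out

    def sell_a(self, a_in: float) -> float:
        """Pay a_in of token A, receive token B."""
        k = self.a * self.b
        self.a += a_in
        b_out = self.b - k / self.a
        self.b -= b_out
        return b_out


def run(victim_is_buy: bool, attack_size: float,
        victim_size: float = 100.0) -> Tuple[float, float]:
    """Attacker buys A before the victim and sells it back afterwards.
    Returns (attacker profit in B, amount the victim receives)."""
    pool = Pool(1000.0, 1000.0)
    a_bought = pool.buy_a(attack_size)
    victim_out = pool.buy_a(victim_size) if victim_is_buy else pool.sell_a(victim_size)
    b_back = pool.sell_a(a_bought)
    return b_back - attack_size, victim_out


profit_buy, victim_buy_out = run(victim_is_buy=True, attack_size=50.0)
_, victim_buy_fair = run(victim_is_buy=True, attack_size=0.0)
profit_sell, victim_sell_out = run(victim_is_buy=False, attack_size=50.0)
_, victim_sell_fair = run(victim_is_buy=False, attack_size=0.0)

print(f"victim buys : attacker profit {profit_buy:+.2f}")
print(f"victim sells: attacker profit {profit_sell:+.2f}")
```

When the guess about the direction is right, the attacker profits and the victim receives less than without the attack. When the guess is wrong, the attacker loses and the victim receives more.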

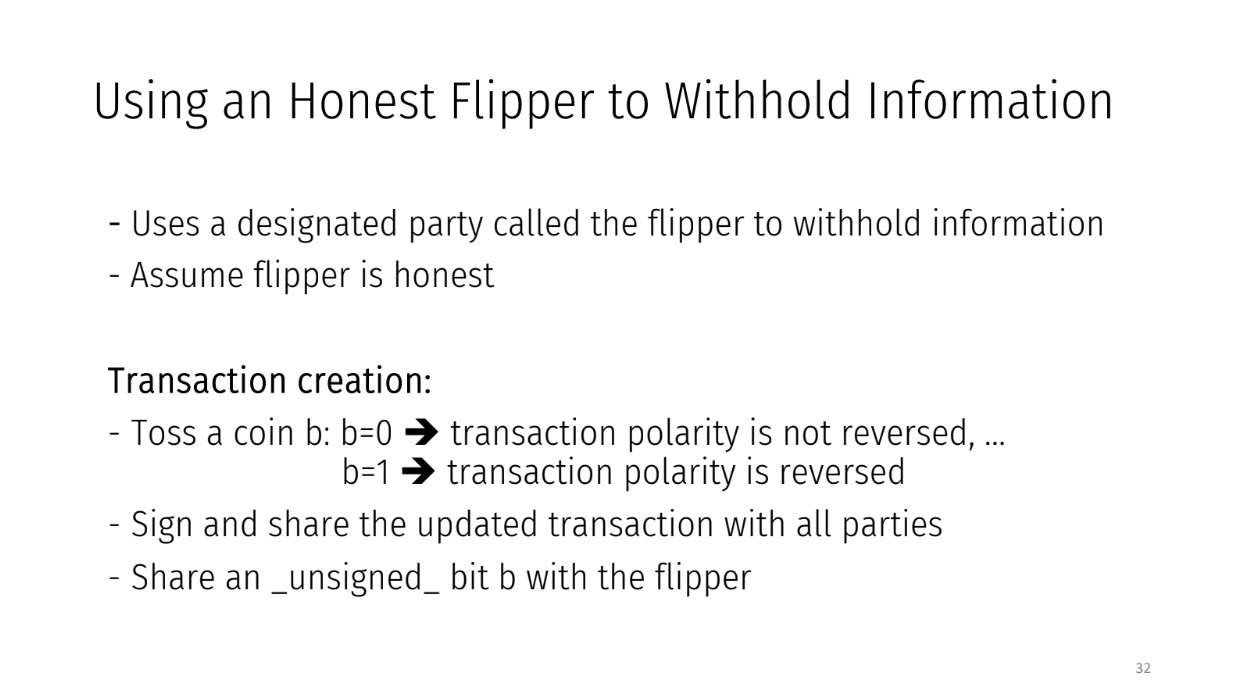

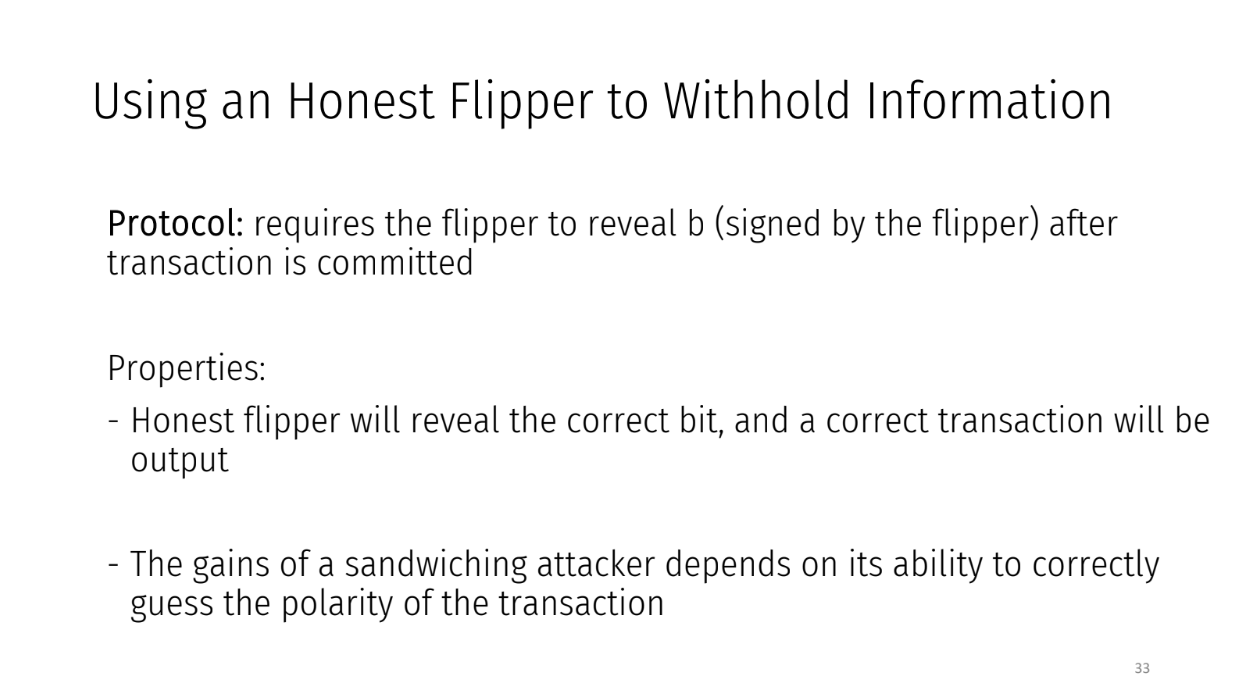

Using an honest flipper to withhold information (18:30)

A "flipper" party sees the real transaction but hides it from other parties. This confuses them so they can't sandwich attack, and the flipper earns fees for its role.

Here is how it works with an honest flipper:

- User submits a "buy" transaction but flips it to "sell" with 50% probability before sharing with parties

- User shares real unsigned transaction with flipper

- Parties order the hidden transactions

- Flipper reveals the real transaction type after ordering

- This prevents sandwich attacks and guarantees correct execution

- Flipper earns fees when users get better prices from its help
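The message flow above can be sketched as follows. The hash commitment is an assumption about how the flipper could be held to its reveal, and the function names are hypothetical. Fees and penalties are not modeled.

```python
import hashlib
import secrets


def commit(side: str, nonce: bytes) -> bytes:
    """Hash commitment to the real side; the random nonce hides it until opened."""
    return hashlib.sha256(nonce + side.encode()).digest()


def opening_is_valid(commitment: bytes, side: str, nonce: bytes) -> bool:
    """True if (side, nonce) opens the commitment. A failed check is what would
    let the user penalize the flipper."""
    return commit(side, nonce) == commitment


def flip(side: str) -> str:
    return "sell" if side == "buy" else "buy"


# 1. User: publish the real side or its opposite, each with probability 1/2.
real_side = "buy"
public_side = flip(real_side) if secrets.randbelow(2) == 1 else real_side

# 2. Flipper: learns the real side from the user and commits to revealing it.
nonce = secrets.token_bytes(16)
commitment = commit(real_side, nonce)

# 3. Ordering: the other parties see only the possibly flipped side.
ordered = [{"side": public_side, "commitment": commitment}]

# 4. Reveal: after ordering, the flipper opens the commitment and the real
#    side is what gets executed.
executed_side = real_side if opening_is_valid(commitment, real_side, nonce) else None
print(public_side, executed_side)
```

From the ordering parties' point of view, the published side is correct only half the time, which is what makes a sandwich a coin flip for them.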

To incentivize a rational flipper, it must be rewarded when its tricks help users, and must be penalized if it colludes and reveals info early

This uses rational binding to relax transaction binding while preventing attacks. Kartik is exploring caveats like insufficient user funds, but it's an interesting idea to stop attacks with rational parties.

Q&A

How do you discern that the flipper was honest after the events occur ? (22:00)

The flipper doesn't need to actually be honest. The flipper makes a commitment to the user to reveal the correct transaction type later. If they don't, the user can penalize the flipper.

Wouldn't you need to pay the flipper at least as much profit as they could make from a sandwich attack ? Otherwise they could just attack instead of helping (23:00)

By flipping the transaction, the user can gain more than the sandwich attack profit.

This extra profit can be shared with the flipper as payment. The flipper earns from the user, not from attacking.

Does the AMM need special integration with the flipper ? (24:30)

Yes, after commitment the flipper must send an input to reveal the real transaction type. So some coordination is needed.

What prevents a malicious flipper from messing up transactions as a denial of service attack ? (25:00)

This is a limitation - a Byzantine flipper could attack and be penalized. They are relaxing transaction binding, so this attack is possible.

How is a flipper selected ? (25:45)

Kartik suggests that whoever proposes a block could be the flipper. No specific selection method is defined.

Protected Order Flow

Fair Transaction Ordering in a Profit-Seeking World

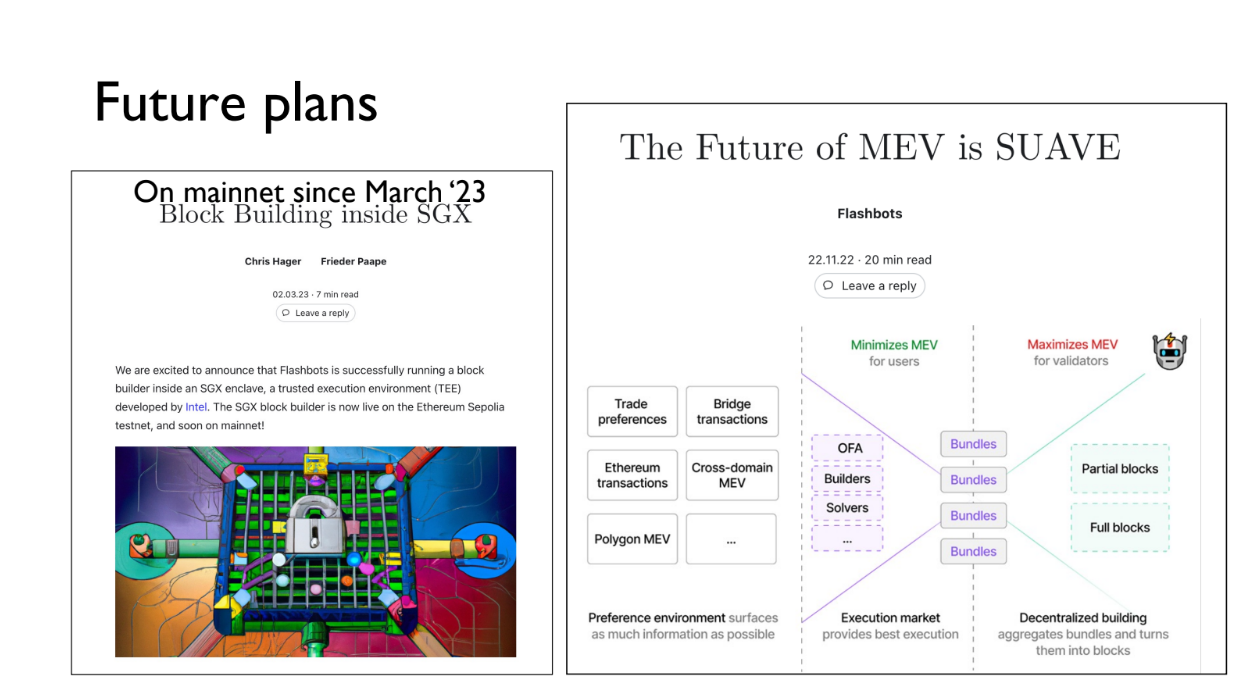

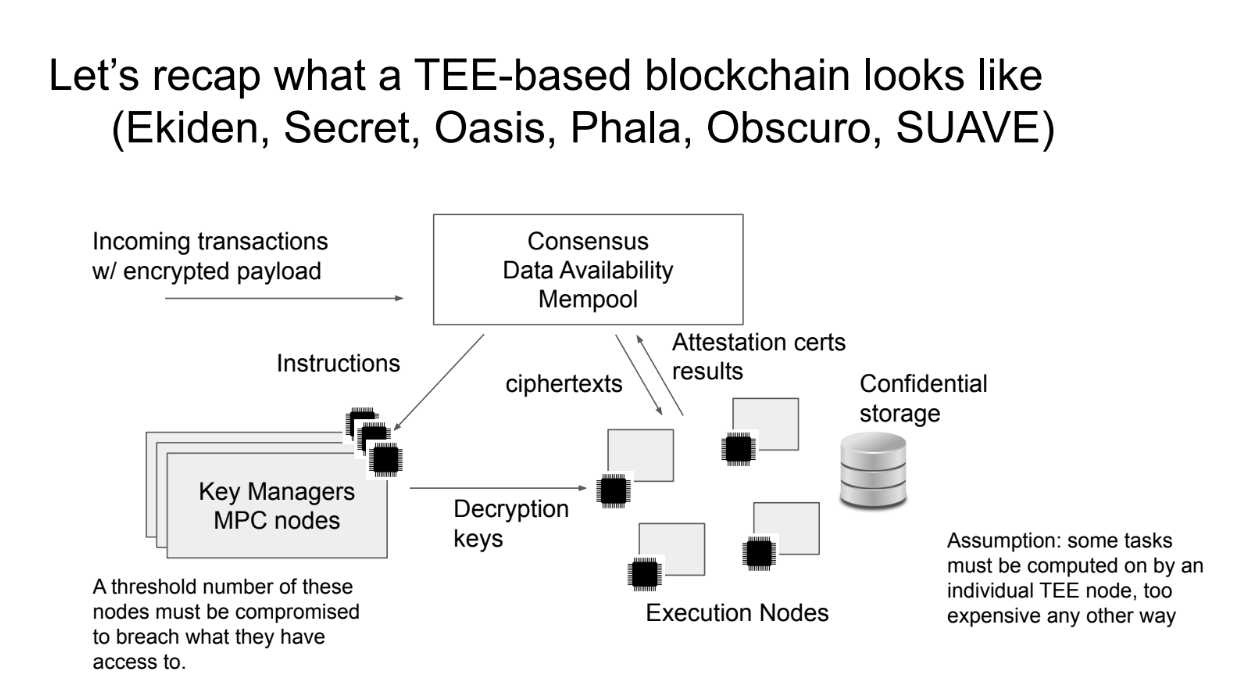

This talk is about an ongoing research project called Protected Order Flow (PROF) that aims to enable fair ordering of transactions on blockchain networks like Ethereum.

The goal of PROF is to append bundles of transactions to blocks in a fair order, while still being compatible with existing systems like Flashbots.

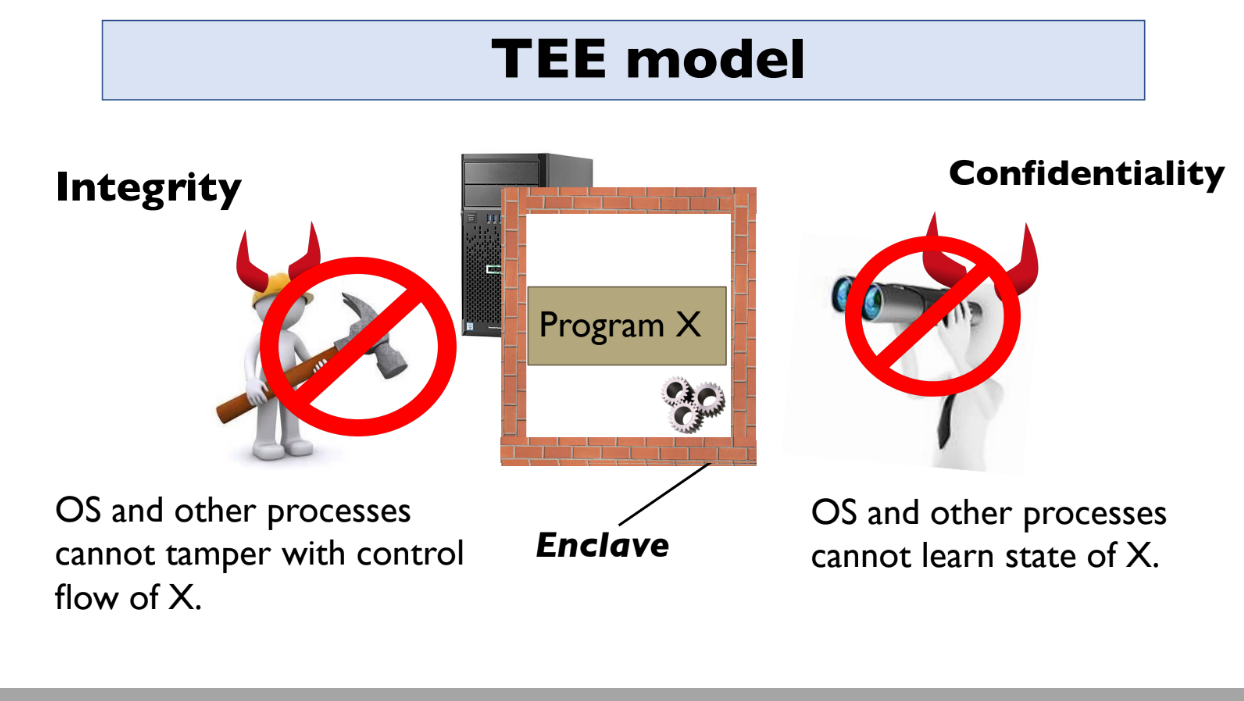

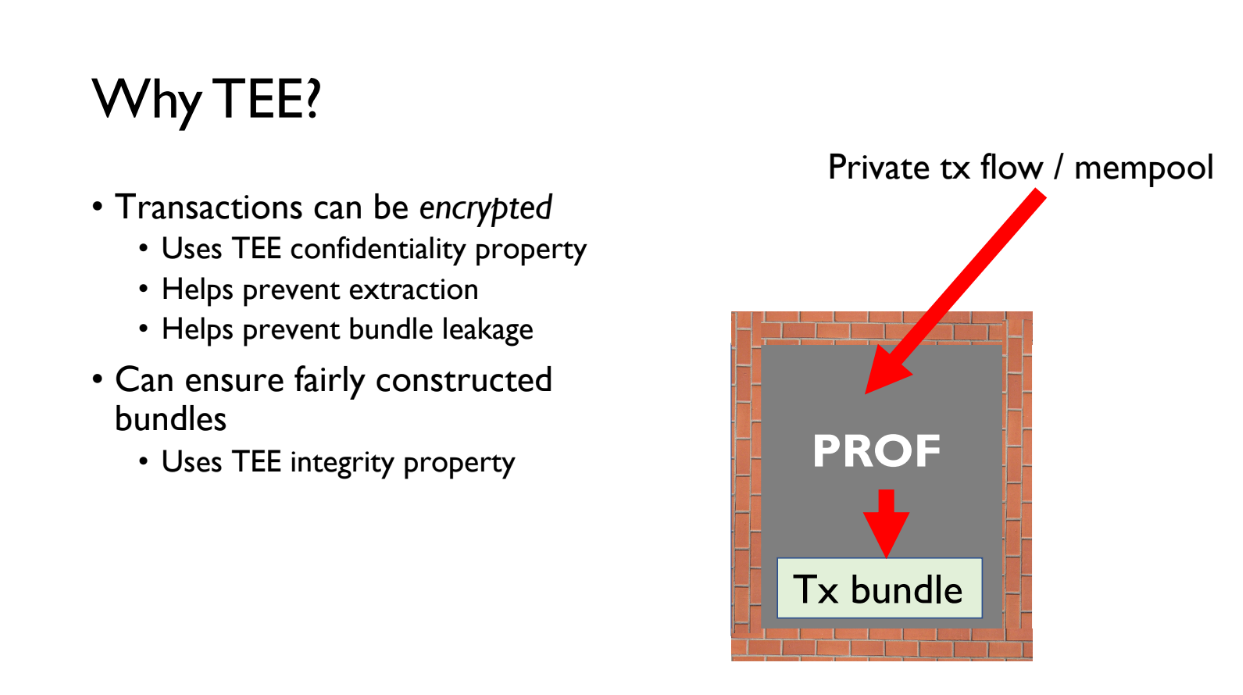

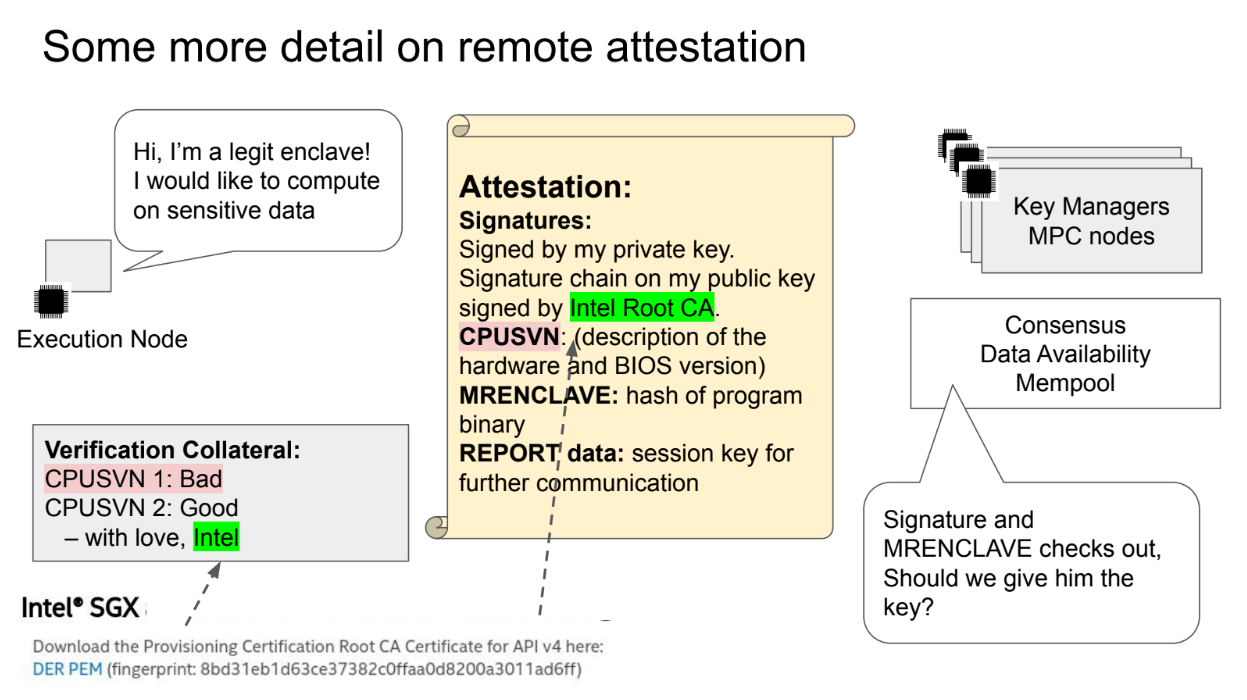

To do this, PROF leverages trusted execution environments (TEEs) like Intel SGX. TEEs allow you to run programs privately inside a protected environment.

The key properties of TEEs are:

- Integrity - no one can tamper with the program running inside

- Confidentiality - no one can see inside the program

- Attestation - TEEs can prove to users that they are running the correct program

Examples : AWS Nitro, Intel SGX, AMD SEV, ARM TrustZone

Using TEEs, PROF can run a program that securely orders transactions in a fair way and appends them to blocks.

This is different from previous systems because TEEs provide better trust guarantees. However, TEEs have some limitations around availability and side channel attacks.

TEEs for Block-Building and MEV supply chain

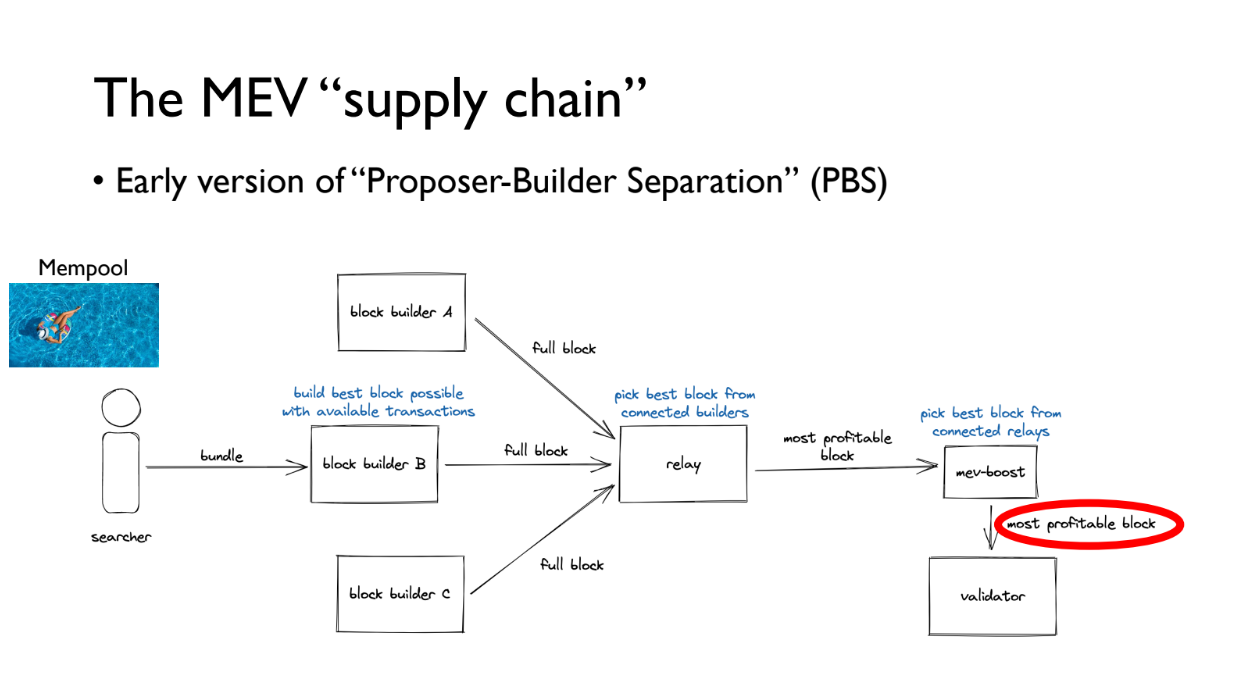

The MEV "supply chain" (4:00)

How the MEV supply chain works :

- There is a public mempool of transactions

- Searchers find profitable bundles of transactions and give them to block builders

- Block builders create full blocks and give them to relays

- Relays conduct auctions for the most profitable block in a fair way

- The most profitable block goes to the MEV-Boost plugin

- MEV-Boost makes sure validators only get the block after committing to include it

This system already uses TEEs, as Flashbots runs its builder in a TEE. PROF plans to use them as well, to append a special bundle of fairly ordered, protected transactions to blocks.

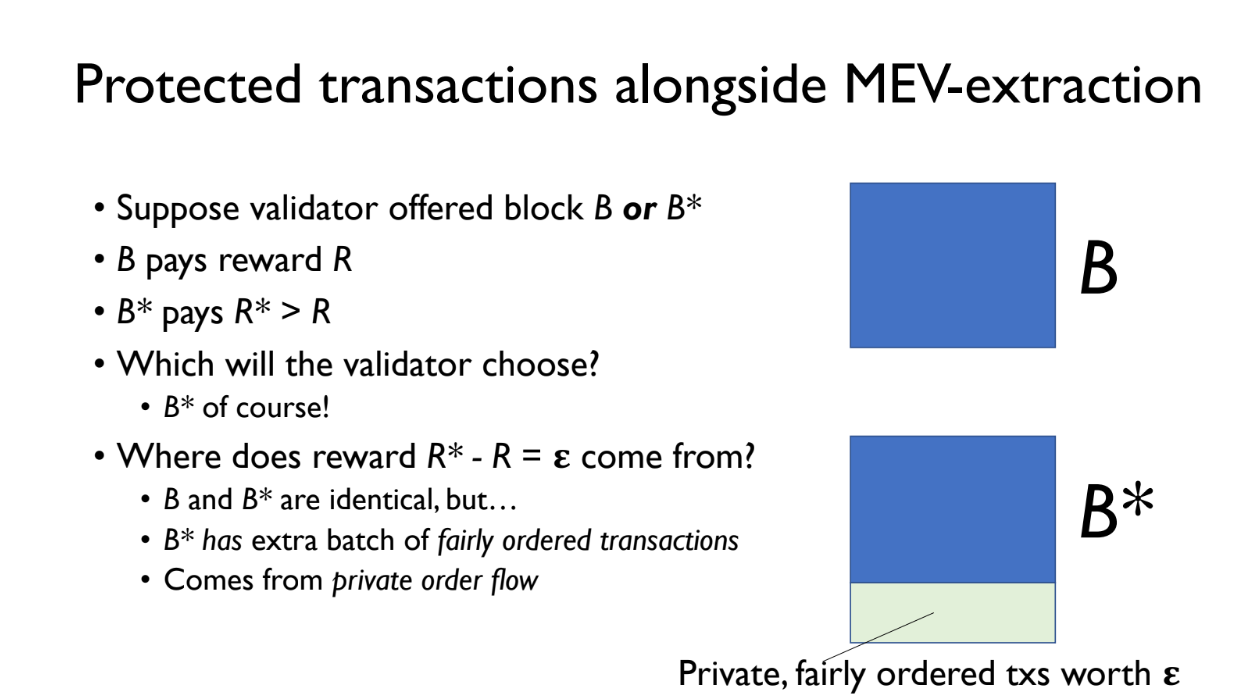

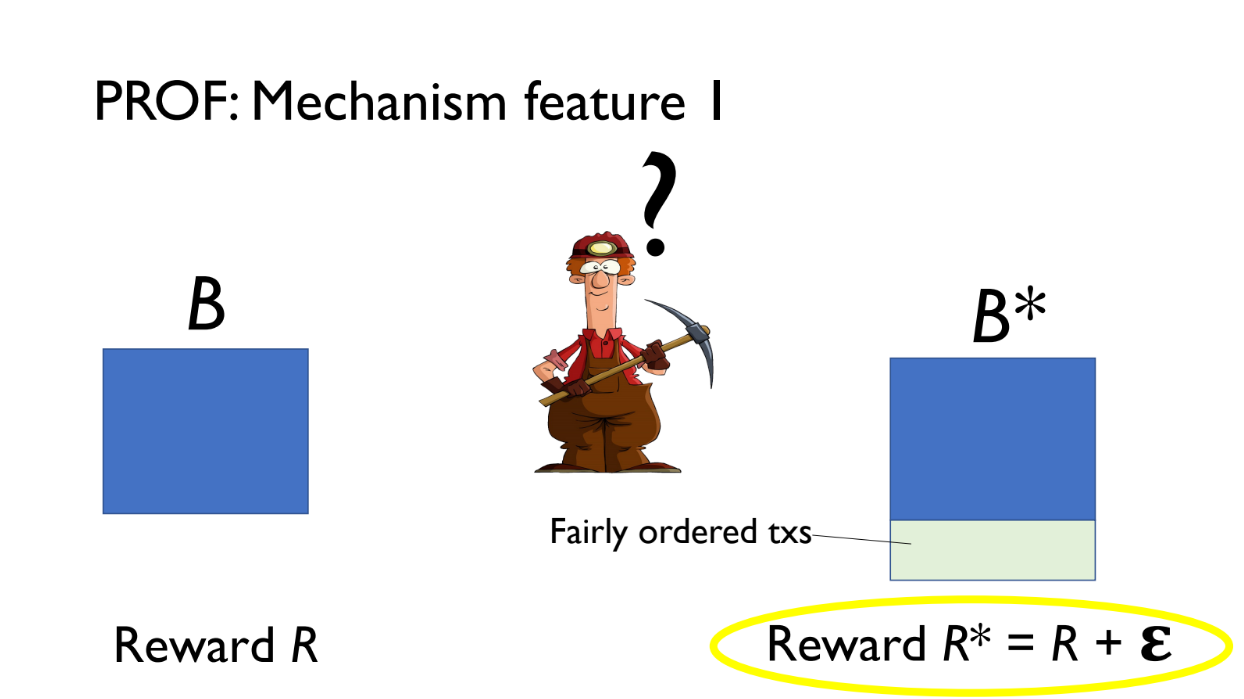

Protected transaction alongside MEV-extraction (5:15)

PROF creates two versions of a block:

- B = normal block

- B* = B plus an extra PROF bundle of transactions

Both B and B* are offered to the validator.

- B pays reward R

- B* pays reward R + epsilon (extra from PROF bundle fees)

The validator will choose B* since it pays more. The extra revenue epsilon comes from small fees paid by transactions in the PROF bundle
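The choice between B and B* can be sketched as below. The `Block` type, the transaction names and the reward values are made up for illustration.

```python
from typing import List, NamedTuple


class Block(NamedTuple):
    txs: List[str]
    reward: float  # payment offered to the validator


def append_prof_bundle(block: Block, bundle: List[str], bundle_fees: float) -> Block:
    """Build B* by appending the PROF bundle after the winning block's transactions."""
    return Block(txs=block.txs + bundle, reward=block.reward + bundle_fees)


b = Block(txs=["searcher_bundle", "swap"], reward=1.00)           # B, reward R
b_star = append_prof_bundle(b, ["prof_tx_1", "prof_tx_2"], 0.01)  # B*, reward R + epsilon

# A profit-maximizing validator picks whichever block pays more.
chosen = max([b, b_star], key=lambda blk: blk.reward)
print(chosen.txs, chosen.reward)
```

Because the bundle is appended at the end, the transactions of B keep their positions inside B*.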

These PROF transactions are kept confidential using the TEE so no one can extract MEV from them

Why TEE ? (6:30)

TEEs provide privacy and integrity : transactions are kept encrypted within the PROF program, and the TEE ensures the bundle follows the fair policy even if PROF runs on untrusted hosts.

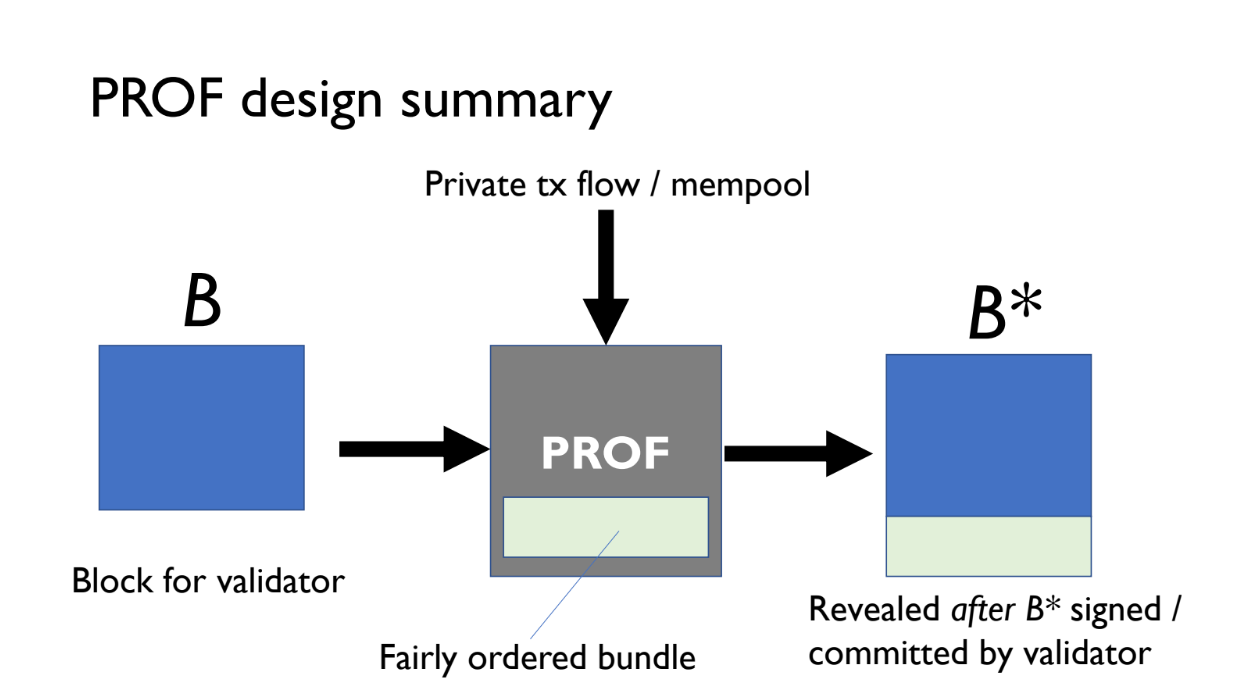

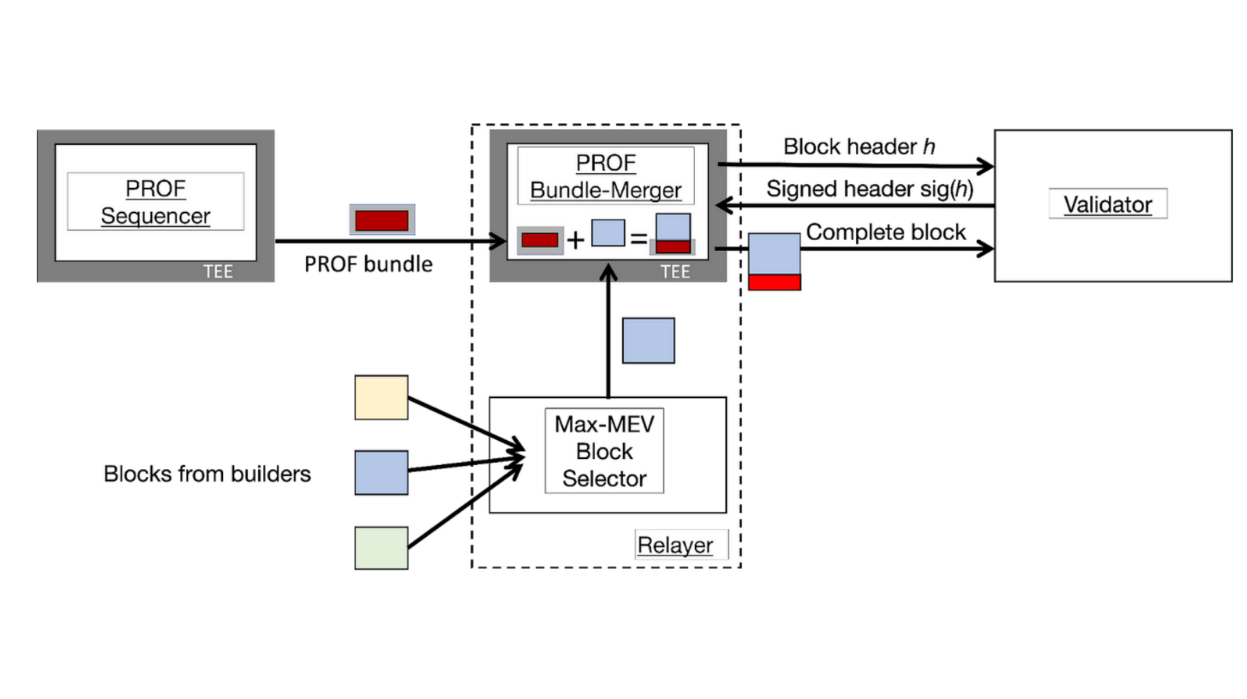

PROF has 2 main components (7:30)

PROF Sequencer

- Forms bundles of transactions

- Can enforce different ordering policies

- There can be multiple Sequencers with different policies

- Sequencer encrypts the bundle to protect transactions

PROF Bundle Merger

- Runs inside a TEE at the relayer

- Relayer does its normal auction for blocks

- Winning block is sent to the Bundle Merger

- Merger appends encrypted bundle from Sequencer to the block

The relayer operates normally. The PROF components allow appending a special encrypted bundle with fair ordering to the winning block.

The Bundle Merger makes sure the full block is only released after validator commitment.

This design separates bundle creation from merging to allow flexible policies and leverages TEEs to keep transactions confidential.

Features

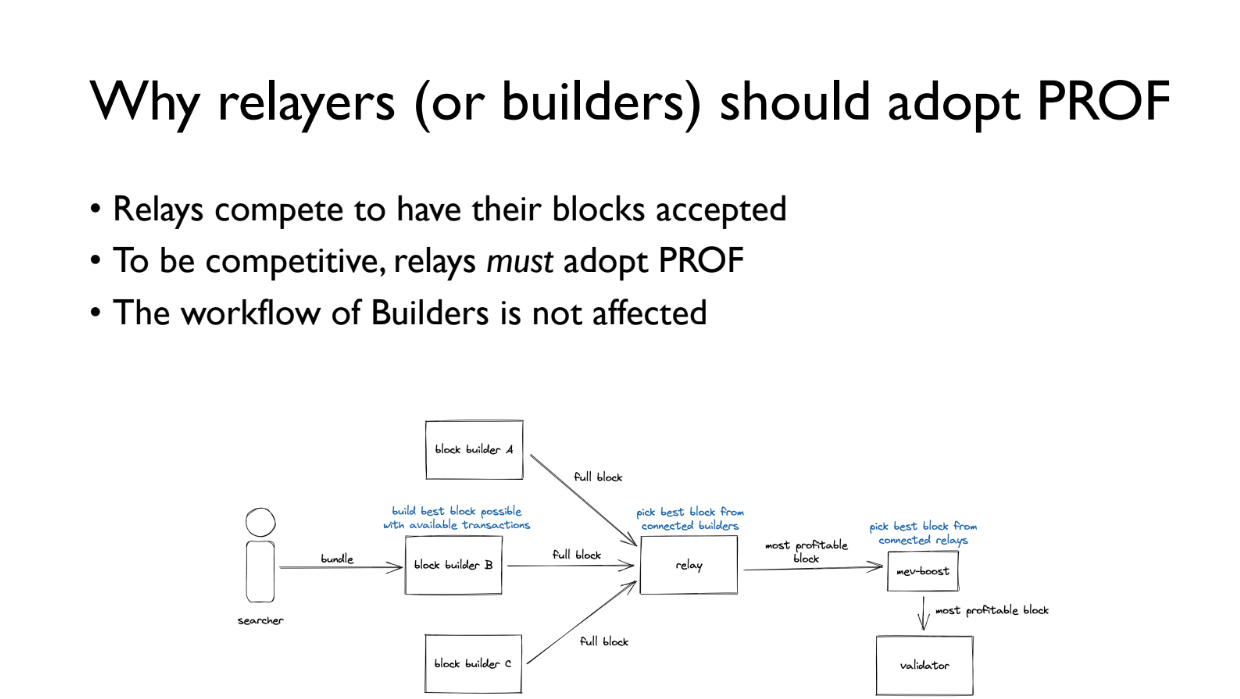

Fairly Ordered Transactions (9:15)

Validators profit more by including the PROF bundle, so are incentivized to use it

Relayers compete to have their blocks included by validators. By running PROF Bundle Merger, they can include extra PROF transactions to earn more fees and be chosen more often

The builders' workflow is not affected. The PROF bundle is appended after their block, so their MEV extraction remains the same

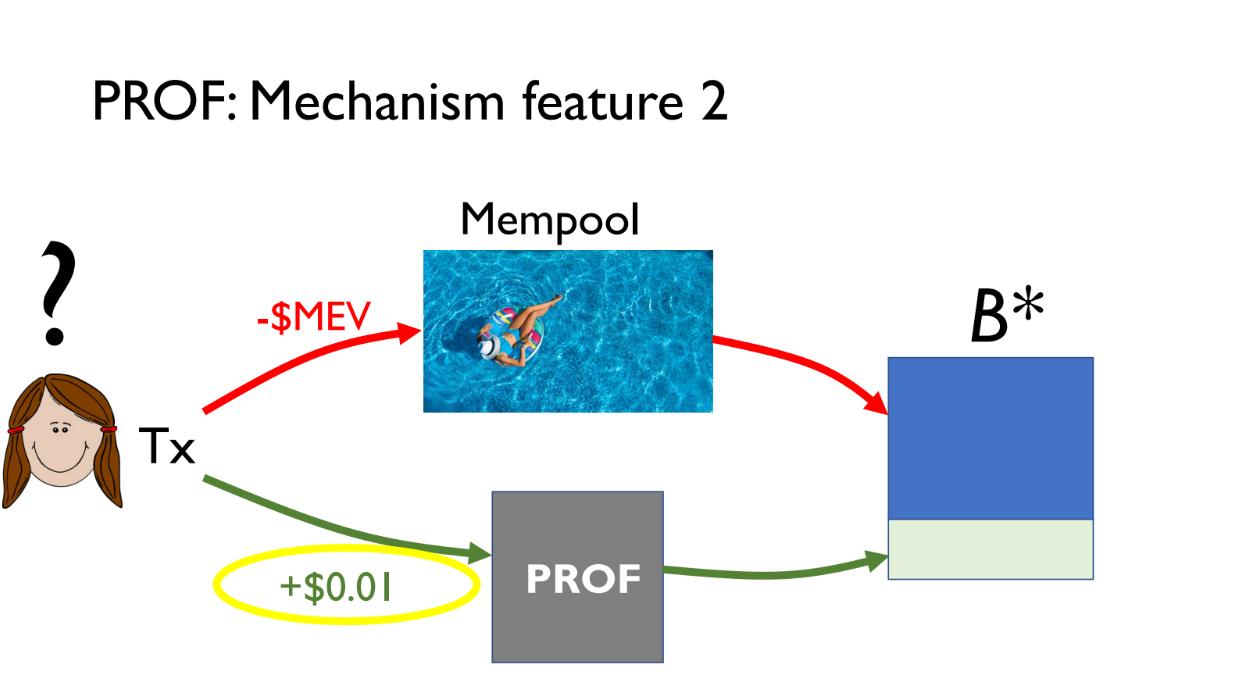

Users can either use the public mempool and risk MEV extraction, or pay a small fee to use PROF for fair ordering and transaction privacy

Things to work out (11:30)

PROF is designed so all parties benefit from adopting it. But there are still open questions to align everyone's incentives :

- Should PROF run at builders or relayers ? This implies tradeoffs around security and latency.

- How to handle PROF payments efficiently ?

- Can there be multiple PROF Sequencers with different policies ?

More analysis of the mechanism design and comparisons to other systems are needed.

The concerns (12:30)

Kushal recommends reading the PROF blog post on the IC3 website to learn more details about the design and considerations. Two potential concerns are addressed :

- PROF can use different fair ordering schemes (First come first serve, Causal ordering where transactions stay private until on-chain, both or neither)

- Trusted execution environments (TEEs) have some limitations like availability failures and side channel attacks

Bothering with TEEs (13:30)

There are some serious limitations to using trusted execution environments (TEEs) like Intel SGX. There have been many security vulnerabilities and attacks against TEEs, especially around confidentiality (major attack in August 2022)

But without TEEs, user transactions have no protection and are public anyway. TEEs provide additional security on top of the status quo.

There are also ways to improve TEE security :

- New TEE designs and attack mitigations are being researched.

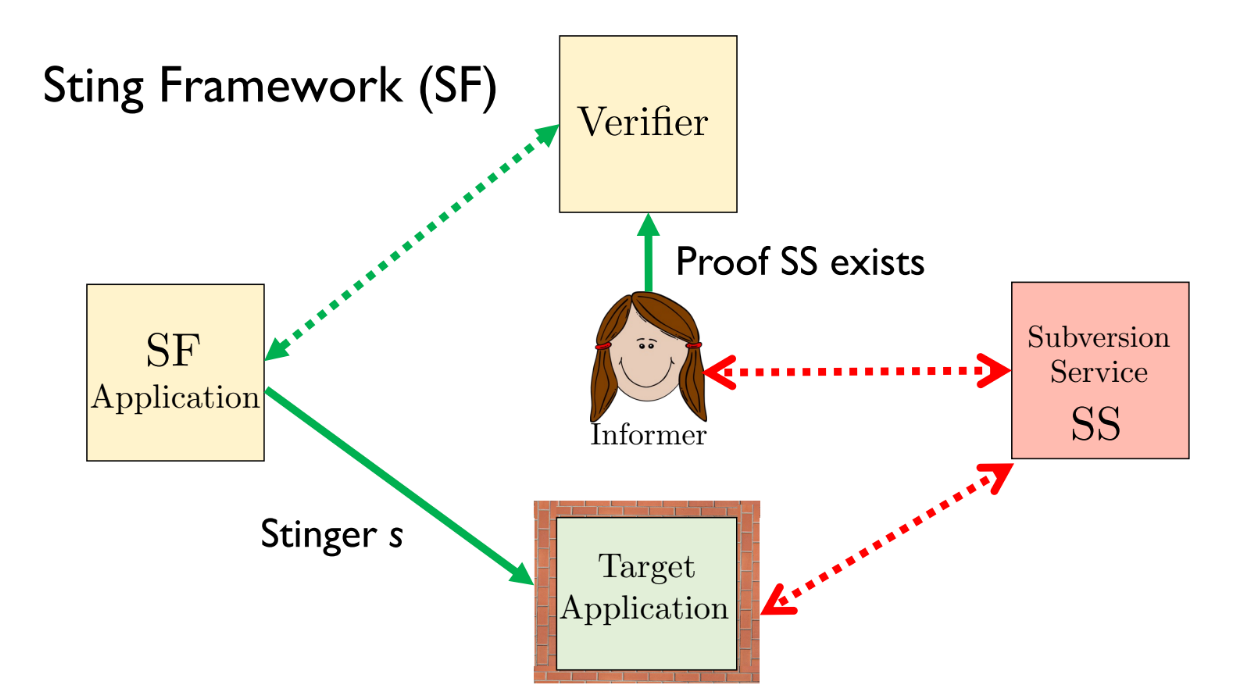

- The "Sting" framework is one approach to protect against TEE vulnerabilities

Q&A

Currently the relayer needs to build a full block and bid on it to participate. How does PROF change this, and should it run at builders or relayers ? (15:00)

The bundle merging happens at the relayer in PROF's design. The builder workflow doesn't change - they make the same payment to validators.

The bid changes because the block now contains the additional PROF bundle, so validators see a higher bid.

There are different ways to share profits between validators, relayers and builders. TEEs can help enforce policies around profit sharing. The goal is for all parties to benefit in some way.

Appending a PROF bundle may increase latency, and higher latency generally means lower value. How to incentivize around block value and latency ? (16:15)

PROF bundle would only be used when latency costs are low towards the end of the bidding window. Relayers can run both normal and PROF bidding in parallel and take the more profitable block.

For high value low latency blocks, normal bidding would be used and PROF transactions would wait. This maximizes overall profitability.

Do you think that people would use this as a way to minimize tips (compared to using the public mempool) ? (18:30)

Using PROF still requires paying a tip, but protects against MEV extraction which is a risk with the public mempool.

Another person noted that PROF transactions go at the end of the block, so you lose priority. This may not work for arbitrage where you want priority.

Kushal agrees PROF is not for users who need top priority. It's for those okay having transactions at the bottom. Researchers are exploring inserting bundles earlier in the block.

Is there a potential design where you could submit private bundles that included transactions from the public mempool ? (20:00)

We could do that, but duplicate transactions get dropped when merging the bundle, so our sandwich may not succeed

Could relayers collude with validators to steal the PROF block from the TEE without publishing it ? (20:30)

If enough validators collude, attacks can't be prevented. But having an honest validator quorum would prevent this collusion.

How does the TEE know which validator's signatures it should release the PROF block to ? (22:00)

TEE runs something like a light client tracking validator sets and network state. Checkpointing and rollback protection help prevent network attacks on the TEE's view.

Intro

Phil argues geographic decentralization is the most important property for cryptocurrencies and blockchain systems.

Cryptocurrencies aim to be "decentralized". But decentralization must be global to really disperse power. Protocols should be designed so nodes can be run from anywhere in the world, not just concentrated regions.

Winning a Nobel Prize, jk (2:15)

Phil believes researching geographic decentralization techniques could be as impactful as winning a Nobel Prize. Even though there is no Nobel for computer science, Phil jokes that you can "win" by advancing this research area.

For consensus researchers, this should be a top priority especially during the "bear market", which is a good time to focus on building technology, rather than just speculation.

Geographic decentralization > Consensus (3:00)

Rather than just studying abstract models like Byzantine Fault Tolerance, researchers should focus on geographic decentralization, because this is what makes systems like Ethereum truly decentralized.

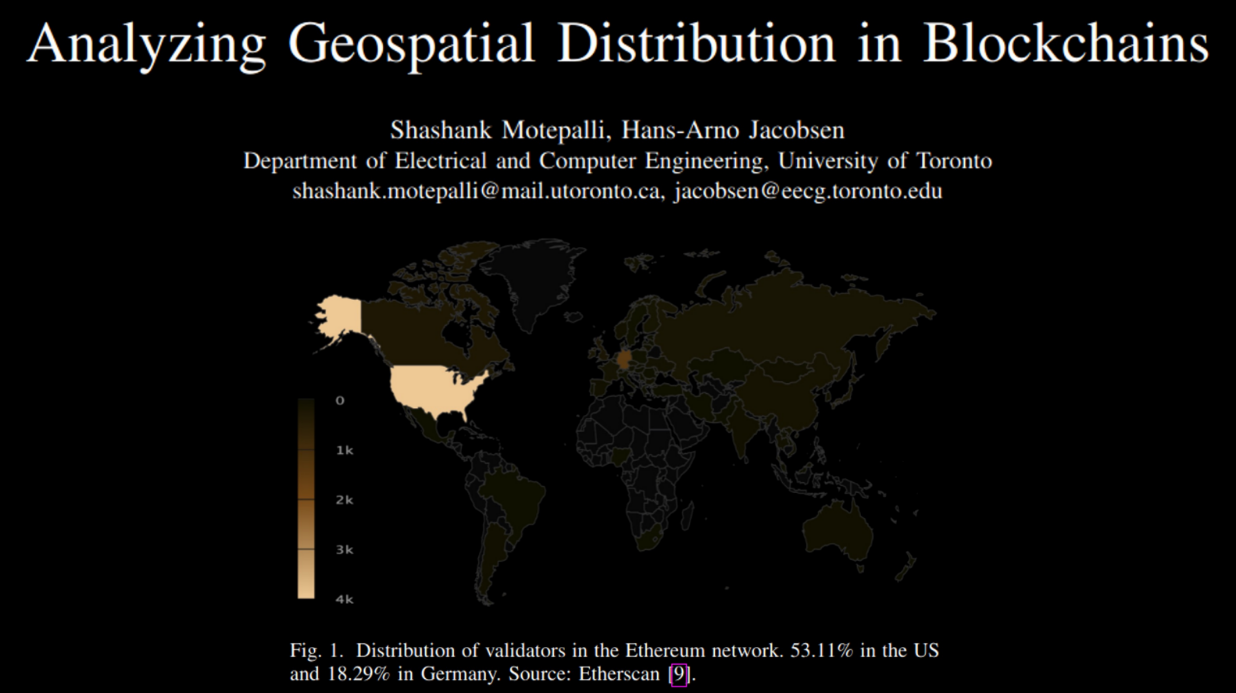

Geospatial Distribution (5:00)

Right now, 53.11% of Ethereum validators are located in the United States, and 18.29% in Germany. This means Ethereum is not as decentralized as people might think, since a large percentage of nodes are concentrated in one country.

Having nodes clustered in a few regions makes the network more vulnerable - if there are internet or power outages in those areas, a large part of the network could go offline. Spreading the nodes out across different countries and continents improves the network's resilience and fault tolerance.

...But why do we care ? (6:55)

Global decentralization is important for many reasons, even if technically challenging :

- To comply with regulations in different countries. If all the computers running a cryptocurrency are in one country, it may not comply with regulations in other countries.

- To be "neutral" and avoid having to make tough ethical choices. There is an idea in tech that systems should be neutral and avoid ethical dilemmas, much as Switzerland is viewed as neutral.

- For use cases like cross-border payments that require geographic decentralization to work smoothly.

- To withstand disasters or conflicts. If all the computers are in one place, a disaster could destroy the system. Spreading them worldwide makes the system more resilient.

- For fairness. If all the computers are in one region, people elsewhere lose input and control. Spreading them globally allows more fair participation.

- For network effects and cooperation. A globally decentralized system allows more cooperation and growth of the network.

The recipe

What is geographical decentralization ? (11:00)

Phil believes the most important type of decentralization for crypto/blockchain systems is decentralization of power, specifically economic power.

Technical forms of decentralization (like number of validators) matter only insofar as they impact the decentralization of economic power.

A metric to study (12:25)

Some terminology :

- G = an economic game/mechanism

- P = the set of players in game G

- N = a network where players experience real-world latencies

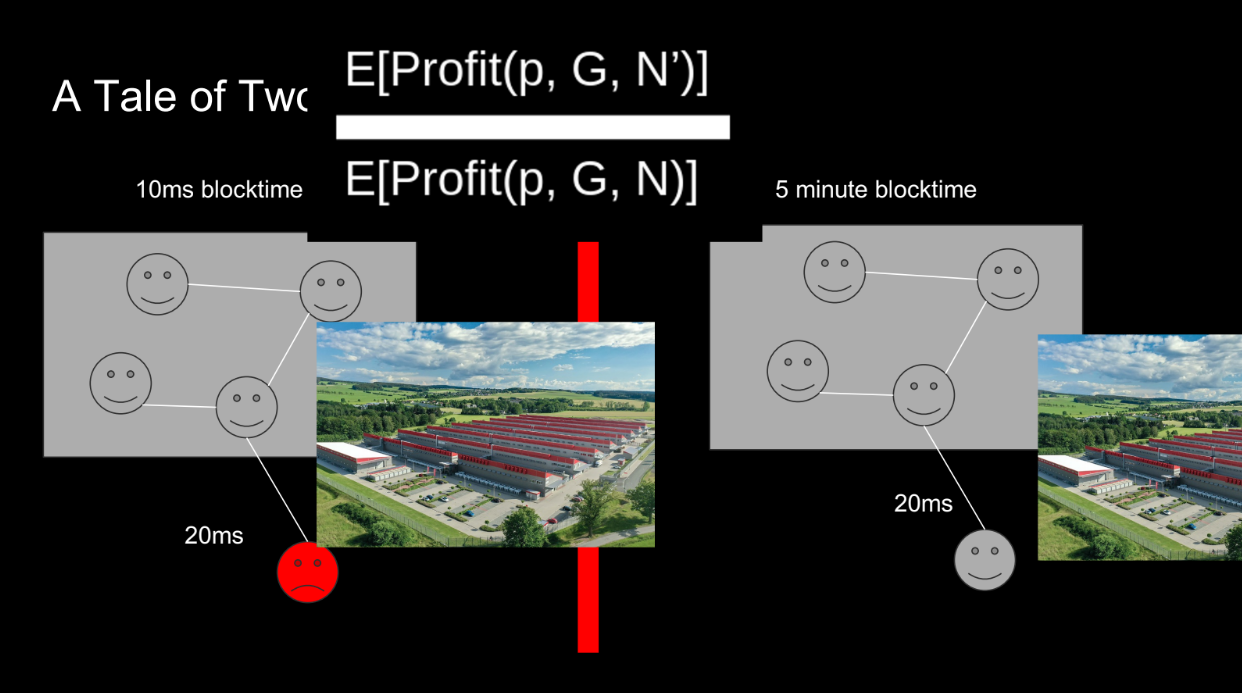

- N' = a network where players have minimal latency to each other

- p = an arbitrary rational player in P

The "geocentralization coefficient" refers to the ratio of a player's expected profit in the minimal latency network N' versus the real-world latency network N.

A high coefficient means there is more incentive for players to be geographically co-located. The goal is to keep this coefficient low, to reduce incentives for geographic centralization.
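Written out with the terminology above, the coefficient for a player $p$ takes the following form. The symbol $\gamma_G(p)$ and the $\mathrm{profit}_p$ notation are assumptions for illustration and may not match the slides.

```latex
\gamma_G(p) \;=\; \frac{\mathbb{E}\left[\mathrm{profit}_p(G, N')\right]}{\mathbb{E}\left[\mathrm{profit}_p(G, N)\right]}
```

A value close to 1 means that reducing latency barely changes the player's expected profit.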

Some examples

Fast blockchains have higher coefficient (14:00)

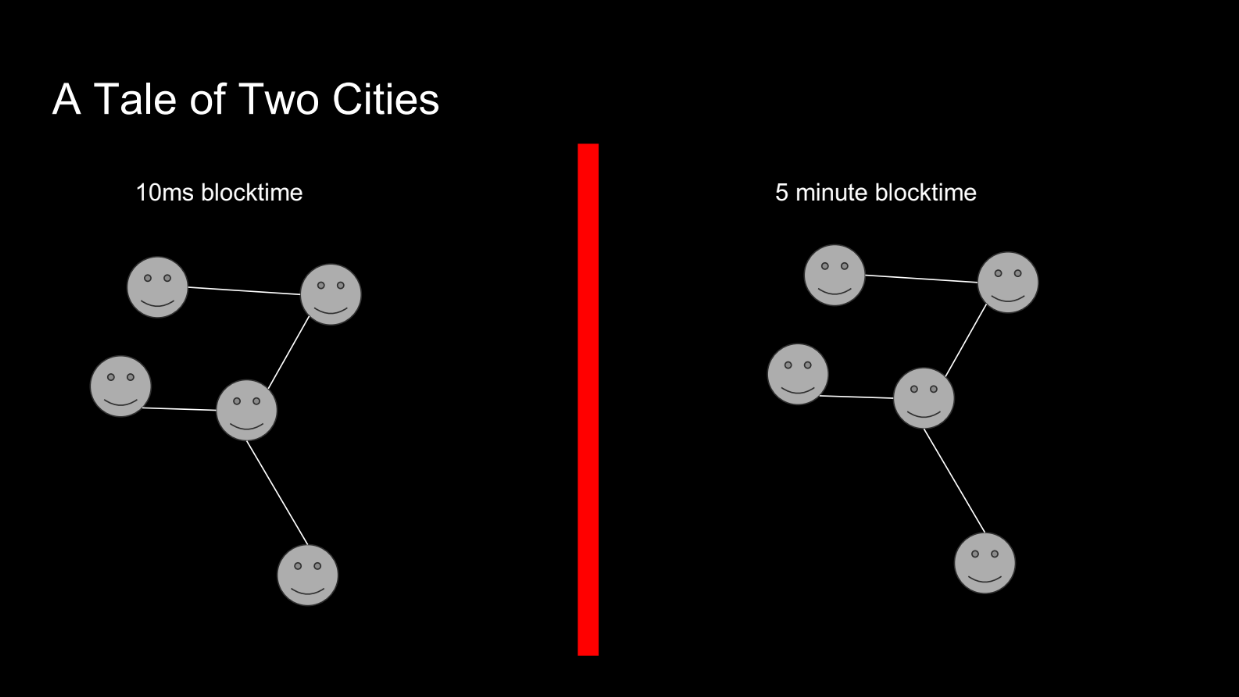

Let's give an example comparing 2 blockchains : one with fast 10ms blocks, one with 5 min blocks :

- In the fast blockchain, a node with just 20ms higher latency cannot participate, so its profit is zero.

- In the slower blockchain, 20ms doesn't matter much. The profits are similar.

Therefore, the fast blockchain has a higher "geocentralization coefficient" - it incentivizes nodes to be geographically closer. So a fast blockchain like Solana is less geographically decentralized than a slower one like Ethereum.
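The comparison can be sketched with a toy profit model. The model is a hypothetical simplification, not something from the talk: a player loses the fraction of each block interval spent waiting on the network, and earns nothing once the extra latency reaches the block time.

```python
import math


def geocentralization_coefficient(profit_min_latency: float,
                                  profit_real_latency: float) -> float:
    """Ratio of a player's expected profit in N' (minimal latency) to N (real latency)."""
    if profit_real_latency == 0:
        # No profit in either network is treated as "no advantage" (an assumption).
        return math.inf if profit_min_latency > 0 else 1.0
    return profit_min_latency / profit_real_latency


def toy_profit(block_time_ms: float, extra_latency_ms: float, reward: float = 1.0) -> float:
    """Hypothetical profit model; see the assumptions stated above the code."""
    if extra_latency_ms >= block_time_ms:
        return 0.0
    return reward * (1.0 - extra_latency_ms / block_time_ms)


EXTRA_LATENCY_MS = 20.0
FAST_BLOCK_MS = 10.0        # 10 ms blocks
SLOW_BLOCK_MS = 300_000.0   # 5 min blocks

fast = geocentralization_coefficient(
    toy_profit(FAST_BLOCK_MS, 0.0), toy_profit(FAST_BLOCK_MS, EXTRA_LATENCY_MS))
slow = geocentralization_coefficient(
    toy_profit(SLOW_BLOCK_MS, 0.0), toy_profit(SLOW_BLOCK_MS, EXTRA_LATENCY_MS))
print(fast, slow)
```

Under this model the fast chain's coefficient is unbounded because the distant node earns nothing, while the slow chain's coefficient stays just above 1.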

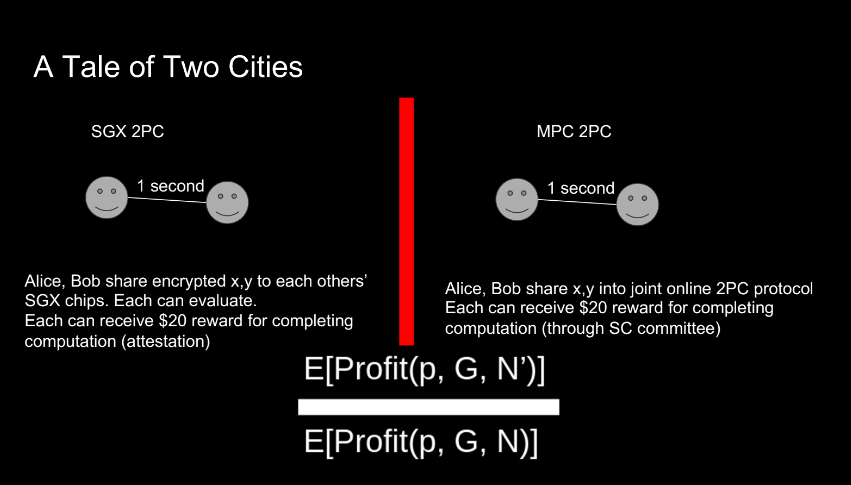

SGX and MPC (16:45)

Let's try another example with 2 parties trying to compute a result and get paid $20 each :

- MPC protocols require multiple rounds of communication between parties to compute a result securely. With higher network latency between parties, the time to complete the MPC protocol increases.

- Using SGX, they can encrypt inputs and get the output fast with 1 round of messaging. Latency doesn't matter much.
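A back-of-the-envelope latency model makes the difference concrete. The round count for MPC and the round-trip times are hypothetical numbers, and compute time is ignored by default.

```python
def completion_time_ms(rounds: int, round_trip_ms: float, compute_ms: float = 0.0) -> float:
    """Time to finish a protocol that needs `rounds` sequential message round trips."""
    return rounds * round_trip_ms + compute_ms


MPC_ROUNDS = 50  # hypothetical round count for a multi-round MPC protocol
SGX_ROUNDS = 1   # inputs sent once to the enclave, output returned

for rtt in (1.0, 150.0):  # co-located vs intercontinental round-trip time, in ms
    mpc = completion_time_ms(MPC_ROUNDS, rtt)
    sgx = completion_time_ms(SGX_ROUNDS, rtt)
    print(f"rtt={rtt} ms: MPC {mpc} ms, SGX {sgx} ms")
```

The absolute cost of being far apart grows with the number of rounds, which is why the multi-round protocol creates more pressure to co-locate.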

Adding MPC to blockchain systems can be useful, but protocols should be designed carefully to avoid increased geographic centralization pressures.

SGX FTW ? (19:00)

Phil concludes that SGX protocols can be more geographically decentralized than MPC protocols.

This is counterintuitive, since MPC is often seen as more decentralized. But because SGX has lower latency needs than multi-round MPC protocols, it has a lower "geocentralization coefficient", meaning less incentive for geographic centralization.

Q&A

How does the actual physical nature of the Internet inform this concept of geographical distribution ? (20:15)

The internet itself is not evenly distributed globally. This limits how decentralized cryptocurrencies built on top of it can be.

More research is needed into how the physical internet impacts decentralization.

Would proof of work be considered superior to proof of stake for geographic decentralization ? (22:15)

Proof-of-work (PoW) cryptocurrencies may be more geographically decentralized than proof-of-stake (PoS), because PoW miners have incentives to locate near cheap electricity.

However, PoW also has issues like mining monopolies. So it's not clear if PoW is ultimately better for geographic decentralization.

Would you agree that the shorter the block times, the more decentralization we can have in the builder market ? (24:00)

Shorter block times may help decentralize the "builder market", meaning more block builders can participate. But it's an open research question, not a definitive advantage.

Are you aware of any research done on how do you incentivize geographic decentralization? (26:30)

One very early experiment was an SGX-based geolocation protocol, with incentives possibly layered on top of it. But Phil doesn't have a definitive answer here.

What do you think about the viability of improving physical network infrastructure, such as providing decentralized databases or incentivizing solo stakers ? (29:00)

Improving physical infrastructure like decentralized databases could help decentralization.

Governments should consider subsidizing data centers to reduce compute costs. This makes it easier for decentralized networks to spread globally. This is important not only for blockchains but also emerging technologies like AI. Compute resources will be very valuable in the future.

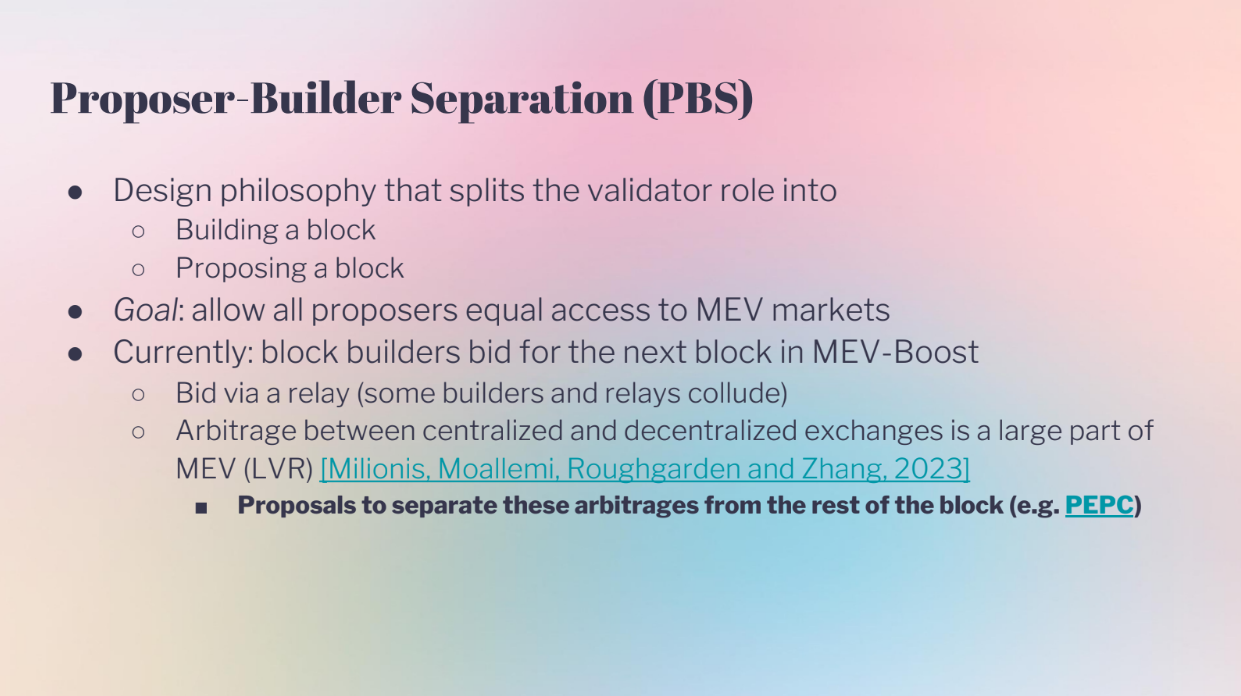

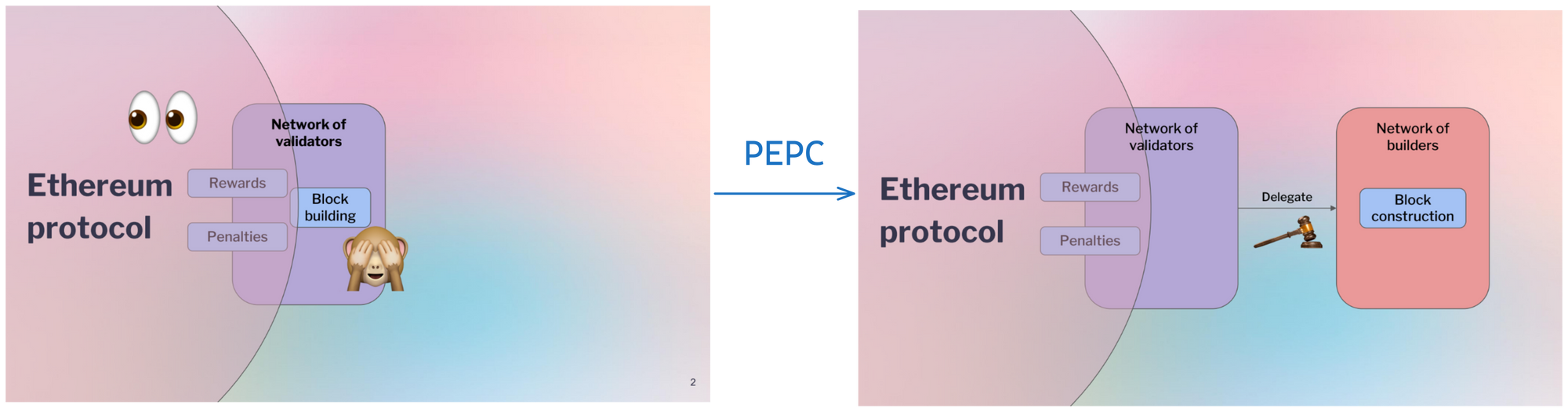

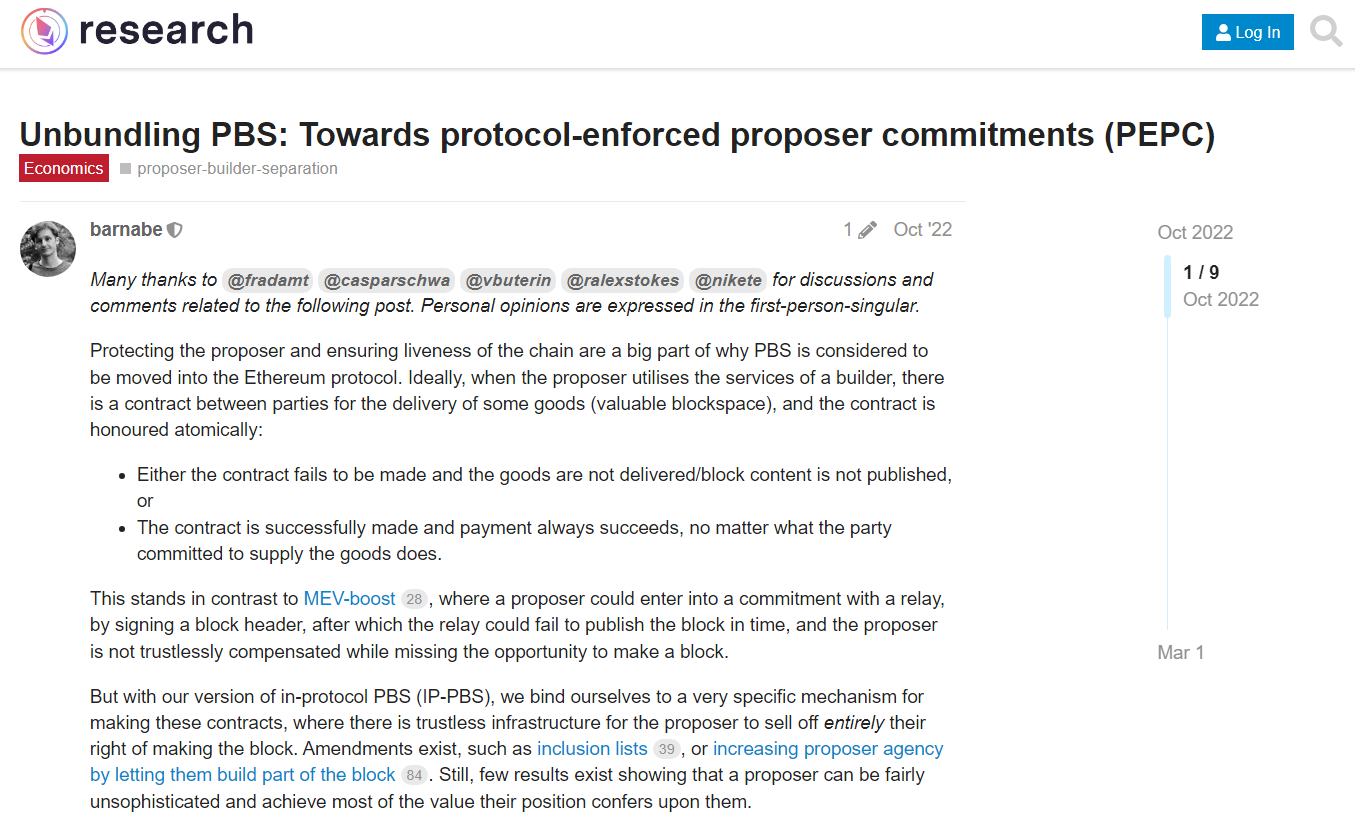

Proposer Builder Separation "PBS" (0:00)

PBS separates the role of validators into proposing blocks and building blocks. This helps decentralize Ethereum by allowing anyone to propose blocks, while keeping the complex block building to specialists. The goal is to allow equal access to MEV (maximal extractable value).

But currently, PBS auctions like MEV-Boost have issues :

- Bids happen through relays which could collude or have latency advantages.

- Arbitrage between exchanges is a big source of MEV

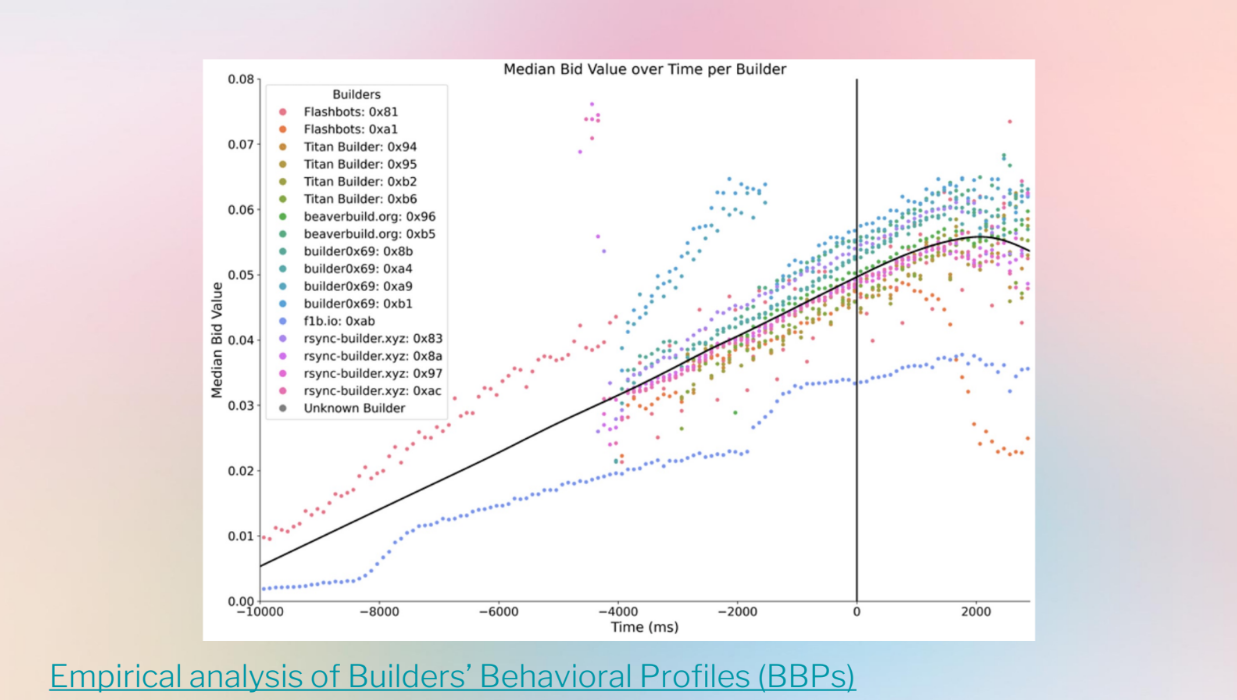

- The auction data shows messy and varying bid strategies between builders

Goals (2:30)

Robust Incentives Group is discussing research on modelling PBS theoretically to understand long term dynamics, not just the current messy data. They look at:

- How bidder latency affects profits and auction revenue

- Bidder strategies without modeling centralization or inventory risks

The goal is to improve PBS auctions like MEV-Boost by modeling bidder behaviors dynamically. This could help make MEV access more equal and decentralized long-term.

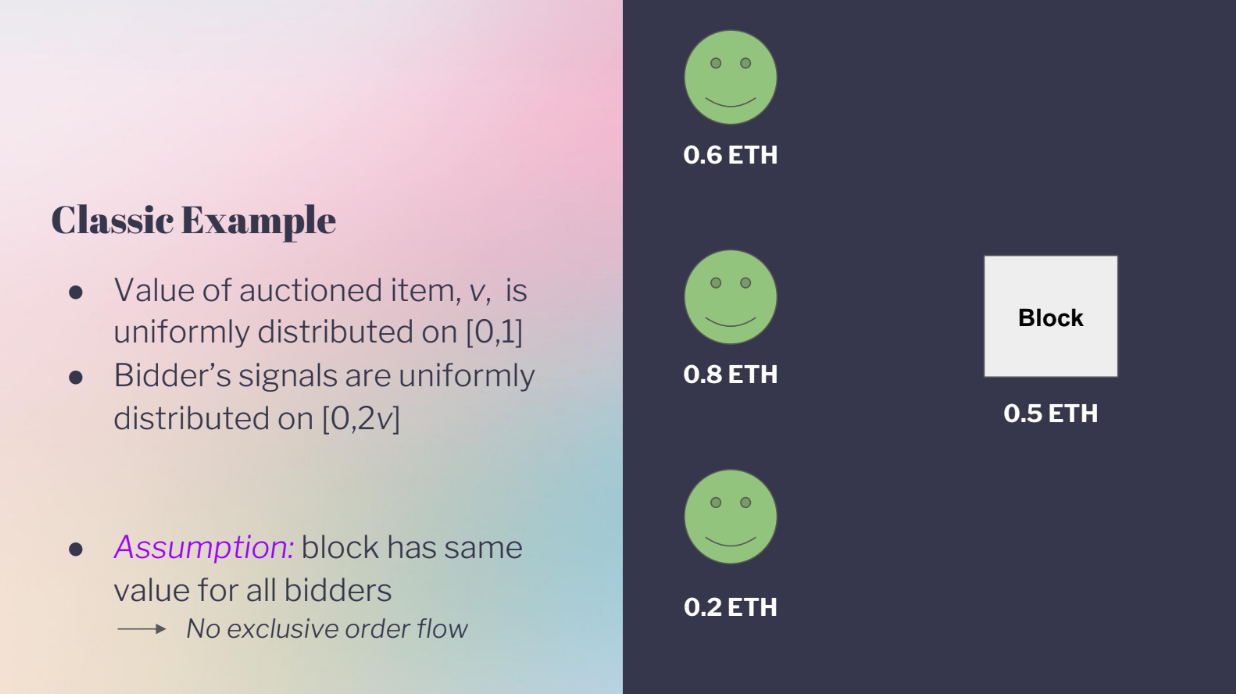

Classic example (3:10)

The classic example is as follows :

- There is one item being auctioned.

- All bidders agree on what the item is worth, but don't know the exact value.

- Each bidder gets a "signal" (guess) of what the value might be.

- Some bidders might overestimate or underestimate the actual value.

This models a PBS auction where builders bid for MEV in a block, as the actual MEV value is unknown during the bidding, and builders have different guesses on what a block will be worth.
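The classic setup above can be sketched as a small simulation. This is only an illustration under assumptions not taken from the talk: the common value is fixed at 100, each signal is the value plus independent Gaussian noise, and the bidder with the highest signal wins. The function name and parameters are hypothetical.

```python
import random

def simulate_common_value_auction(true_value=100.0, noise=10.0,
                                  n_bidders=5, n_rounds=20_000, seed=0):
    """Return the average signal held by the highest-signal bidder."""
    rng = random.Random(seed)
    total_winning_signal = 0.0
    for _ in range(n_rounds):
        # Each bidder sees the common value plus independent, unbiased noise.
        signals = [true_value + rng.gauss(0.0, noise) for _ in range(n_bidders)]
        # A bidder who naively bids their signal wins when it is the highest one.
        total_winning_signal += max(signals)
    return total_winning_signal / n_rounds

avg_winning_signal = simulate_common_value_auction()
print(f"true value: 100.0, average winning signal: {avg_winning_signal:.1f}")
```

Each individual signal is unbiased, yet the winning signal is on average above the true value, because winning selects the most optimistic guess.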

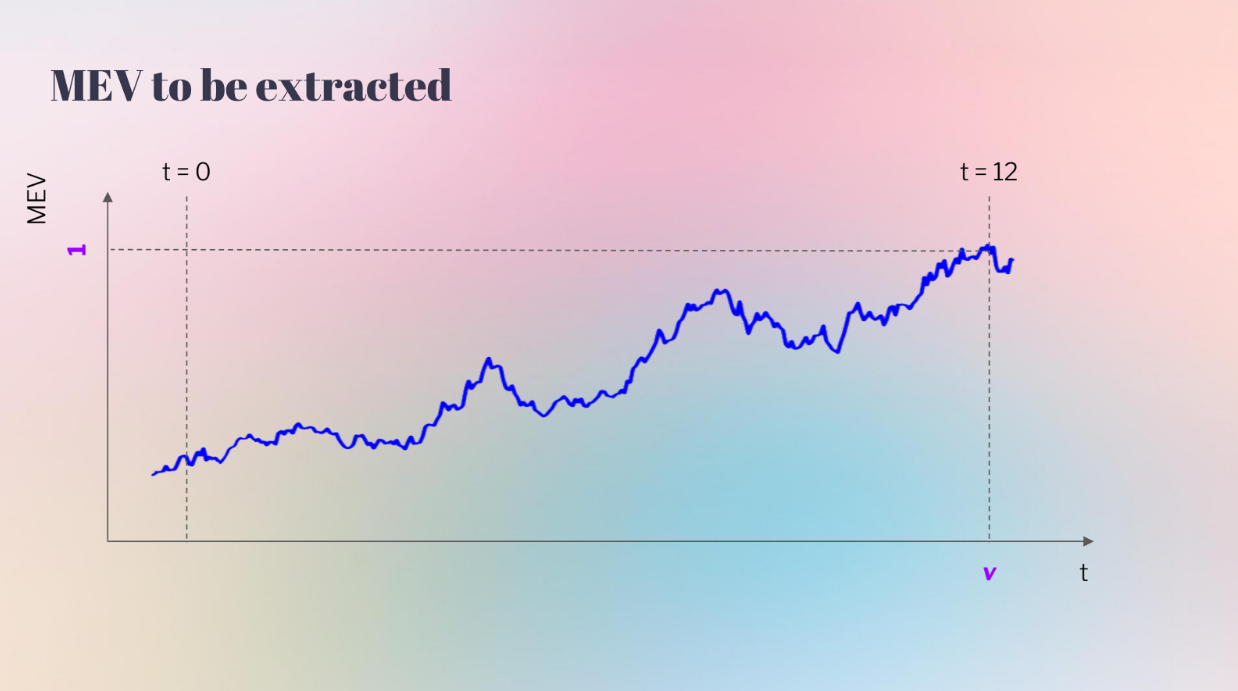

But this model doesn't include bidder latency. A more realistic model shows MEV value changing throughout the slot time :

- At the start (t0) the MEV value is uncertain

- By the end (t12) the actual value is known

- Faster bidders can wait longer to bid, closer to the real value

So bidder 2 who can bid later (lower latency) has an advantage over bidder 1 who must bid earlier with more uncertainty. This results in higher profits for lower latency bidders.

Stochastic Price Process (5:00)

The MEV value changes randomly over time, like a stochastic process. It can increase due to more transactions coming in, and can decrease due to external factors like changing prices

This is different from a classic auction model where the auctioned value is fixed and bidders get signals conditioned on that fixed value.

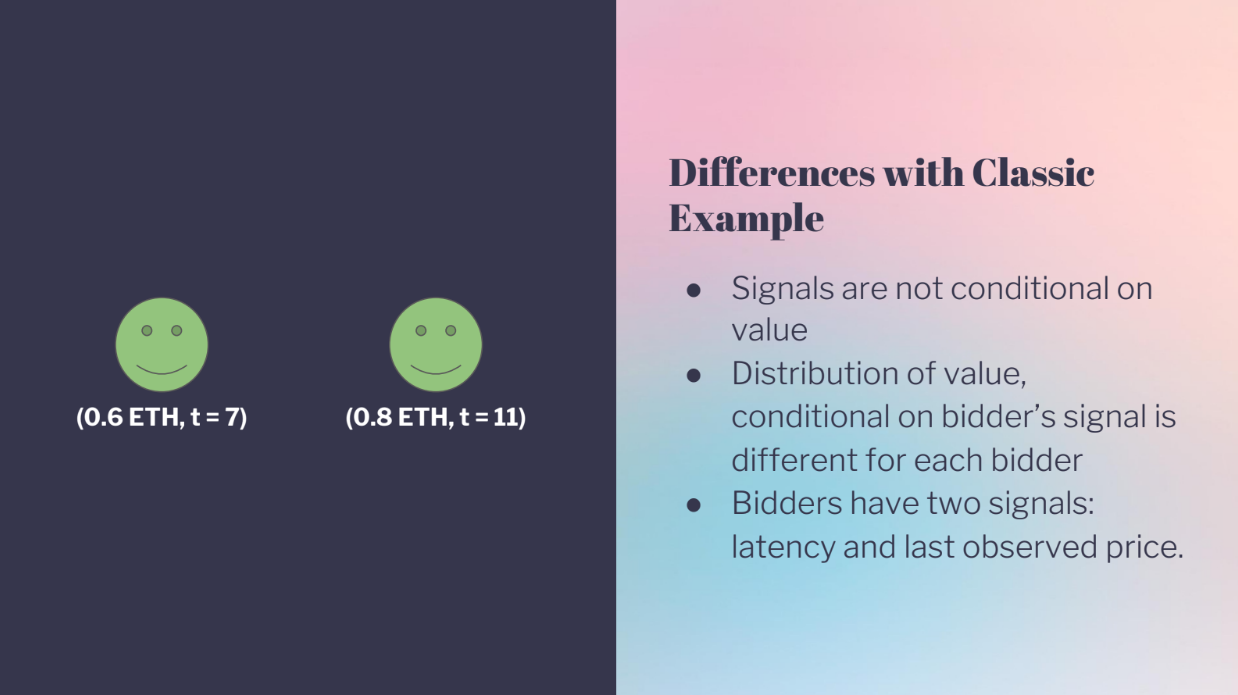

Differences with classic example (5:30)

- Signals are not conditional on value

- The distribution of the value depends on each bidder's signal. Lower latency bidders have more confidence in their signals, while higher latency bidders have wider confidence intervals

- Bidders get two signals : The last observed MEV price, and their own latency

Latency (6:25)

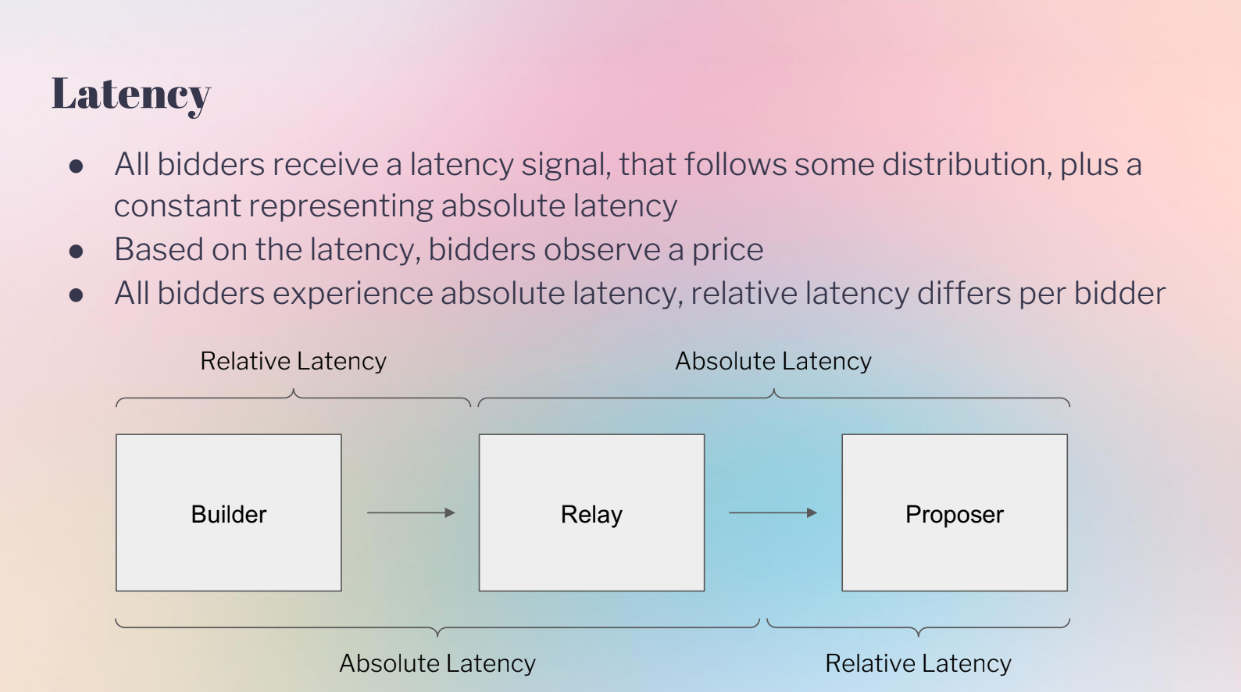

The model considers two types of latency :

- Absolute latency : Affects all bidders equally, like network speed.

- Relative latency : Advantages some bidders over others, like colocation.

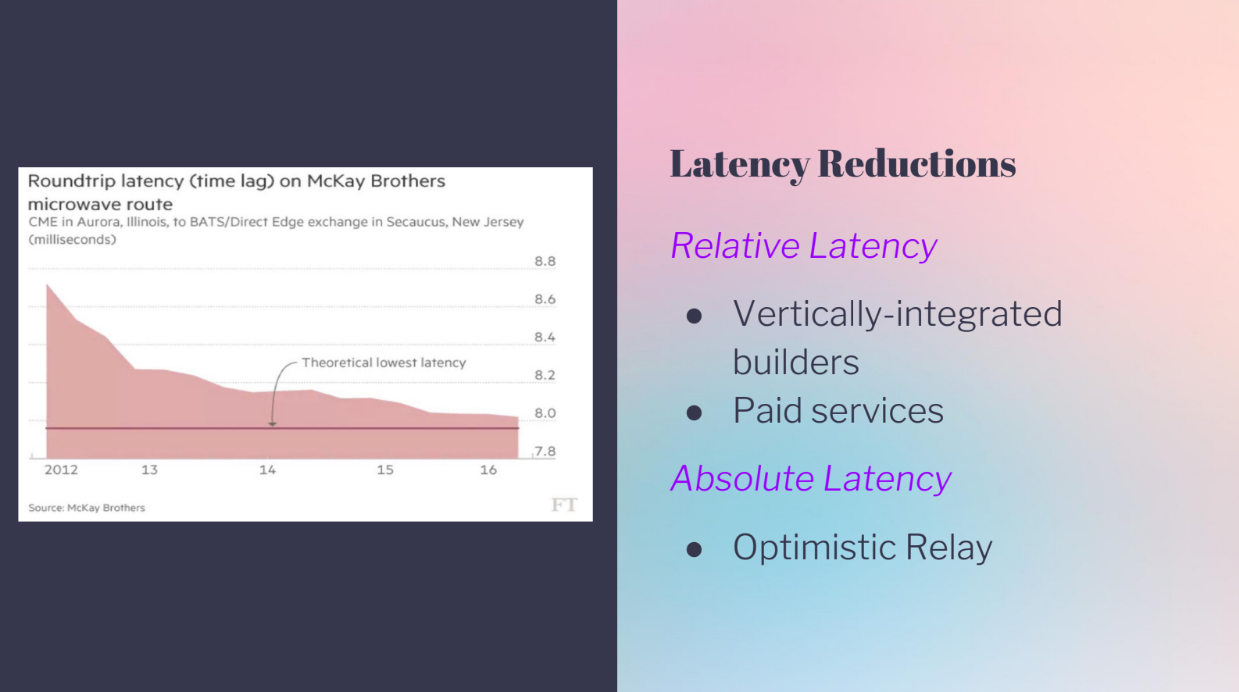

What we're seeing now is a lot of changes in latency :

- A relay network has more absolute latency.

- Integrated builders have relative latency advantages.

- Optimistic relay improves absolute latency for everyone.

In Ethereum PBS, even the absolute minimum latency could be improved a lot by better systems, and many latency reductions recently help some bidders over others. So modeling both absolute and relative latency is important to understand bidder advantages.

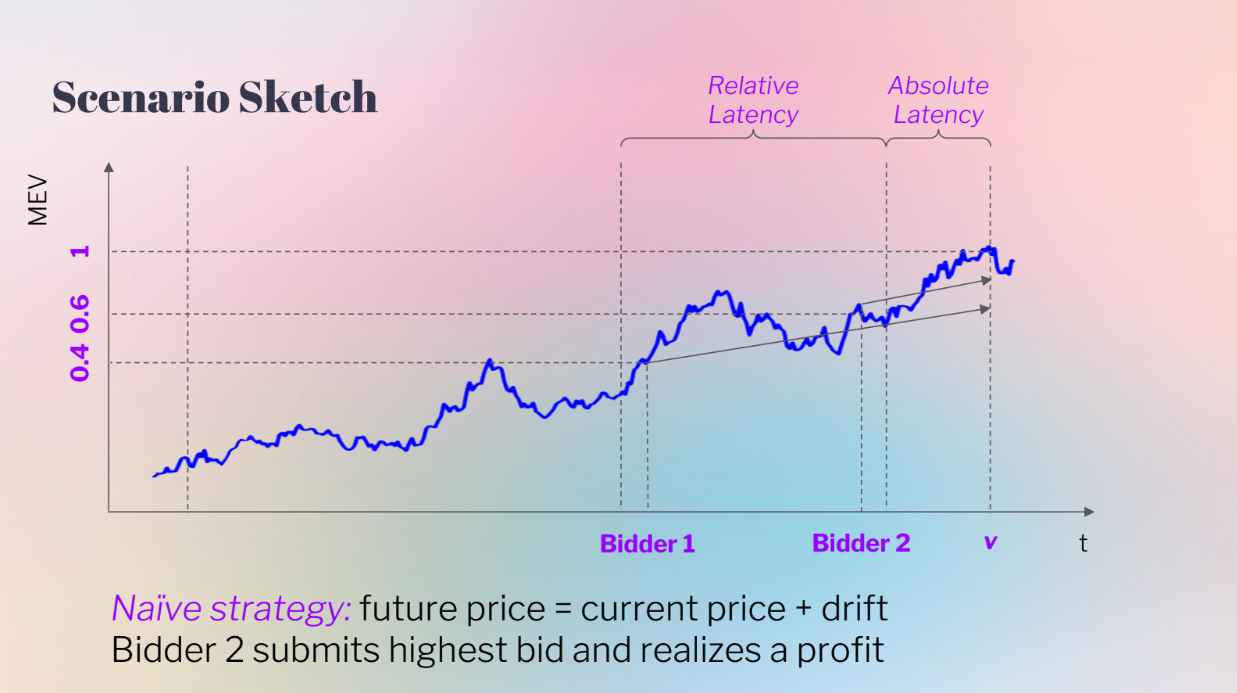

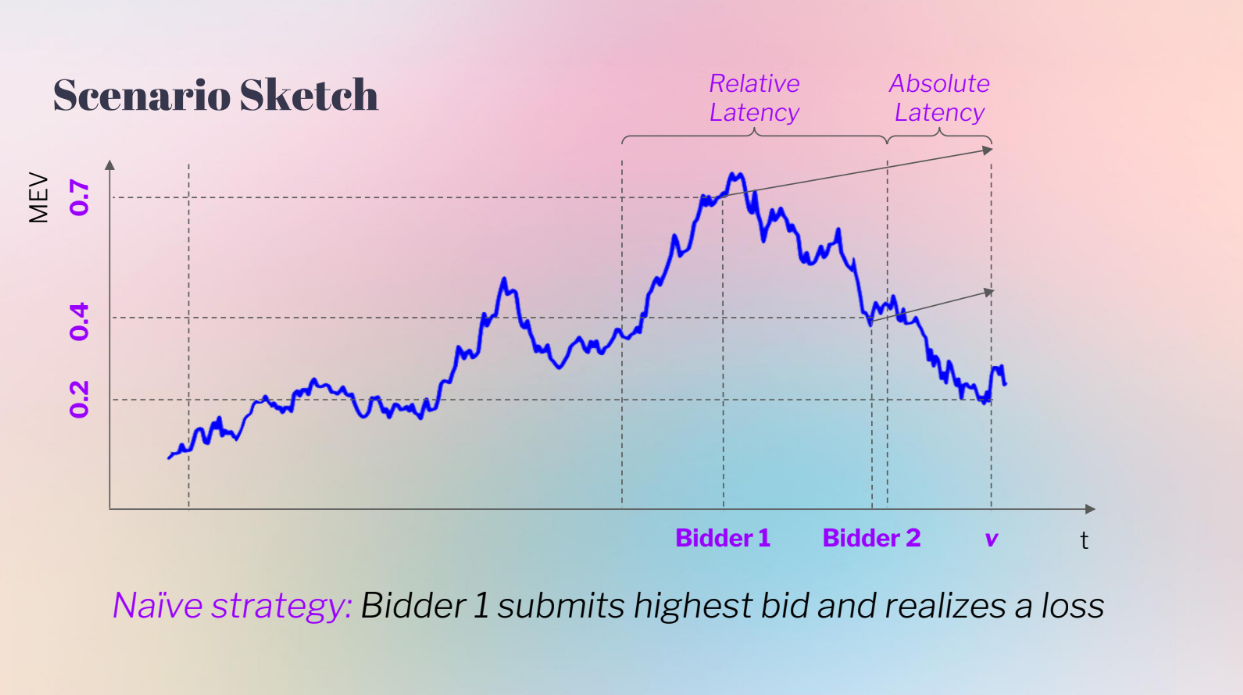

Bidder behavior, with absolute and relative latency (8:15)

The model considers absolute and relative latency :

- Absolute latency : Affects all bidders equally at the end.

- Relative latency : Earlier advantage for Bidder 2.

- The bidders use a naive bidding strategy : they observe the current MEV price, and bid = current price + expected change

In the left case, Bidder 2 sees a higher current price, so Bidder 2 bids higher and wins

In the right case, Bidder 1 sees a much higher current price. Bidder 1 bids very high based on his expectation but the actual value ends up much lower, so Bidder 2's latency advantage avoids overbidding.

In other words, seeing more recent information let Bidder 2 bid lower and avoid the loss that Bidder 1's overbid incurs

Can bidders do better ? (10:30)

Bidder 1 can improve their strategy by accounting for the "winner's curse" :

The winner's curse refers to the situation in auctions where winning the bid means you likely overpaid, because your information was incomplete relative to more pessimistic losing bidders. Winners need to account for this in their bidding strategy

- If you win, it means other bidders valued the item less, possibly because they had better information.

- So winning means your estimate was likely too high.

- Bidder 1 can adjust their bid down to account for this.
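The naive strategy and the winner's curse adjustment can be sketched with a small Monte Carlo simulation. Everything here is an assumption made for illustration, not the speaker's model: the MEV value is a zero-drift Gaussian random walk over 12 steps, Bidder 1 must bid at step `t1`, Bidder 2 can wait until step `t2`, and the auction is first-price. The `shade` parameter is a hypothetical amount Bidder 1 subtracts from the naive bid.

```python
import random

def simulate(shade=0.0, t1=4, t2=10, slot=12, step_sd=1.0,
             n_rounds=50_000, seed=1):
    """Average profit per auction for bidder 1 (early) and bidder 2 (late)."""
    rng = random.Random(seed)
    profit1 = profit2 = 0.0
    for _ in range(n_rounds):
        # MEV value as a zero-drift random walk over the slot, starting at 0.
        value, path = 0.0, [0.0]
        for _ in range(slot):
            value += rng.gauss(0.0, step_sd)
            path.append(value)
        # Naive bid = last observed value + expected change (zero drift here).
        bid1 = path[t1] - shade   # bidder 1 must bid early, optionally shaded
        bid2 = path[t2]           # bidder 2 can wait until later in the slot
        final_value = path[slot]
        # First-price auction: the highest bid wins and pays its bid.
        if bid1 > bid2:
            profit1 += final_value - bid1
        else:
            profit2 += final_value - bid2
    return profit1 / n_rounds, profit2 / n_rounds

naive1, naive2 = simulate(shade=0.0)
shaded1, shaded2 = simulate(shade=2.0)
print(f"naive : bidder 1 {naive1:+.3f}, bidder 2 {naive2:+.3f}")
print(f"shaded: bidder 1 {shaded1:+.3f}, bidder 2 {shaded2:+.3f}")
```

In this toy setup the early bidder only wins when the price fell after their bid, so their average profit is negative while the late bidder roughly breaks even. Shading the bid reduces Bidder 1's losses but does not turn them into a profit here.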

Implications for PBS (11:15)

More strategic bidding may be necessary in PBS auctions. Lower relative latency reduces these adverse selection costs, and lower absolute latency also reduces the costs.

Application to Order Flow Auctions (12:45)

Bidders compete for arbitrage opportunities over time, and they still face the winner's curse based on their latency.

Decentralized exchanges pay part of revenue to bidders to account for their adverse selection costs.

So modeling the winner's curse and latency advantages is important for understanding bidder strategies and revenues in MEV auctions.

Conclusion (13:30)

In PBS auctions, latency advantages affect bidder strategies and revenues like a double-edged sword : It helps you win bids, but winning with high latency means you likely overbid

Bidders need to balance latency optimizations with strategic bidding to account for the winner's curse. Furthermore, fair access to latency improvements can benefit the overall PBS auction.

Q&A

Besides arbitrage, what other external factors cause MEV bids to decrease ? (15:00)

For now arbitrage is the biggest factor. In the future, other types of MEV may become more important as PBS mechanisms evolve

How does the sealed bid bundle merge differ from the open PBS auction in terms of winner's curse implications ? (15:45)

The model looks at bidder valuations regardless of open or sealed bids. In both cases bidders need to account for the risk of others bidding after them.

Can you explain more about how lower latency leads to adverse selection ? (17:00)

If you bid early (high latency) and win, it means a later bidder saw info that made them bid lower. So winning means you likely overbid due to having less info.

The later bidder's advantage leads to adverse selection costs for the higher latency winner.

Intro (0:00)

Traditionally, censorship resistance meant that valid transactions eventually get added to the blockchain. But for some transactions like those from oracles or arbitrage traders, just eventual inclusion is not good enough - they need to get on chain quickly to be useful

Mallesh argues we need a new definition of censorship resistance : one that guarantees timely inclusion of transactions, not just eventual inclusion. This would allow new mechanisms like on-chain auctions. It could also help rollups and improve blockchain protocols overall.

Vitalik already talked about it in 2015, but Mallesh is advocating for the community to revisit it now, as timely transaction inclusion unlocks new capabilities.

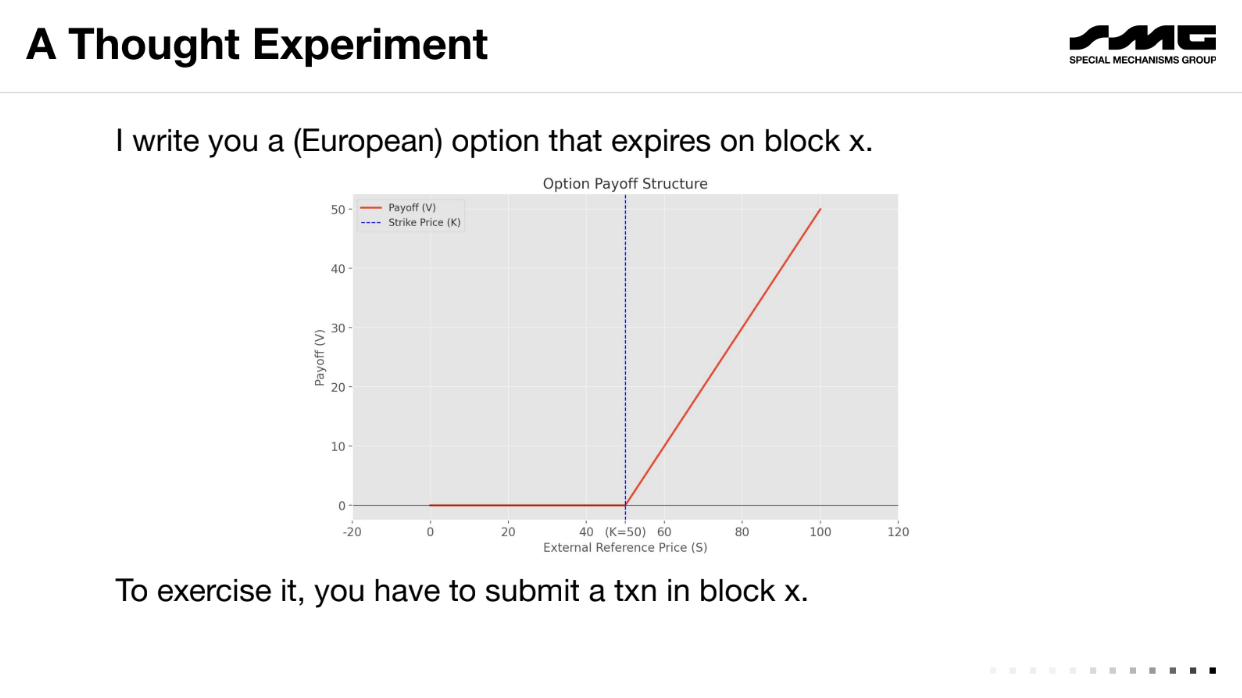

A thought experiment (2:30)

Let's take the example of a "European option", a financial contract where a user agrees to pay the difference between the market price of an asset at a future date (block X) and a reference price (say $50), if the market price is higher

On block X, if the price is $60, the user owes the holder $10 (the difference between price at block X and reference price). The holder would be willing to pay up to $10 to get the transaction included on the blockchain to exercise the option. But the user also has incentive to pay up to $10 to censor the transaction and avoid paying out.

On the expiration date, there is an incentive for both parties to pay high fees to either execute or censor the transaction. This creates an implicit auction for block space where the transaction will end up going to whoever is willing to pay more in fees.
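The arithmetic of the thought experiment can be written out as a short sketch. The function and variable names are hypothetical; the numbers are the ones from the example above.

```python
def option_payoff(market_price, reference_price):
    """Amount the user owes the holder at block X (never negative)."""
    return max(0.0, market_price - reference_price)

payoff = option_payoff(market_price=60.0, reference_price=50.0)
holder_max_fee = payoff    # holder gains `payoff` if the exercise is included
user_max_bribe = payoff    # user saves `payoff` if the exercise is censored
print(payoff, holder_max_fee, user_max_bribe)
```

Both sides value the outcome at the same amount, which is why the block space ends up being auctioned implicitly between them.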

The dark side of PBS (4:20)

The existing fee auction model enables censorship. By paying high fees, you can block valid transactions without others knowing

This is not just theoretical : Special Mechanisms Group demonstrated the problem some weeks before the presentation by buying a block that contained only their own promotional transaction. With the same power, they could have silently censored others by including some but not all valid transactions

The key problems :

- Options and other time-sensitive DeFi transactions create incentives for participants to engage in blocking/censorship bidding wars. This extracts value rather than creating it.

- The existing fee auction model allows value extraction through silent censorship by omitting transactions without it being visible.

Economic definition of censorship resistance (5:50)

Mallesh introduces the concept of a "public bulletin board" to abstractly model blockchain censorship resistance. This model has two operations :

- Read : Always succeeds, no cost. Lets you read board contents.

- Write : Takes data and a tip "t". Succeeds and costs t, or fails and costs nothing

Censorship resistance is defined as a fee function - the cost for a censor to make a write fail. Higher fees indicate more resistance.

Some examples (7:45)

- Single designated block : Censorship fee is around t. A censor can outbid the transaction tip to block it.

- EIP-1559 : Potentially worse resistance since base fee is burnt. Censor only needs to outbid t-b where b is base fee

The goal is to reach high censorship fees regardless of t, through better blockchain design, like multiple consecutive slots.

Better censorship resistance (9:15)

Having multiple consecutive slots with different producers increases censorship fees.

A censor must pay off each producer to block a transaction, costing k*t for k slots at tip t. But this also increases read latency - you must wait for k blocks.
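The censorship fees for the three designs discussed so far can be compared in a minimal sketch. The function names are hypothetical, and `total_fee` is assumed to be the full amount t the user pays, of which the base fee b is burnt.

```python
def fee_single_block(tip):
    """Censor must outbid the tip offered to the single block producer."""
    return tip

def fee_eip1559(total_fee, base_fee):
    """Only the part of the fee above the burnt base fee reaches the producer."""
    return max(0.0, total_fee - base_fee)

def fee_k_slots(tip, k):
    """Censor must pay off each of the k consecutive producers."""
    return k * tip

t, b, k = 10.0, 7.0, 5
print(fee_single_block(t), fee_eip1559(t, b), fee_k_slots(t, k))
```

With these example numbers, burning the base fee lowers the censorship fee from 10 to 3, while waiting for 5 slots raises it to 50 at the price of a longer read latency.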

On-Chain Auctions (10:45)

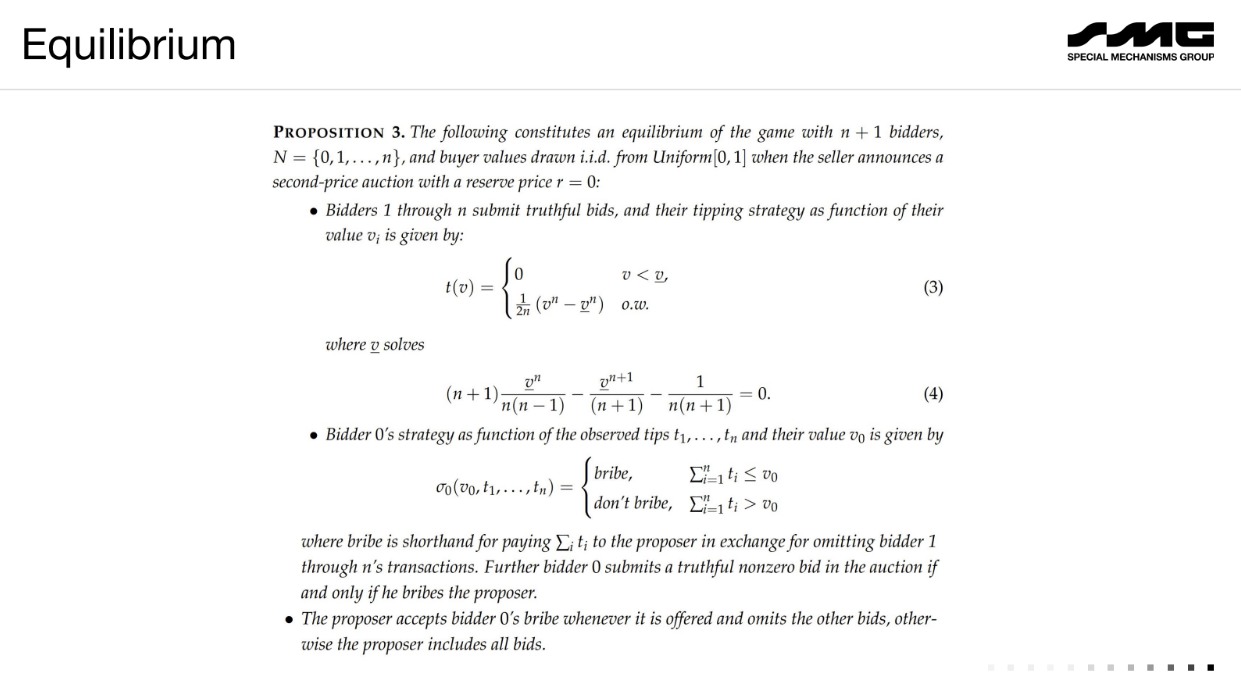

Low censorship resistance limits on-chain mechanisms like auctions. As an example, consider a second-price auction in 1 block with N+1 bidders :

- Bidders 1 to N are honest, submit bids b_i and tips t_i

- Bidder 0 is a censor. It waits for bids, offers the producer p to exclude some bids.

- Producer sees all bids/tips, and censor's offer p. It decides which transactions to include.

- Auction executes on included bids.

A censor can disrupt the auction by blocking bids. This exploits the low censorship resistance of a single block.

Warm up (13:50)

Suppose a second-price auction with just 1 honest bidder and 1 censor bidder :

- The honest bidder bids their value v. Their tip t must be < v, otherwise they overpay even if they win.

- The censor sees t is less than v, so it will censor whenever its value w > t. It pays the producer slightly more than t to exclude the honest bidder's bid.

- Knowing this, the honest bidder chooses t to maximize profit, resulting in a formula relating t to v.
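The honest bidder's trade-off can be explored numerically. This sketch is not the formula from the talk: it assumes the censor's value w is uniform on [0, 1], that the censor censors exactly when w > t, that a censored bidder pays nothing, and that an included bidder pays the second price w plus the tip t. All names are hypothetical.

```python
def expected_utility(tip, value, grid=2000):
    """Honest bidder's expected utility when the censor's value w ~ Uniform[0, 1]."""
    total = 0.0
    for i in range(grid):
        w = (i + 0.5) / grid        # midpoint rule over w in [0, 1]
        if w > tip:
            continue                # censored: bid excluded, no tip paid, utility 0
        # Included: honest bid v beats w (since w <= tip < v) in the second-price auction.
        total += value - w - tip    # pays the second price w plus the tip
    return total / grid

def best_tip(value, steps=1000):
    """Grid search for the utility-maximizing tip in [0, value)."""
    candidates = [value * j / steps for j in range(steps)]
    return max(candidates, key=lambda t: expected_utility(t, value))

v = 0.9
t_star = best_tip(v)
print(f"value {v}, best tip ~ {t_star:.3f}")
```

Under these assumptions the best tip lands near v/3: tipping more buys a higher chance of inclusion, but every unit of tip is paid whenever the bid gets through.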

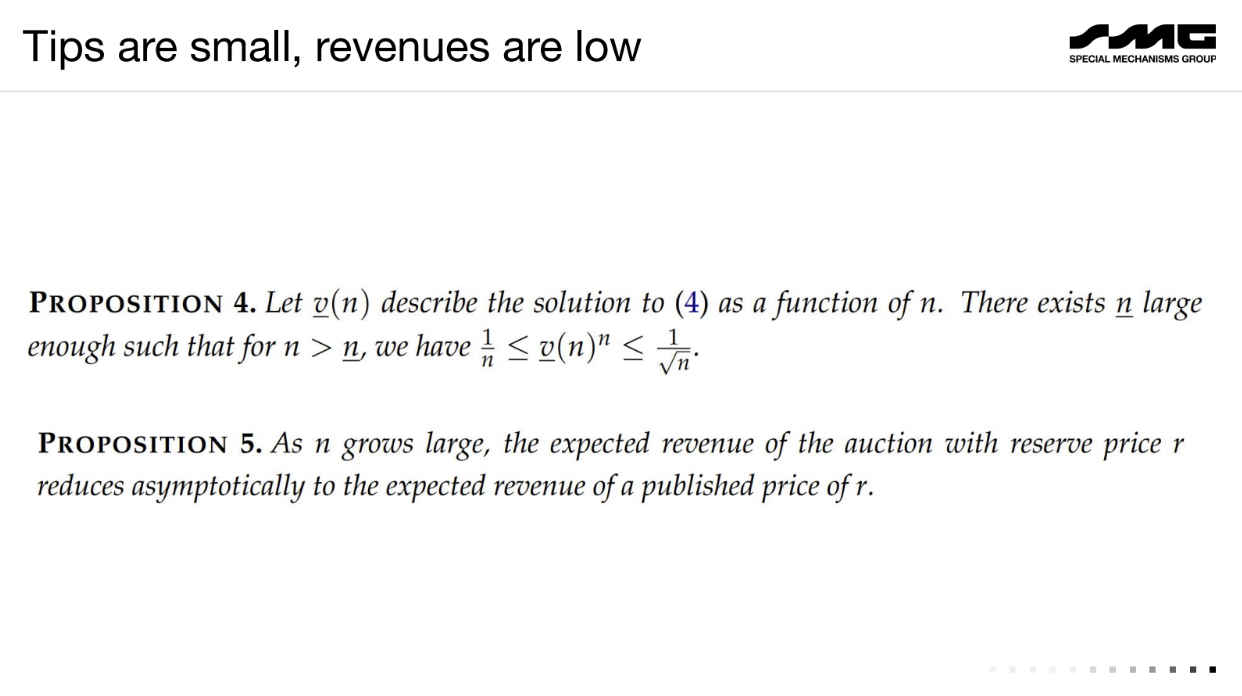

With N+1 bidders :

Honest bidders bid their value but tip low due to censorship risk. The censor often finds it profitable to exclude all other bids and win for free.

As a result, the auction revenue collapses - the censor gets the item cheaply. If there are multiple censors, the block producer extracts most of the value.

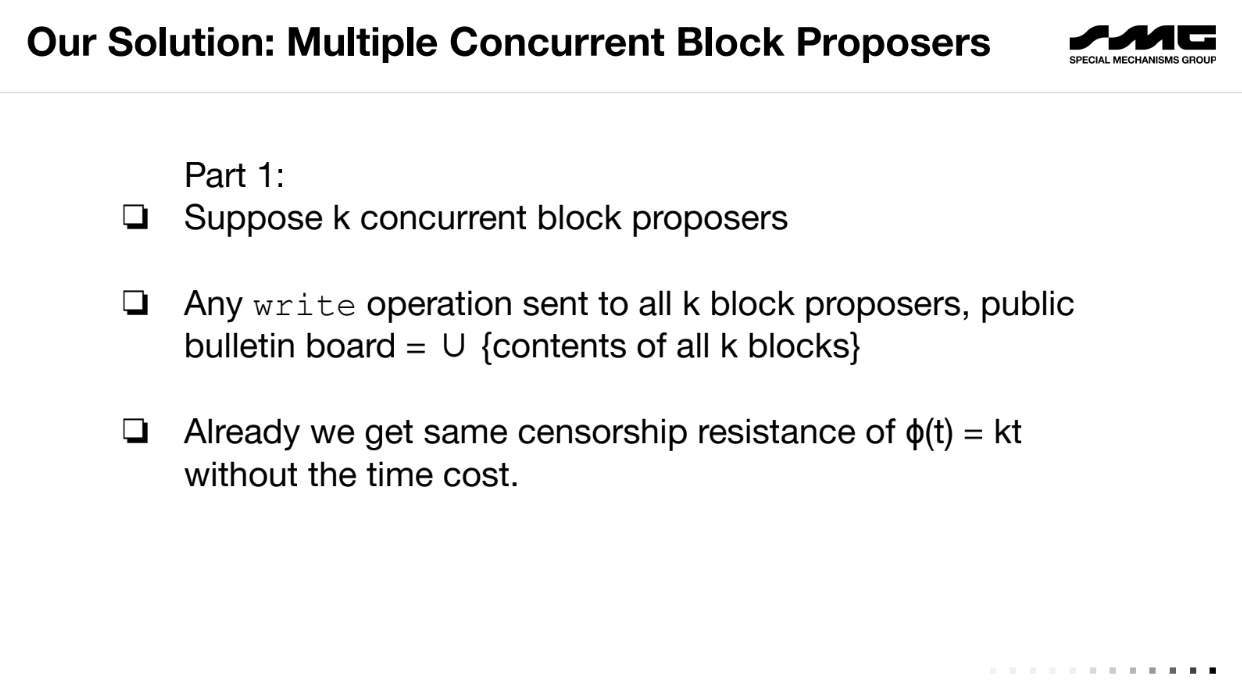

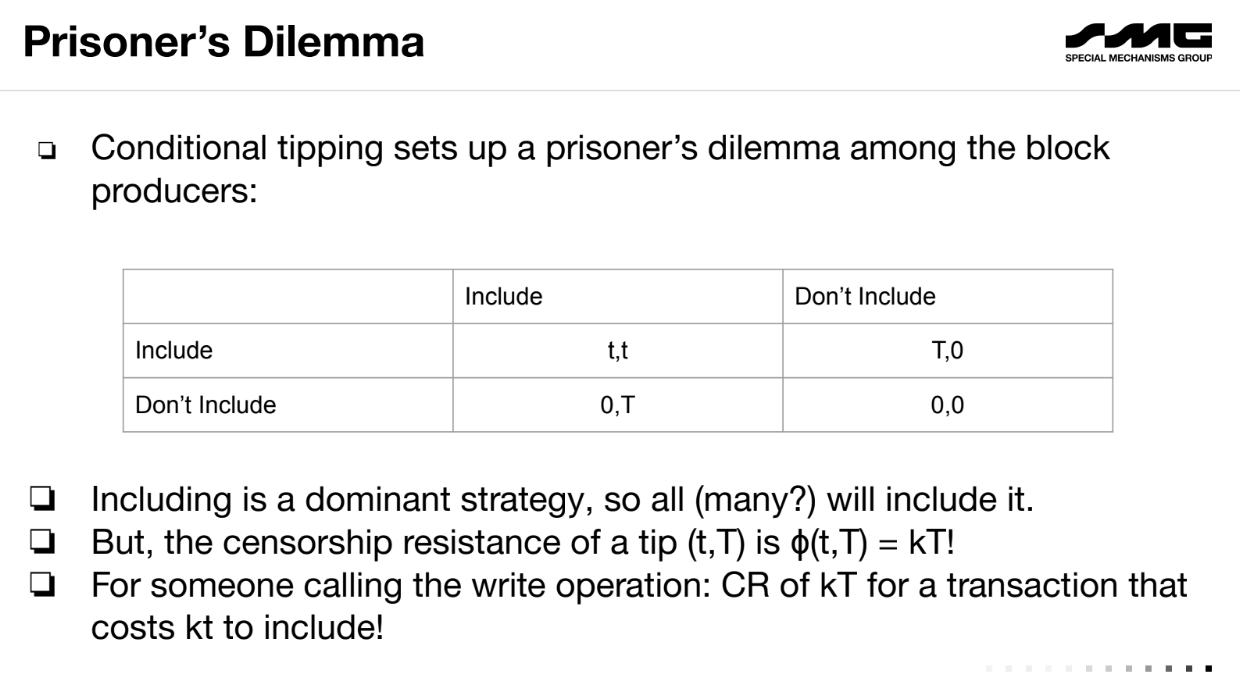

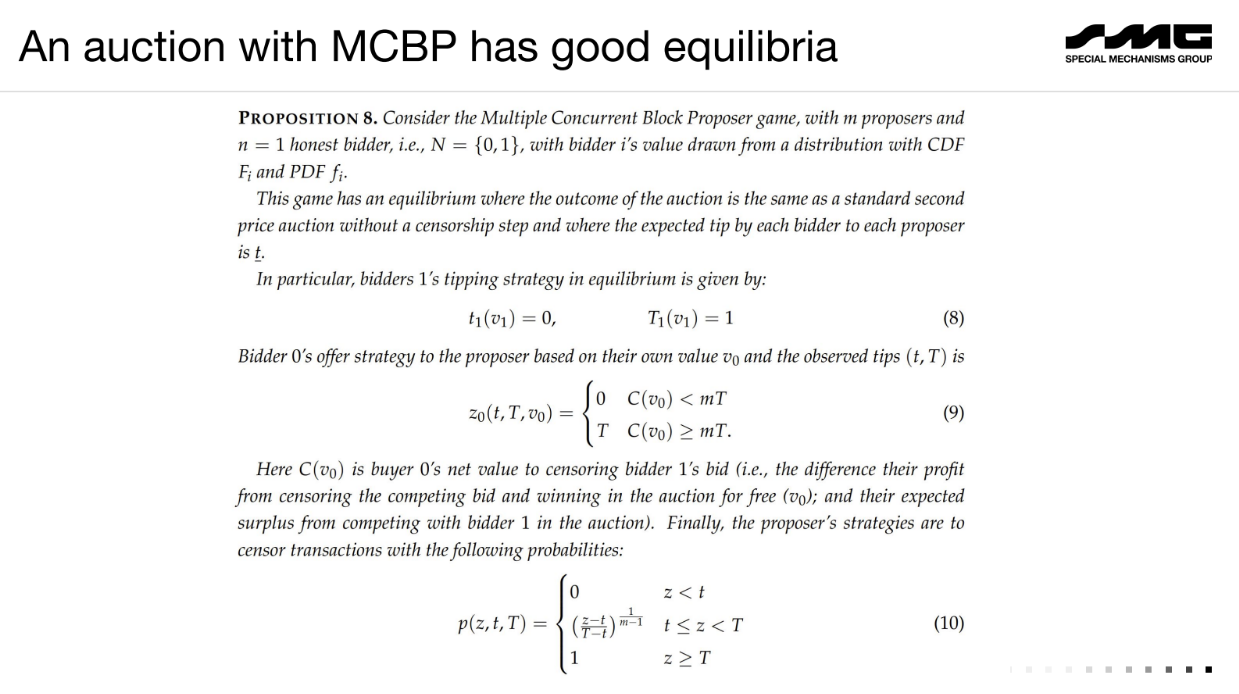

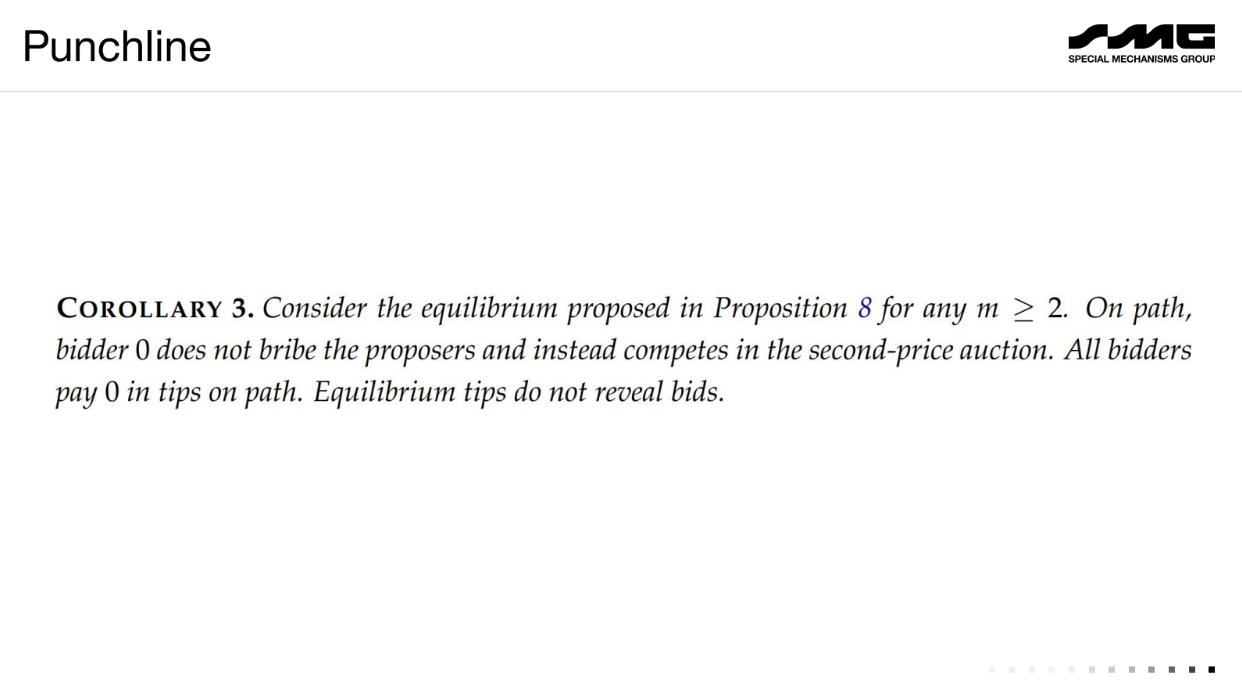

Proposed solution : Multiple Concurrent Block Proposers (18:00)

Mallesh proposes a solution to the blockchain censorship problem using multiple concurrent block producers rather than one monopolist producer.

Have k block producers who can all submit blocks concurrently. The blockchain contains the union of all blocks.

Tips are conditional : small tip t if included by multiple producers, large tip T if only included by one.

This creates a "Prisoner's Dilemma" for producers - including is a dominant strategy to get more tips.

Cost of inclusion is low (kt) but censorship resistance is high (kT) with the right t and T. Hence, a censor finds it too expensive to bribe all producers. Honest bidders can participate fairly.
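The incentive structure of conditional tips can be checked with a small sketch. It assumes a simplified rule that is only an illustration: a producer who includes the transaction earns T if no other producer includes it and t otherwise, and an excluding producer earns nothing unless bribed. Function names and the example values are hypothetical.

```python
from itertools import product

def producer_payoff(includes, others_including, t, T):
    """Tip earned by one producer given how many *other* producers include."""
    if not includes:
        return 0.0
    return T if others_including == 0 else t

def inclusion_is_dominant(k, t, T):
    """Check that including beats excluding for every choice of the other k-1 producers."""
    for others in product([False, True], repeat=k - 1):
        n = sum(others)
        if producer_payoff(True, n, t, T) <= producer_payoff(False, n, t, T):
            return False
    return True

def user_cost(k, t):
    """All k producers include, so each earns the small tip t."""
    return k * t

def censorship_cost(k, T):
    """Each producer gives up T by excluding when all the others exclude too."""
    return k * T

k, t, T = 4, 0.01, 1.0
print(inclusion_is_dominant(k, t, T), user_cost(k, t), censorship_cost(k, T))
```

With these numbers the user pays 0.04 in total while a censor would need to pay 4.0, which is the gap between kt and kT described above.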

Concluding thoughts (20:20)

Benefits :

- Removes producer monopoly power over censorship.

- Lowers tipping requirements for inclusion.

- Enables more on-chain mechanisms like auctions resistant to censorship attacks.

But the community needs to think about which of these notions to adopt/prioritize/optimize for.

Q&A

What if the multiple block producers collude off-chain to only have 1 include a transaction, and share the large tip ? (22:00)

This approach assumes producers follow dominant strategies, not collusion. Collusion is an issue even with single producers. Preventing collusion is challenging.

To do conditional tips, you need to see other proposals on-chain. Doesn't this create a censorship problem for that data ? (22:45)

Tips can be processed in the next block using the info from all proposal streams in the previous round. The proposals would be common knowledge on-chain.

Having multiple proposals loses transaction ordering efficiency. How do you address this ? (24:30)

This approach is best for order-insensitive mechanisms like auctions. More work is needed on ordering with multiple streams.

Can you really guarantee exercising an option on-chain given bounded blockchain resources ? (26:00)

There will still be baseline congestion limits. But economics change - transaction fees are based on value, not cost of block space. This improves but doesn't fully solve guarantees.

Quintus wants to provide a new perspective on how to think about "MEV" or "maximum extractable value" in blockchain systems like Ethereum.

To understand potential solutions, we first need to understand the core source of the unfairness and inefficiency

This is an Ethereum-centric view, but Quintus believes the points apply to other blockchain systems too.

The issues of MEV (1:10)

- Power asymmetry : Miners/validators earn a lot of money from MEV activities while regular users don't. This is seen by some as unfair.

- Economic inefficiency : Some MEV activities impose extra "taxes" on users, making trading more expensive

- Technical inefficiency : MEV can cause things like failed transactions, extra protocol messages, and uneven validator yields. This leads to technical problems like incentives to reorg and centralization.

- Revenue sharing : Applications want to capture some of the MEV value they create, which relates to the fairness issue.

Quintus wants to focus mostly on power asymmetry and economic inefficiency issues in this talk.

Informational asymmetry

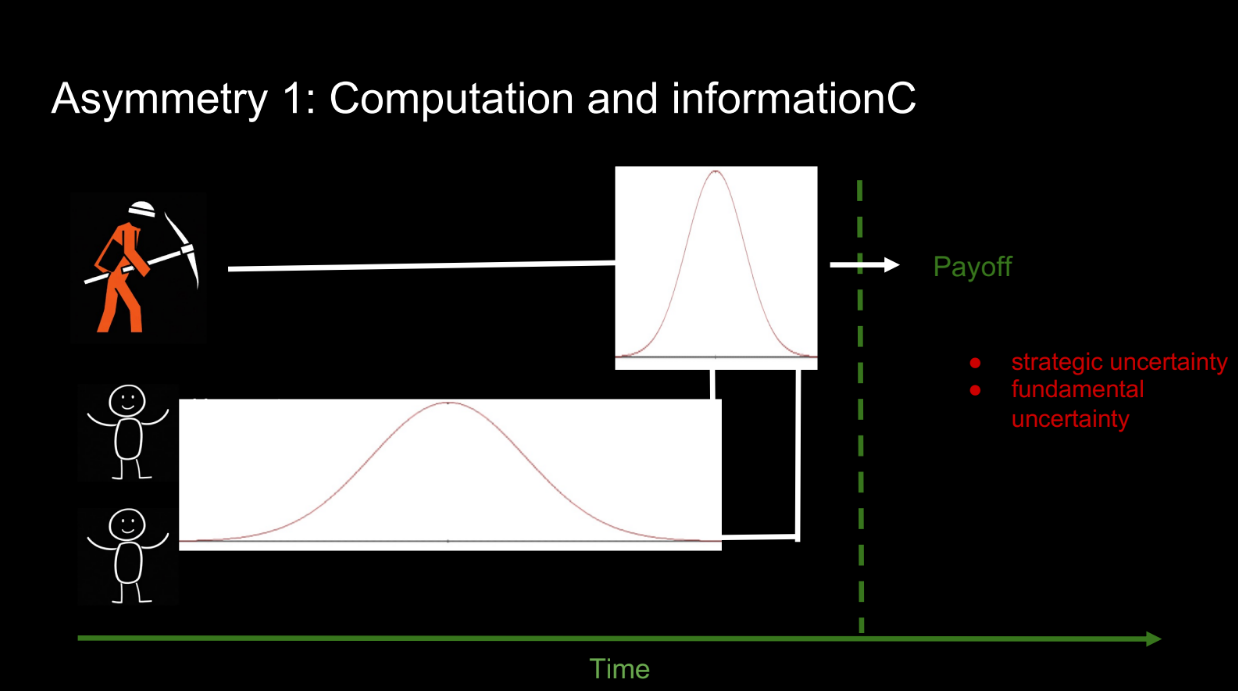

Computation and information (2:30)

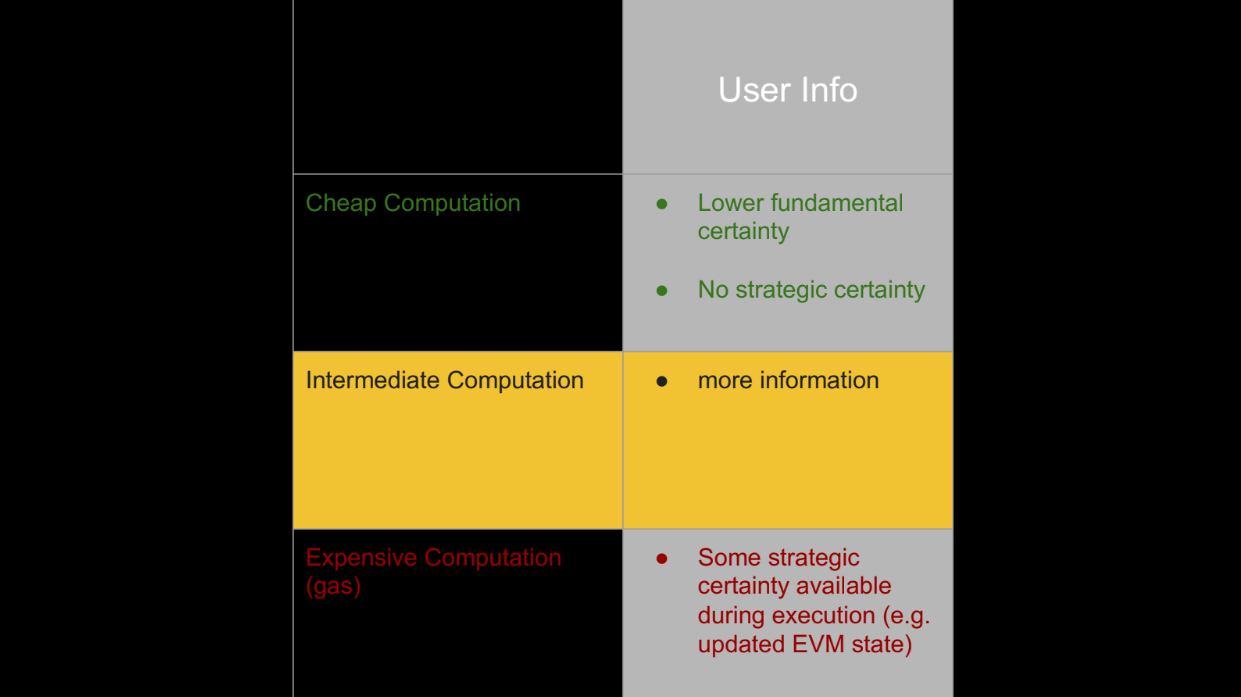

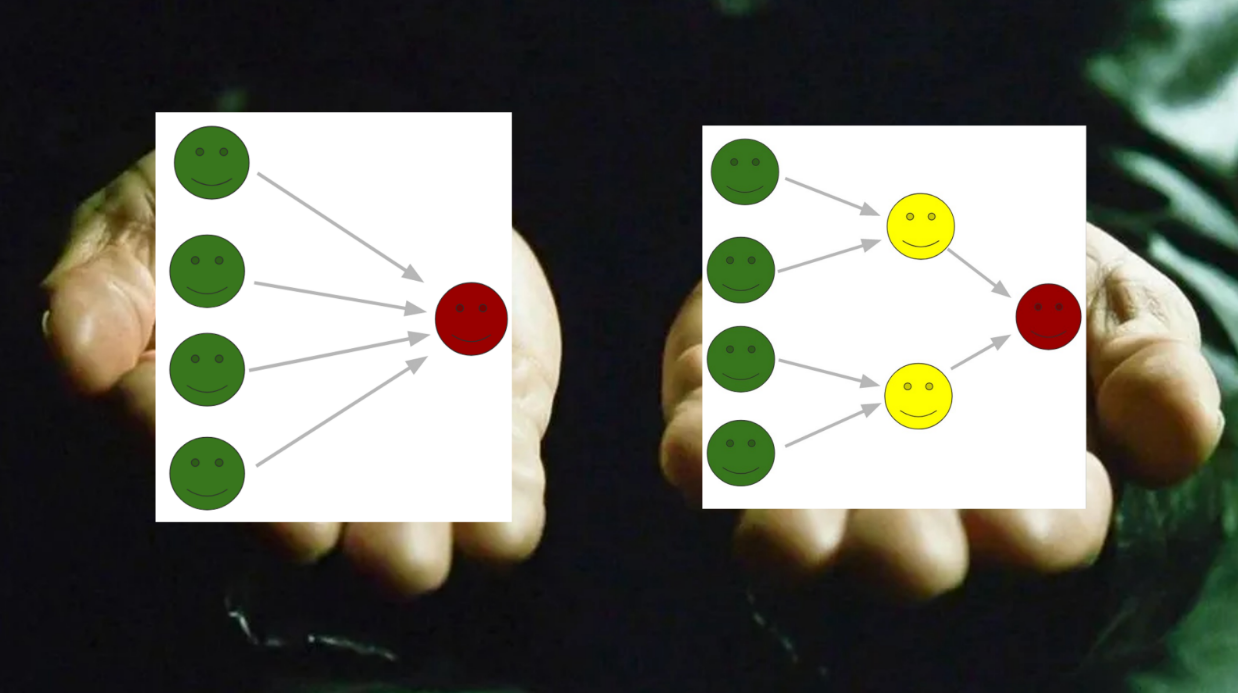

Quintus wants to explain the core issue behind MEV unfairness and inefficiency. Let's use a simple model with 2 actors:

- The miner (stands for any block producer)

- Users

Both the miner and users want to maximize their profits when the next block is produced. Their profits depend on:

- The block contents

- External state (like exchange prices)

There are 2 stages:

- Users submit transactions

- Miner orders transactions and produces the block

This shows an asymmetry:

- Users face lots of uncertainty about other transactions and external state

- The miner has much less uncertainty since they see all transactions and produce the block later

There are two types of uncertainty:

- Strategic - What other people are doing

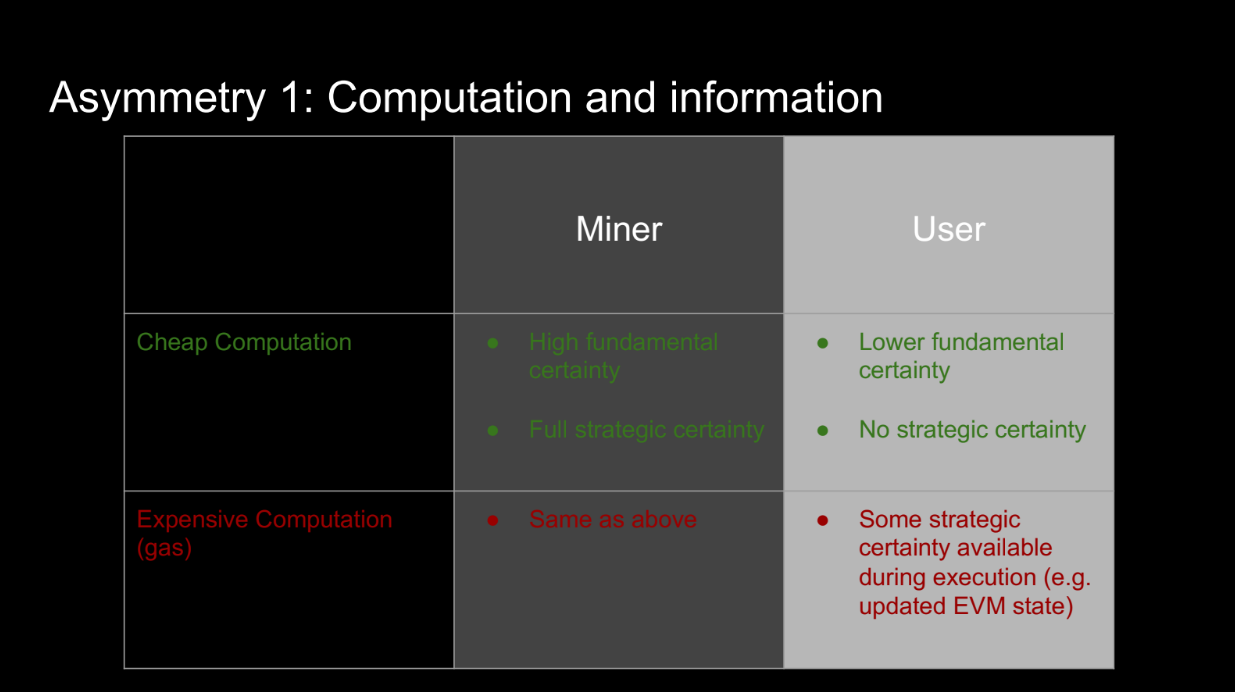

- Fundamental - External state like prices

Users face more of both than the miner. This information asymmetry advantages the miner. Additionally, the miner can do computations locally and cheaply with full information, while users must pay gas for computations with less information

Even though users can do computations on-chain, this is expensive. The miner's ability to do cheap local computations with full information adds to their advantage.

Information asymmetry

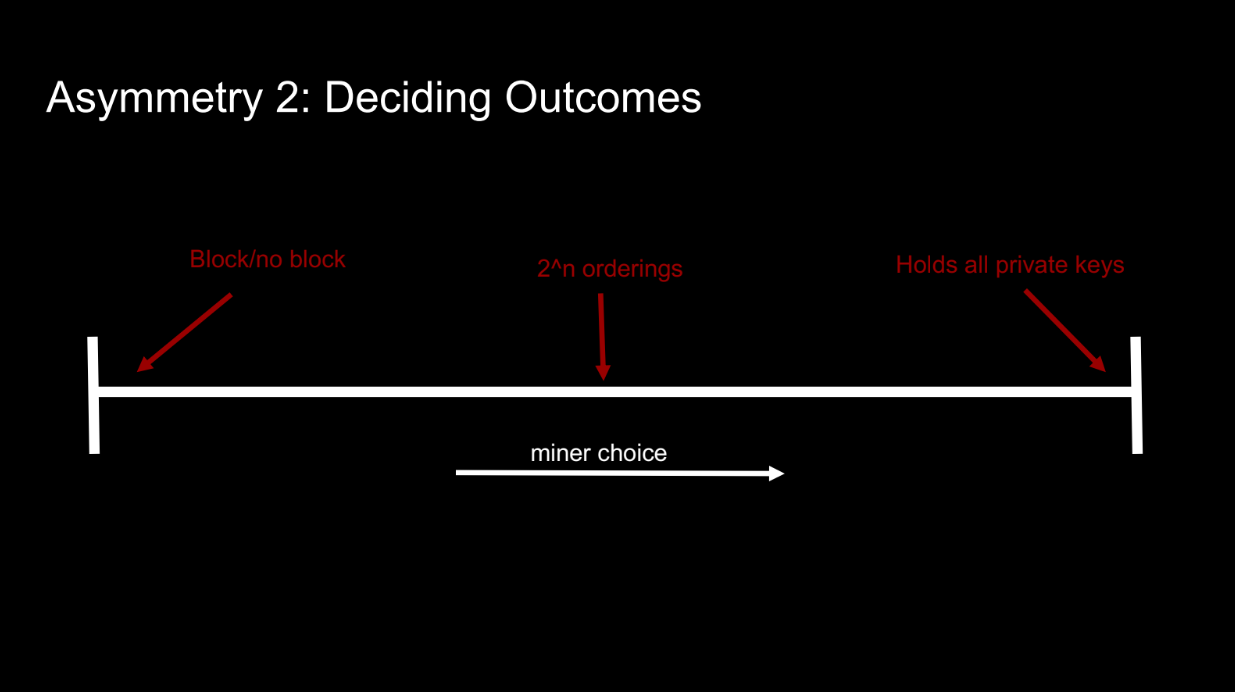

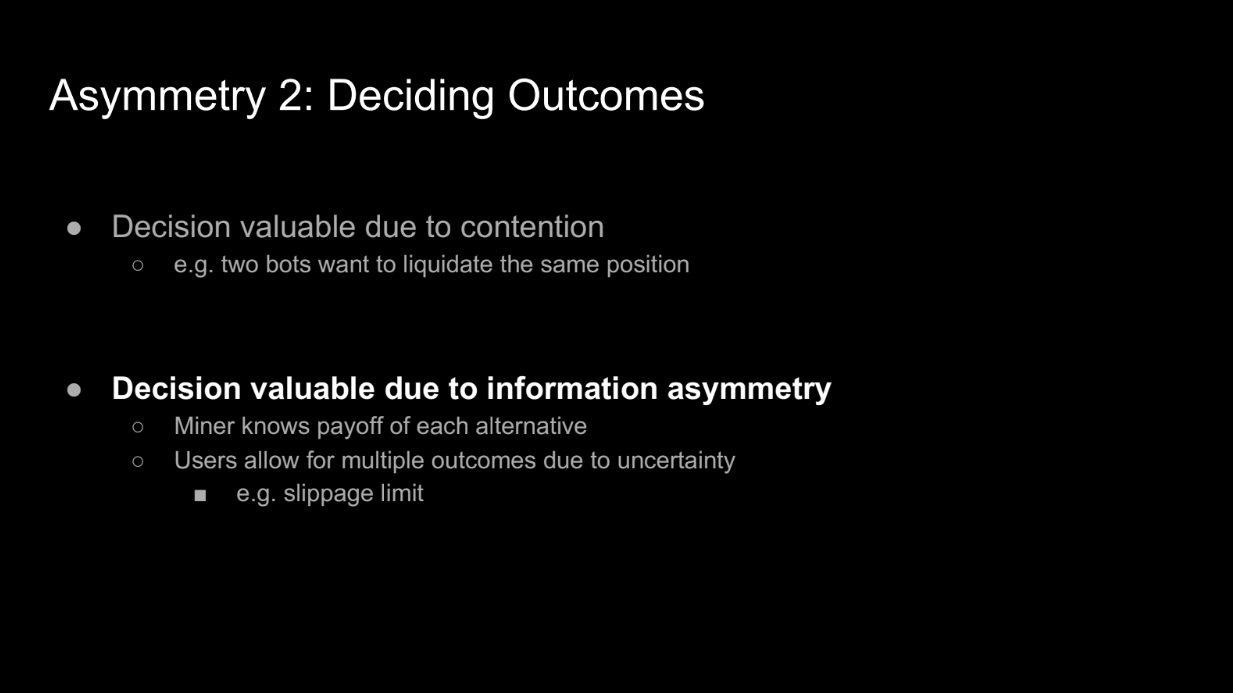

Deciding Outcomes (5:50)

Another asymmetry is the miner's ability to choose between different block outcomes. For example:

- Producing empty VS full blocks

- Ordering transactions differently

- Controlling all private keys (like an exchange)

Two main factors affect the value of the miner's decision making ability:

- Fundamental contention - situations like arbitrage opportunities that users compete for access to. The miner can decide the winner.

- Information asymmetry - The miner knows the value of each outcome. But more importantly, users lack information so they entertain multiple outcomes via things like slippage limits. This gives the miner many options to choose from.

So information asymmetry enables the miner's valuable decision making ability. Without it, there would be fewer options to choose between.

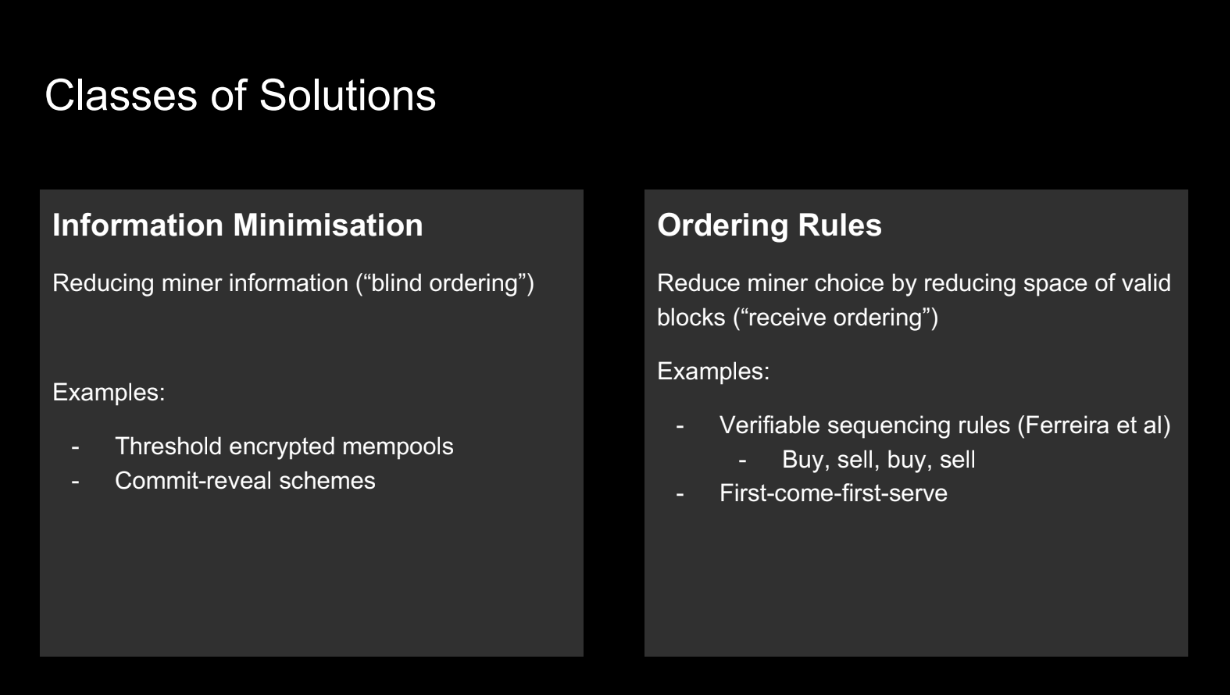

Classes of solution (8:15)

Past solutions to this asymmetry focused on:

- Minimizing miner information - techniques like threshold encryption and commit-reveal schemes.

- Reducing miner choice - ordering rules like first-come-first-serve that limit valid block space.

So the main approaches were minimizing the information miners have and limiting the choices miners can make. This aimed to address the unfair asymmetry.

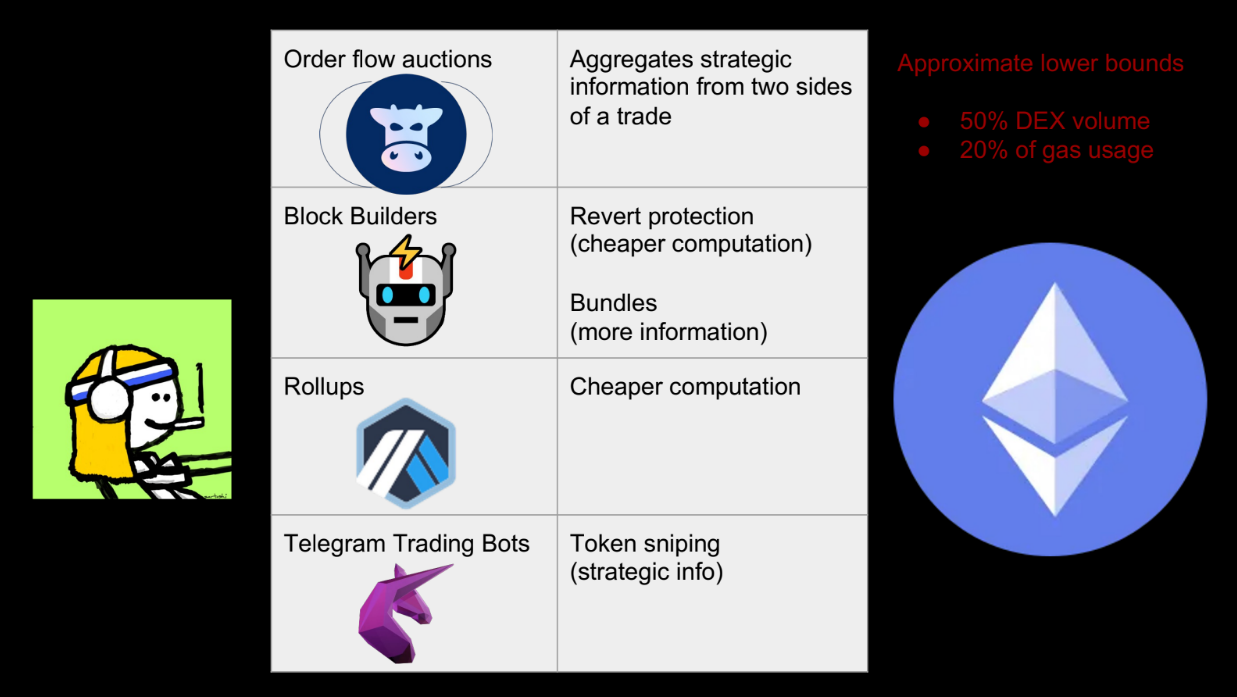

But these past solutions don't reflect what's happening in practice on blockchains like Ethereum. Instead, we see lots of intermediate services emerging that give users more information and computation.

Intermediate computation

So a different approach is needed, improving user capabilities rather than just limiting miners. This allows users and miners to better compete on a level playing field.

Instead of reducing the miner's information/actions, a new approach is emerging : improving users' information and making computation cheaper via "intermediate" services.

Some examples (9:45)

- Order flow auctions (like CowSwap) : aggregate orders off-chain for better pricing

- Blockbuilders : provide revert protection so users can try multiple trades

- Bundles : sequence transactions so later ones can use info from earlier

- Rollups : cheaper way to find optimal trades

- Telegram bots : aggregate user info for trades/snipes

These give users more info and cheaper computation. Over 50% of DEX volume goes through these services

New intermediaries coming (12:40)

The old view of only miners and users directly interacting is limiting. Adding intermediaries to our mental models will allow better reasoning about real-world blockchains.

The key mental shift is accepting that intermediaries are emerging between miners and users. We can't just think of two actors anymore. This better reflects reality and how blockchains operate in practice.

New problems

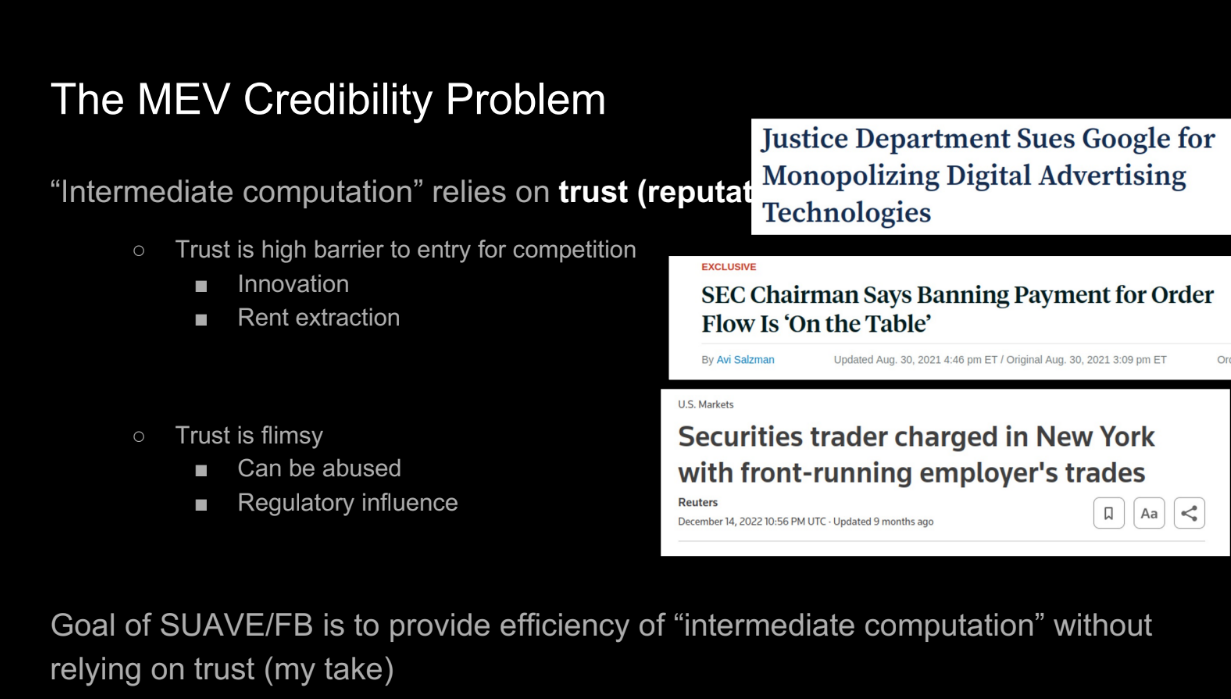

The MEV credibility problem (13:10)

These intermediate services solve some MEV problems, but they rely on trust and reputation, not cryptography. This causes :

- High barrier to entry. New services need to build trust and reputation. Less competition means potential for monopolies, rent extraction, and stagnation.

- Trust is fragile. It can be abused or lost suddenly. We want systems where properties are guaranteed by design, not by actors' decisions.

- Trusted intermediaries can still abuse power or be manipulated. We want "can't be evil" not "don't be evil."

Examples like traditional finance show trusted intermediaries aren't enough to prevent abuse. The goal is efficiency through intermediate services, but with properties guaranteed cryptographically, not via trust. This avoids the issues of reputation, fragility, and centralized power that purely trusted solutions have.

Open questions (16:25)

In summary, there are three key issues to think about going forward:

- The credibility/trust problem : How can we get the benefits of intermediate services without relying on trust and reputation ?

- Studying the asymmetries formally : The speaker discussed information and computation asymmetries loosely. How can we study these formally and rigorously?

- Implications for blockchain design : If we assume intermediaries will emerge, how does this change desired properties of things like ordering policies? Do they still achieve their intended goals?

The speaker hopes this talk inspired people to work on these problems and provided a useful perspective on intermediaries and MEV.

Q&A

When intermediaries compete in PBS auctions, how do we prevent them from pushing all the revenue to the block proposer (validator) by competing against each other ? (18:00)

The speaker clarifies that "all the revenue" going to the proposer provides value accessible to everyone in the stack. If miners built the block directly they could also access that value.

The Cost of MEV

Quantifying Economic (un)fairness in the Decentralized World

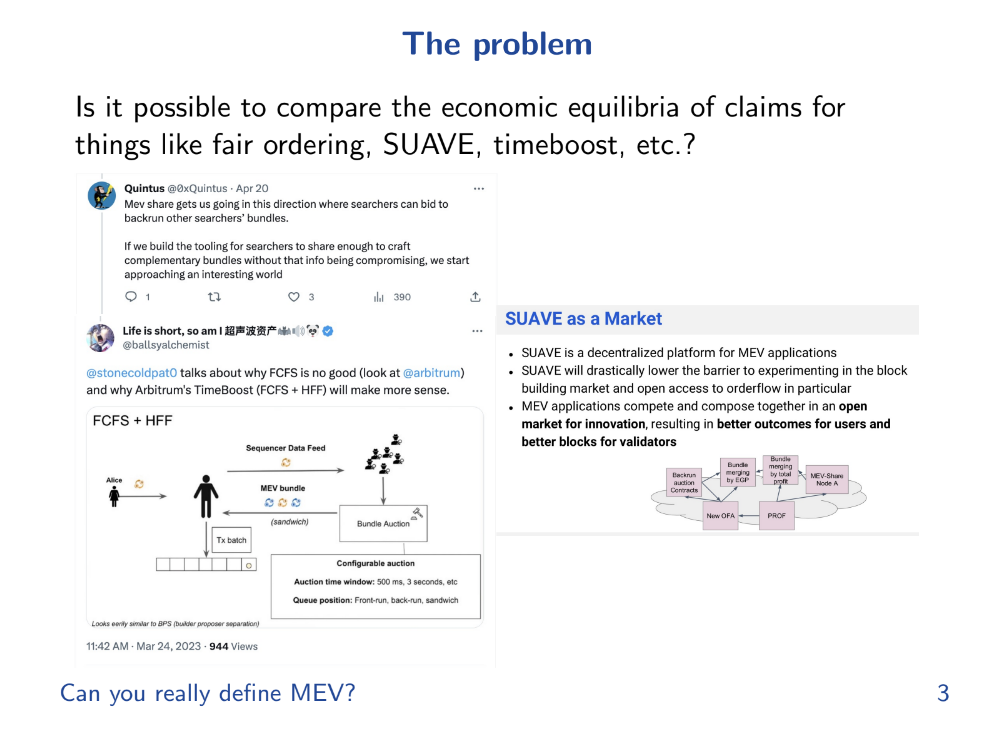

Can you really define MEV ?

There have been many talks discussing qualitative and quantitative aspects of MEV, but this talk will take a more formal approach.

There’s a challenge in defining MEV correctly since many different definitions and formalisms have been proposed, each with its own set of assumptions and outcomes, making it hard to compare them or reach a common understanding.

What do we really want ? (1:40)

Some fair ordering methods (like FCFS - First Come First Serve) haven’t lived up to expectations. Tarun also mentions wanting to explore and compare new concepts like Suave and Time Boost rigorously.

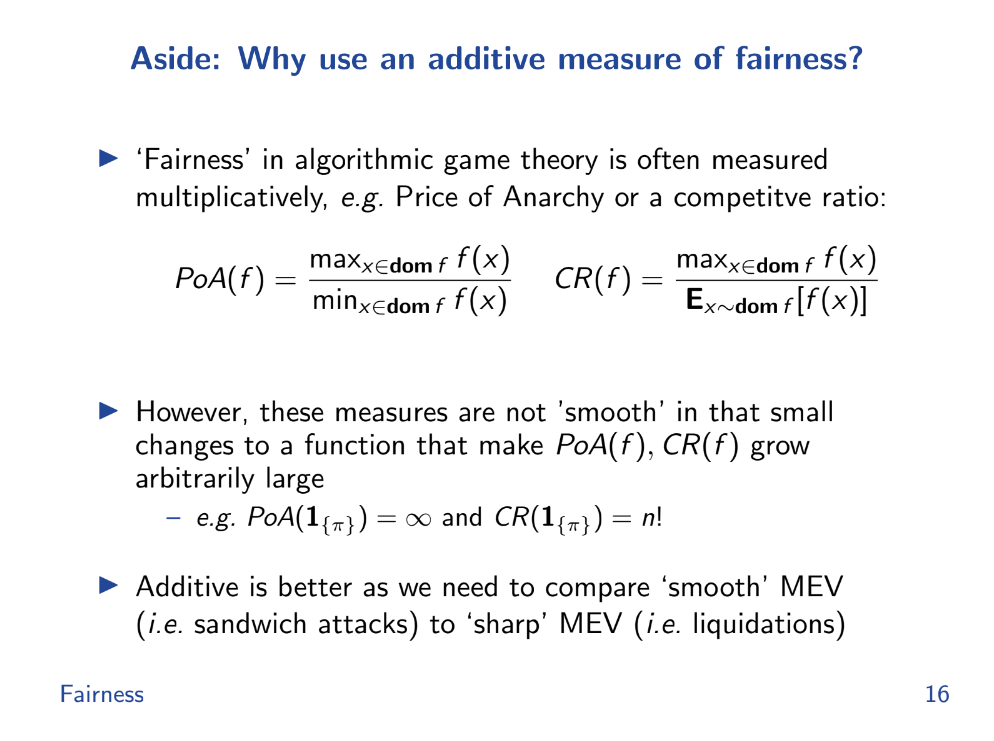

A key question is how much "fairer" one set of orderings is compared to another in terms of the payments made to validators. We define "fair" as meaning the worst case validator payment is not too far from the average case.

The talk aims to explore fairness among different ordering mechanisms, especially in terms of payments to validators

What is MEV (formally) ? (2:50)

MEV is any excess value that a validator can extract by adding, removing or reordering transactions.

To outline a formal mathematical framework for defining and analyzing MEV concretely, 4 things are needed :

- A set of possible transactions named T

- A payoff function f that assigns a value to a subset of the transactions in T and an ordering π. This represents the payoff to validators.

- Analyzing how f changes over T and π to define "excess" MEV.

- Ways to add, remove, or reorder transactions that validators can exploit.

T could be extremely large, making analysis difficult. But for specific use cases, T may be restricted to make analysis feasible.

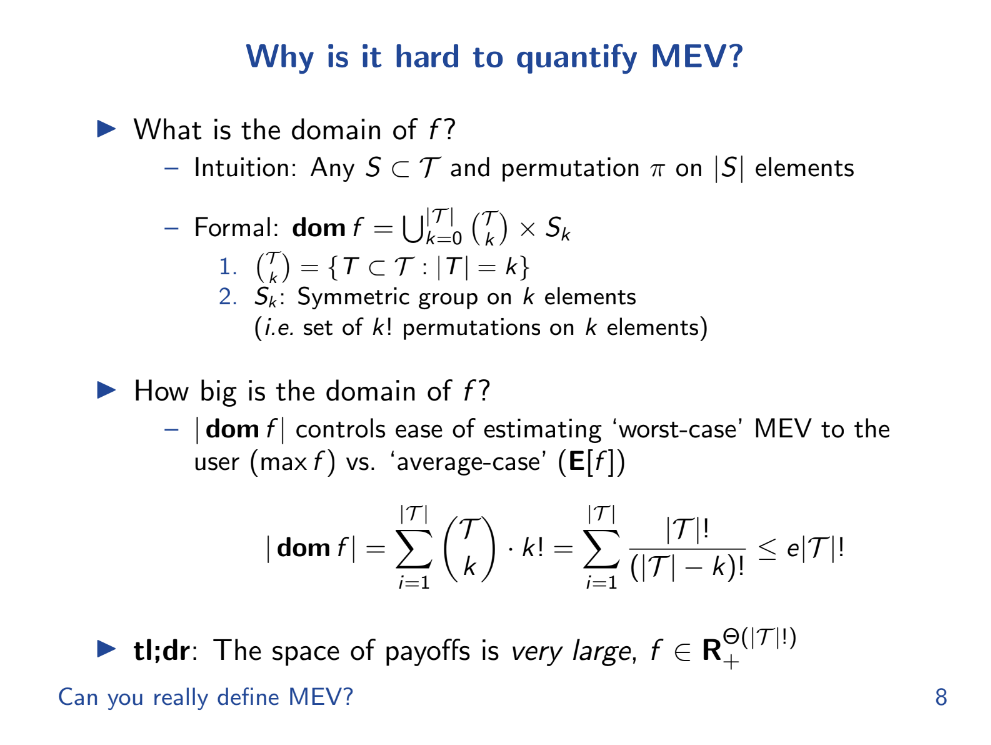

Why is it hard to quantify MEV ? (5:40)

Several reasons why it is hard to quantify MEV :

- Very large transaction space. MEV depends on the set of all possible transactions (T) that could be included in a block. This set is extremely large, especially as the number of blockchain users grows. Quantifying MEV requires looking at all possible subsets and orderings of T.

- Complex valuation. MEV also depends on the valuation (payoff) function f that assigns a value to each transaction subset and ordering. This function can be very complex and determined by market conditions. It's difficult to precisely model.

- Private information. Validators have private information about pending transactions and the valuation of transaction orders that external observers don't have.

- Changing conditions. f depends on dynamic market conditions. In other words, the valuation of transactions is constantly changing as market conditions change. We must quantify a moving target

- New transaction types. As new smart contracts and transaction types are introduced, it expands the transaction space and complicates valuation.

Fairness

We're using the term "payoff" to represent the benefit or outcome someone gets from a certain arrangement of things (like the order of transactions).

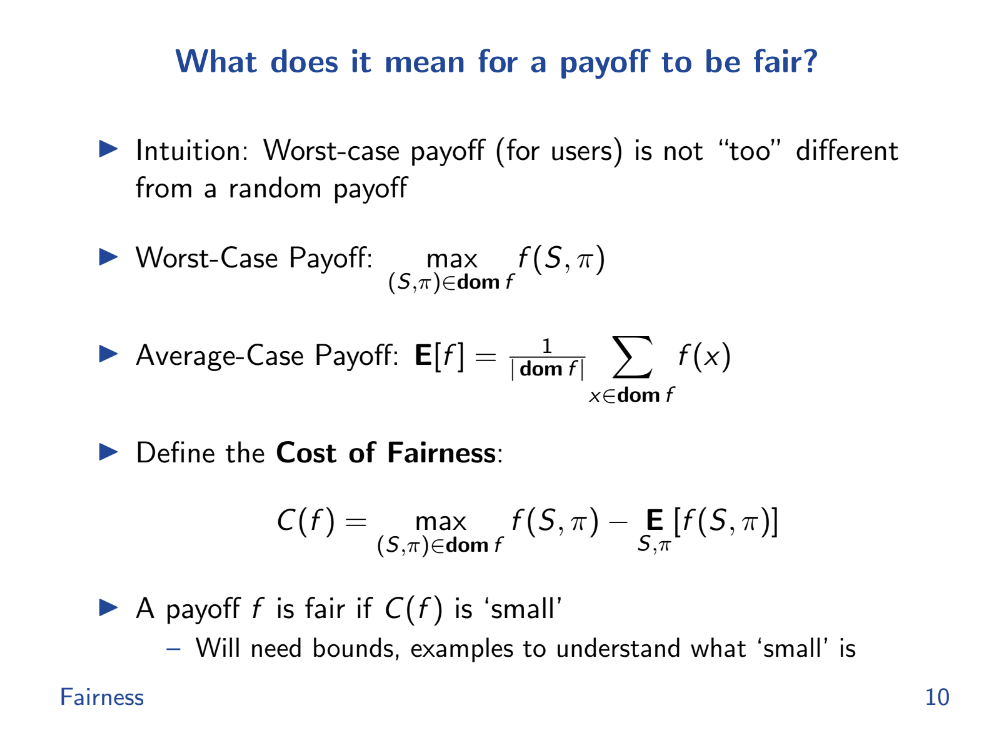

What does it mean for a payoff to be fair ? (6:40)

According to Tarun, "fairness" is where the worst case payoff is not too far from the average random payoff. This represents a validator's inability to censor or reorder transactions.

- Worst Case Payoff: Imagine if someone cheats and arranges the deck to their advantage; this scenario gives them the highest payoff possible. It's the best they can do by cheating.

- Average Case Payoff: This is what typically happens when no one cheats; it's the average outcome over many games.

- Cost of Fairness: It's like a measure of how much someone can gain by cheating. It's the difference between the worst case and the average case payoffs.

A situation is more "fair" if this cost of fairness is small, meaning there's little to gain from cheating

Cost of Fairness for reordering (7:50)

We want to quantify how much extra validators can extract by reordering. The assumptions are as follows :

- Fixed set of possible transactions

- Focus only on reordering for now

- No permutation subgroups that leave payoff invariant

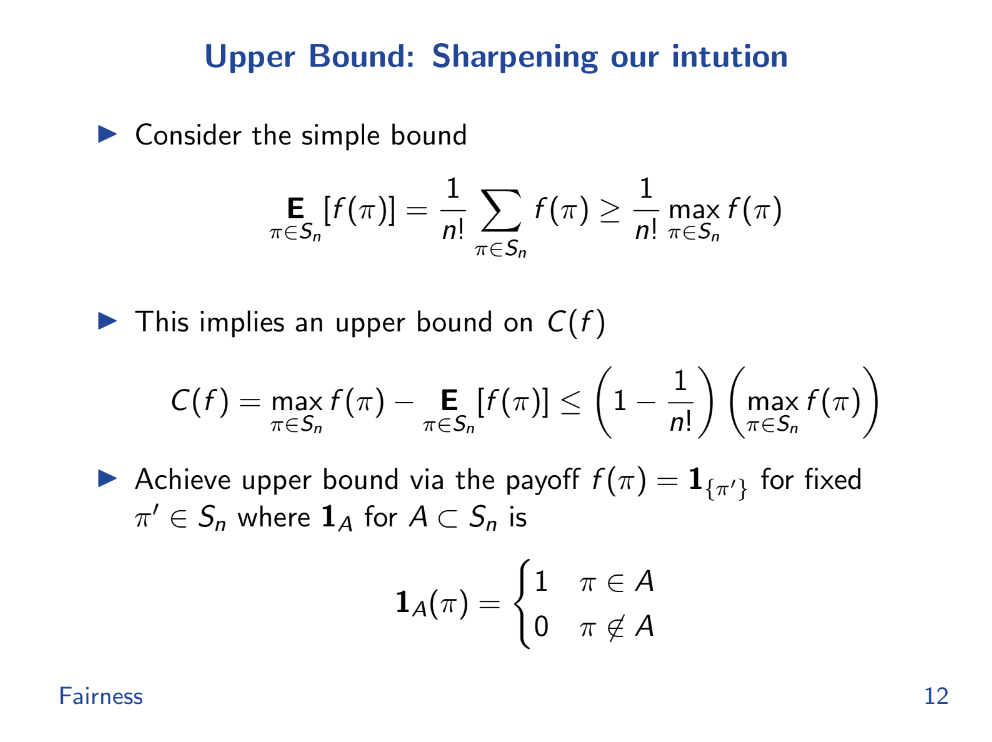

Upper Bound (8:45)

With those assumptions :

- The max possible unfairness happens when payoff is 1 for one order, 0 for all others.

- In general, unfairness is bounded by : (Max payoff - Average payoff) ≤ Max payoff * (1 - 1/n!) where n is the number of transactions, with equality in the extreme case above
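For small n, the cost of fairness can be computed by brute force over all n! orderings. The sketch below is an illustration with hypothetical payoff functions (non-negative payoffs assumed), not code from the talk.

```python
from itertools import permutations
from math import factorial

def cost_of_fairness(payoff, n):
    """Max payoff minus average payoff over all n! orderings of n transactions."""
    values = [payoff(order) for order in permutations(range(n))]
    return max(values) - sum(values) / len(values)

def single_winner(order):
    """Extreme case: payoff 1 for exactly one ordering, 0 for all others."""
    return 1.0 if order == tuple(range(len(order))) else 0.0

def constant(order):
    """Perfectly fair case: same payoff whatever the ordering."""
    return 1.0

n = 4
worst = cost_of_fairness(single_winner, n)
bound = 1.0 * (1 - 1 / factorial(n))   # max payoff * (1 - 1/n!)
print(worst, bound, cost_of_fairness(constant, n))
```

The single-winner payoff sits exactly at Max payoff * (1 - 1/n!), while the constant payoff has a cost of fairness of zero.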

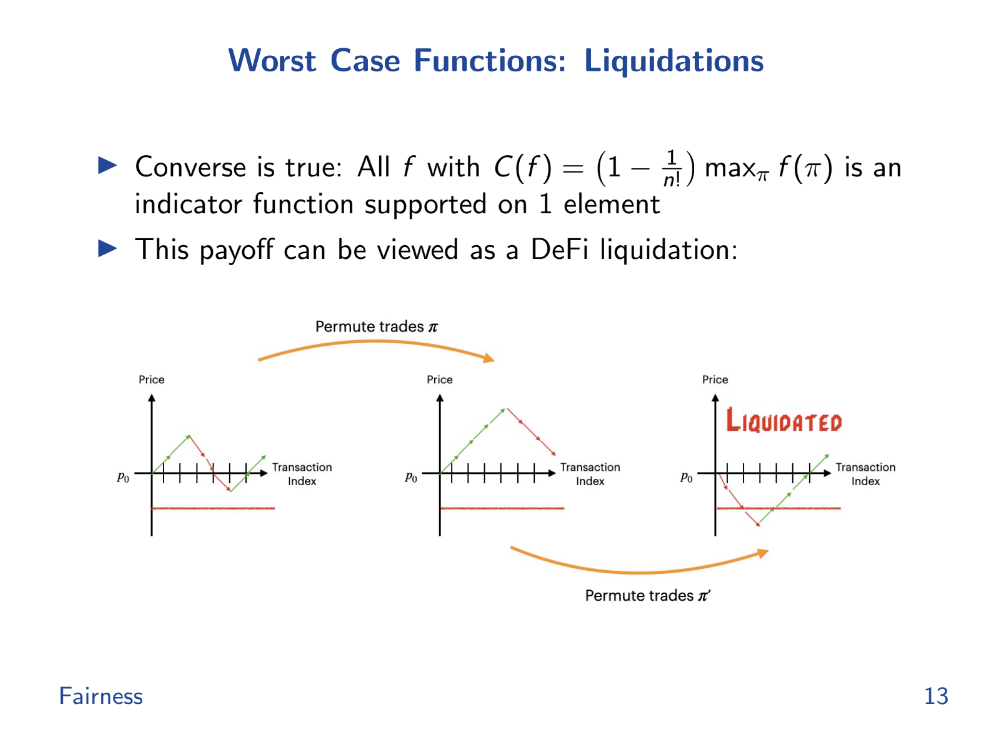

Worst case functions (9:30)

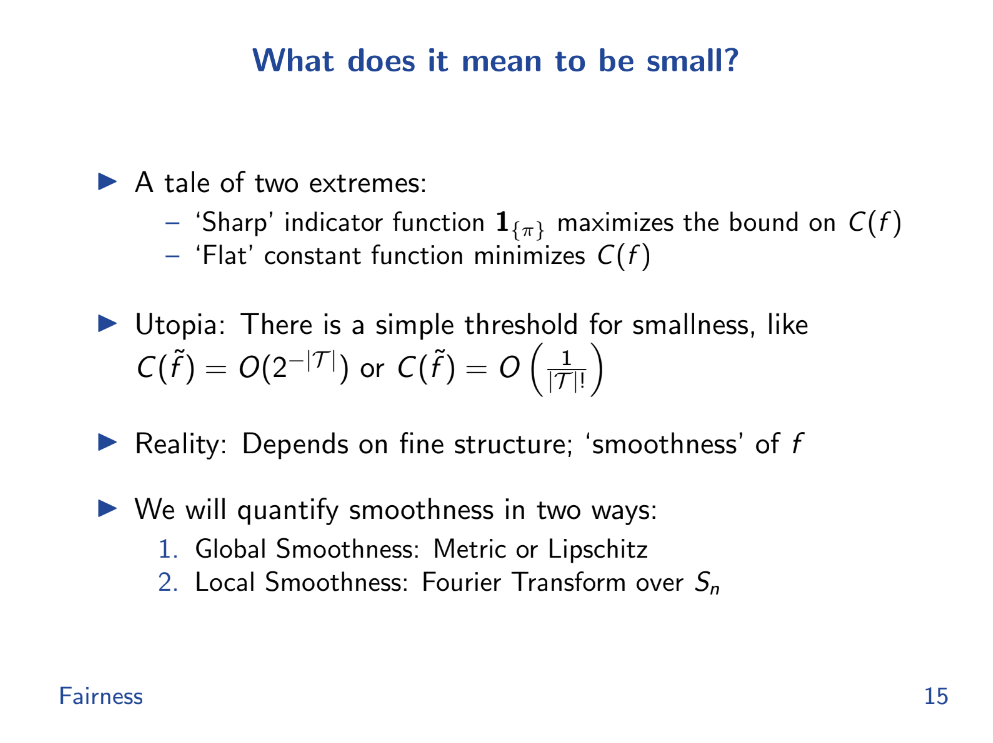

DeFi liquidations represent a kind of "basis" set of payoff functions that generate high costs of fairness.

The order you execute these trades can affect whether you lose a lot of money at once (get liquidated) or not. Different orders or "permutations" of trades have different outcomes, and some might hit a point where you get liquidated, while others won't.

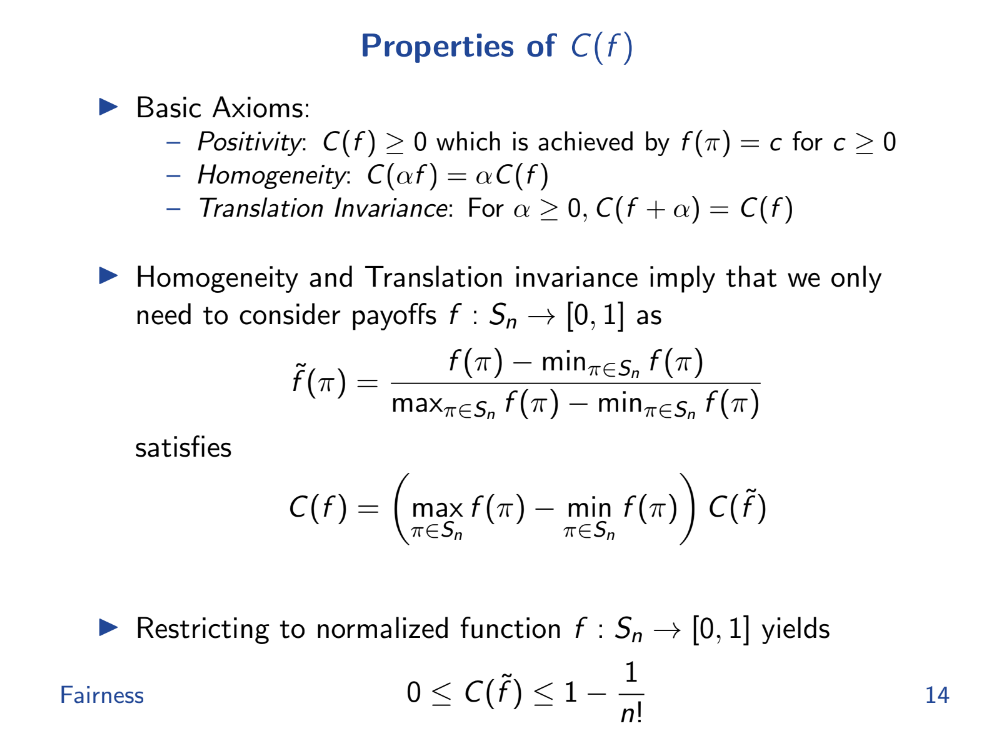

Properties of Cost of Fairness (10:25)

- The cost of fairness is defined as the difference between worst case and average payoffs.

- Perfectly fair means same payoff for any order. Perfectly unfair means payoff only for one order.

Why use an additive measure of fairness ? (12:20)

The problem is that ratio measures like max/min or max/average can become exaggerated or unbounded for extreme payoff functions like liquidations. So we need an additive measure of fairness that quantifies how much the payoff changes between different orderings.

Additive means difference between max and average. By using an additive measure, it's easier to analyze and understand the variations in payoff and thus, the level of fairness in the system, making it a more reliable method in such contexts.

Smooth Functions

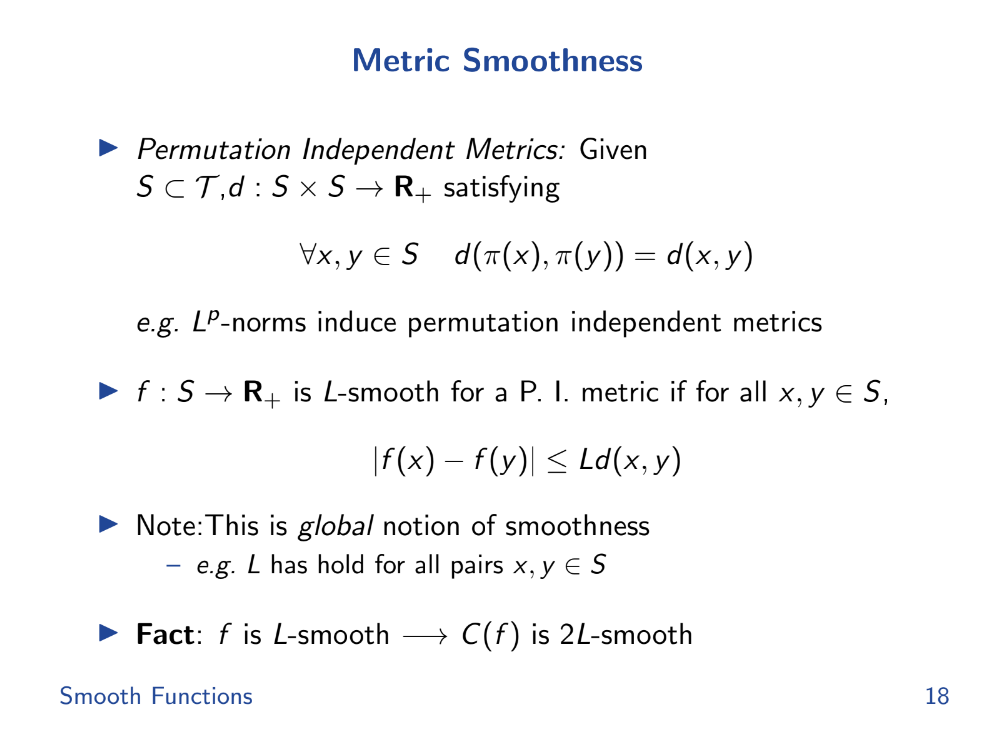

Metric smoothness (13:20)

In calculus, Lipschitz smoothness measures how predictable a function is : if a function is Lipschitz smooth, the amount its value can change is bounded in proportion to the change in its input, which makes it more predictable.

We can define similar "smoothness" metrics for AMMs based on how much their prices change in response to trades.

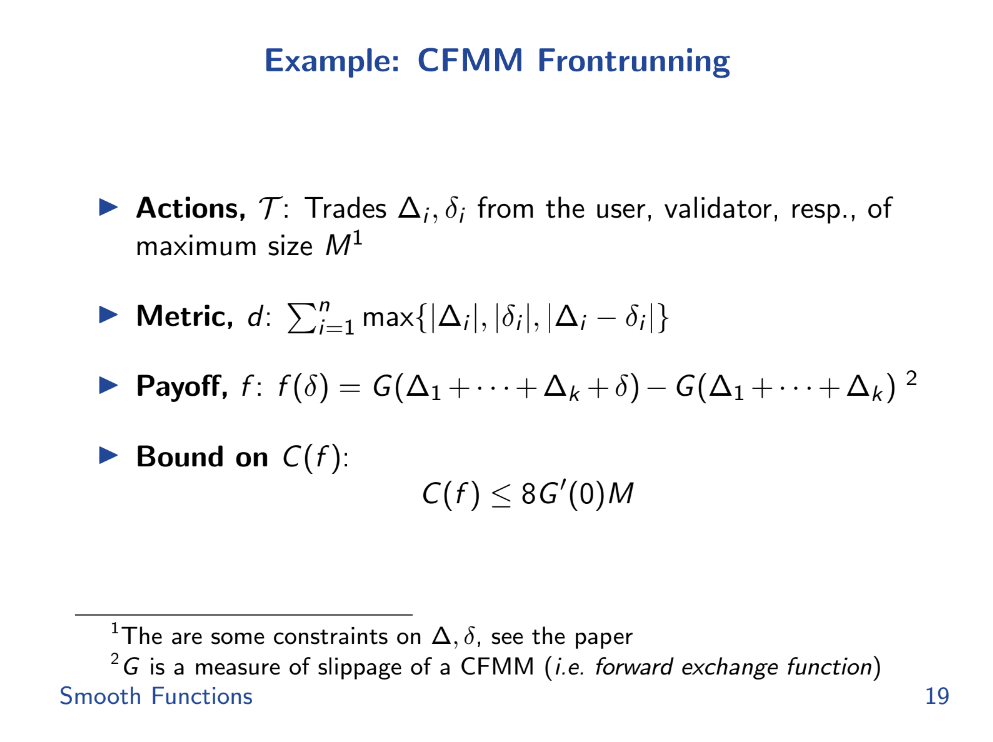

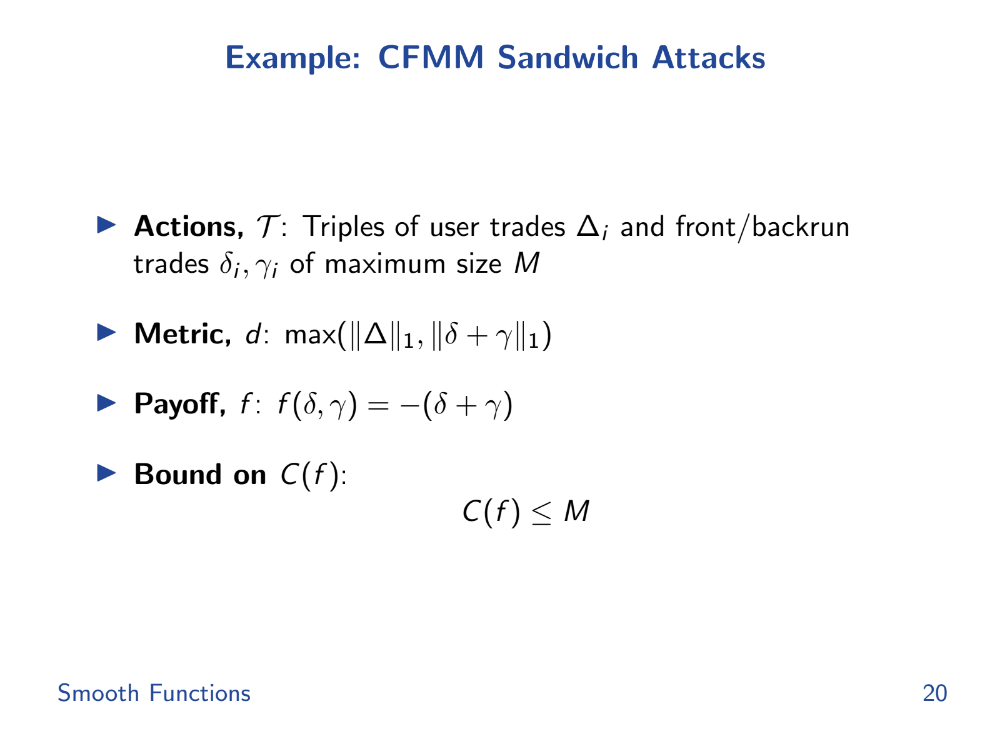

Examples (14:15)

Intuitively, an AMM with smoother price changes (lower gradient) should be less vulnerable to attacks like front-running that exploit rapid price swings. We can mathematically upper bound the potential unfairness or manipulation in an AMM by its smoothness metric.

For front-running, the smoothness of the AMM's pricing function directly controls the max-min fairness gap. But surprisingly, sandwich attacks actually have better (lower) bounds than worst-case front-running in some AMMs like Uniswap.
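The link between smoothness and ordering sensitivity can be illustrated with a fee-less constant-product pool (x * y = k). This is a sketch under assumptions chosen for illustration: pool depth stands in for smoothness (a deeper pool has a flatter price curve), and the trade sizes and function names are hypothetical.

```python
def swap_out(x_reserve, y_reserve, dx):
    """Constant-product swap without fees: pay dx of X, receive dy of Y."""
    return y_reserve * dx / (x_reserve + dx)

def victim_output(x_reserve, y_reserve, victim_dx, frontrun_dx, victim_first):
    """Y received by the victim, depending on whether a same-direction trade precedes them."""
    if victim_first:
        return swap_out(x_reserve, y_reserve, victim_dx)
    dy = swap_out(x_reserve, y_reserve, frontrun_dx)
    return swap_out(x_reserve + frontrun_dx, y_reserve - dy, victim_dx)

def ordering_gap(reserve, victim_dx=10.0, frontrun_dx=50.0):
    """Difference in victim output between the best and worst ordering."""
    best = victim_output(reserve, reserve, victim_dx, frontrun_dx, victim_first=True)
    worst = victim_output(reserve, reserve, victim_dx, frontrun_dx, victim_first=False)
    return best - worst

shallow, deep = ordering_gap(1_000.0), ordering_gap(100_000.0)
print(f"gap in shallow pool: {shallow:.4f}, gap in deep pool: {deep:.6f}")
```

The same pair of trades produces a much smaller ordering gap in the deeper pool, which matches the intuition that a smoother pricing function leaves less to gain from reordering.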

Spectral Analysis

Localized smoothness (15:35)

We'll shift from global "smoothness" metrics to more local ones. Global metrics compare all possible orderings of transactions, but local metrics only look at small changes.

This matters because in real systems like blockchain lanes or state channels, you often only care about local fairness - like between two trades in a lane, not all trades globally.

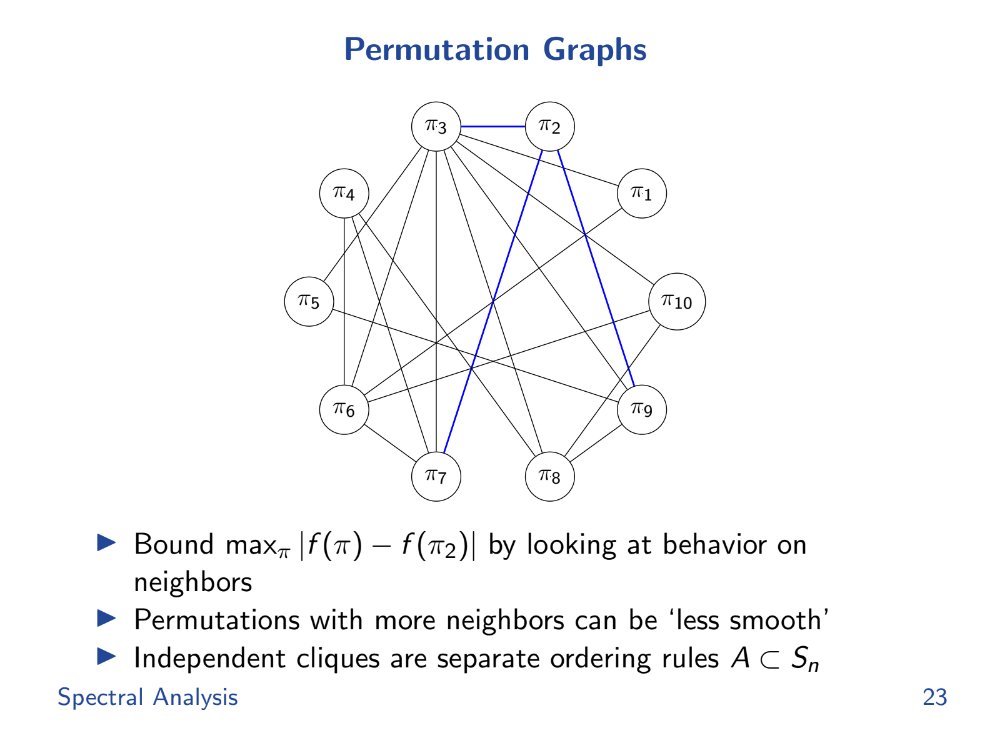

Permutation Graphs (16:30)

To help study this local smoothness, Tarun brings in a field of math called spectral graph theory. It envisions the valid transaction orderings as a graph, with edges for allowed transitions between orderings.

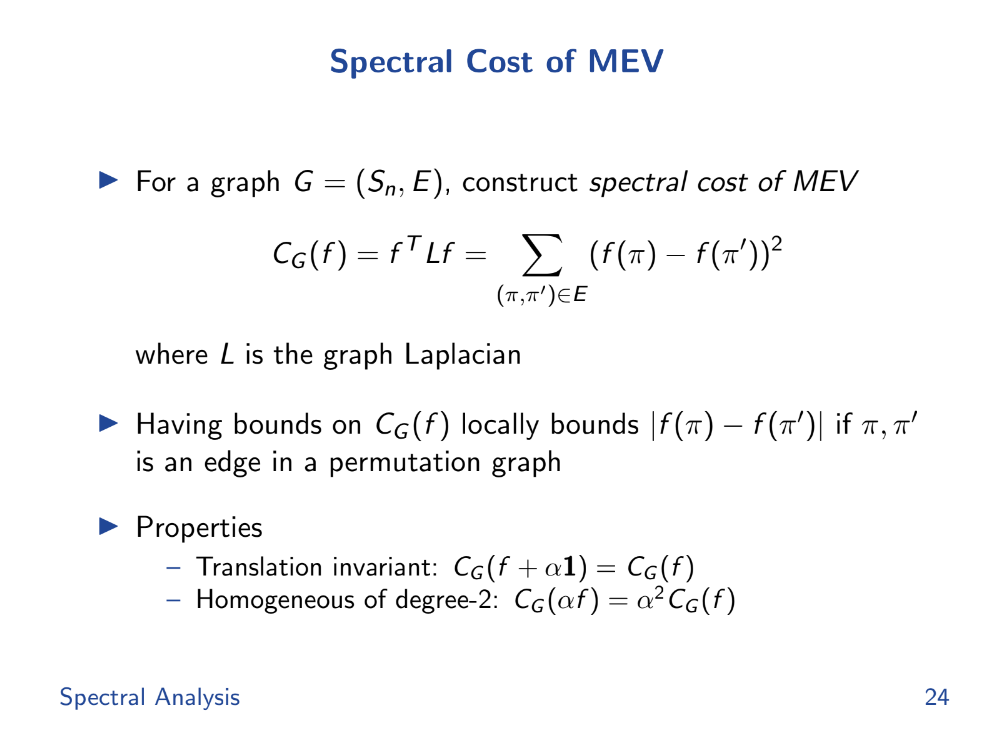

Spectral cost of MEV (17:20)

The talk defines a "spectral cost of MEV" as the difference in payoff between connected nodes. This measures local smoothness, not all alternatives globally.

Tarun introduces a function Cg and uses some mathematical tools like eigenvalues and the Fourier transform to analyze and bound the deviation in outcomes.

These tools help measure how "smooth" or predictable the system is with different orderings.

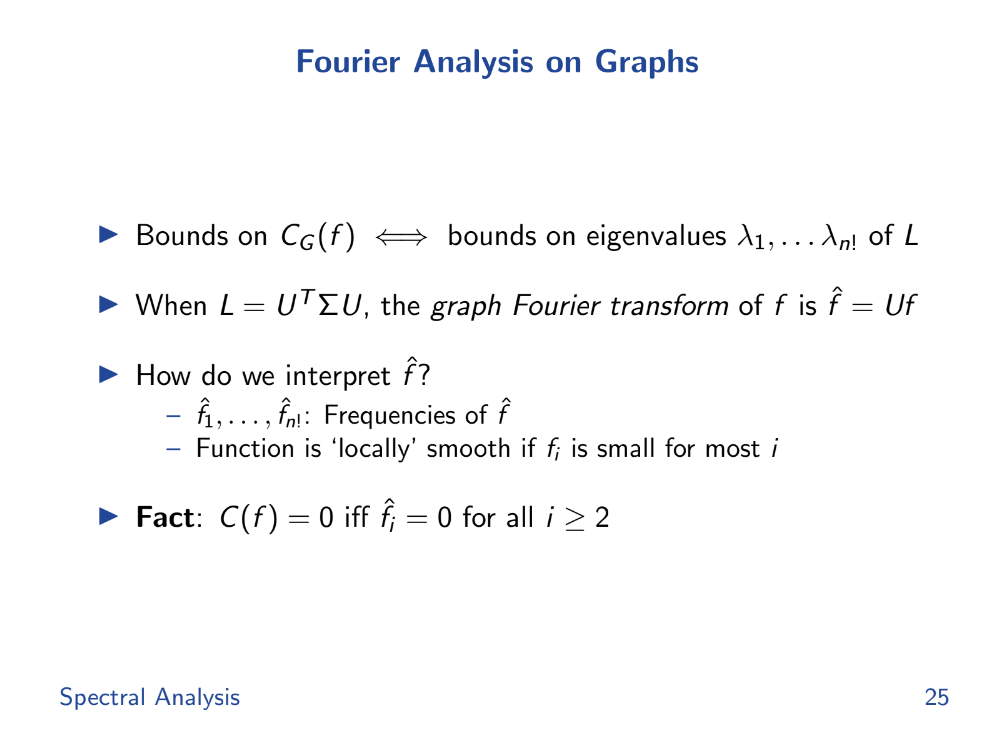

Fourier Analysis (17:50)

If the high frequency Fourier coefficients are zero, the payoff function is constant, and therefore perfectly smooth and fair. The spectral mean of the eigenvalues bounds the unfairness cost.

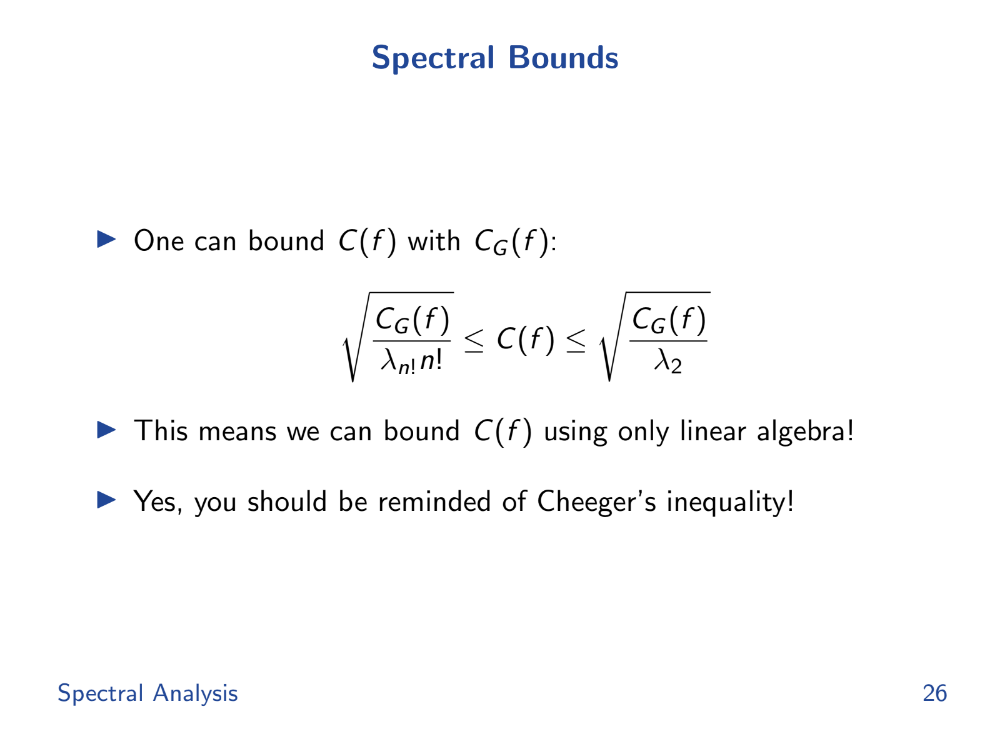

Spectral bounds (18:30)

The spectral bounds, derived from the eigenvalues, help in bounding the local cost. By bounding the local cost, they can ensure that the deviation in outcomes remains within acceptable limits, thus ensuring fairness.

So if we can analyze the spectrum of the graph Laplacian L, we can bound payoff differences between local orderings. This gives a "certificate of fairness".
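A minimal sketch of this idea, not Tarun's actual construction: assume the graph connects orderings that differ by one adjacent swap, measure the local cost as the sum of squared payoff differences over edges (the Laplacian quadratic form f^T L f), and bound it with the standard fact that the largest Laplacian eigenvalue is at most twice the maximum degree. The payoff function and all names are hypothetical.

```python
from itertools import permutations

def adjacent_swaps(order):
    """Neighbors of an ordering: swap two adjacent transactions."""
    for i in range(len(order) - 1):
        swapped = list(order)
        swapped[i], swapped[i + 1] = swapped[i + 1], swapped[i]
        yield tuple(swapped)

def local_cost(payoff, n):
    """Sum of squared payoff differences over edges, i.e. the Laplacian form f^T L f."""
    total = 0.0
    for order in permutations(range(n)):
        for neighbor in adjacent_swaps(order):
            if order < neighbor:   # count each undirected edge once
                total += (payoff(order) - payoff(neighbor)) ** 2
    return total

def degree_bound(payoff, n):
    """Upper bound 2 * max_degree * ||f - mean(f)||^2, using lambda_max(L) <= 2 * max_degree."""
    values = [payoff(order) for order in permutations(range(n))]
    mean = sum(values) / len(values)
    centered_norm_sq = sum((v - mean) ** 2 for v in values)
    return 2 * (n - 1) * centered_norm_sq   # every ordering has n - 1 neighbors

def position_of_tx0(order):
    """Toy payoff: earlier placement of transaction 0 pays more."""
    return float(len(order) - order.index(0))

n = 4
print(local_cost(position_of_tx0, n), degree_bound(position_of_tx0, n))
```

A constant payoff gives a local cost of zero, and a tighter certificate would replace the degree-based bound with the actual eigenvalues of L.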

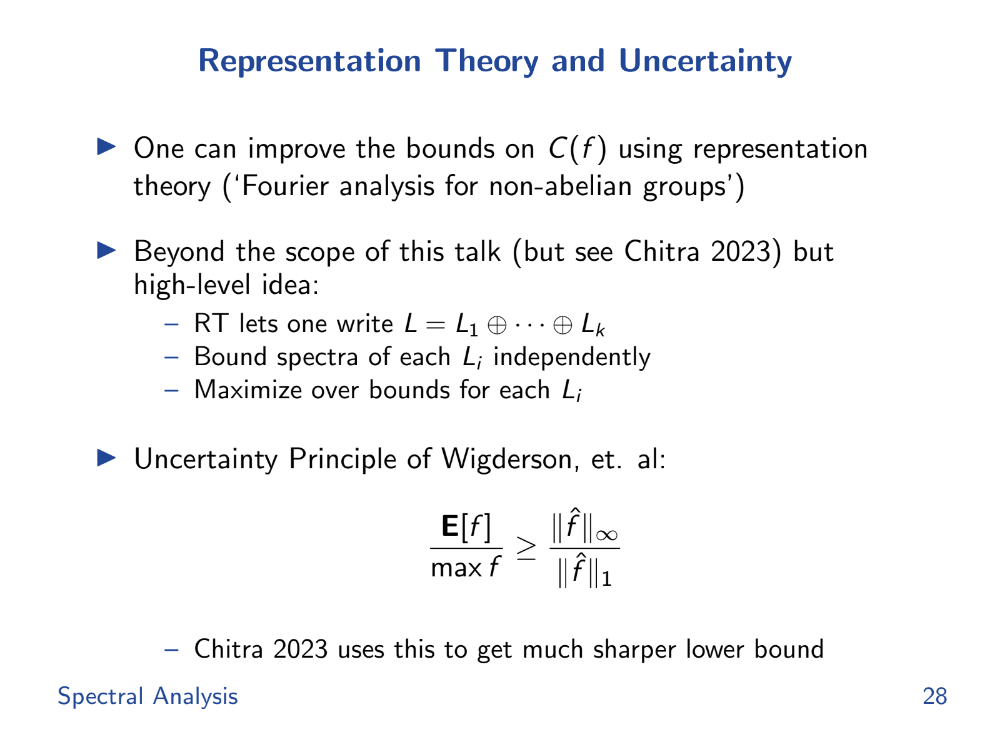

Representation Theory and Uncertainty (20:00)

There are still challenges :

- The graph can be huge, making eigenvalue analysis costly. But for sparse graphs there are shortcuts.

- The bounds are loose. But there are ways to decompose L and tighten the bounds.

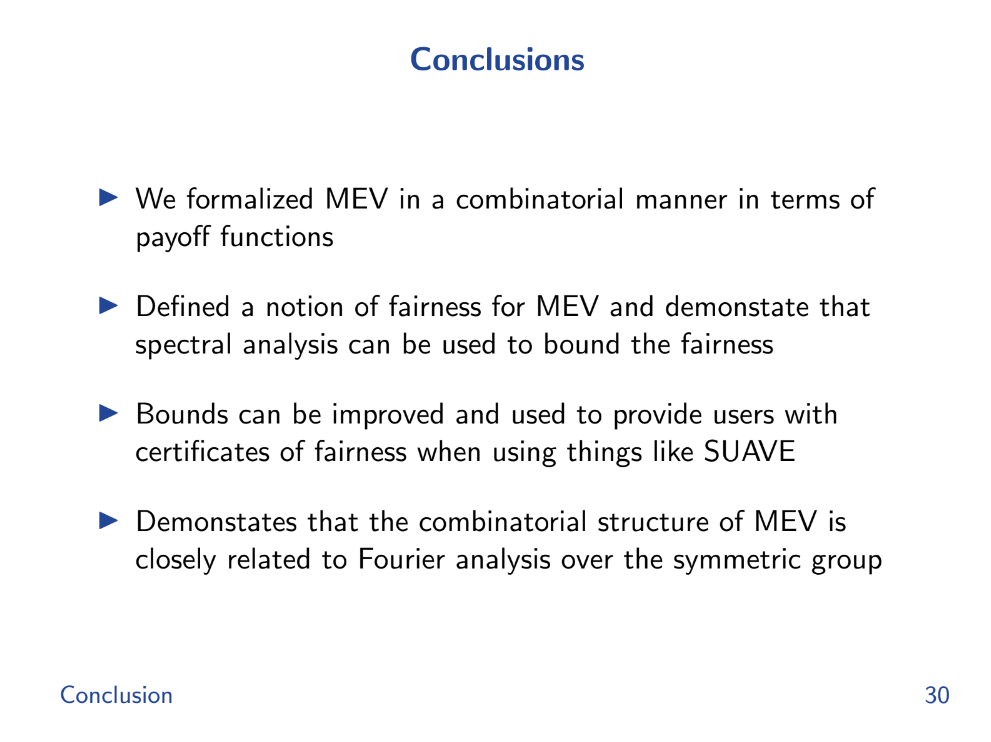

Conclusion (20:20)

The talk formalized MEV (maximum extractable value) using combinatorics rather than programs. This makes the analysis cleaner.

Defined "fairness" as the difference between the worst case and average case payoff over transaction orderings.

We showed how spectral graph theory can bound the smoothness of payoff functions. This helps bound the maximum unfairness.

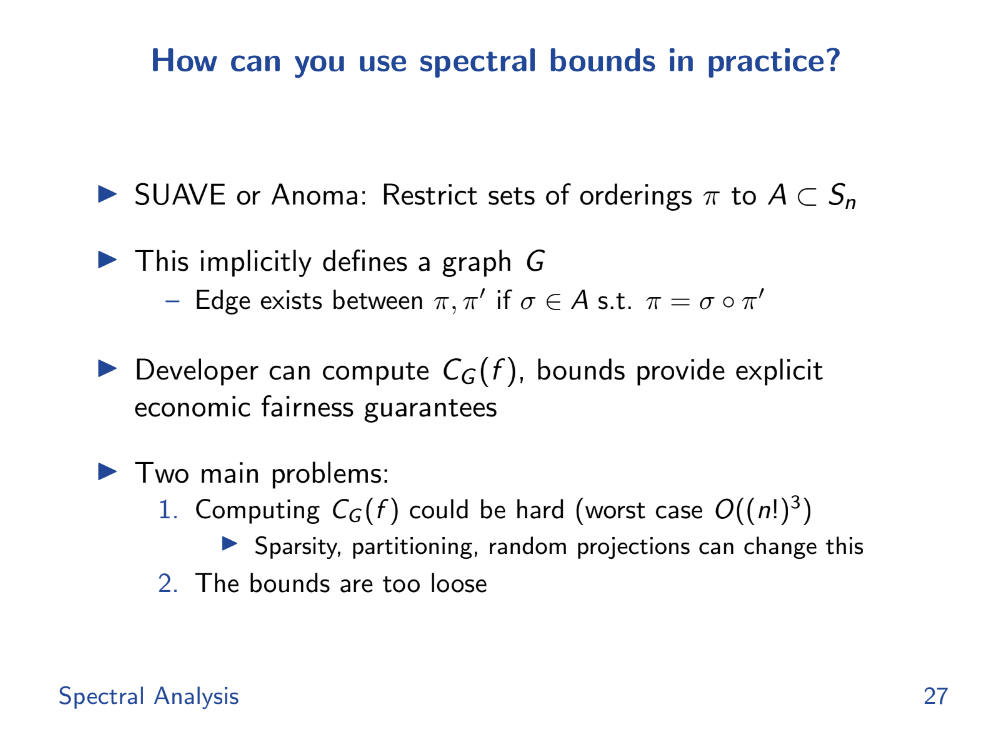

So by doing some linear algebra on the graph of valid orderings, you can get "certificates of fairness" for protocols like SUAVE or Anoma.

Fourier analysis on the symmetric group of orderings is important for this graph analysis.

Q&A

In Ethereum's decentralized finance (DeFi) ecosystem, liquidations are a big source of MEV. But many DeFi apps now use Chainlink for price feeds, avoiding on-chain price manipulation. So in practice, how much MEV from liquidations still exists ? (21:20)

MEV extraction can be modeled mathematically for any transaction ordering scheme. We can represent any payout scheme (like an auction or lending platform) as a "liquidation basis."

Even if apps use Chainlink to avoid direct manipulation, the ordering of transactions can still impact profits.

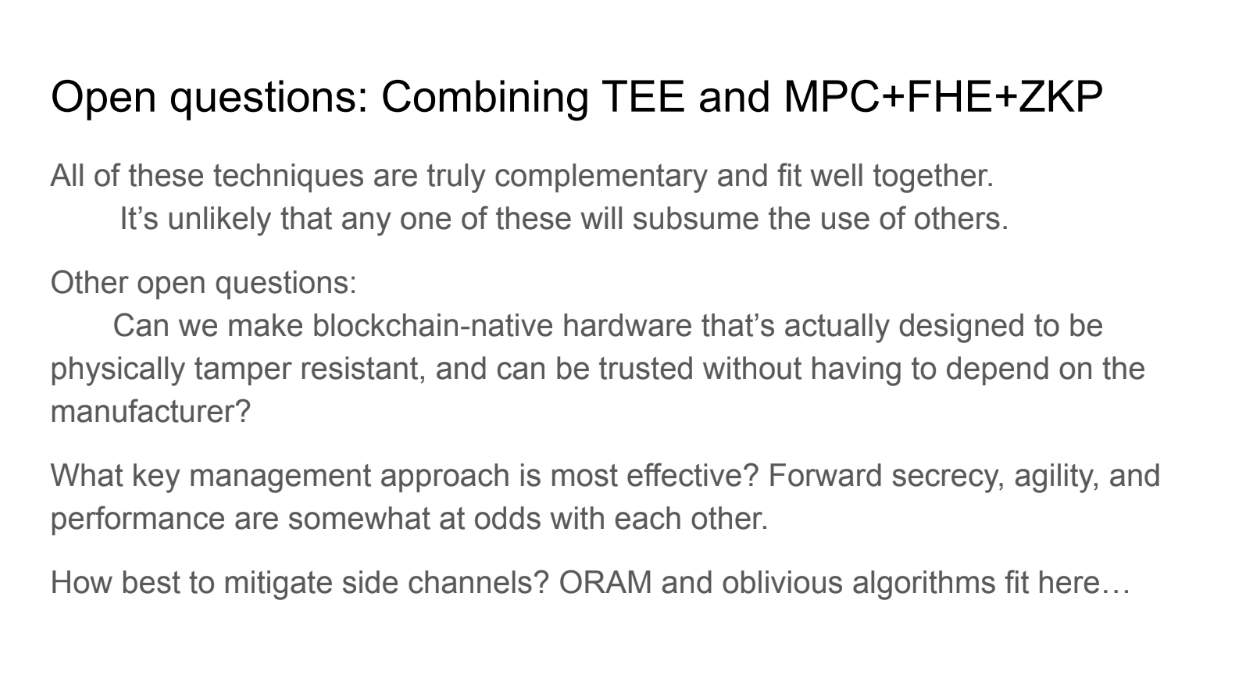

Can mechanisms like PBS or PEPC restrict the set of valid transaction orderings, reducing MEV ? (22:45)

Tarun isn't sure about PBS, but he agrees with the general idea. Solutions like Anoma and SUAVE restrict transaction ordering, which helps bound/reduce MEV.

The key is formally analyzing how much a given constraint improves "fairness" by restricting orderings.

Could you use this MEV analysis to set bounds on a Dutch auction, as an alternative to order-bundled auctions ? (24:00)

Yes, Dutch auctions could potentially be modeled to bound MEV exposure. The core idea is representing payout schemes to reason about transaction ordering effects.

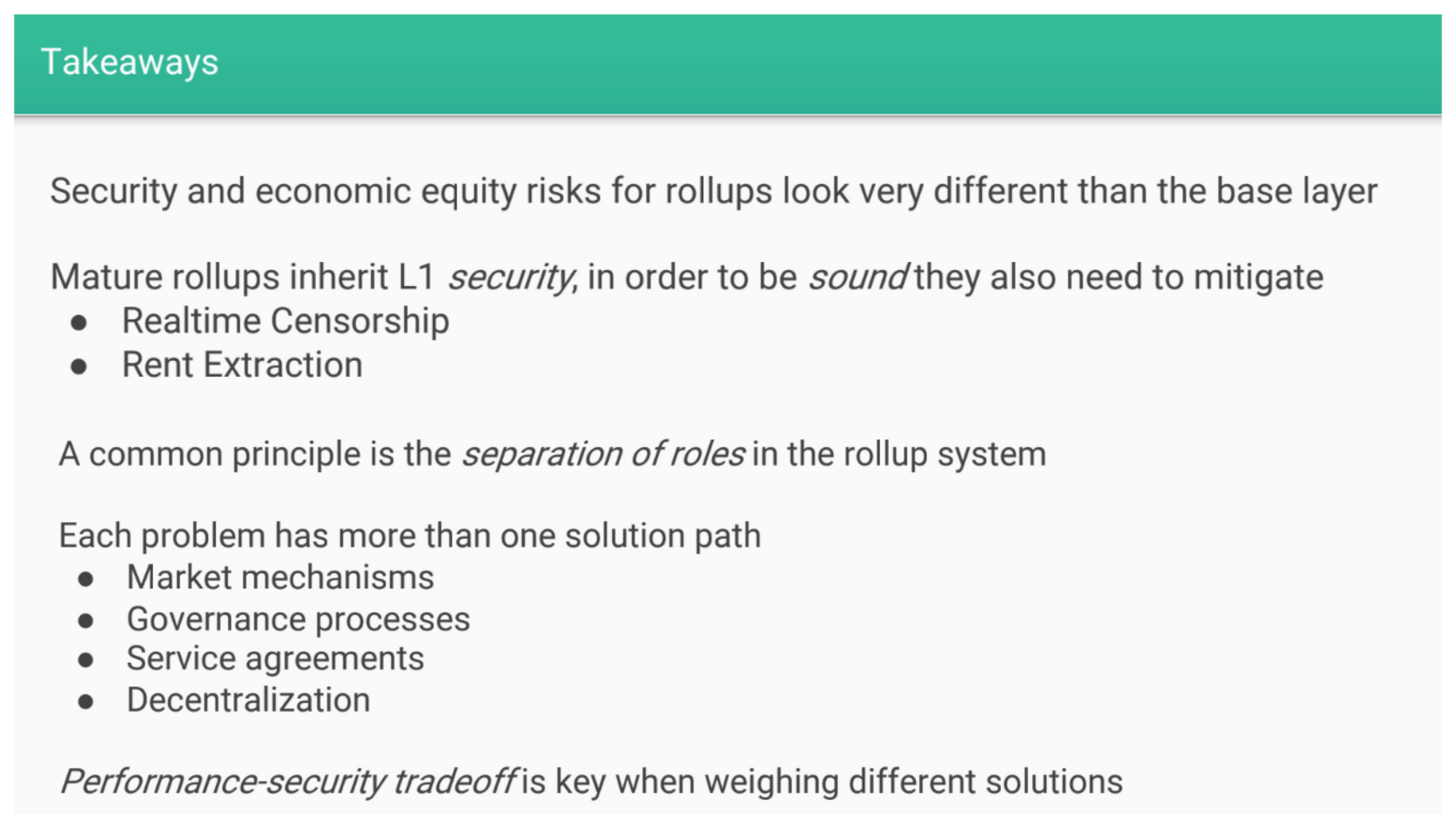

Properties of Mature & Sound Rollups

There are two main types of MEV possible on layer two:

- Macro MEV : where the rollup operator could delay or censor transactions to profit.

- Micro MEV : where validators extract unnecessary fees from transactions, like on layer 1.

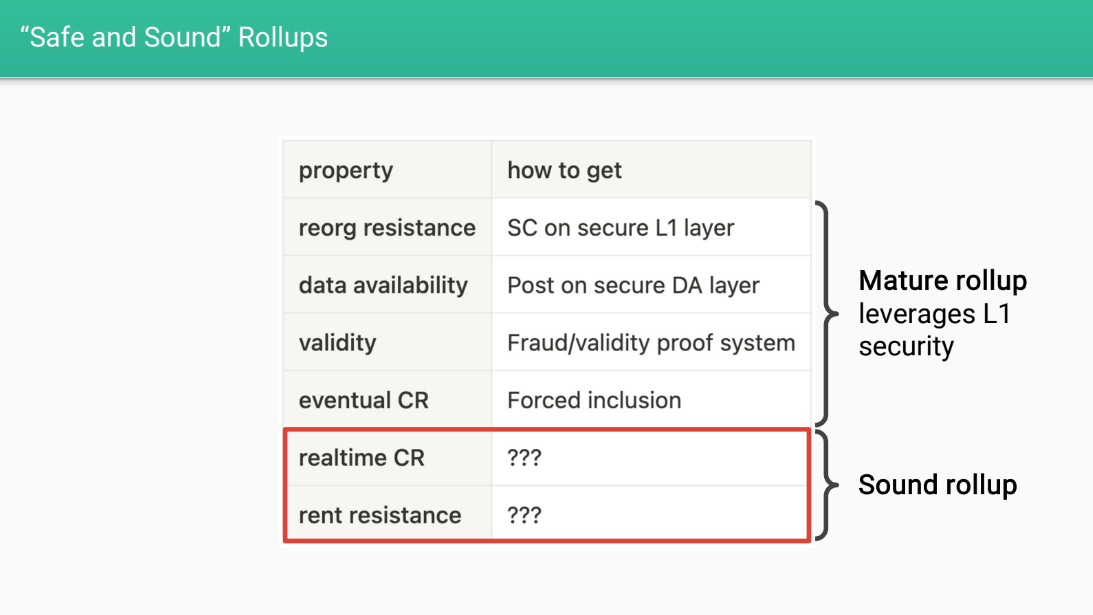

Safe and Sound Rollups (1:00)

Davide wants layer 2 rollups to have certain properties to make them "safe and sound".

Firstly, rollups should have security properties inherited from layer 1 Ethereum :

- Reorg resistance : transactions can't be reversed

- Data availability : transaction data is available

- Validity : transactions follow protocol rules

- Eventual censorship resistance : transactions will eventually get confirmed

They should also have economic fairness properties described below

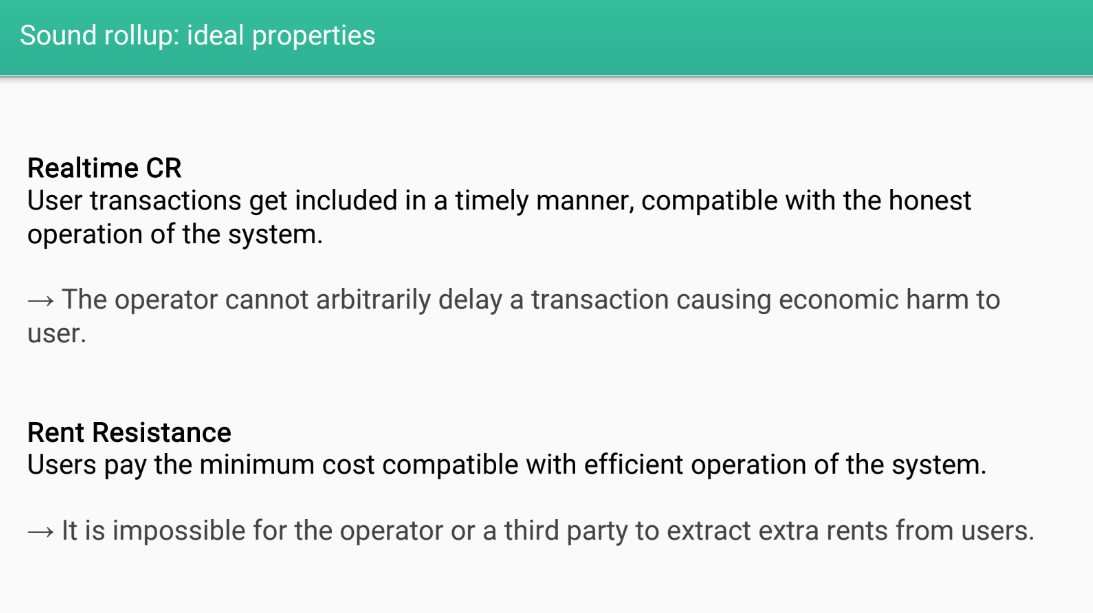

Ideal Properties (2:25)

- Real time censorship resistance : transactions get confirmed quickly without delays

- Rent resistance : users only pay minimum fees needed for operation, no unnecessary rents extracted

With real time CR and rent resistance, the rollup would be more fair and equitable for users

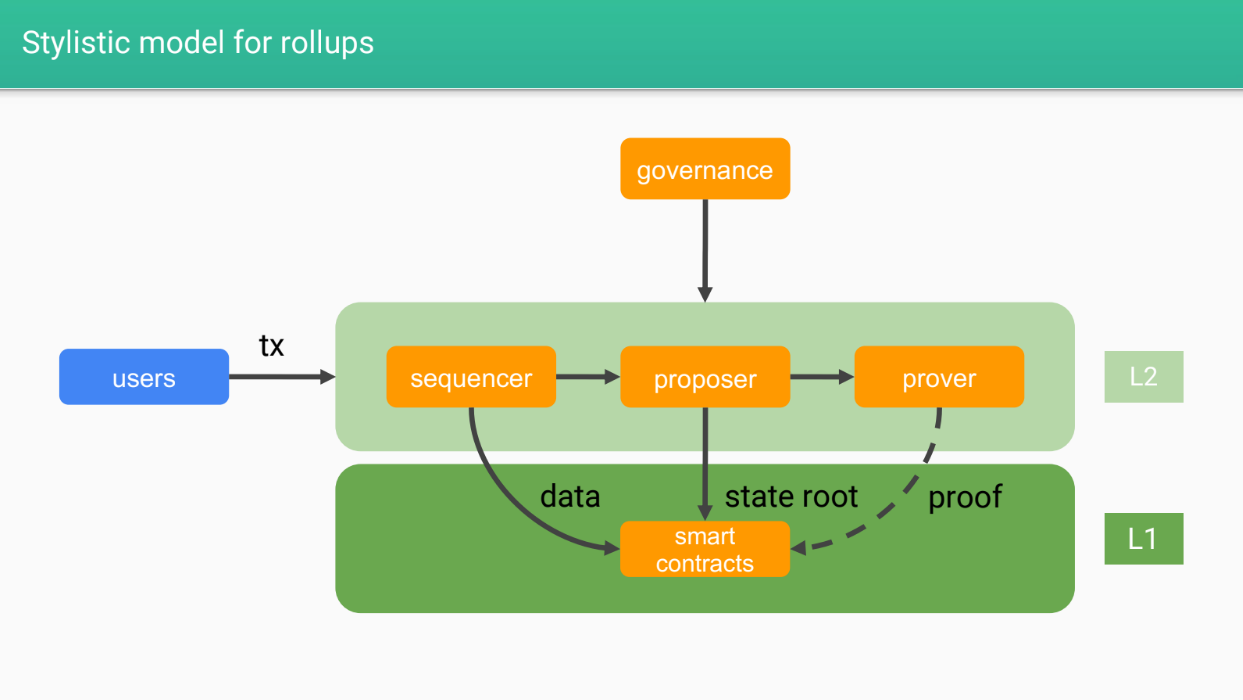

Stylistic model for rollups (3:05)

- Sequencers : Responsible for ordering transactions into blocks/batches and posting to layer 1

- Proposers : Execute transactions and update state on layer 1

- Provers : Check validity of state transitions (optional for some rollups)

- Smart contracts : The rollup logic on layer 1

- Governance : Designs and upgrades the rollup system

- Users : Interact with the rollup, secured by layer 1

The different roles in the rollup system relate to the potential for "macro MEV" and "micro MEV", which are discussed next. The goal is to design rollups to be fair and equitable for users.

Types of L2 MEV

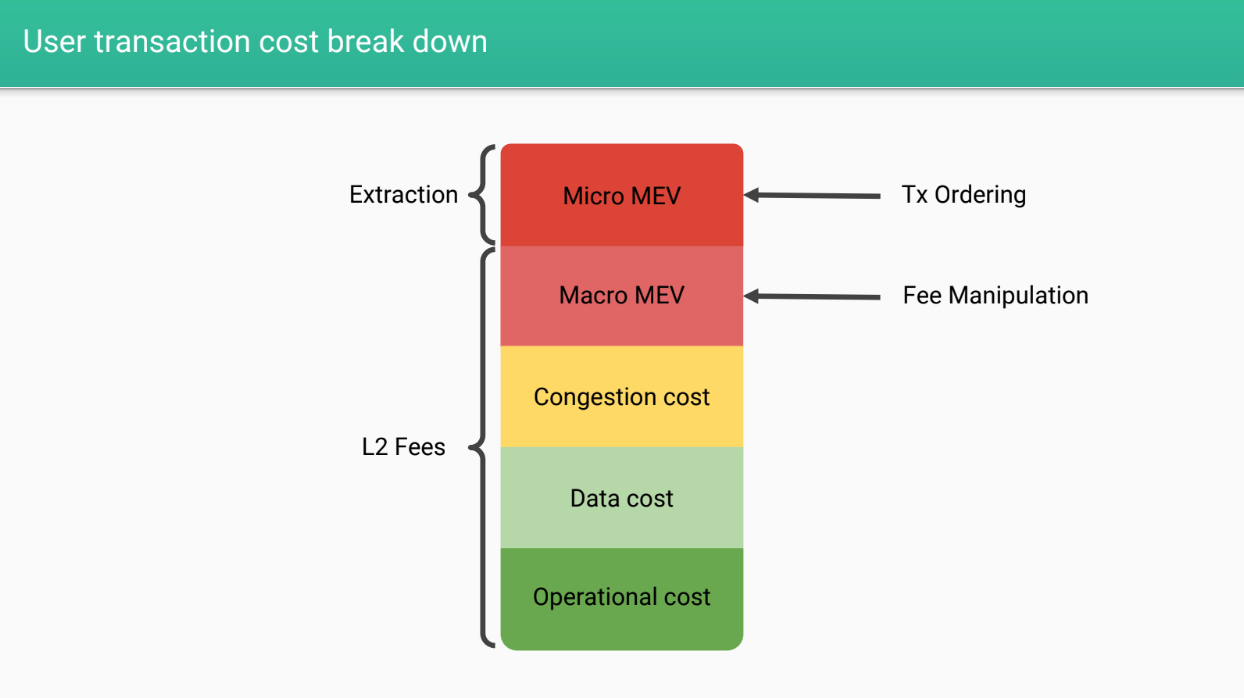

User transaction cost breakdown (4:30)

When a user sends a transaction on a rollup, they pay :

- Layer 2 fees for operation costs

- Data costs for posting data to layer 1

- Congestion fees for efficient resource allocation

- Potential "macro MEV" : fees extracted by the operator

- Potential "micro MEV" : transaction ordering extraction

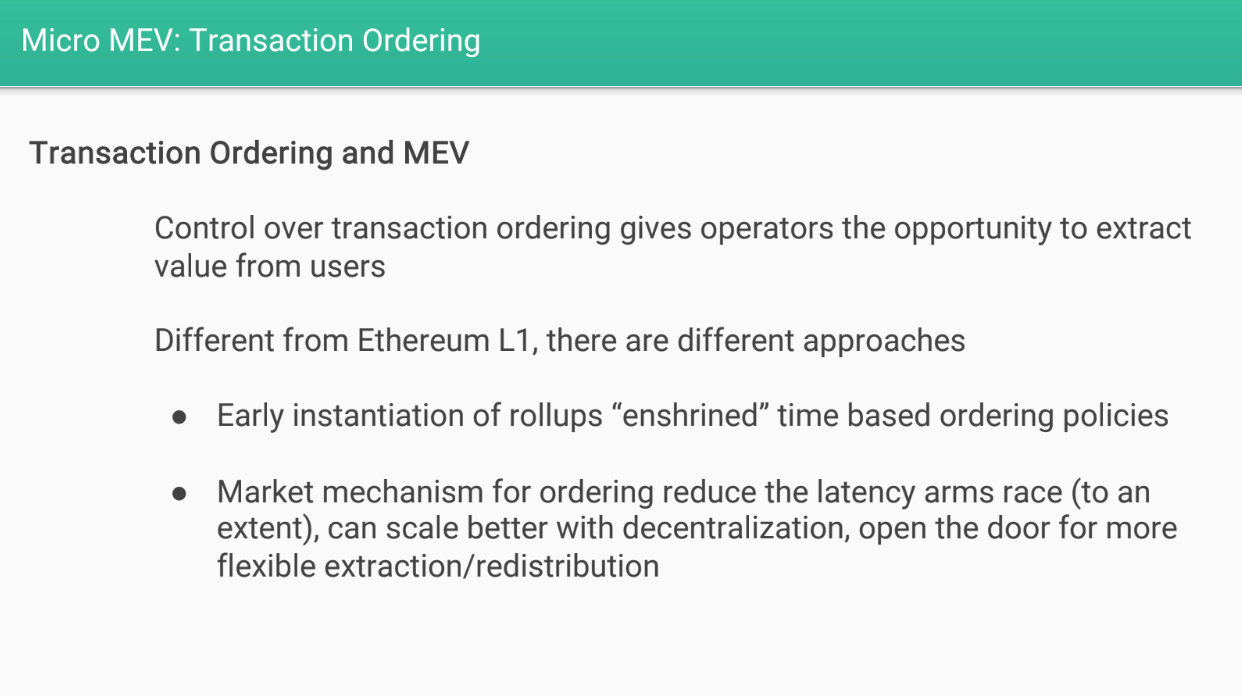

Micro MEV (6:05)

Micro MEV is transaction ordering extraction, like on layer 1. Others have discussed this, so we will focus on macro MEV by the operator.

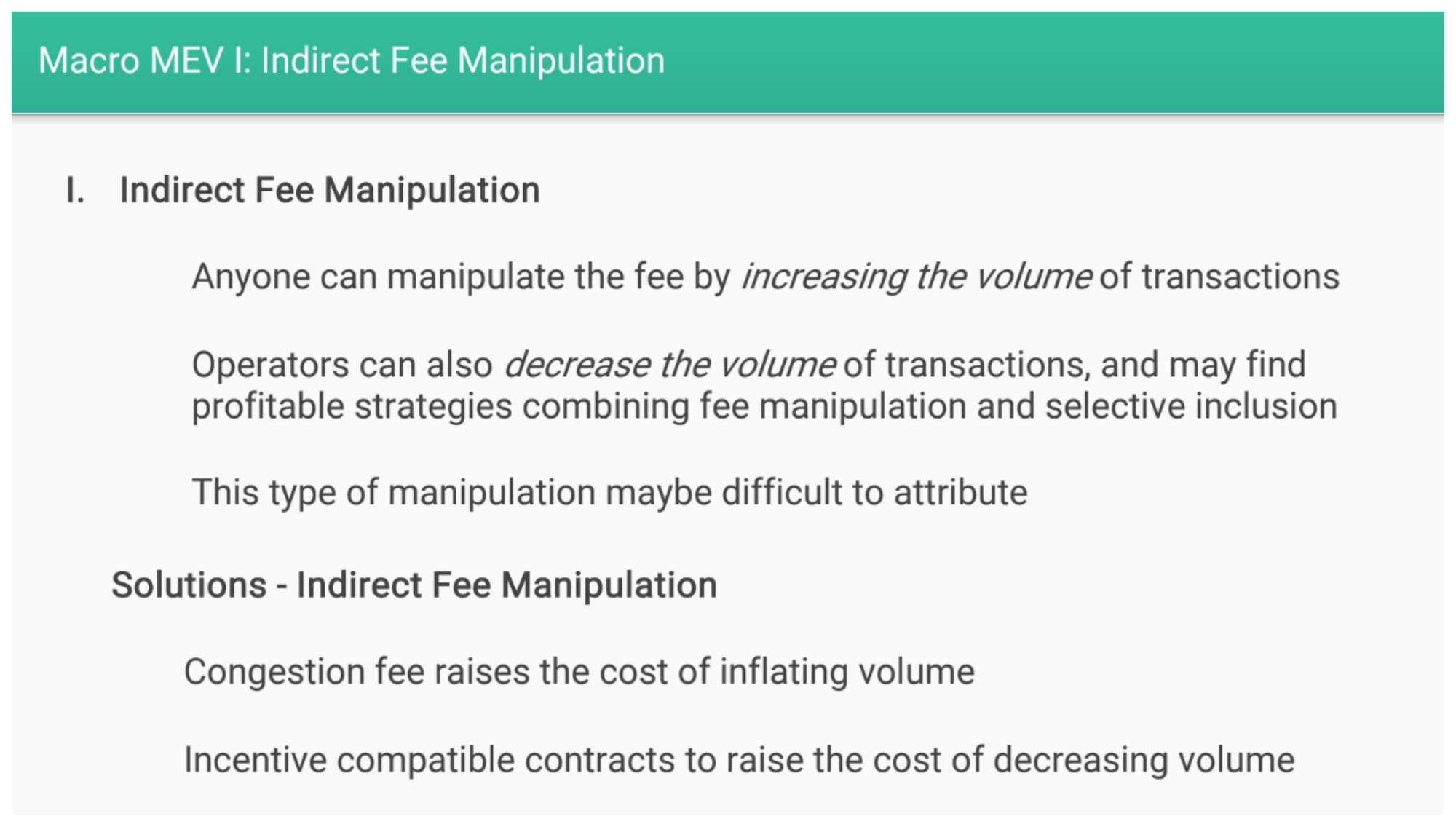

Macro MEV

There are 3 main forms of macro MEV:

- Indirect fee manipulation : Increasing or decreasing transaction volume to manipulate fees

- Direct fee manipulation : Operator directly sets high fees

- Delaying transactions : Operator delays transactions to profit from fees

Indirect fee manipulation (7:40)

Anyone can manipulate fees by increasing or decreasing transaction volume, but we can't determine precisely who is responsible for this kind of manipulation.

Potential solutions for indirect fee manipulation :

- Careful design of congestion fee and allocation

- Governance could create incentives to raise the cost of manipulating volume for the operator

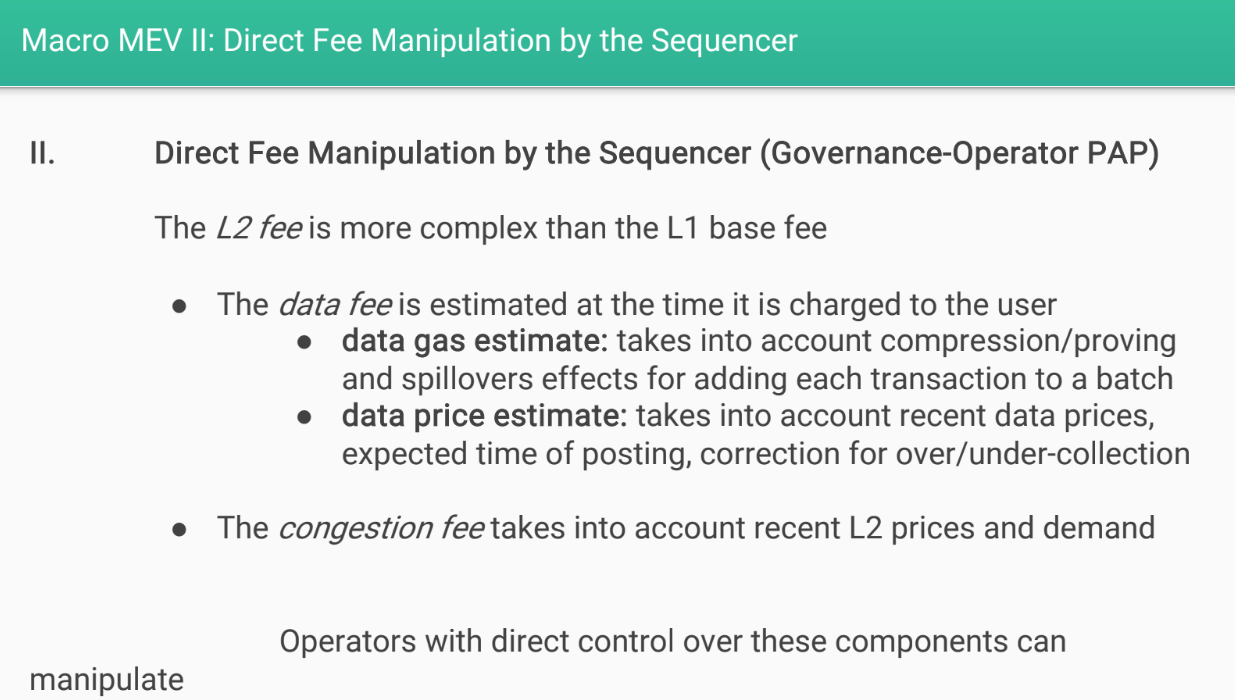

Direct fee manipulation by sequencer (8:45)

Layer 2 systems tend to be more centralized, so they have more control over transactions.

The sequencer estimates data fees in advance and sets congestion fees. This provides opportunities to manipulate fees overall and to discriminate between users by charging them different fees.

Potential solutions for direct fee manipulation :

- Governance contracts to align incentives of sequencer

- Observability into fees and ability to remediate issues

- Decentralize the sequencer role more

- Proof-of-Stake incentives to prevent bad behavior (L2 tokens ?)

Fee market design manipulation (11:30)

The governance controls the parameters of the fee market. For example:

- With EIP-1559 style fees, governance controls the base fee and tip limits

- Governance could artificially limit capacity

- Or fail to increase capacity when demand increases

This could increase fees and rents extracted from users.
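To make the capacity point concrete, here is a minimal sketch of the EIP-1559 base fee update rule. The function follows the integer arithmetic of the Ethereum specification, but the numbers (a 1 gwei starting fee, gas targets of 15M and 10M) are illustrative assumptions, and a given rollup's fee mechanism may differ.

```python
def next_base_fee(base_fee: int, gas_used: int, gas_target: int,
                  max_change_denominator: int = 8) -> int:
    """EIP-1559-style base fee update, in wei, using integer arithmetic."""
    if gas_used == gas_target:
        return base_fee
    delta = base_fee * abs(gas_used - gas_target) // gas_target // max_change_denominator
    if gas_used > gas_target:
        # Above target: the fee rises by at least 1 wei.
        return base_fee + max(delta, 1)
    # Below target: the fee falls.
    return base_fee - delta


if __name__ == "__main__":
    base_fee = 1_000_000_000   # 1 gwei (assumed starting point)
    demand = 20_000_000        # gas used per block (assumed constant demand)

    # Same demand, two capacity settings that governance could choose.
    print(next_base_fee(base_fee, demand, gas_target=15_000_000))
    print(next_base_fee(base_fee, demand, gas_target=10_000_000))
```

With identical demand, the lower target makes the base fee rise about three times faster per block, which is the lever the talk warns about.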

Potential solutions for market design manipulation :

- Transparent and participatory governance

- Align governance incentives with users

- Flag fee market changes as high stakes decisions requiring more governance process

Takeaways (12:55)

Rollups are layer 2 solutions built on top of layer 1 blockchains like Ethereum. They inherit the security of the underlying layer 1, but need to mitigate other risks like censorship on their own.

Rollups need to separate different roles/duties to remain secure and decentralized. For example, keeping the role of sequencer separate from governance.

There are multiple potential solutions to challenges like preventing censorship on rollups. Each solution has trade-offs between performance and security/decentralization.

Rollup operators need to balance these trade-offs when designing their architectures.

They have more flexibility than layer 1 chains since they can utilize governance and agreements in addition to technical decentralization.

Q&A

Do you see a role for a rollup's own token in these attacks that you described ? (15:00)

If a small group controls a large share of the governance token, they could potentially manipulate votes for their own benefit. This is called a "governance attack."

Proper decentralized governance is still an open research problem.

Besides governance attacks, could governance activities enable MEV extraction ? (16:20)

This is a valid consideration : High stakes governance votes could lead to "MEV markets" for votes. It's another direction where MEV issues could arise related to on-chain governance processes.

Transaction Ordering

The View from L2 (and L3)

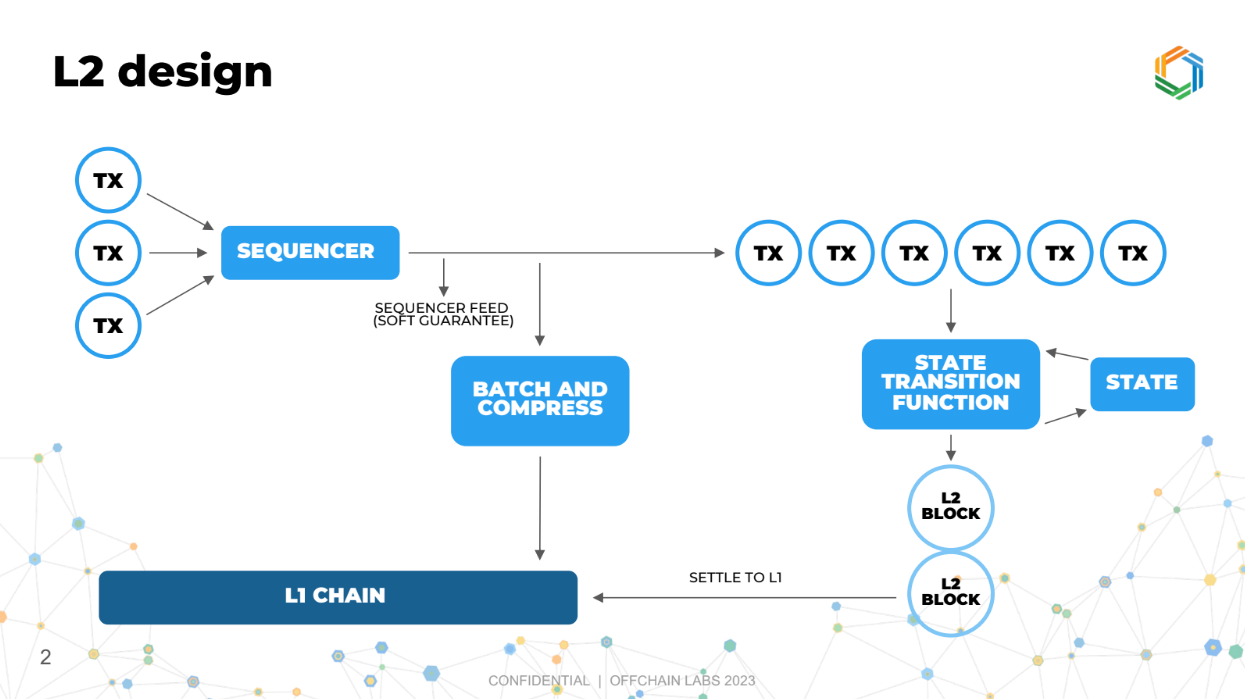

L2 Design (0:00)

The sequencer's job in a layer 2 system is to receive transactions from users and publish a canonical order of transactions. It does not execute or validate transactions.

Execution and state updates happen separately from sequencing in layer 2. The sequencer and execution logic are essentially two separate chains, one chain created by the sequencer to organize transactions, and another chain to process these transactions and update the blockchain

How L2 differs from L1 Ethereum (2:00)

On L2, sequencing (ordering of transactions) is separate from execution (processing of transactions), which allows faster processing. Arbitrum has 250 ms blocks, versus 12 seconds for Ethereum.

L2 typically has private transaction pools rather than a public pool. This provides more privacy.

Costs are lower on L2, and its design is more flexible than L1's because it is less mature and less widely used, hence easier to modify or evolve.

But there is an issue related to how transactions are ordered, which is crucial for ensuring fairness and correctness in the system. We won't think of this problem as solving MEV, but as transaction ordering optimization.

Transaction ordering goals (3:15)

- Low latency : Preserve fast block times.

- Low cost : Minimize operational costs.

- Resist front-running : Don't allow unfair trade execution.

- Capture "MEV" ethically : Monetize some of the arbitrage/efficiency opportunities.

- Avoid centralization : Design a decentralized system.

- Independence of irrelevant transactions : Unrelated transactions should have independent strategies.

Independence of irrelevant transactions (4:25)

Transactions seeking arbitrage opportunities should be able to act independently of each other, if they are unrelated.

This independence makes the system simpler and fairer, avoiding a complex, entangled scenario where everyone's actions affect everyone else.

In an entangled system, having private information could give some participants an unfair advantage, leading to a few big players dominating (monopolies or oligopolies).

We want to prevent this by ensuring transactions are independent of each other, making the system more open and competitive.

New ordering policy

Proposed Policy (6:20)

For transaction ordering, Ed proposes using a "frequent ordering auction"

It involves three key attributes :

- Fast : Orders transactions frequently, every fraction of a second, repeating the process over and over.

- Sealed bid auction : Participants submit confidential bids for their transactions.

- Priority gas auction : Bids are offers to pay extra per unit of gas used.

This approach is related to "frequent batch auctions" in economics literature, but called frequent ordering auction here to distinguish it. Frequent batch auctions were an inspiration for this proposed frequent ordering auction system.

Doing priority gas auctions rather than a single bid is important, as Ed will explain later.

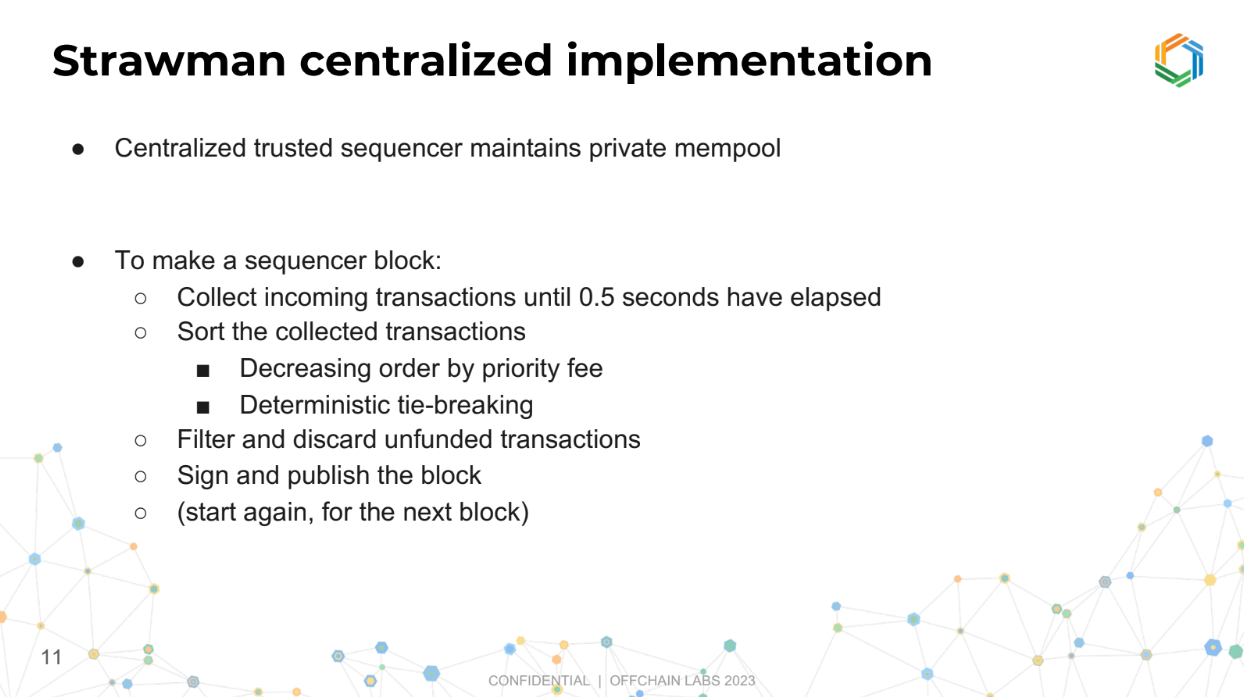

Strawman centralized implementation (7:45)

In this version, there's a single trusted entity called a sequencer that collects all incoming transactions over a short period (like 0.5 second).

Once the collection period is over, it organizes these transactions based on certain criteria (like who's willing to pay more for faster processing).

It also checks and removes any transactions from the list that are not funded properly (like if someone tries to make a transaction but doesn't have the money to cover it).

Then, it finalizes this organized list (called a block), makes it public, and starts the process over for the next set of transactions.
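The steps above can be sketched as a single function. This is a hypothetical illustration, not Arbitrum's implementation: the `Tx` fields, the funding check (balance must cover gas limit times bid) and the tie-break by transaction id are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tx:
    tx_id: str
    sender: str
    gas_limit: int
    priority_fee_per_gas: int  # sealed bid: extra payment offered per unit of gas


def build_block(pending, balances):
    """Order one collection window's transactions and drop unfunded ones."""
    # Highest bid first; ties broken by tx_id (a stand-in deterministic rule).
    ordered = sorted(pending, key=lambda tx: (-tx.priority_fee_per_gas, tx.tx_id))
    remaining = dict(balances)  # work on a copy, leave the caller's state untouched
    block = []
    for tx in ordered:
        cost = tx.gas_limit * tx.priority_fee_per_gas
        if remaining.get(tx.sender, 0) >= cost:
            remaining[tx.sender] = remaining.get(tx.sender, 0) - cost
            block.append(tx)
        # Otherwise the transaction is unfunded and is filtered out.
    return block


if __name__ == "__main__":
    window = [
        Tx("t1", "alice", 100_000, 5),
        Tx("t2", "bob", 100_000, 9),
        Tx("t3", "carol", 100_000, 7),   # carol has no funds
        Tx("t4", "dave", 100_000, 9),
    ]
    funds = {"alice": 1_000_000, "bob": 1_000_000, "carol": 0, "dave": 1_000_000}
    print([tx.tx_id for tx in build_block(window, funds)])
```

A real sequencer would call something like this once per collection period, publish the result, and start collecting again.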

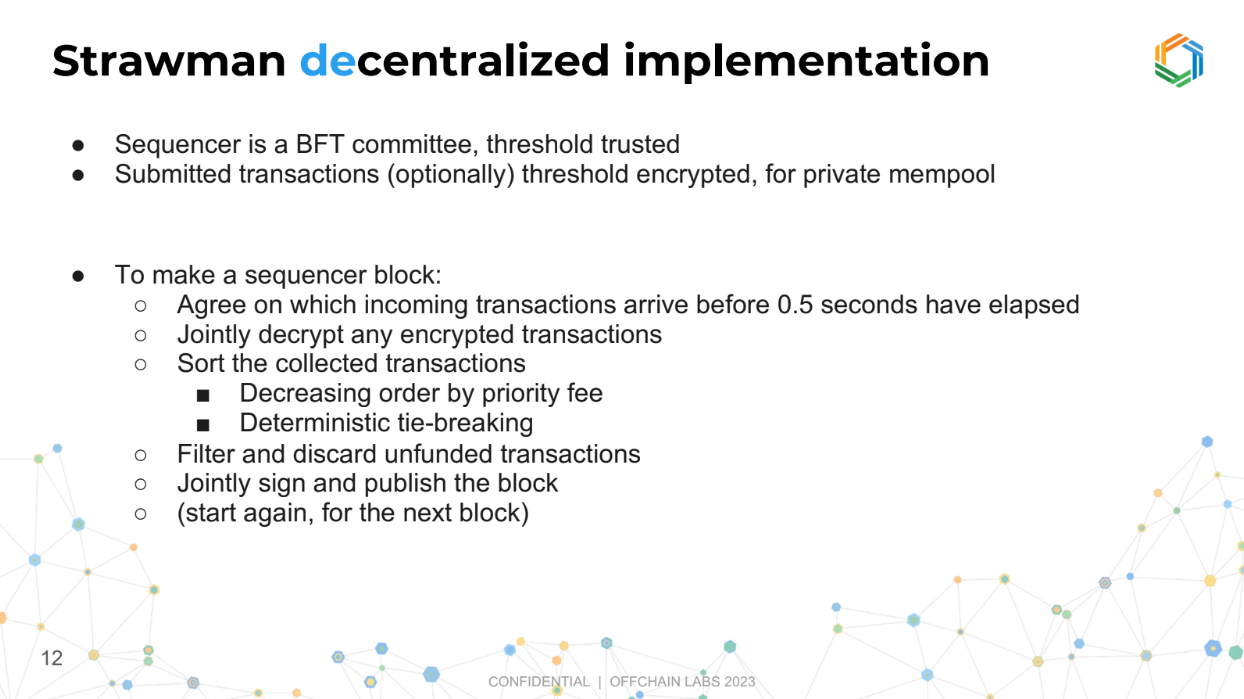

Strawman decentralized implementation (9:30)

Instead of a single trusted entity, a group of entities (a committee) works together to manage the transactions.

They collect all transactions, and once the collection period ends, they jointly organize and check them, similar to the centralized version.

This committee then collectively approves the organized block of transactions and makes it public before moving on to the next set.

Ultimately, governance will decide :

- Centralized for faster blocks and lower latency

- Decentralized for more trust but slower operation

Economics : examples (11:50)

Single arbitrage opportunity

Imagine there’s one special opportunity and everyone wants to grab it. Whoever gets their request in first, gets the opportunity.

It’s like an auction where everyone submits their bids secretly (sealed bid), but unlike regular auctions, everyone has to pay, not just the winner (all pay).

This method is known to be fair and efficient, and the strategies for participants are well understood.
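As a rough illustration of the all-pay property, the sketch below computes each bidder's payoff when the highest bidder takes the opportunity but every bidder pays. The bidder names, the prize value and the alphabetical tie-break are assumptions for the example.

```python
def all_pay_payoffs(bids, prize):
    """Payoff per bidder in a sealed-bid all-pay auction.

    bids: mapping of bidder name to the total amount paid.
    The highest bid wins the prize; ties go to the alphabetically first name.
    """
    winner = max(sorted(bids), key=lambda name: bids[name])
    return {
        name: (prize if name == winner else 0) - bid
        for name, bid in bids.items()
    }


if __name__ == "__main__":
    print(all_pay_payoffs({"a": 30, "b": 50, "c": 10}, prize=100))
```

Losing bidders end up with a negative payoff, which is what discourages the kind of free spam seen under FIFO ordering.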

Two independent arbitrage opportunities

There are two separate opportunities (A and B). If you're going for opportunity A, you don’t care about those going for opportunity B, as they don’t affect your chances.

Each opportunity has its own mini auction, making the process straightforward and independent, which they find desirable.

Because bids are per unit of gas, combining opportunities A and B in one transaction means paying for more total gas. This keeps the strategy easy to understand and follow: you focus only on the opportunity you're interested in, without worrying about others.
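A small sketch of this independence property, under the assumption that the block is simply sorted by bid per gas: whoever wins opportunity A is the same no matter how the B bidders bid. The tuple layout and the tie-break by id are hypothetical.

```python
def order_by_bid(txs):
    """txs: list of (tx_id, opportunity, bid_per_gas). Highest bid first, ties by tx_id."""
    return sorted(txs, key=lambda t: (-t[2], t[0]))


def winner(txs, opportunity):
    """Id of the first transaction in the ordered block that targets the opportunity."""
    return next(t[0] for t in order_by_bid(txs) if t[1] == opportunity)


if __name__ == "__main__":
    a_txs = [("a1", "A", 5), ("a2", "A", 8)]
    b_low = [("b1", "B", 1), ("b2", "B", 2)]
    b_high = [("b1", "B", 50), ("b2", "B", 90)]

    # The winner of A does not depend on what B bidders do.
    print(winner(a_txs + b_low, "A"), winner(a_txs + b_high, "A"))
```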

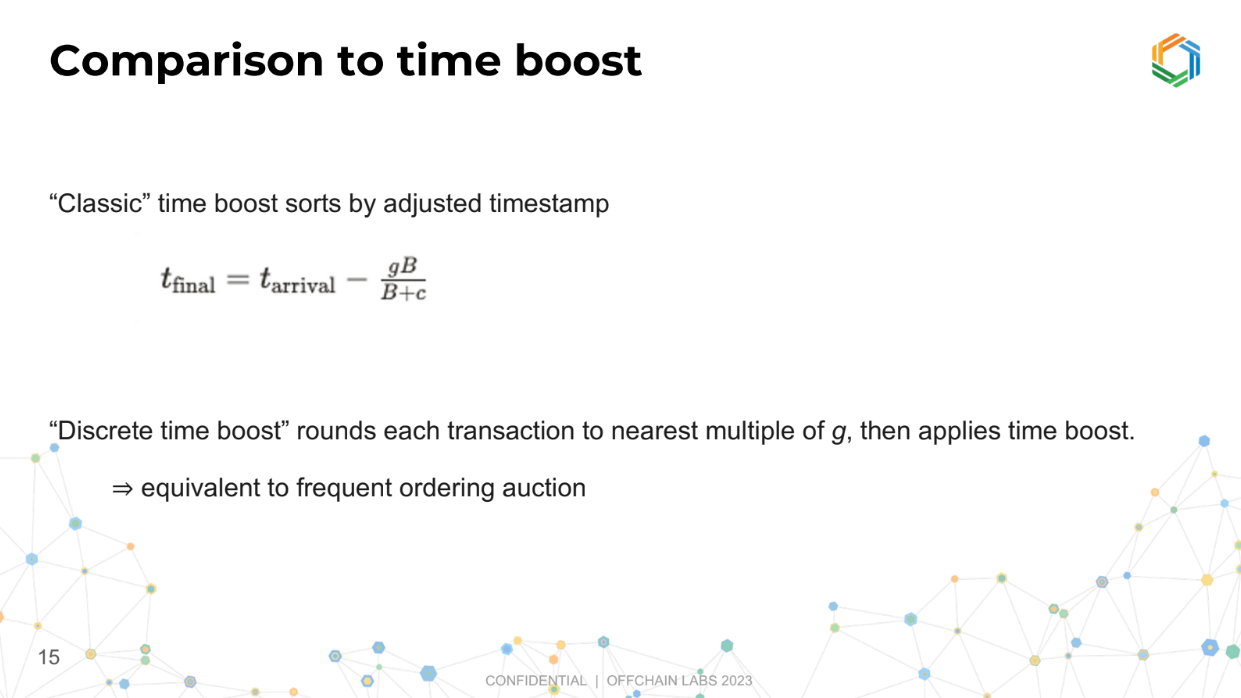

Comparison to Time Boost (14:30)

In Time Boost, the actual time a transaction arrives gets adjusted based on a formula. The formula considers your bid (how much you're willing to pay), a constant for normalization, and the maximum time advantage you can buy. Even if you bid a huge amount, the time advantage caps at a certain point.

A variation called Discrete Time Boost rounds the transaction's time to a fixed interval and then applies the same formula.
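One formula that matches this description is boost = g * b / (b + c), where b is the bid, c the normalization constant and g the maximum time advantage. Treat this exact form as an assumption, since the summary does not spell it out. The sketch below also assumes that Discrete Time Boost rounds the arrival time down to the start of its interval.

```python
import math


def time_boost(bid: float, g: float, c: float) -> float:
    """Time advantage bought by a bid; approaches but never reaches g."""
    return g * bid / (bid + c)


def boosted_time(arrival: float, bid: float, g: float, c: float) -> float:
    """Adjusted timestamp used for ordering (earlier is better)."""
    return arrival - time_boost(bid, g, c)


def discrete_boosted_time(arrival: float, bid: float, g: float, c: float,
                          interval: float) -> float:
    """Round the arrival time down to its interval, then apply the same boost."""
    rounded = math.floor(arrival / interval) * interval
    return rounded - time_boost(bid, g, c)


if __name__ == "__main__":
    g, c = 0.5, 1.0  # assumed: 0.5 s maximum advantage, normalization constant 1
    for bid in (0.0, 1.0, 10.0, 1e12):
        print(bid, time_boost(bid, g, c))
```

Under these assumptions the advantage grows quickly for small bids and flattens out near g, so even a huge bid cannot buy more than the cap.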

We can think of the frequent ordering auction as a frequent batch auction (grouping transactions together for processing) applied to blockchain, or a modified version of Time Boost. This is up to us.

FOA with bundles (16:25)

This is a new idea about allowing people to submit bundles of transactions together. The sequencer (the entity organizing transactions) should accept these bundles.

Guarantees for bundles :

- All transactions in a bundle will be in the same sequencer block.

- Transactions in a bundle with the same bid will be processed consecutively in the order they are in the bundle.

This just requires a tweak to the deterministic tiebreak rules to make sure this happens, but they believe it's a straightforward adjustment.
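One way such a tie-break tweak could look, as a sketch only: transactions are sorted by bid, and equal-bid ties are broken by a group key so that a bundle's transactions stay adjacent and in bundle order. The `bundle_id` and `bundle_index` fields and the key prefixes are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Tx:
    tx_id: str
    bid: int                         # priority fee per unit of gas
    bundle_id: Optional[str] = None  # None for a standalone transaction
    bundle_index: int = 0            # position inside the bundle


def sort_key(tx: Tx):
    # Prefixes keep bundle ids and transaction ids in separate namespaces,
    # so a standalone transaction can never land inside a bundle on a tie.
    group = f"b:{tx.bundle_id}" if tx.bundle_id is not None else f"t:{tx.tx_id}"
    return (-tx.bid, group, tx.bundle_index)


def order_block(txs):
    """Highest bid first; equal-bid bundle members stay consecutive and in order."""
    return sorted(txs, key=sort_key)


if __name__ == "__main__":
    txs = [
        Tx("t1", 5),
        Tx("s2", 7, bundle_id="bun", bundle_index=1),
        Tx("t3", 7),
        Tx("s1", 7, bundle_id="bun", bundle_index=0),
        Tx("t0", 9),
    ]
    print([tx.tx_id for tx in order_block(txs)])
```

The first guarantee (same sequencer block) is assumed to hold because a bundle is submitted, and therefore collected, as a unit.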

Implementation notes (17:25)

The L2 chain should collect the extra fees (priority fees) from transactions since it's already equipped to do so. This way, there's no need to create a new system to handle these fees.

But currently Arbitrum doesn't collect priority fees for backwards compatibility. This was done because many people were accidentally submitting transactions with high priority fees which didn't provide any benefit, so to keep things simple, they ignored these fees.

The solution is to create a new transaction type that is otherwise identical but collects priority fees. It's also important to support bundles (groups of transactions processed together), and the team plans to include this feature.

Q&A

Transaction fees don't go to the rollup operator, but to a governance treasury. Doesn't this incentivize the operator to take off-chain payments to influence transaction order and profit (called "frontrunning") ? (20:00)

There are two ways the sequencer (rollup operator) could extract profits :

- Censoring transactions to delay them

- Injecting their own frontrunning transactions

For a centralized sequencer, governance relies on trust they won't frontrun. If suspected, they'd be fired.

For a decentralized sequencer committee, frontrunning is harder but could happen via collusion. Threshold encryption of transactions before ordering helps prevent this.

This ordering scheme resembles a sealed-bid auction, though transactions can still be gossiped before sequencing (22:45)

Ed agrees. For decentralized sequencers, gossip is reasonable but frontrunning is still a concern. Threshold encryption helps address this.

How does the encryption scheme work to prevent frontrunning ? (24:20)

- The committee agrees on a set of encrypted transactions in a time window.

- The committee publicly commits to this set.

- Then privately, the committee decrypts and sorts/filters transactions.

- Finally, they jointly sign the sorted transactions.

This prevents frontrunning because the contents stay encrypted until after the committee commits to the order.
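The commit-then-decrypt flow above can be sketched with a toy model. This is not real threshold encryption: it uses an n-of-n XOR key split and a SHA-256 keystream as stand-ins, and every name here (`submit`, `run_round`, the `id` and `bid` fields) is hypothetical. It only illustrates the order of the steps.

```python
import hashlib
import json
import secrets


def xor_bytes(a: bytes, b: bytes) -> bytes:
    return bytes(x ^ y for x, y in zip(a, b))


def keystream_xor(key: bytes, data: bytes) -> bytes:
    """Toy cipher: XOR data with a SHA-256-derived keystream. Not for real use."""
    stream = bytearray()
    counter = 0
    while len(stream) < len(data):
        stream.extend(hashlib.sha256(key + counter.to_bytes(8, "big")).digest())
        counter += 1
    return xor_bytes(data, bytes(stream))


def split_key(key: bytes, n: int):
    """n-of-n XOR sharing: every committee member's share is needed to rebuild the key."""
    shares = [secrets.token_bytes(len(key)) for _ in range(n - 1)]
    last = key
    for share in shares:
        last = xor_bytes(last, share)
    return shares + [last]


def combine(shares):
    key = bytes(len(shares[0]))
    for share in shares:
        key = xor_bytes(key, share)
    return key


def submit(tx: dict, n: int):
    """User side: encrypt the transaction and hand one key share to each member."""
    key = secrets.token_bytes(32)
    ciphertext = keystream_xor(key, json.dumps(tx, sort_keys=True).encode())
    return ciphertext, split_key(key, n)


def commit(ciphertexts) -> str:
    """Public commitment to the set of encrypted transactions (order-independent)."""
    h = hashlib.sha256()
    for ct in sorted(ciphertexts):
        h.update(hashlib.sha256(ct).digest())
    return h.hexdigest()


def run_round(submissions):
    # Steps 1-2: agree on the encrypted set and commit to it publicly.
    commitment = commit([ct for ct, _ in submissions])
    # Step 3: only after committing, pool the shares, decrypt, and sort by bid.
    txs = [json.loads(keystream_xor(combine(shares), ct)) for ct, shares in submissions]
    ordered = sorted(txs, key=lambda tx: (-tx["bid"], tx["id"]))
    # Step 4 (joint signature over the ordered list) is omitted in this sketch.
    return commitment, ordered


if __name__ == "__main__":
    subs = [submit({"id": "t1", "bid": 3}, 4), submit({"id": "t2", "bid": 9}, 4)]
    commitment, ordered = run_round(subs)
    print(commitment, [tx["id"] for tx in ordered])
```

A production design would use a proper threshold scheme, so that a quorum rather than the full committee can decrypt, and would sign the final ordering.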

But doesn't this require publishing all transactions for verification ? (26:00)

Ed disagrees. The committee knows the transactions, but outsiders don't see the contents. Some schemes allow later proving if a valid transaction was wrongly discarded.

What are the potential approaches for decentralized sequencing in rollups ? (27:00)

Both centralized and decentralized versions will be built. Users can choose which one to submit to. Whole chains decide which model to use based on governance.

Transitioning from centralized to decentralized sequencer requires paying the decentralized committee to incentivize proper behavior. The decentralized committee would be known, trusted entities to disincentivize bad behavior.

Custom ordering rules are possible for app chains if needed

Intro

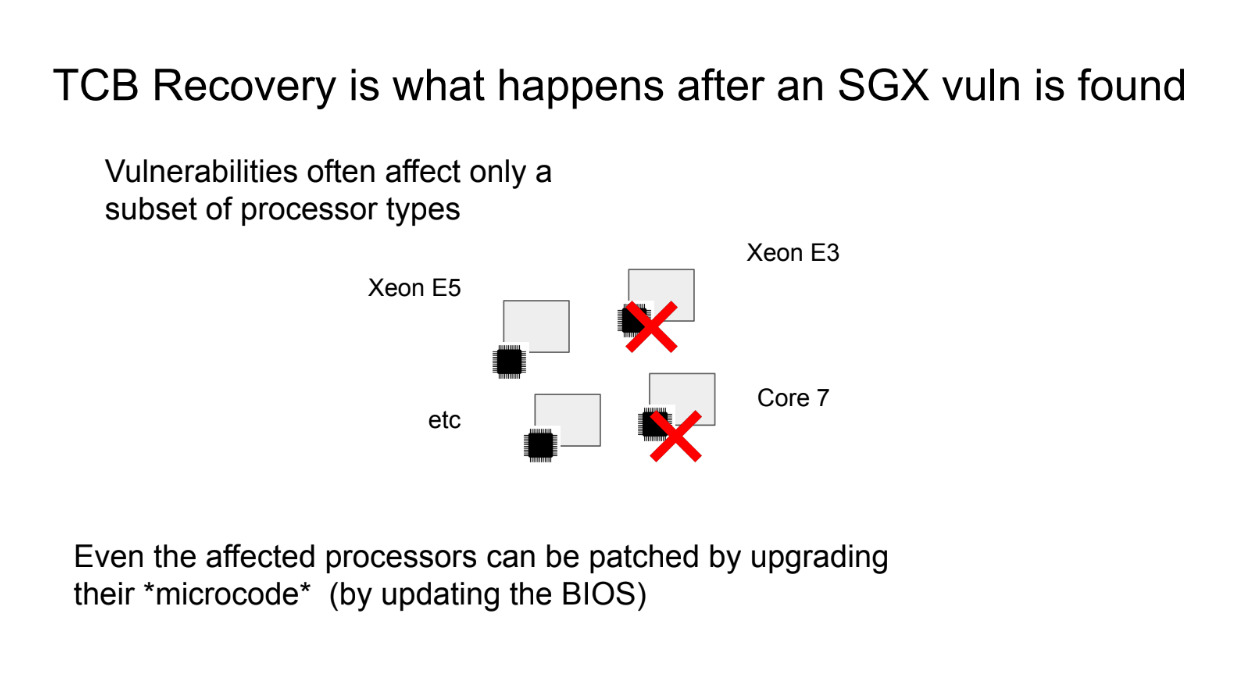

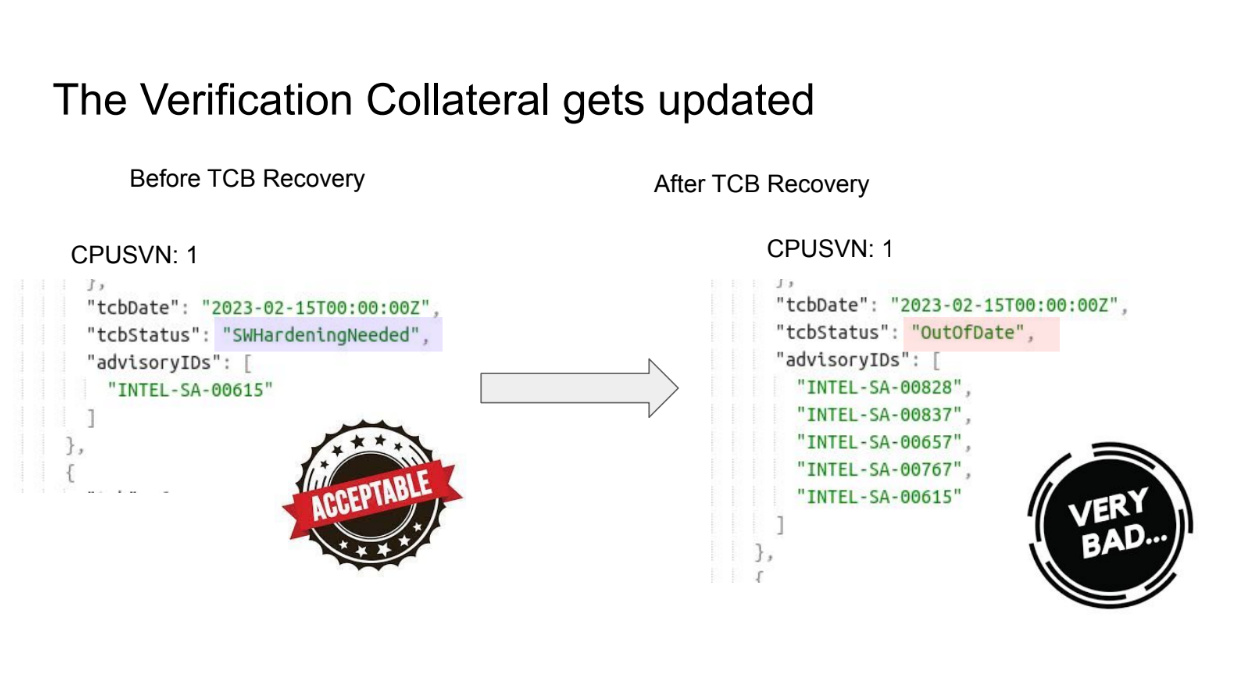

TCB stands for "Trusted Computing Base." It refers to the parts of a secure system that are responsible for maintaining security. Recovery means restoring security after a failure or vulnerability.

So TCB Recovery is the process of restoring the security of a system after its TCB has been compromised in some way.

SGX (Software Guard Extensions) provides trusted hardware enclaves on Intel CPUs. They allow sensitive computations to be executed in a secure environment isolated from the main operating system.

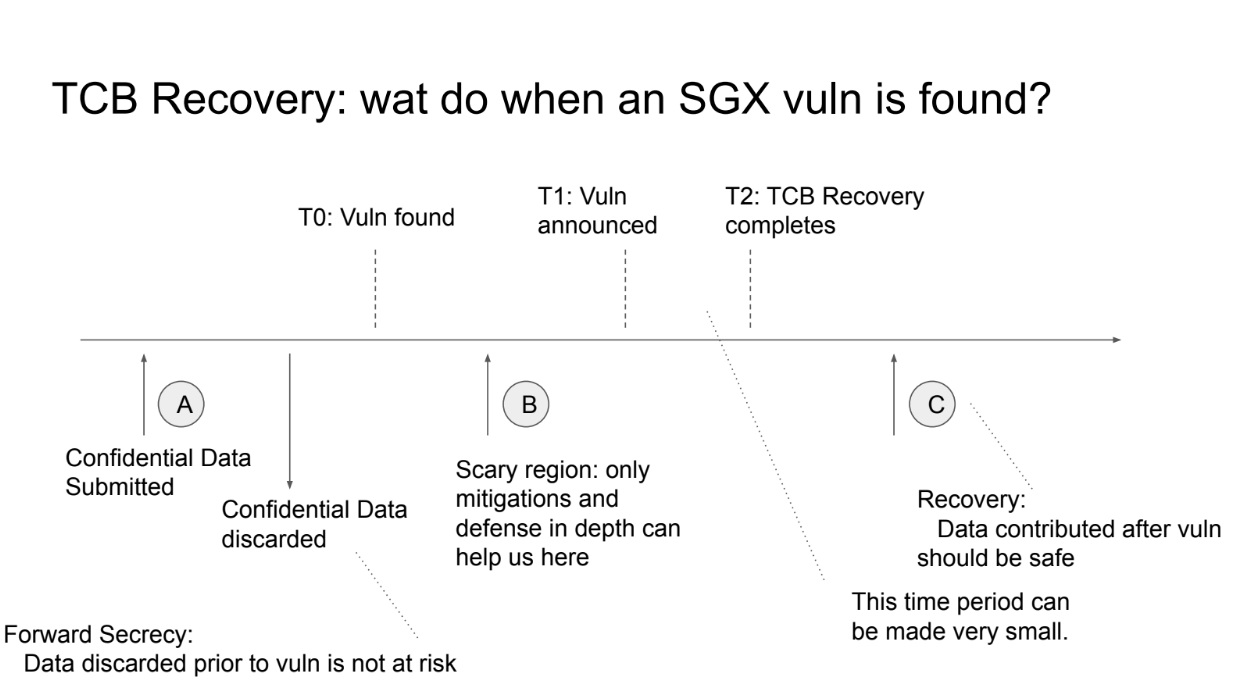

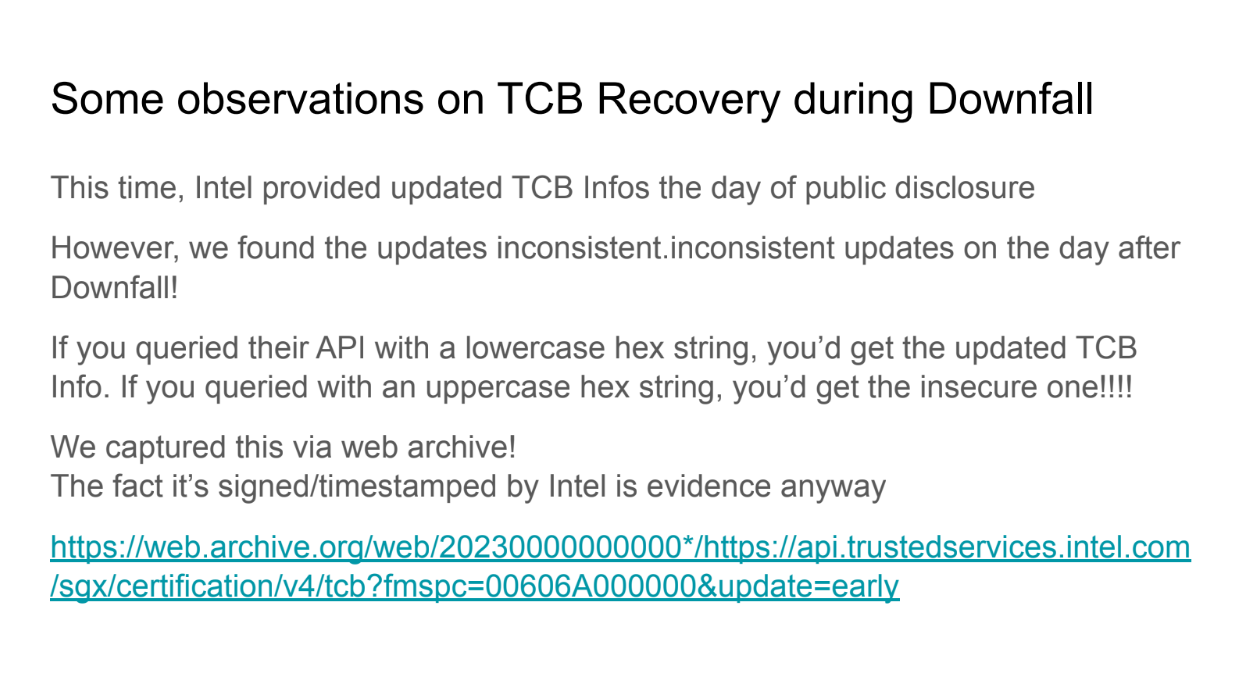

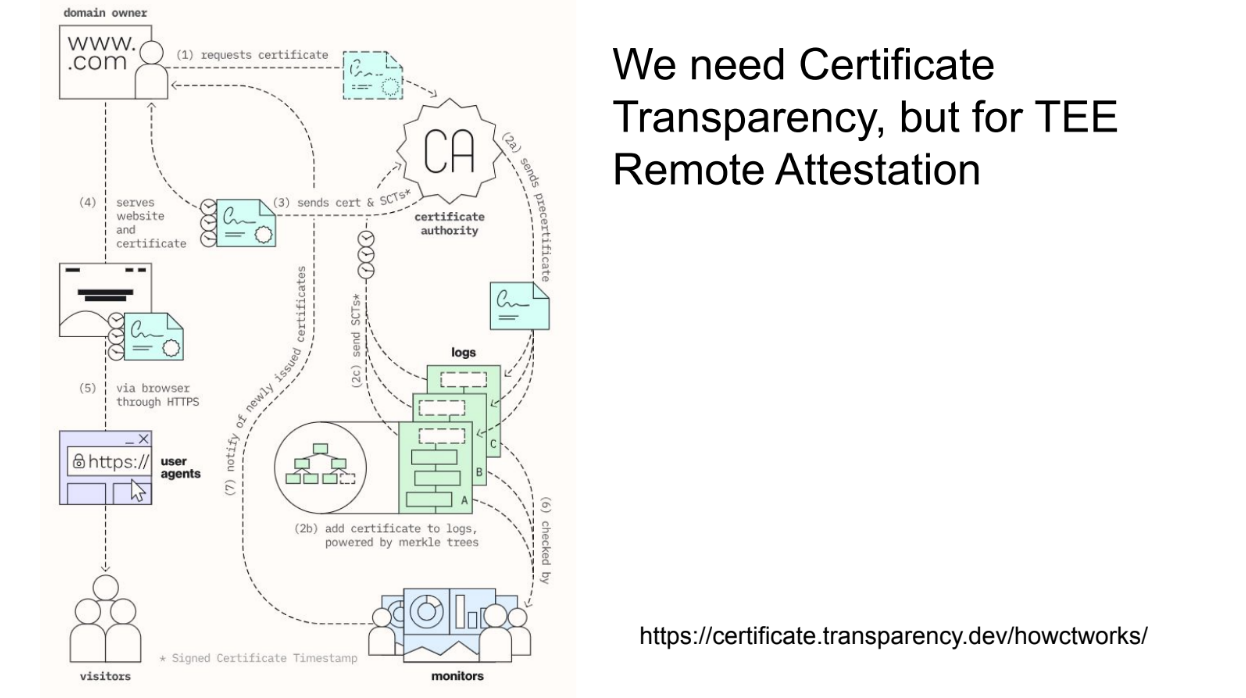

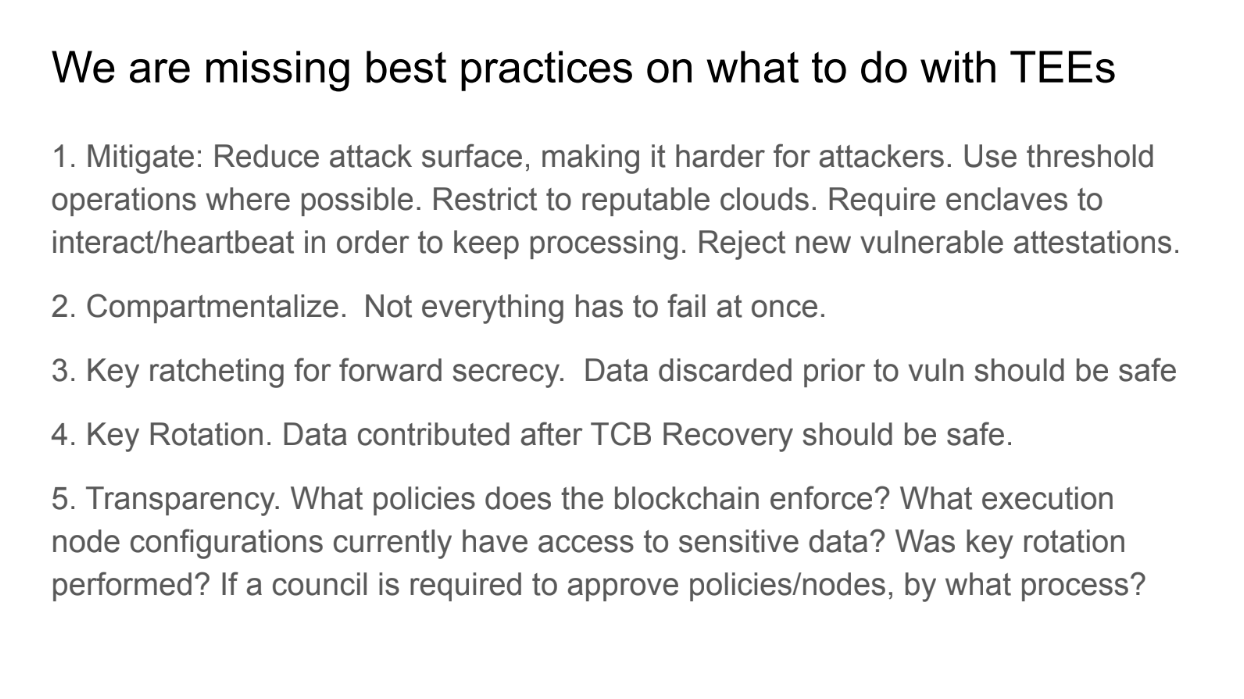

But SGX has had some recent vulnerabilities, like the Downfall attack. These issues motivate a discussion of how TCB Recovery applies in this context.